diff --git a/unit4/unit4.ipynb b/unit4/unit4.ipynb

deleted file mode 100644

index 9232ad6..0000000

--- a/unit4/unit4.ipynb

+++ /dev/null

@@ -1,569 +0,0 @@

-{

- "cells": [

- {

- "cell_type": "markdown",

- "metadata": {

- "id": "view-in-github",

- "colab_type": "text"

- },

- "source": [

- " "

- ]

- },

- {

- "cell_type": "markdown",

- "metadata": {

- "id": "2D3NL_e4crQv"

- },

- "source": [

- "# DEPRECATED NOTEBOOK: THE NEW VERSION OF THIS UNIT IS HERE: https://huggingface.co/deep-rl-course/unit5/introduction",

- "\n",

- "**Everything below is deprecated** 👇. The new version of the course is here: https://huggingface.co/deep-rl-course/unit5/introduction",

- "\n",

- "\n",

- "# Unit 4: Let's learn about Unity ML-Agents with Hugging Face 🤗\n",

- "\n"

- ]

- },

- {

- "cell_type": "markdown",

- "metadata": {

- "id": "J6Jgc0L7Ujx6"

- },

- "source": [

- ""

- ]

- },

- {

- "cell_type": "markdown",

- "source": [

- "In this notebook, you'll learn about ML-Agents and use one of the pre-made environments: Pyramids. \n",

- "\n",

- "In this environment, we’ll train an agent that needs to press a button to spawn a pyramid, then navigate to the pyramid, knock it over, and move to the gold brick at the top.\n",

- "\n",

- "❓ If you have questions, please post them in the #study-group-unit1 Discord channel 👉 https://discord.gg/aYka4Yhff9\n",

- "\n",

- "🎮 Environments: \n",

- "- [Pyramids](https://github.com/Unity-Technologies/ml-agents/blob/main/docs/Learning-Environment-Examples.md#pyramids)\n",

- "\n",

- "\n",

- "⬇️ Here is an example of what **you will achieve at the end of this notebook.** ⬇️"

- ],

- "metadata": {

- "id": "FMYrDriDujzX"

- }

- },

- {

- "cell_type": "markdown",

- "source": [

- ""

- ],

- "metadata": {

- "id": "cBmFlh8suma-"

- }

- },

- {

- "cell_type": "markdown",

- "source": [

- "## This notebook is from the Deep Reinforcement Learning Class\n",

- "\n",

- "This notebook was written by [Abid Ali Awan alias kingabzpro](https://github.com/kingabzpro) 🤗\n",

- "\n",

- ""

- ],

- "metadata": {

- "id": "mUlVrqnBv2o1"

- }

- },

- {

- "cell_type": "markdown",

- "source": [

- "In this free course, you will:\n",

- "\n",

- "- 📖 Study Deep Reinforcement Learning in **theory and practice**.\n",

- "- 🧑💻 Learn to **use famous Deep RL libraries** such as Stable Baselines3, RL Baselines3 Zoo, and RLlib.\n",

- "- 🤖 Train **agents in unique environments** \n",

- "\n",

- "And more! Check the 📚 syllabus 👉 https://github.com/huggingface/deep-rl-class\n",

- "\n",

- "The best way to keep in touch is to join our Discord server to exchange with the community and with us 👉🏻 https://discord.gg/aYka4Yhff9"

- ],

- "metadata": {

- "id": "pAMjaQpHwB_s"

- }

- },

- {

- "cell_type": "markdown",

- "source": [

- "## Prerequisites 🏗️\n",

- "To dive into this notebook, **you need to read the tutorial in parallel** 👉 https://link.medium.com/KOpvPdyz4qb\n"

- ],

- "metadata": {

- "id": "6r7Hl0uywFSO"

- }

- },

- {

- "cell_type": "markdown",

- "metadata": {

- "id": "an3ByrXYQ4iK"

- },

- "source": [

- "### Step 1: Clone the repository and install the dependencies 🔽\n",

- "- We need to clone the repository, which **contains the experimental version of the library that allows you to push your trained agent to the Hub.**"

- ]

- },

- {

- "cell_type": "code",

- "execution_count": null,

- "metadata": {

- "id": "6WNoL04M7rTa"

- },

- "outputs": [],

- "source": [

- "%%capture\n",

- "# Clone the repository (this can take about 1 min)\n",

- "!git clone https://github.com/huggingface/ml-agents/"

- ]

- },

- {

- "cell_type": "code",

- "execution_count": null,

- "metadata": {

- "id": "d8wmVcMk7xKo"

- },

- "outputs": [],

- "source": [

- "%%capture\n",

- "# Go inside the repository and install the package\n",

- "%cd ml-agents\n",

- "!pip3 install -e ./ml-agents-envs\n",

- "!pip3 install -e ./ml-agents"

- ]

- },
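Before moving on, you may want to confirm that the editable install put both command-line tools used later in this notebook (`mlagents-learn` and `mlagents-push-to-hf`) on your `PATH`. Below is a minimal sketch using only the standard library; the helper names `find_cli_tools` and `missing_tools` are hypothetical, not part of ML-Agents.

```python
import shutil
from typing import Dict, Iterable, List

# Hypothetical list: the two CLI entry points this notebook relies on.
REQUIRED_TOOLS = ("mlagents-learn", "mlagents-push-to-hf")


def find_cli_tools(names: Iterable[str] = REQUIRED_TOOLS) -> Dict[str, bool]:
    """Map each command name to whether it can be found on PATH."""
    return {name: shutil.which(name) is not None for name in names}


def missing_tools(names: Iterable[str] = REQUIRED_TOOLS) -> List[str]:
    """Return the commands that are not on PATH (an empty list means all were found)."""
    return [name for name, found in find_cli_tools(names).items() if not found]
```

In a notebook cell, `print(missing_tools())` should print an empty list if both tools were installed correctly.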

- {

- "cell_type": "markdown",

- "metadata": {

- "id": "HRY5ufKUKfhI"

- },

- "source": [

- "### Step 2: Download the environment zip file and move it into `./trained-envs-executables/linux/`\n",

- "- Our environment executable is in a zip file.\n",

- "- We need to download it and place it in `./trained-envs-executables/linux/`"

- ]

- },

- {

- "cell_type": "code",

- "execution_count": null,

- "metadata": {

- "id": "C9Ls6_6eOKiA"

- },

- "outputs": [],

- "source": [

- "# -p also creates the parent directory and does not fail if the folders already exist\n",

- "!mkdir -p ./trained-envs-executables/linux"

- ]

- },

- {

- "cell_type": "markdown",

- "metadata": {

- "id": "jsoZGxr1MIXY"

- },

- "source": [

- "Download the file Pyramids.zip from https://drive.google.com/uc?export=download&id=1UiFNdKlsH0NTu32xV-giYUEVKV4-vc7H using `wget`. For the full explanation of how to download large files from Google Drive with `wget`, see [here](https://bcrf.biochem.wisc.edu/2021/02/05/download-google-drive-files-using-wget/)."

- ]

- },

- {

- "cell_type": "code",

- "execution_count": null,

- "metadata": {

- "id": "QU6gi8CmWhnA"

- },

- "outputs": [],

- "source": [

- "!wget --load-cookies /tmp/cookies.txt \"https://docs.google.com/uc?export=download&confirm=$(wget --quiet --save-cookies /tmp/cookies.txt --keep-session-cookies --no-check-certificate 'https://docs.google.com/uc?export=download&id=1UiFNdKlsH0NTu32xV-giYUEVKV4-vc7H' -O- | sed -rn 's/.*confirm=([0-9A-Za-z_]+).*/\\1\\n/p')&id=1UiFNdKlsH0NTu32xV-giYUEVKV4-vc7H\" -O ./trained-envs-executables/linux/Pyramids.zip && rm -rf /tmp/cookies.txt"

- ]

- },

- {

- "cell_type": "markdown",

- "metadata": {

- "id": "5yIV74OPOa1i"

- },

- "source": [

- "**OR** download the file directly to your local machine, then drag and drop it into `./trained-envs-executables/linux`"

- ]

- },

- {

- "cell_type": "markdown",

- "metadata": {

- "id": "H7JmgOwcSSmF"

- },

- "source": [

- "Wait for the upload to finish and then run the command below. \n",

- "\n",

- ""

- ]

- },

- {

- "cell_type": "code",

- "execution_count": null,

- "metadata": {

- "id": "8FPx0an9IAwO"

- },

- "outputs": [],

- "source": [

- "%%capture\n",

- "!unzip -d ./trained-envs-executables/linux/ ./trained-envs-executables/linux/Pyramids.zip"

- ]

- },

- {

- "cell_type": "markdown",

- "metadata": {

- "id": "nyumV5XfPKzu"

- },

- "source": [

- "Make sure the environment executable is accessible by giving it execute permissions:"

- ]

- },

- {

- "cell_type": "code",

- "execution_count": null,

- "metadata": {

- "id": "EdFsLJ11JvQf"

- },

- "outputs": [],

- "source": [

- "!chmod -R 755 ./trained-envs-executables/linux/Pyramids/Pyramids"

- ]

- },
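If you prefer to do the extraction and permission steps from Python rather than with `unzip` and `chmod`, here is a minimal standard-library sketch. It assumes the archive layout used above (the executable ends up at `Pyramids/Pyramids` inside the target directory) and only sets permissions on that one file; `extract_and_make_executable` is a hypothetical helper name.

```python
import os
import zipfile


def extract_and_make_executable(zip_path: str, target_dir: str, executable_relpath: str) -> str:
    """Extract `zip_path` into `target_dir`, then chmod 755 one file inside it.

    Hypothetical helper mirroring the `unzip` and `chmod` cells above.
    Returns the absolute path of the executable.
    """
    os.makedirs(target_dir, exist_ok=True)
    with zipfile.ZipFile(zip_path) as archive:
        # ZipFile.extractall does not restore Unix permission bits,
        # so the extracted binary is not executable yet.
        archive.extractall(target_dir)

    exe_path = os.path.abspath(os.path.join(target_dir, executable_relpath))
    if not os.path.isfile(exe_path):
        raise FileNotFoundError(f"expected executable at {exe_path}")
    # Same effect as `chmod 755` on this file: rwx for owner, r-x for group and others.
    os.chmod(exe_path, 0o755)
    return exe_path
```

For the layout in this notebook, the call would be `extract_and_make_executable("./trained-envs-executables/linux/Pyramids.zip", "./trained-envs-executables/linux/", "Pyramids/Pyramids")`.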

- {

- "cell_type": "markdown",

- "source": [

- "### Step 3: Modify the PyramidsRND config file\n",

- "- In ML-Agents, you define the **training hyperparameters in config.yaml files.**\n",

- "\n",

- "For this first training, we’ll modify one thing:\n",

- "- The total training steps hyperparameter is too high, since we can hit the benchmark in only 1M training steps.\n",

- "\n",

- "👉 To do that, we go to `config/ppo/PyramidsRND.yaml` **and set `max_steps` to 1000000.**"

- ],

- "metadata": {

- "id": "NAuEq32Mwvtz"

- }

- },

- {

- "cell_type": "markdown",

- "source": [

- "- Click here to open the config.yaml: /content/ml-agents/config/ppo/PyramidsRND.yaml"

- ],

- "metadata": {

- "id": "r9wv5NYGw-05"

- }

- },

- {

- "cell_type": "markdown",

- "source": [

- "- Set `max_steps` to 1,000,000"

- ],

- "metadata": {

- "id": "ob_crJkhygQz"

- }

- },

- {

- "cell_type": "markdown",

- "source": [

- ""

- ],

- "metadata": {

- "id": "LY7GI7QpymSu"

- }

- },
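Instead of editing the file by hand, you could patch `max_steps` programmatically. The sketch below uses a plain regular expression rather than a YAML parser, so it needs only the standard library and leaves the rest of the file untouched; it assumes every uncommented `max_steps:` key in the file should get the same value. The helpers `set_max_steps` and `patch_config_file` are hypothetical.

```python
import re
from pathlib import Path

# Matches lines like "    max_steps: 5000000" (indentation + key captured in group 1).
# Lines where max_steps is commented out ("# max_steps: ...") are not matched.
MAX_STEPS_RE = re.compile(r"^([ \t]*max_steps:[ \t]*)\S+", re.MULTILINE)


def set_max_steps(yaml_text: str, new_value: int) -> str:
    """Return `yaml_text` with every `max_steps:` value replaced by `new_value`."""
    patched, count = MAX_STEPS_RE.subn(lambda m: f"{m.group(1)}{new_value}", yaml_text)
    if count == 0:
        raise ValueError("no max_steps key found in config")
    return patched


def patch_config_file(path: str, new_value: int = 1_000_000) -> None:
    """Rewrite the config file at `path` in place with the new max_steps value."""
    config = Path(path)
    config.write_text(set_max_steps(config.read_text(), new_value))
```

On Colab, this would be `patch_config_file("/content/ml-agents/config/ppo/PyramidsRND.yaml")`. A YAML library would be more robust for deeper changes, but it would typically drop comments when rewriting the file.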

- {

- "cell_type": "markdown",

- "source": [

- "As an experiment, you should also try modifying some other hyperparameters. Unity provides very [good documentation explaining each of them here](https://github.com/Unity-Technologies/ml-agents/blob/main/docs/Training-Configuration-File.md).\n",

- "\n",

- "We’re now ready to train our agent 🔥."

- ],

- "metadata": {

- "id": "JJJdo_5AyoGo"

- }

- },

- {

- "cell_type": "markdown",

- "metadata": {

- "id": "f9fI555bO12v"

- },

- "source": [

- "### Step 4: Train our agent\n",

- "\n",

- "To train our agent, we just need to **launch mlagents-learn and select the executable containing the environment.**\n",

- "\n",

- "We define four parameters:\n",

- "\n",

- "1. `mlagents-learn`: followed by the path to the hyperparameter config file.\n",

- "2. `--env`: the path to the environment executable.\n",

- "3. `--run-id`: the name you want to give to your training run.\n",

- "4. `--no-graphics`: don't launch the visualization during training.\n",

- "\n",

- "Train the model, and use the `--resume` flag to continue training in case of interruption.\n",

- "\n",

- "> It will fail the first time you use `--resume`; try running the cell again to bypass the error.\n",

- "\n"

- ]

- },

- {

- "cell_type": "markdown",

- "source": [

- "The training will take 30 to 45 min depending on your machine. Go take a ☕️, you deserve it 🤗."

- ],

- "metadata": {

- "id": "lN32oWF8zPjs"

- }

- },

- {

- "cell_type": "code",

- "execution_count": null,

- "metadata": {

- "id": "bS-Yh1UdHfzy"

- },

- "outputs": [],

- "source": [

- "!mlagents-learn ./config/ppo/PyramidsRND.yaml --env=./trained-envs-executables/linux/Pyramids/Pyramids --run-id=\"Pyramids Training\" --no-graphics"

- ]

- },
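If you want to launch the same training from a Python script instead of the notebook's `!` shell escape, here is a sketch with `subprocess`. It builds the argument list from the flags described above; passing a list rather than one string means the run ID `Pyramids Training`, which contains a space, needs no shell quoting. `build_train_command` and `train` are hypothetical helpers, not an ML-Agents API.

```python
import subprocess
from typing import List


def build_train_command(config: str, env: str, run_id: str,
                        no_graphics: bool = True, resume: bool = False) -> List[str]:
    """Assemble the mlagents-learn invocation used in this notebook."""
    cmd = ["mlagents-learn", config, f"--env={env}", f"--run-id={run_id}"]
    if no_graphics:
        cmd.append("--no-graphics")  # don't launch the visualization during training
    if resume:
        cmd.append("--resume")  # continue an interrupted run with the same run ID
    return cmd


def train(cmd: List[str]) -> int:
    """Run the command and return its exit code (non-zero means the run failed)."""
    return subprocess.run(cmd).returncode
```

For example, `train(build_train_command("./config/ppo/PyramidsRND.yaml", "./trained-envs-executables/linux/Pyramids/Pyramids", "Pyramids Training", resume=True))` would resume an interrupted run; given the note above about `--resume` failing the first time, you may need to run it twice.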

- {

- "cell_type": "markdown",

- "metadata": {

- "id": "5Vue94AzPy1t"

- },

- "source": [

- "### Step 5: Push the agent to the 🤗 Hub\n",

- "- Now that we've trained our agent, we’re **ready to push it to the Hub and see it play online 🔥.**"

- ]

- },

- {

- "cell_type": "markdown",

- "source": [

- "To be able to share your model with the community, there are three more steps to follow:\n",

- "\n",

- "1️⃣ (If it's not already done) create an account on HF ➡ https://huggingface.co/join\n",

- "\n",

- "2️⃣ Sign in, then get your authentication token from the Hugging Face website.\n",

- "- Create a new token (https://huggingface.co/settings/tokens) **with write role**\n",

- "\n",

- "3️⃣ Copy the token, run the cell below, and paste the token when prompted."

- ],

- "metadata": {

- "id": "izT6FpgNzZ6R"

- }

- },

- {

- "cell_type": "markdown",

- "source": [

- ""

- ],

- "metadata": {

- "id": "ynDmQo5TzdBq"

- }

- },

- {

- "cell_type": "code",

- "execution_count": null,

- "metadata": {

- "id": "rKt2vsYoK56o"

- },

- "outputs": [],

- "source": [

- "from huggingface_hub import notebook_login\n",

- "notebook_login()"

- ]

- },

- {

- "cell_type": "markdown",

- "source": [

- "If you're not using Google Colab or a Jupyter Notebook, use this command instead: `huggingface-cli login`"

- ],

- "metadata": {

- "id": "ew59mK19zjtN"

- }

- },

- {

- "cell_type": "markdown",

- "metadata": {

- "id": "Xi0y_VASRzJU"

- },

- "source": [

- "Then, we simply need to run `mlagents-push-to-hf`.\n",

- "\n"

- ]

- },

- {

- "cell_type": "markdown",

- "source": [

- ""

- ],

- "metadata": {

- "id": "NtuBFDHGzuG_"

- }

- },

- {

- "cell_type": "markdown",

- "source": [

- "We define four parameters:\n",

- "\n",

- "1. `--run-id`: the name of the training run.\n",

- "2. `--local-dir`: where the agent was saved, it’s results/, so in this case `./results/Pyramids Training`.\n",

- "3. `--repo-id`: the name of the Hugging Face repo you want to create or update. It’s always /\n",

- "If the repo does not exist, **it will be created automatically**.\n",

- "4. `--commit-message`: since HF repos are git repositories, you need to define a commit message."

- ],

- "metadata": {

- "id": "KK4fPfnczunT"

- }

- },

- {

- "cell_type": "code",

- "execution_count": null,

- "metadata": {

- "id": "dGEFAIboLVc6"

- },

- "outputs": [],

- "source": [

- "# Replace the --repo-id value with your own Hugging Face username and repository name\n",

- "!mlagents-push-to-hf --run-id=\"Pyramids Training\" --local-dir=\"./results/Pyramids Training\" --repo-id=\"ThomasSimonini/testpyramidsrnd\" --commit-message=\"First Pyramids\""

- ]

- },
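If you prefer to run the upload from a Python script, this sketch assembles the `mlagents-push-to-hf` arguments and derives `--local-dir` from the run ID. It assumes, as in the command above, that `mlagents-learn` saved the run under `./results/<run-id>`. `build_push_command` is a hypothetical helper, and the repo ID must be your own `username/repo-name`.

```python
import os
from typing import List


def build_push_command(run_id: str, repo_id: str, commit_message: str,
                       results_dir: str = "./results") -> List[str]:
    """Assemble an mlagents-push-to-hf call for a finished training run (hypothetical helper)."""
    # Hub repo IDs have the form "<namespace>/<repo-name>", where the namespace
    # is your username or an organization.
    if repo_id.count("/") != 1:
        raise ValueError(f"repo_id should look like 'username/repo-name', got {repo_id!r}")
    # Assumes mlagents-learn wrote its outputs to <results_dir>/<run_id>.
    local_dir = os.path.join(results_dir, run_id)
    return [
        "mlagents-push-to-hf",
        f"--run-id={run_id}",
        f"--local-dir={local_dir}",
        f"--repo-id={repo_id}",
        f"--commit-message={commit_message}",
    ]
```

Passing the result to `subprocess.run(...)` would then perform the push, with the same effect as the shell command above.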

- {

- "cell_type": "markdown",

- "source": [

- "If everything worked, you should see this at the end of the process (but with a different URL 😆):\n",

- "\n",

- "\n",

- "\n",

- "```\n",

- "Your model is pushed to the hub. You can view your model here: https://huggingface.co/ThomasSimonini/MLAgents-Pyramids\n",

- "```\n",

- "\n",

- "This is the link to your model. Its repository contains a model card that explains how to use it, your TensorBoard logs, and your config file. **What’s awesome is that it’s a git repository, which means you can have different commits, update your repository with a new push, etc.**"

- ],

- "metadata": {

- "id": "yborB0850FTM"

- }

- },

- {

- "cell_type": "markdown",

- "source": [

- ""

- ],

- "metadata": {

- "id": "6YSyjSqy0NJH"

- }

- },

- {

- "cell_type": "markdown",

- "source": [

- "But now comes the best part: **being able to visualize your agent online 👀.**"

- ],

- "metadata": {

- "id": "5Uaon2cg0NrL"

- }

- },

- {

- "cell_type": "markdown",

- "source": [

- "### Step 6: Watch our agent play 👀\n",

- "\n",

- "This step is simple:\n",

- "\n",

- "- Go to your repository.\n",

- "- In the **Watch Your Agent Play** section, click on the link: https://huggingface.co/spaces/unity/ML-Agents-Pyramids"

- ],

- "metadata": {

- "id": "VMc4oOsE0QiZ"

- }

- },

- {

- "cell_type": "markdown",

- "source": [

- ""

- ],

- "metadata": {

- "id": "Pp93P0fM0ZQP"

- }

- },

- {

- "cell_type": "markdown",

- "source": [

- "1. In step 1, choose your model repository, which is the model id (in my case ThomasSimonini/MLAgents-Pyramids).\n",

- "\n",

- "2. In step 2, **choose which model you want to replay**:\n",

- " - I have multiple ones, since we saved a model every 500000 timesteps.\n",

- " - If I want the most recent one, I choose Pyramids.onnx\n",

- "\n",

- "👉 What’s nice is to **try different model checkpoints to see how the agent improves.**"

- ],

- "metadata": {

- "id": "Djs8c5rR0Z8a"

- }

- },
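To estimate which checkpoints you can choose from, here is a back-of-the-envelope sketch. It assumes a checkpoint is written every 500,000 steps (as stated above, and matching the default of ML-Agents' `checkpoint_interval` setting), that training runs to the `max_steps` value set in Step 3, and that only the newest few are kept (ML-Agents' `keep_checkpoints` setting, default 5). The function `checkpoint_steps` is hypothetical and simplifies the real behavior.

```python
from typing import List


def checkpoint_steps(max_steps: int, interval: int = 500_000, keep: int = 5) -> List[int]:
    """Steps at which intermediate checkpoints exist, keeping only the newest `keep`.

    Simplified model: one checkpoint every `interval` steps up to `max_steps`;
    once more than `keep` exist, the oldest ones are dropped.
    """
    if interval < 1 or keep < 1:
        raise ValueError("interval and keep must be positive")
    steps = list(range(interval, max_steps + 1, interval))
    return steps[-keep:]
```

With `max_steps` set to 1,000,000, `checkpoint_steps(1_000_000)` gives `[500000, 1000000]`, so you would expect only a couple of checkpoints to compare.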

- {

- "cell_type": "markdown",

- "source": [

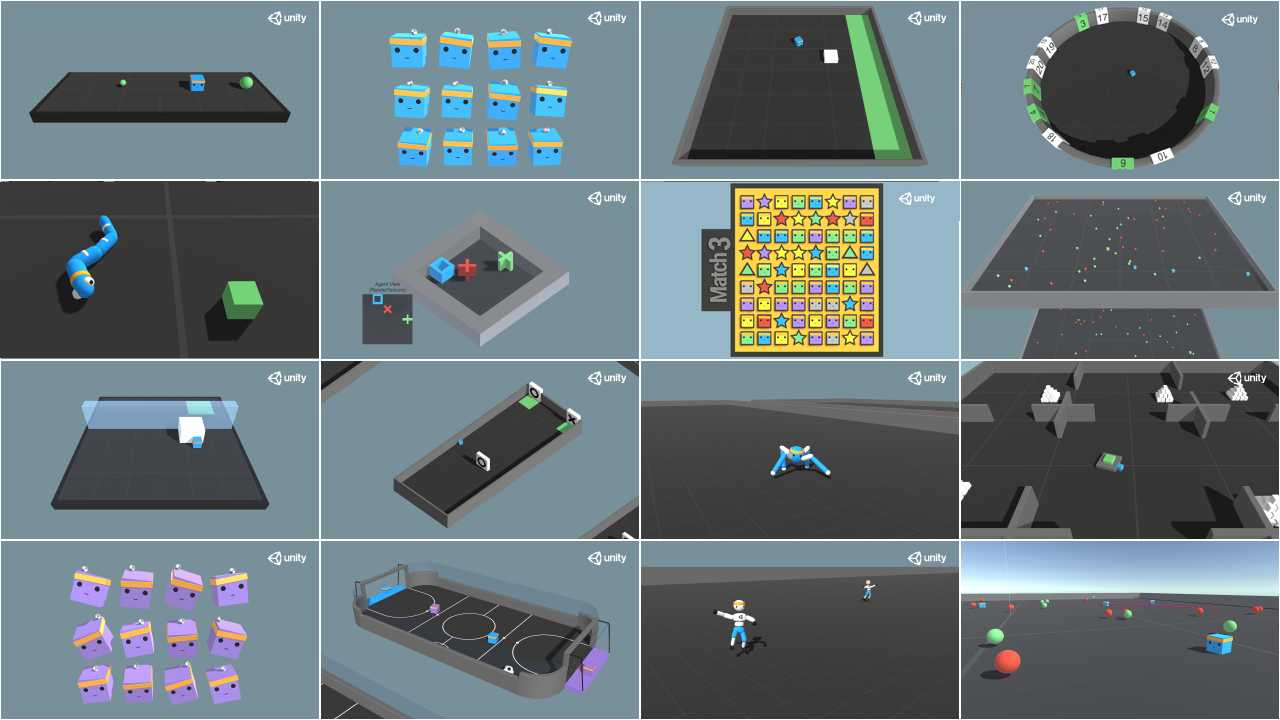

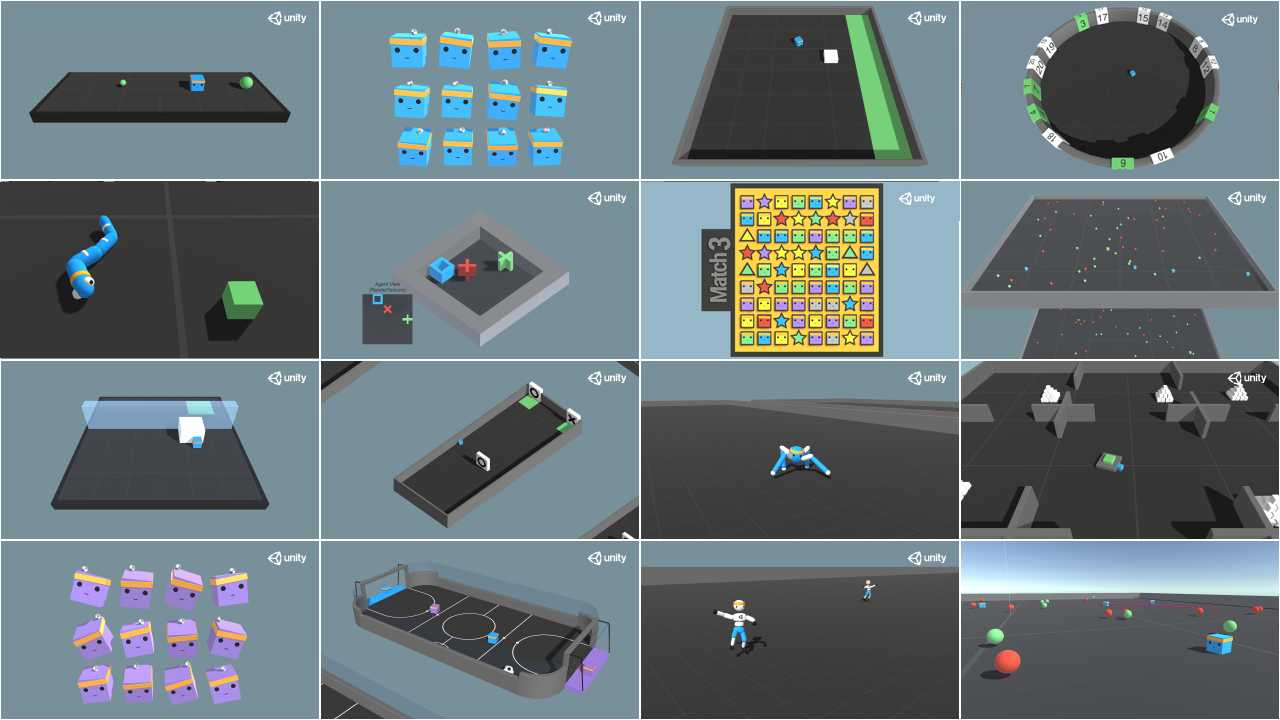

- "### 🎁 Bonus: Why not train on another environment?\n",

- "Now that you know how to train an agent using ML-Agents, **why not try another environment?**\n",

- "\n",

- "ML-Agents provides 18 different environments, and we’re building some custom ones. The best way to learn is to try things on your own, so have fun.\n",

- "\n"

- ],

- "metadata": {

- "id": "hGG_oq2n0wjB"

- }

- },

- {

- "cell_type": "markdown",

- "source": [

- ""

- ],

- "metadata": {

- "id": "KSAkJxSr0z6-"

- }

- },

- {

- "cell_type": "markdown",

- "source": [

- "The full list of environments currently available on Hugging Face is here 👉 https://github.com/huggingface/ml-agents#the-environments"

- ],

- "metadata": {

- "id": "YiyF4FX-04JB"

- }

- },

- {

- "cell_type": "markdown",

- "source": [

- "That’s all for today. Congrats on finishing this tutorial! You’ve just trained your first ML-Agent and shared it on the Hub 🥳.\n",

- "\n",

- "The best way to learn is to practice and try stuff. Why not try another environment? ML-Agents has 18 different environments, and you can also create your own. Check the documentation and have fun!\n",

- "\n",

- "## Keep Learning, Stay awesome 🤗"

- ],

- "metadata": {

- "id": "PI6dPWmh064H"

- }

- }

- ],

- "metadata": {

- "accelerator": "GPU",

- "colab": {

- "collapsed_sections": [],

- "name": "Unit 4: Unity3D DeepRL on Colab.ipynb",

- "provenance": [],

- "private_outputs": true,

- "include_colab_link": true

- },

- "gpuClass": "standard",

- "kernelspec": {

- "display_name": "Python 3",

- "name": "python3"

- },

- "language_info": {

- "name": "python"

- }

- },

- "nbformat": 4,

- "nbformat_minor": 0

-}