diff --git a/units/en/unit2/additional-readings.mdx b/units/en/unit2/additional-readings.mdx

new file mode 100644

index 0000000..9a14724

--- /dev/null

+++ b/units/en/unit2/additional-readings.mdx

@@ -0,0 +1,15 @@

+# Additional Readings [[additional-readings]]

+

+These are **optional readings** if you want to go deeper.

+

+## Monte Carlo and TD Learning [[mc-td]]

+

+To dive deeper into Monte Carlo and Temporal Difference Learning:

+

+- Why do temporal difference (TD) methods have lower variance than Monte Carlo methods?

+- When are Monte Carlo methods preferred over temporal difference ones?

+

+## Q-Learning [[q-learning]]

+

+- *Reinforcement Learning: An Introduction*, Richard Sutton and Andrew G. Barto, Chapters 5, 6, and 7

+- Foundations of Deep RL Series, L2 Deep Q-Learning by Pieter Abbeel

diff --git a/units/en/unit2/bellman-equation.mdx b/units/en/unit2/bellman-equation.mdx

new file mode 100644

index 0000000..6d224f0

--- /dev/null

+++ b/units/en/unit2/bellman-equation.mdx

@@ -0,0 +1,57 @@

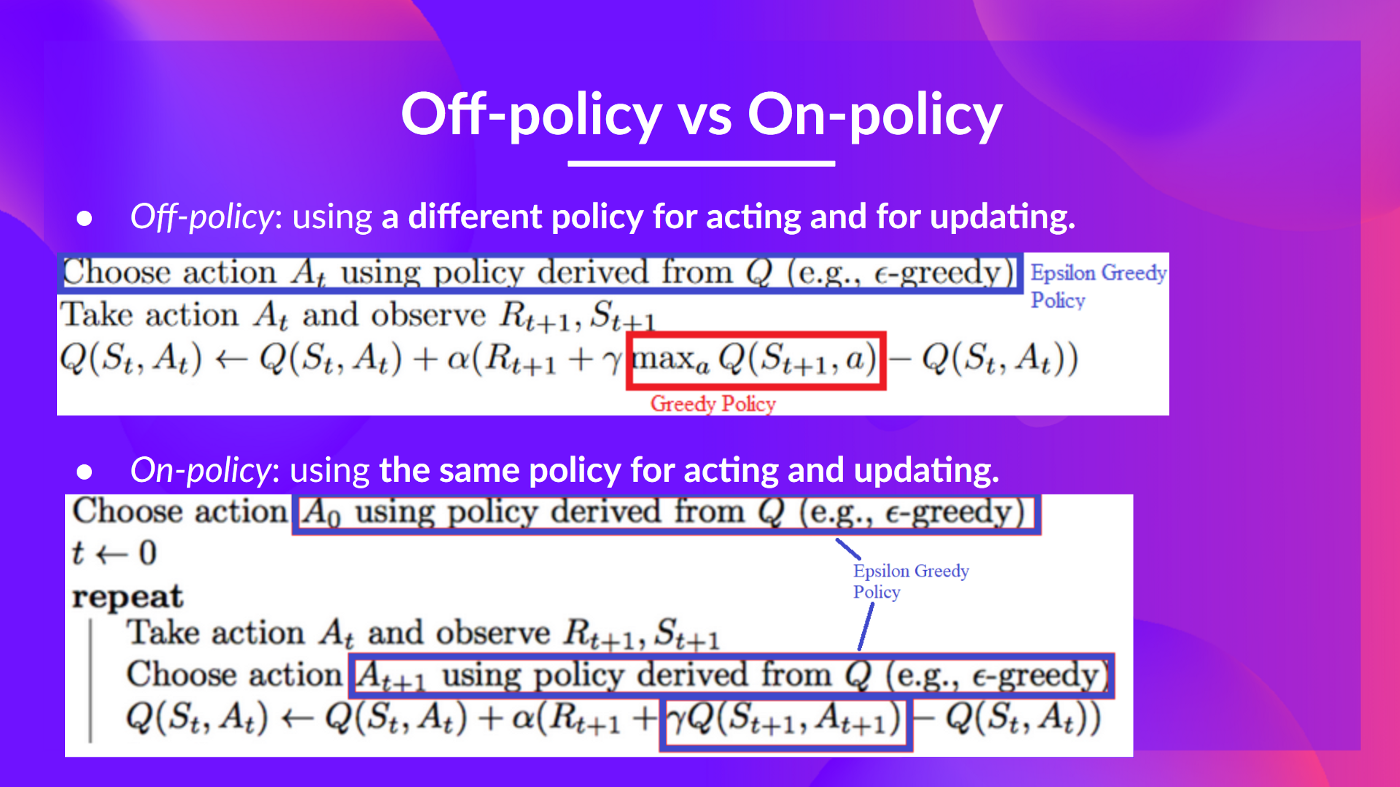

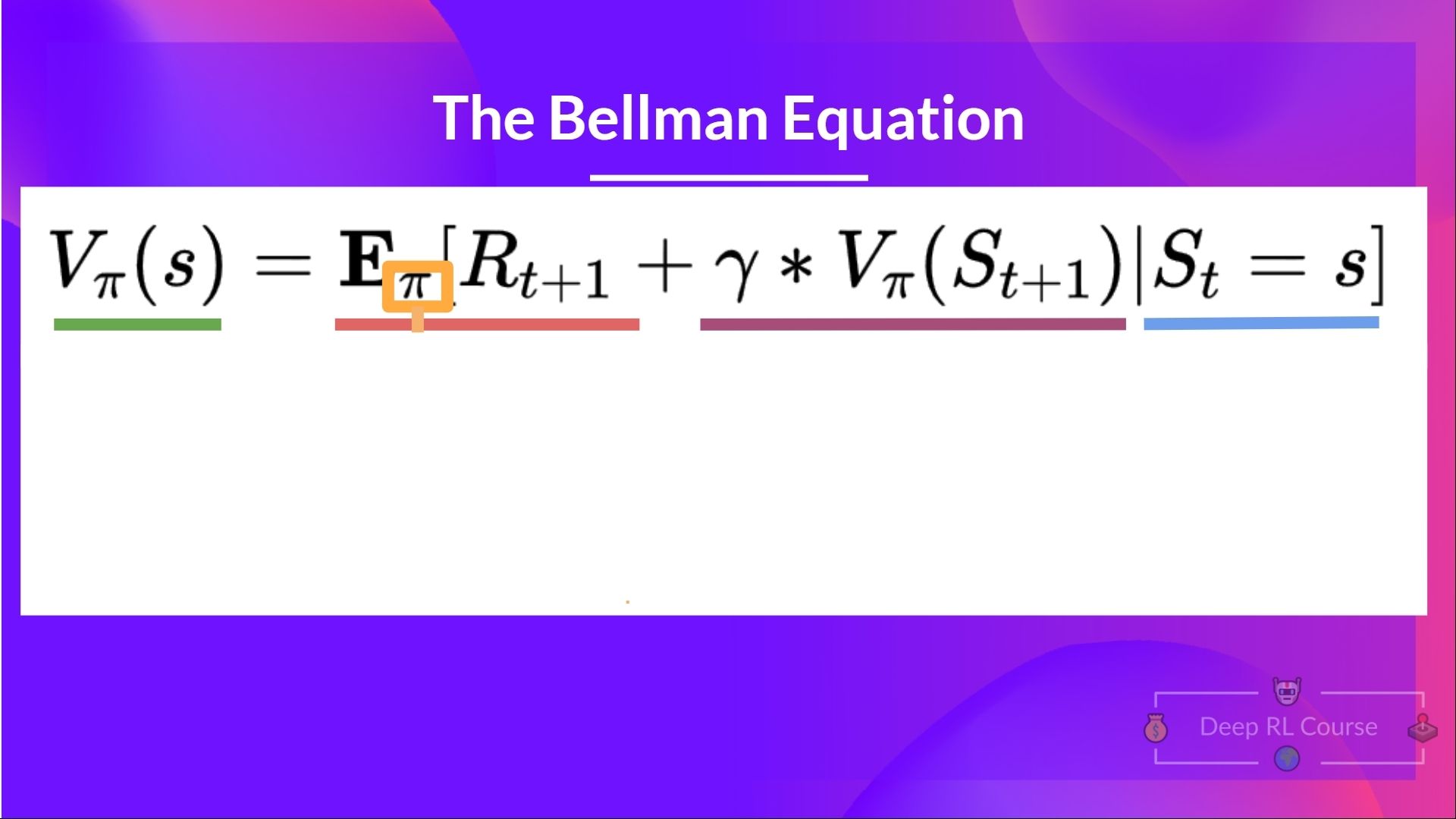

+# The Bellman Equation: simplify our value estimation [[bellman-equation]]

+

+The Bellman equation **simplifies our state value or state-action value calculation.**

+

+

+ +

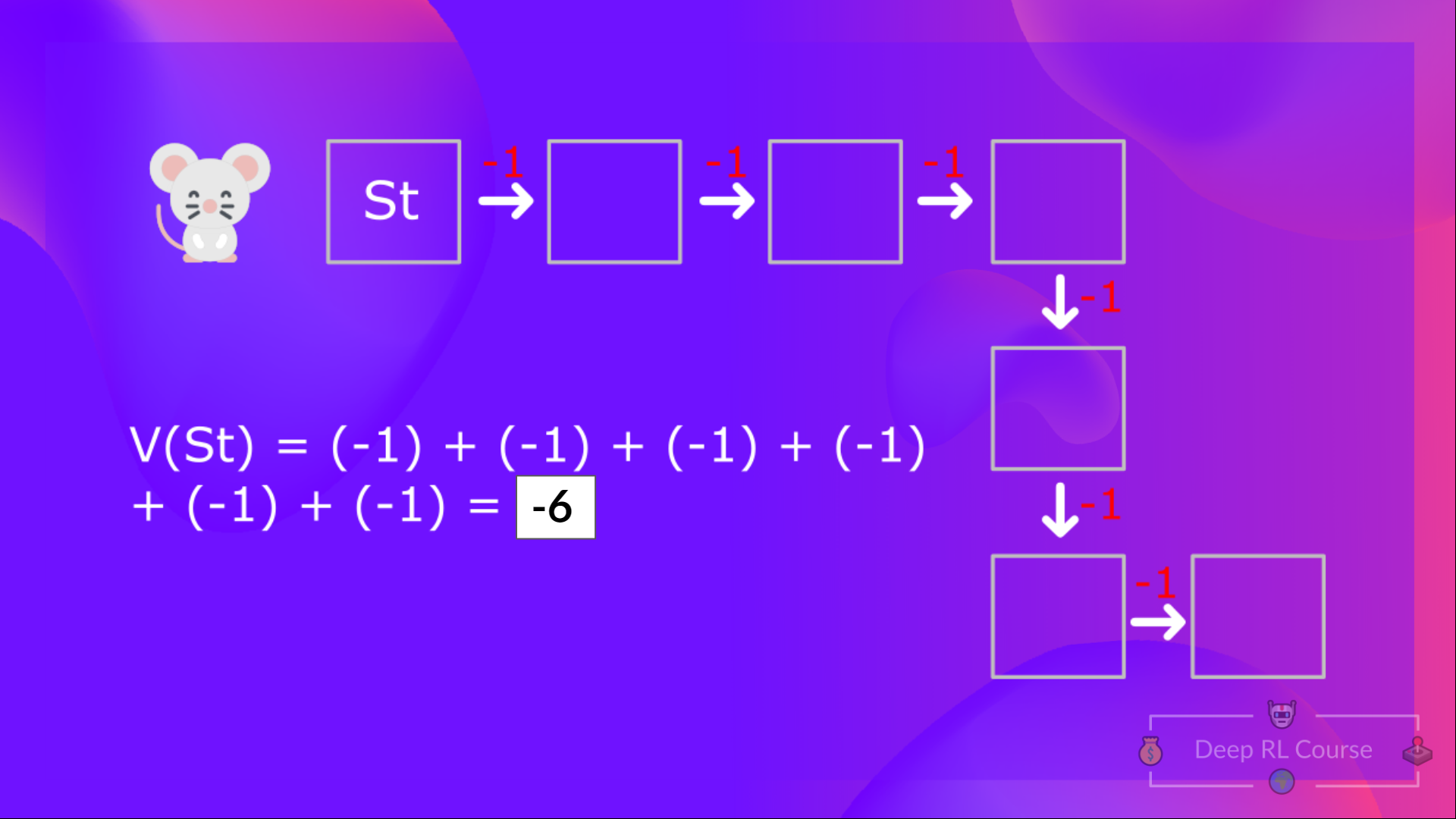

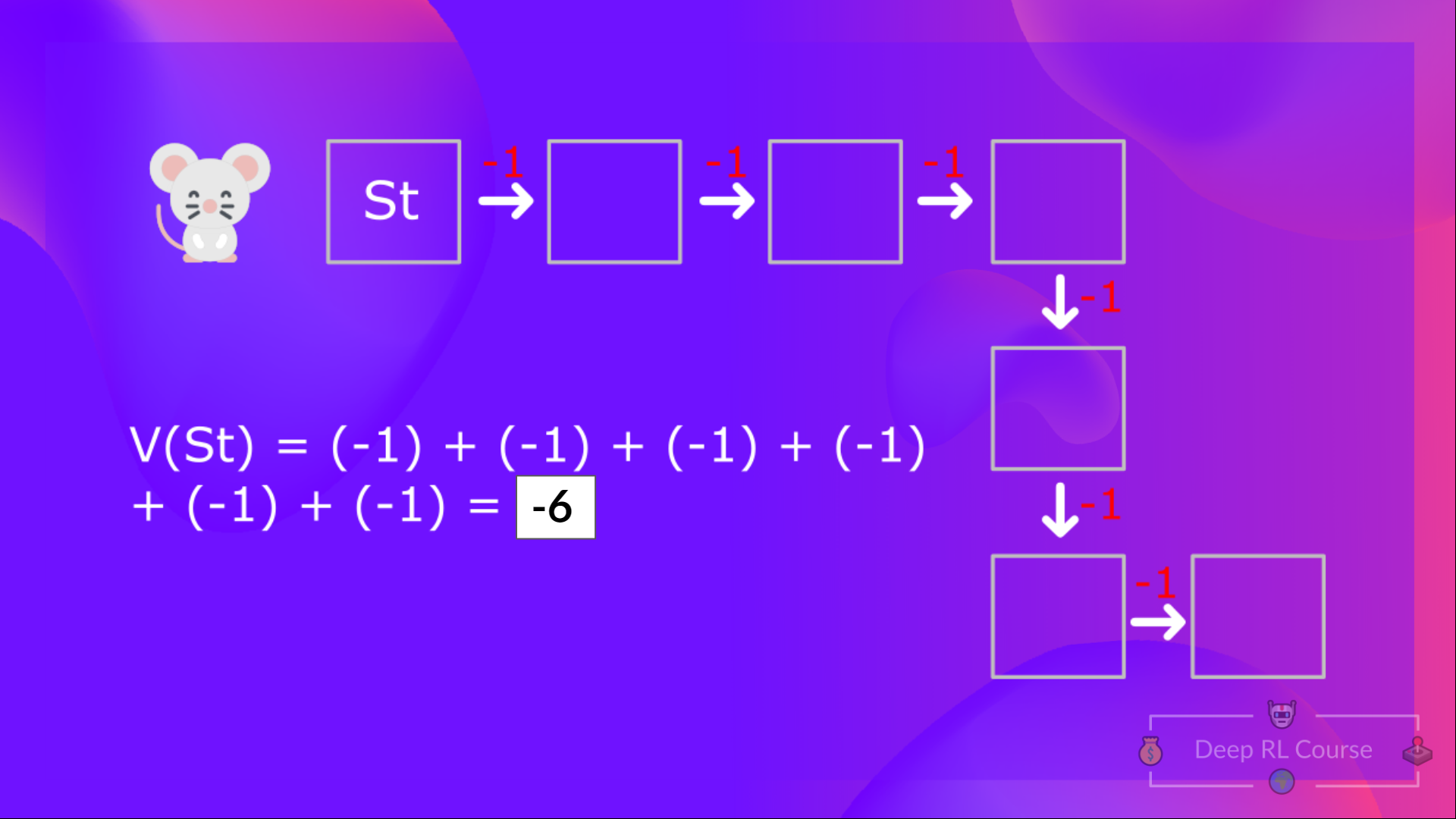

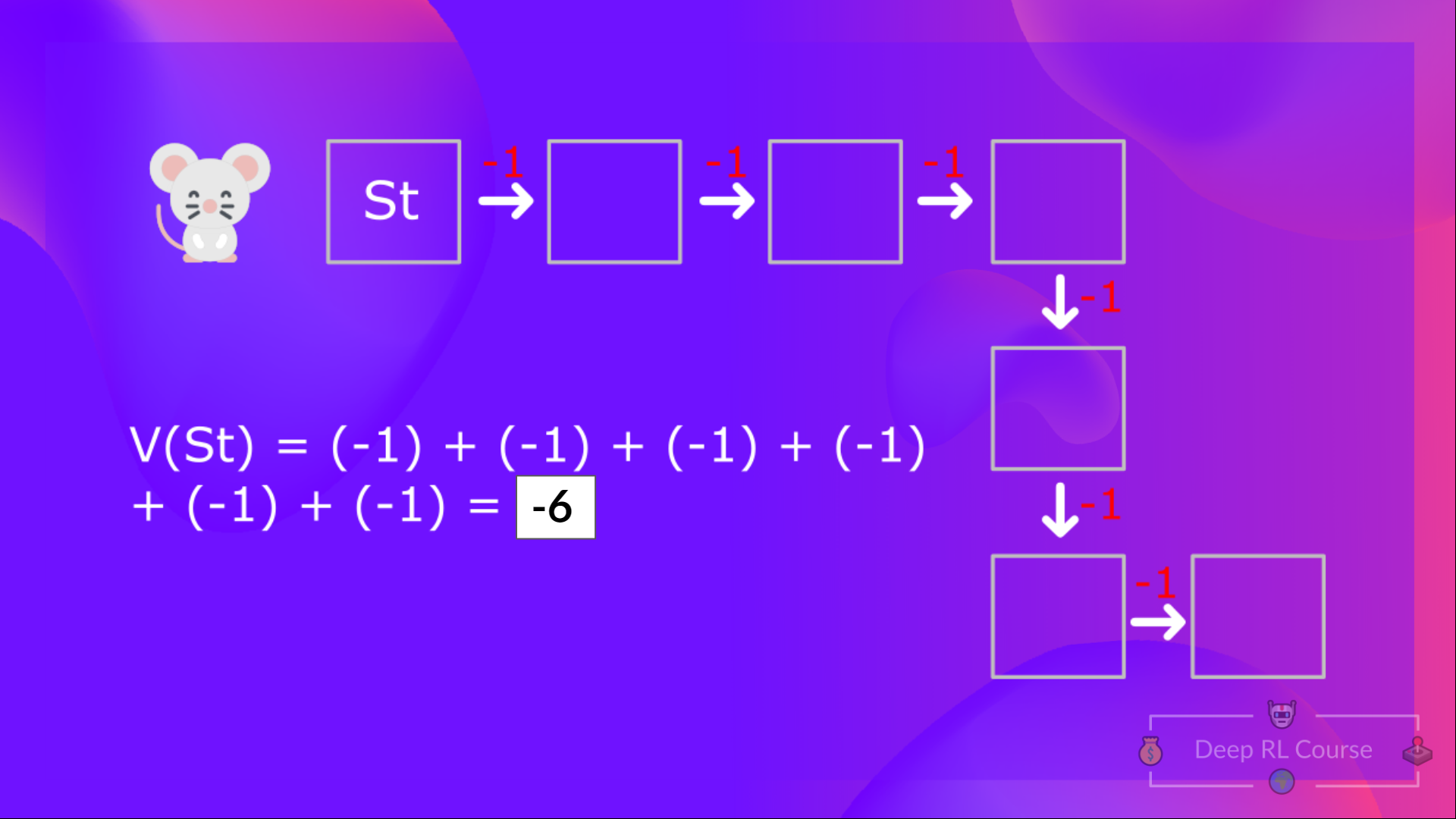

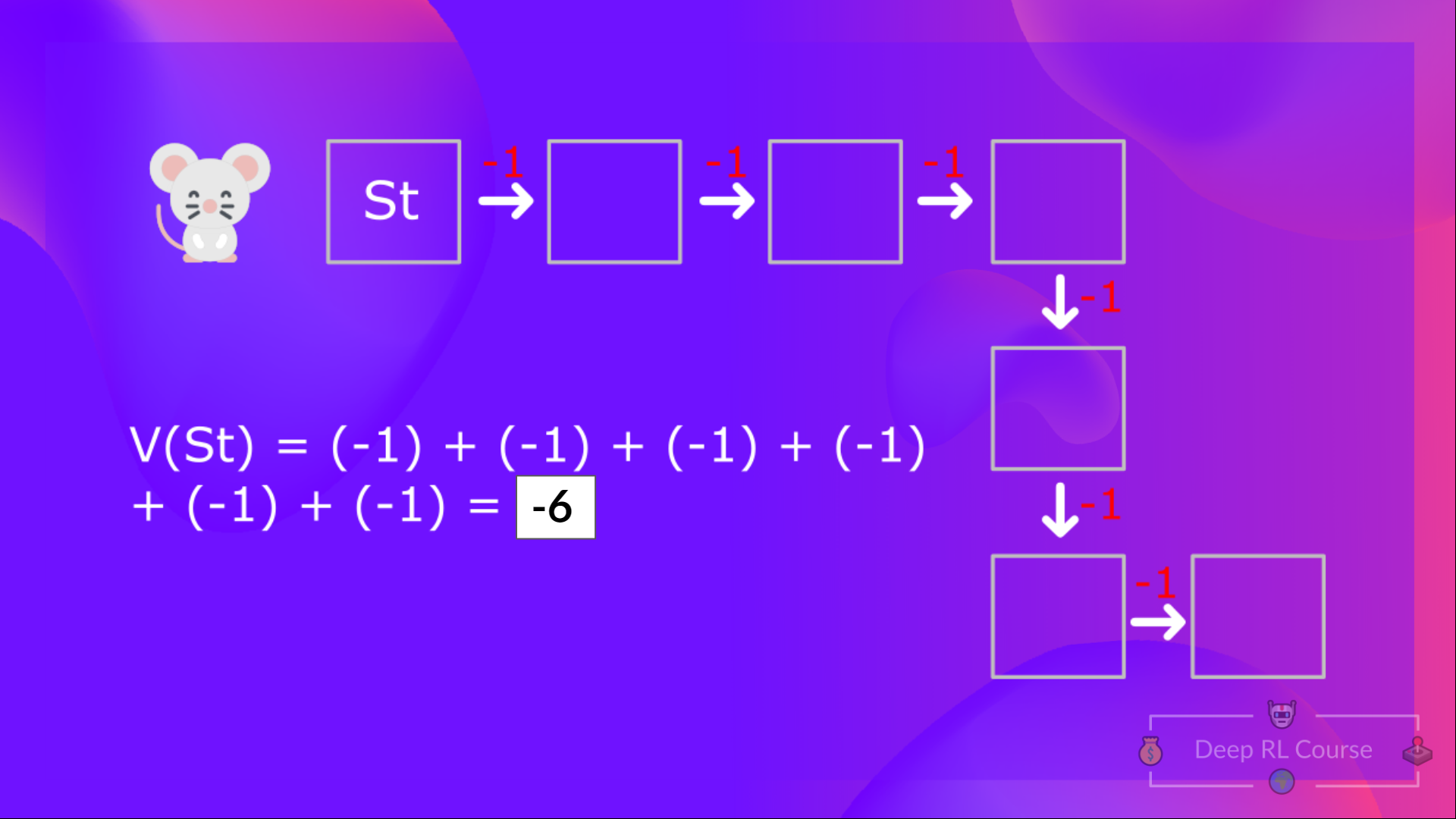

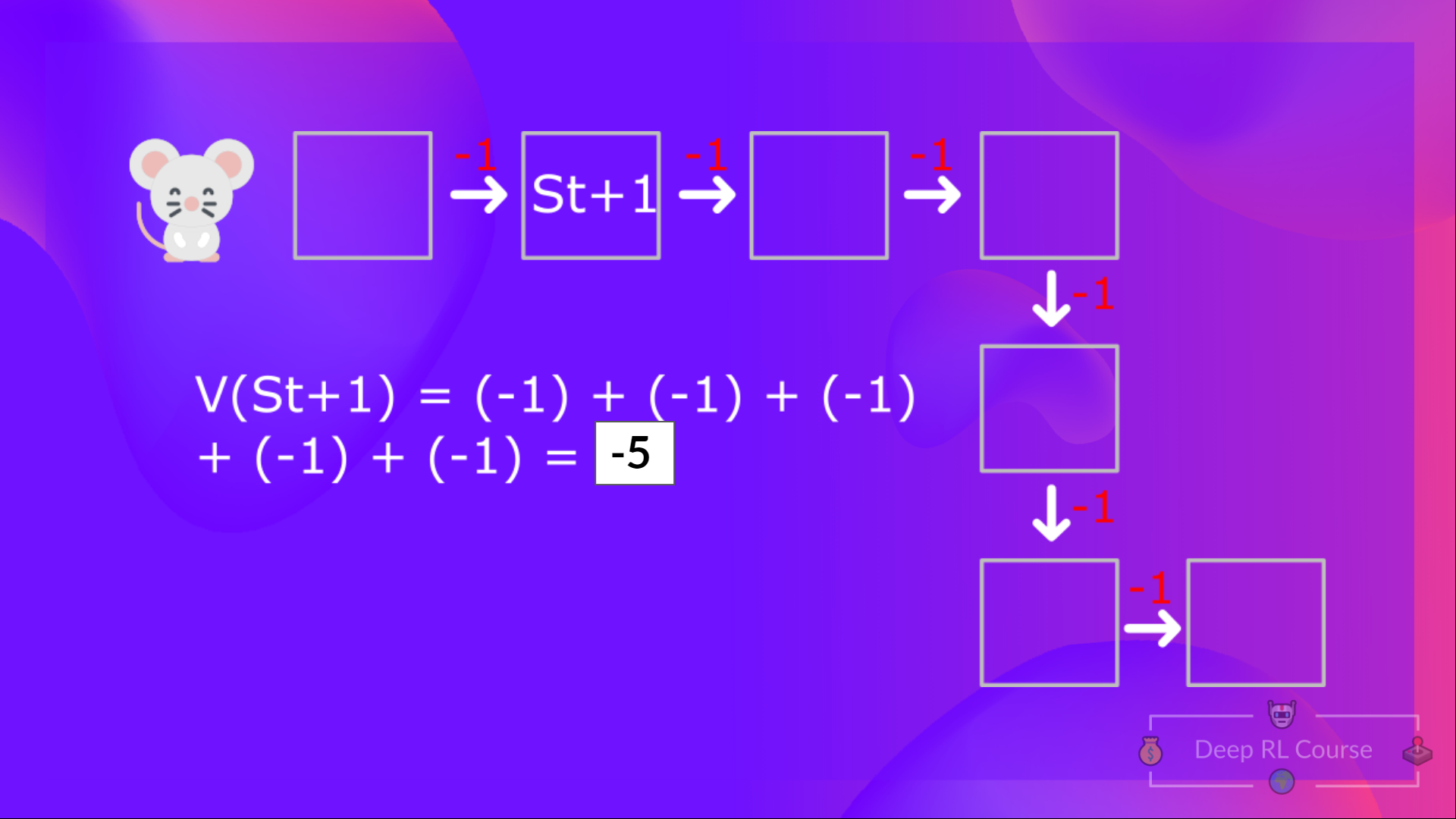

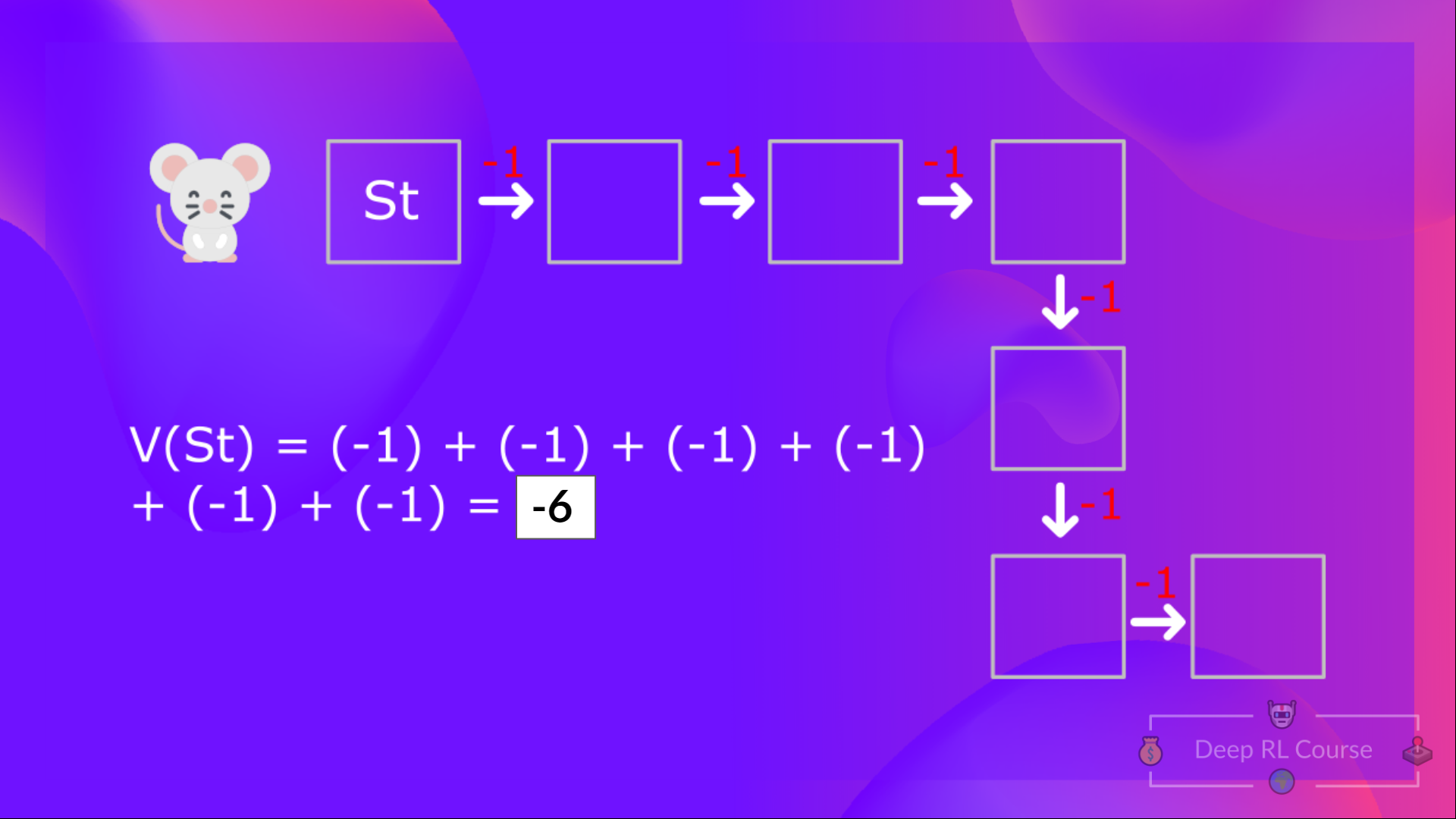

+With what we have learned so far, we know that to calculate \\(V(S_t)\\) (the value of a state), we need to calculate the return starting at that state and then following the policy forever after. **(The policy we define in the following example is a Greedy Policy; for simplification, we don't discount the reward.)**

+

+So to calculate \\(V(S_t)\\), we need to calculate the sum of the expected rewards. Hence:

+

+

+


+To calculate the value of State 1: the sum of rewards if the agent started in that state and then followed the greedy policy (taking actions that lead to the best state values) for all the time steps.

+

+

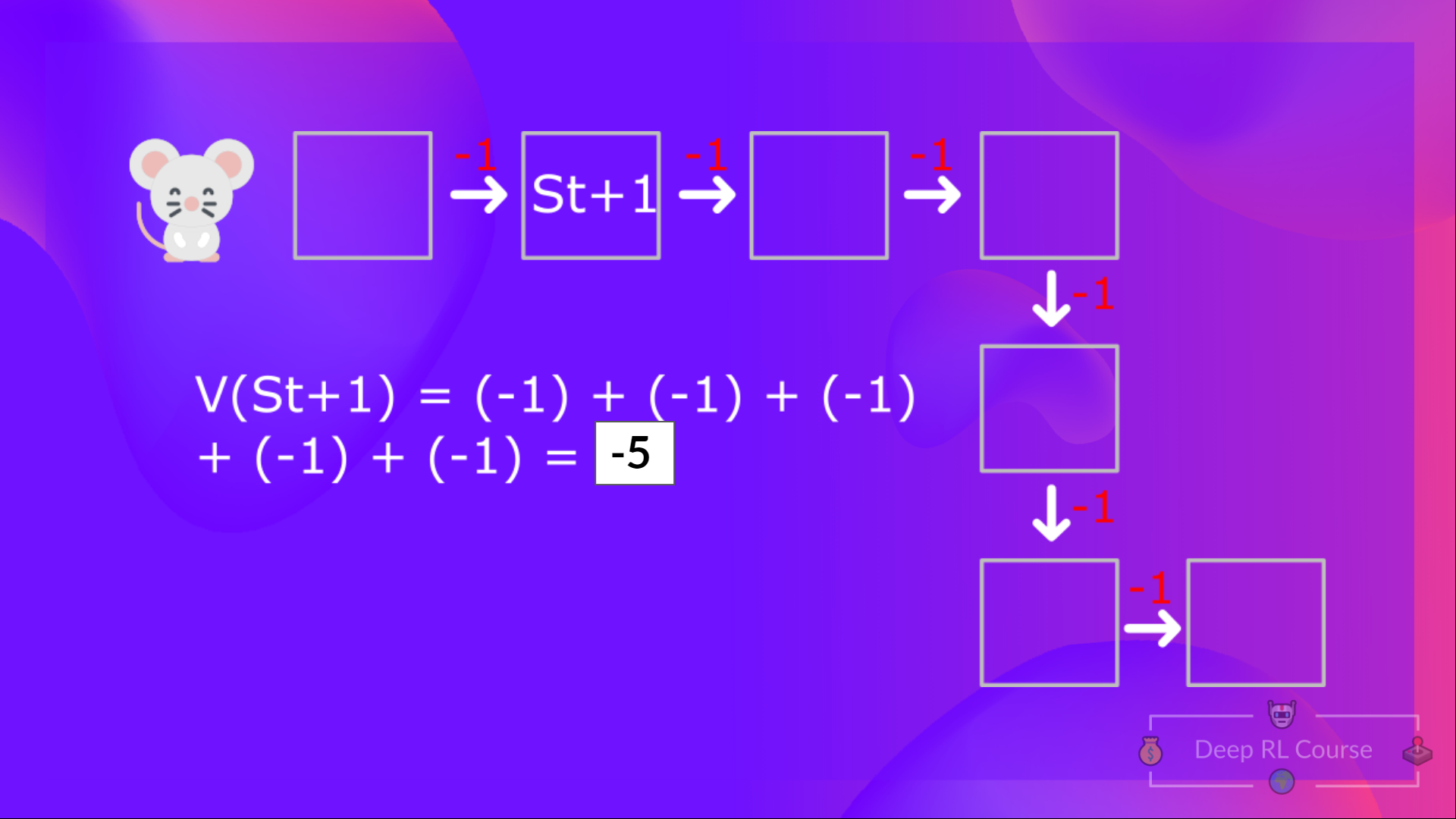

+Then, to calculate the \\(V(S_{t+1})\\), we need to calculate the return starting at that state \\(S_{t+1}\\).

+

+


+To calculate the value of State 2: the sum of rewards **if the agent started in that state and then followed the policy for all the time steps.**

+

+

+So you see, that's a pretty tedious process if you need to do it for each state value or state-action value.

+

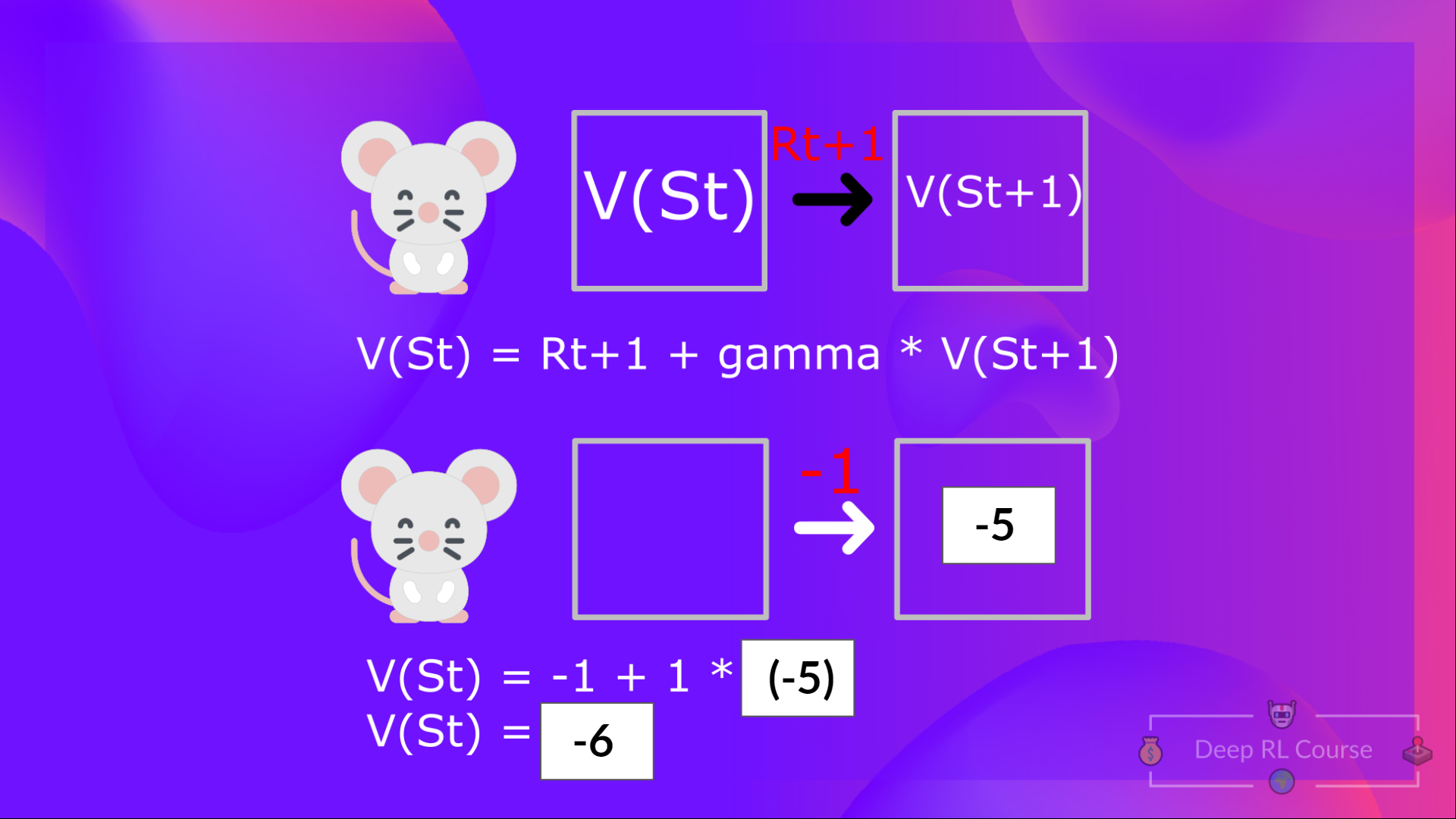

+Instead of calculating the expected return for each state or each state-action pair, **we can use the Bellman equation.**

+

+The Bellman equation is a recursive equation that works like this: instead of starting for each state from the beginning and calculating the return, we can consider the value of any state as:

+

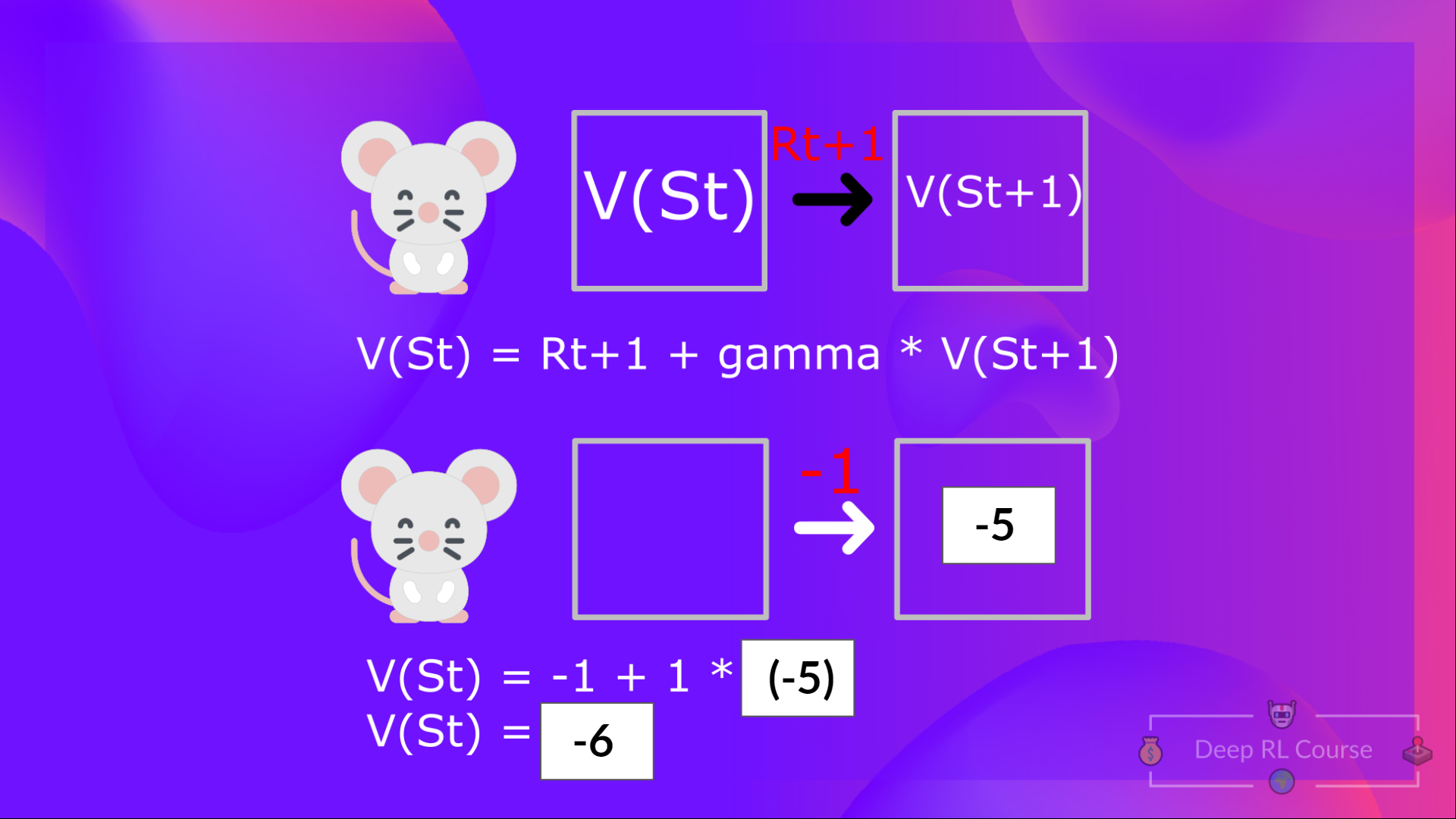

+**The immediate reward \\(R_{t+1}\\) + the discounted value of the state that follows ( \\(\gamma * V(S_{t+1})\\) ).**

+
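In the usual textbook notation (with \\(\pi\\) the policy, and the expectation taken over the policy's actions and the environment's transitions), this recursion is the Bellman equation for the state-value function:

$$
V_{\pi}(s) = \mathbb{E}_{\pi}\left[ R_{t+1} + \gamma V_{\pi}(S_{t+1}) \mid S_t = s \right]
$$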


+For simplification, here we don’t discount, so gamma = 1.

+

+

+

+If we go back to our example, we can say that the value of State 1 is equal to the expected cumulative return if we start at that state.

+


+To calculate the value of State 1: the sum of rewards **if the agent started in State 1** and then followed the **policy for all the time steps.**

+

+This is equivalent to \\(V(S_{t})\\) = Immediate reward \\(R_{t+1}\\) + Discounted value of the next state \\(\gamma * V(S_{t+1})\\)

+


+In the interest of simplicity, here we don't discount, so gamma = 1.

+

+- The value of \\(V(S_{t+1})\\) = Immediate reward \\(R_{t+2}\\) + Discounted value of the next state ( \\(\gamma * V(S_{t+2})\\) ).

+- And so on.

+

+To recap, the idea of the Bellman equation is that instead of calculating each value as the sum of the expected return, **which is a long process**, we calculate it as **the sum of the immediate reward + the discounted value of the state that follows.**

+
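To make the recursion concrete, here is a minimal Python sketch on a hypothetical deterministic chain of states (the rewards are made up for illustration, not taken from the figures). It computes each state value twice: once the tedious way, by summing all rewards until the end, and once with the Bellman recursion \\(V(S_t) = R_{t+1} + \gamma * V(S_{t+1})\\), using gamma = 1 as in the example.

```python
# Hypothetical chain: state i moves deterministically to state i + 1 and
# receives rewards[i]; the episode ends after the last transition.
rewards = [1, 0, 1, 2]  # illustrative values, not from the course figures
gamma = 1.0  # no discounting, as in the example above


def value_by_summing(start):
    """Return of the trajectory starting at `start` (the tedious way)."""
    return sum(gamma ** k * r for k, r in enumerate(rewards[start:]))


def values_by_bellman():
    """Compute V for every state with V(s) = r + gamma * V(next state)."""
    values = [0.0] * (len(rewards) + 1)  # the terminal state has value 0
    # Work backwards so V(next state) is already known when we need it.
    for s in reversed(range(len(rewards))):
        values[s] = rewards[s] + gamma * values[s + 1]
    return values[:-1]


bellman = values_by_bellman()
summed = [value_by_summing(s) for s in range(len(rewards))]
print(bellman)  # [4.0, 3.0, 3.0, 2.0]
print(summed)   # same values, computed the long way
```

Each Bellman value reuses the one after it instead of re-summing the whole tail. Sweeping backwards like this only works because the toy chain is fully known; an agent that does not know the transitions has to estimate these values from experience instead.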

+Before going to the next section, think about the role of gamma in the Bellman equation. What happens if the value of gamma is very low (e.g. 0.1 or even 0)? What happens if the value is 1? What happens if the value is very high, such as a million?

diff --git a/units/en/unit2/conclusion.mdx b/units/en/unit2/conclusion.mdx

new file mode 100644

index 0000000..f271ce0

--- /dev/null

+++ b/units/en/unit2/conclusion.mdx

@@ -0,0 +1,19 @@

+# Conclusion [[conclusion]]

+

+Congrats on finishing this chapter! There was a lot of information. And congrats on finishing the tutorials. You’ve just implemented your first RL agent from scratch and shared it on the Hub 🥳.

+

+When you study a new architecture, implementing it from scratch **is important to understand how it works.**

+

+It’s **normal if you still feel confused** by all these elements. **This was the same for me and for everyone who has studied RL.**

+

+Take time to really grasp the material before continuing.

+

+

+In the next chapter, we’re going to dive deeper by studying our first Deep Reinforcement Learning algorithm based on Q-Learning: Deep Q-Learning. You'll also train a **DQN agent with RL-Baselines3 Zoo to play Atari games**.

+

+


+### Keep Learning, stay awesome 🤗

diff --git a/units/en/unit2/hands-on.mdx b/units/en/unit2/hands-on.mdx

new file mode 100644

index 0000000..d683cac

--- /dev/null

+++ b/units/en/unit2/hands-on.mdx

@@ -0,0 +1,14 @@

+# Hands-on [[hands-on]]

+

+Now that we've studied the Q-Learning algorithm, let's implement it from scratch and train our Q-Learning agent in two environments:

+1. [Frozen-Lake-v1 (non-slippery and slippery version)](https://www.gymlibrary.dev/environments/toy_text/frozen_lake/) ☃️ : where our agent will need to **go from the starting state (S) to the goal state (G)** by walking only on frozen tiles (F) and avoiding holes (H).

+2. [An autonomous taxi](https://www.gymlibrary.dev/environments/toy_text/taxi/) 🚖 will need **to learn to navigate** a city to **transport its passengers from point A to point B.**

+


+Thanks to a [leaderboard](https://huggingface.co/spaces/huggingface-projects/Deep-Reinforcement-Learning-Leaderboard), you'll be able to compare your results with other classmates and exchange best practices to improve your agent's scores. Who will win the challenge for Unit 2?

+

+

+**To start the hands-on, click on the Open In Colab button** 👇:

+

+[]()

diff --git a/units/en/unit2/introduction.mdx b/units/en/unit2/introduction.mdx

new file mode 100644

index 0000000..409f025

--- /dev/null

+++ b/units/en/unit2/introduction.mdx

@@ -0,0 +1,26 @@

+# Introduction to Q-Learning [[introduction-q-learning]]

+


+

+In the first unit of this class, we learned about Reinforcement Learning (RL), the RL process, and the different methods to solve an RL problem. We also **trained our first agents and uploaded them to the Hugging Face Hub.**

+

+In this unit, we're going to **dive deeper into one of the Reinforcement Learning methods: value-based methods** and study our first RL algorithm: **Q-Learning.**

+

+We'll also **implement our first RL agent from scratch**, a Q-Learning agent, and will train it in two environments:

+

+1. Frozen-Lake-v1 (non-slippery version): where our agent will need to **go from the starting state (S) to the goal state (G)** by walking only on frozen tiles (F) and avoiding holes (H).

+2. An autonomous taxi: where our agent will need **to learn to navigate** a city to **transport its passengers from point A to point B.**

+


+Concretely, we will:

+

+- Learn about **value-based methods**.

+- Learn about the **differences between Monte Carlo and Temporal Difference Learning**.

+- Study and implement **our first RL algorithm**: Q-Learning.

+

+This unit is **fundamental if you want to be able to work on Deep Q-Learning**: the first Deep RL algorithm that played Atari games and beat the human level on some of them (Breakout, Space Invaders…).

+

+So let's get started! 🚀

diff --git a/units/en/unit2/mc-vs-td.mdx b/units/en/unit2/mc-vs-td.mdx

new file mode 100644

index 0000000..e78ee78

--- /dev/null

+++ b/units/en/unit2/mc-vs-td.mdx

@@ -0,0 +1,126 @@

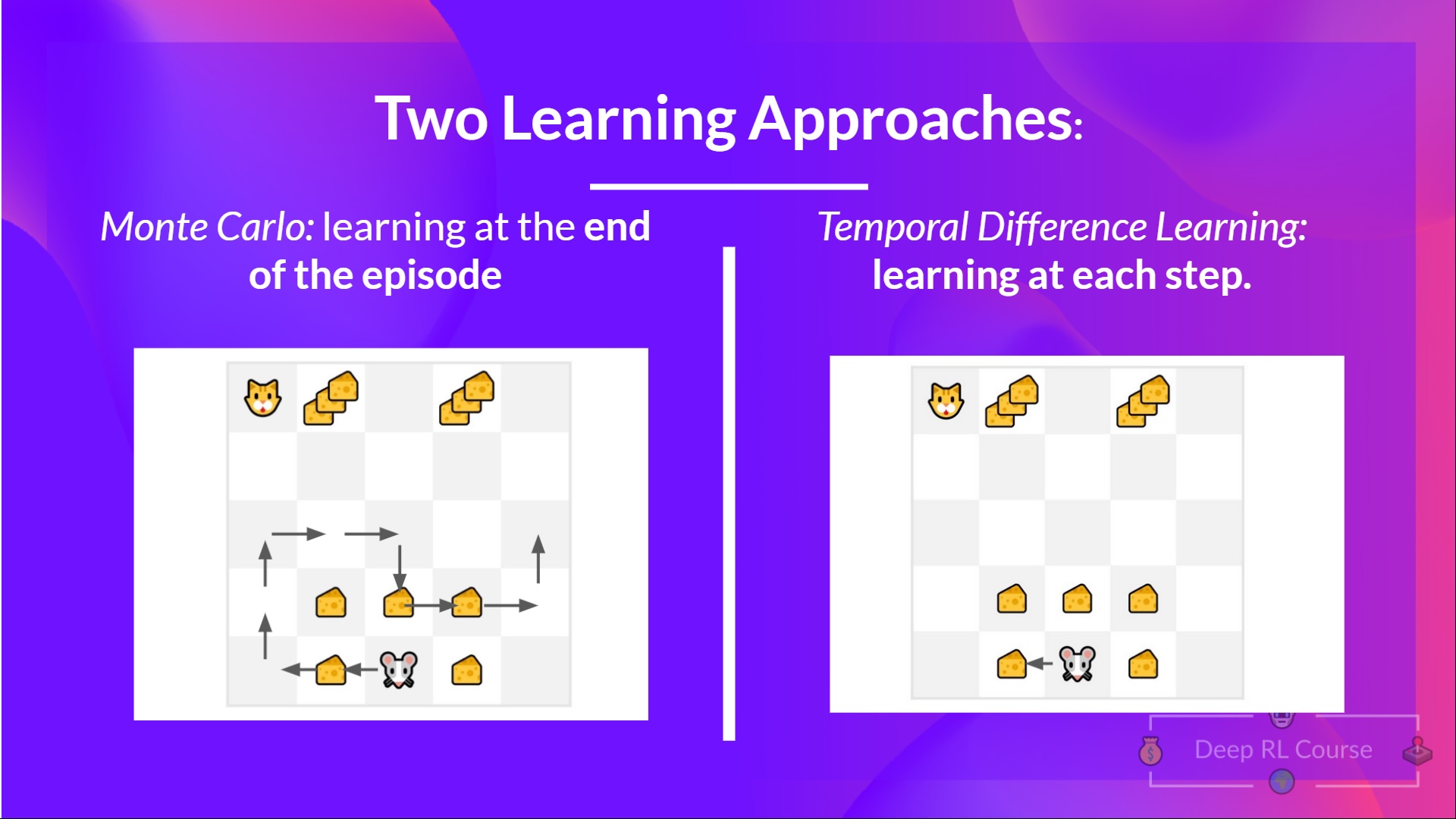

+# Monte Carlo vs Temporal Difference Learning [[mc-vs-td]]

+

+Before diving into Q-Learning, there is one last thing we need to discuss: the two ways of learning.

+

+Remember that an RL agent **learns by interacting with its environment.** The idea is that **given the experience and the reward it receives, the agent will update its value function or policy.**

+

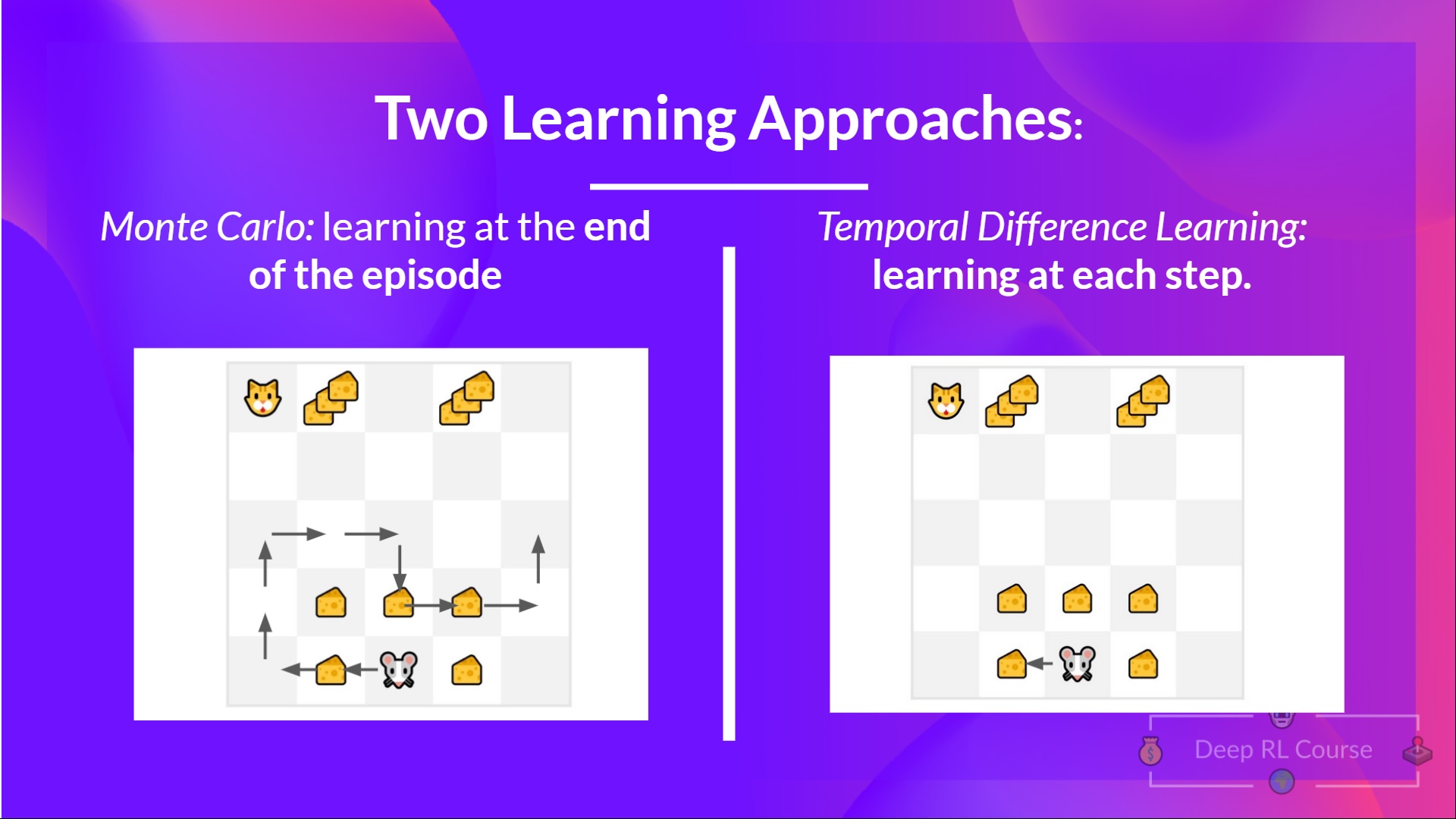

+Monte Carlo and Temporal Difference Learning are two different **strategies on how to train our value function or our policy function.** Both of them **use experience to solve the RL problem.**

+

+On one hand, Monte Carlo uses **an entire episode of experience before learning.** On the other hand, Temporal Difference uses **only a step ( \\(S_t, A_t, R_{t+1}, S_{t+1}\\) ) to learn.**

+

+We'll explain both of them **using a value-based method example.**

+

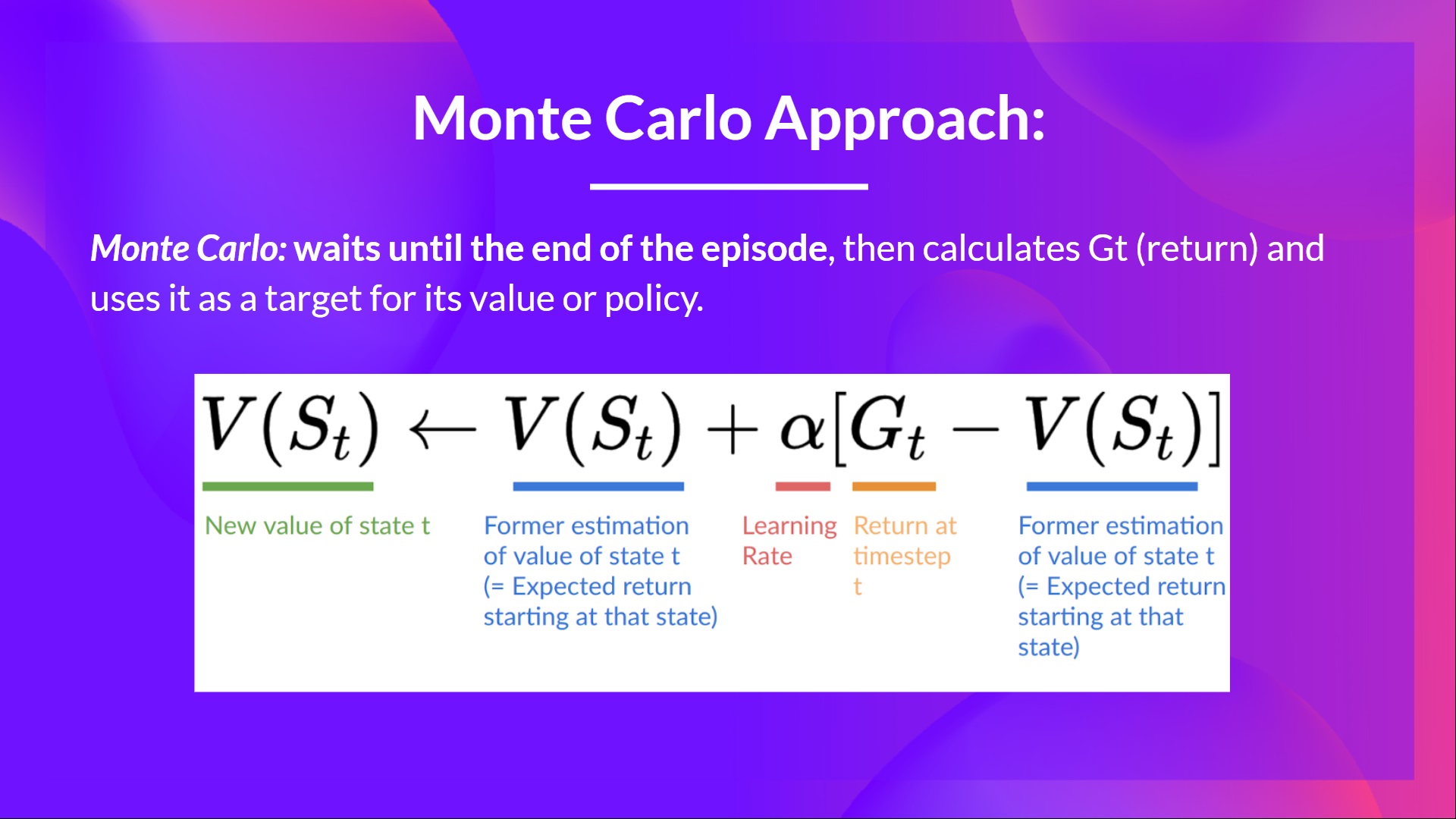

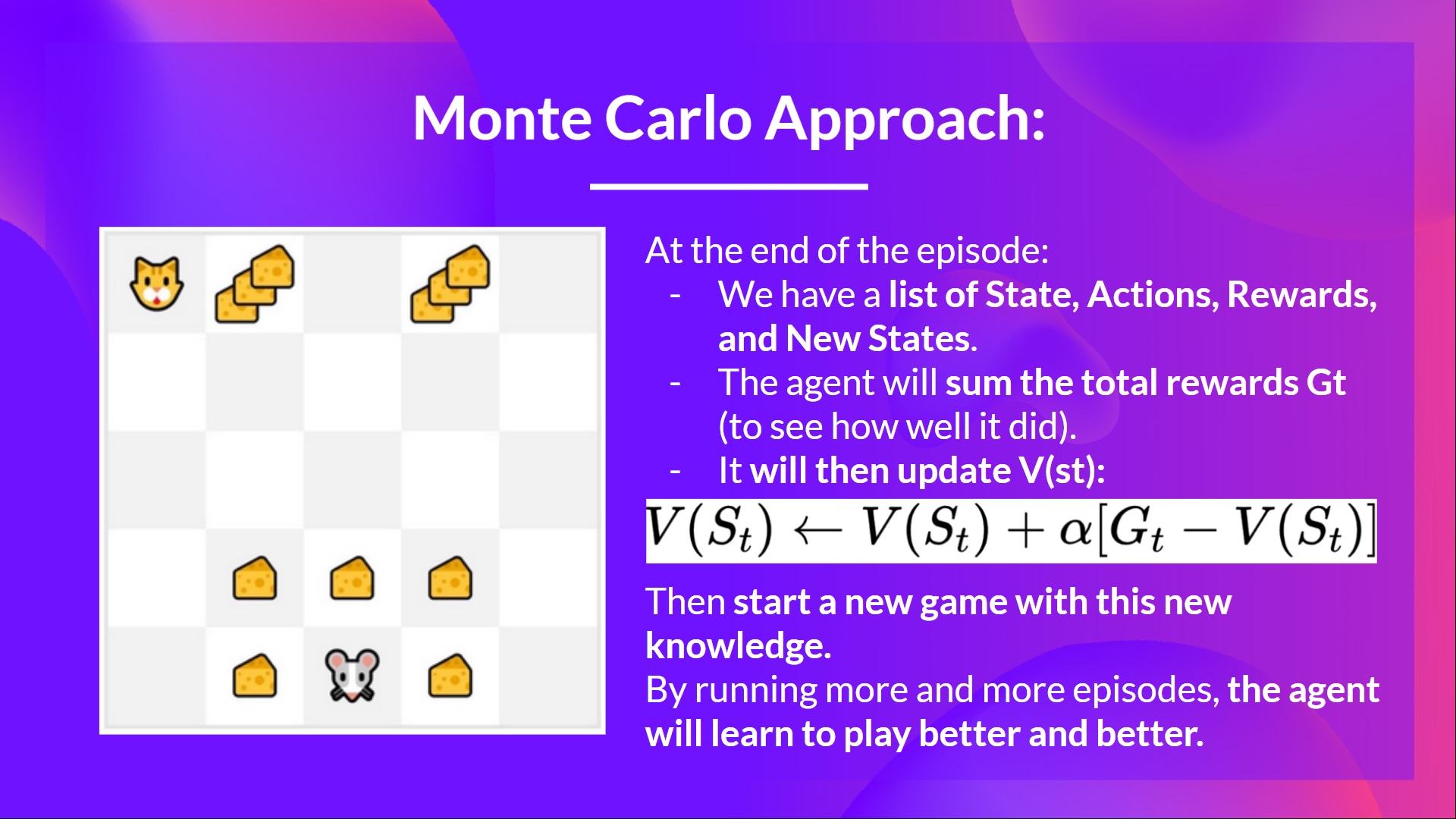

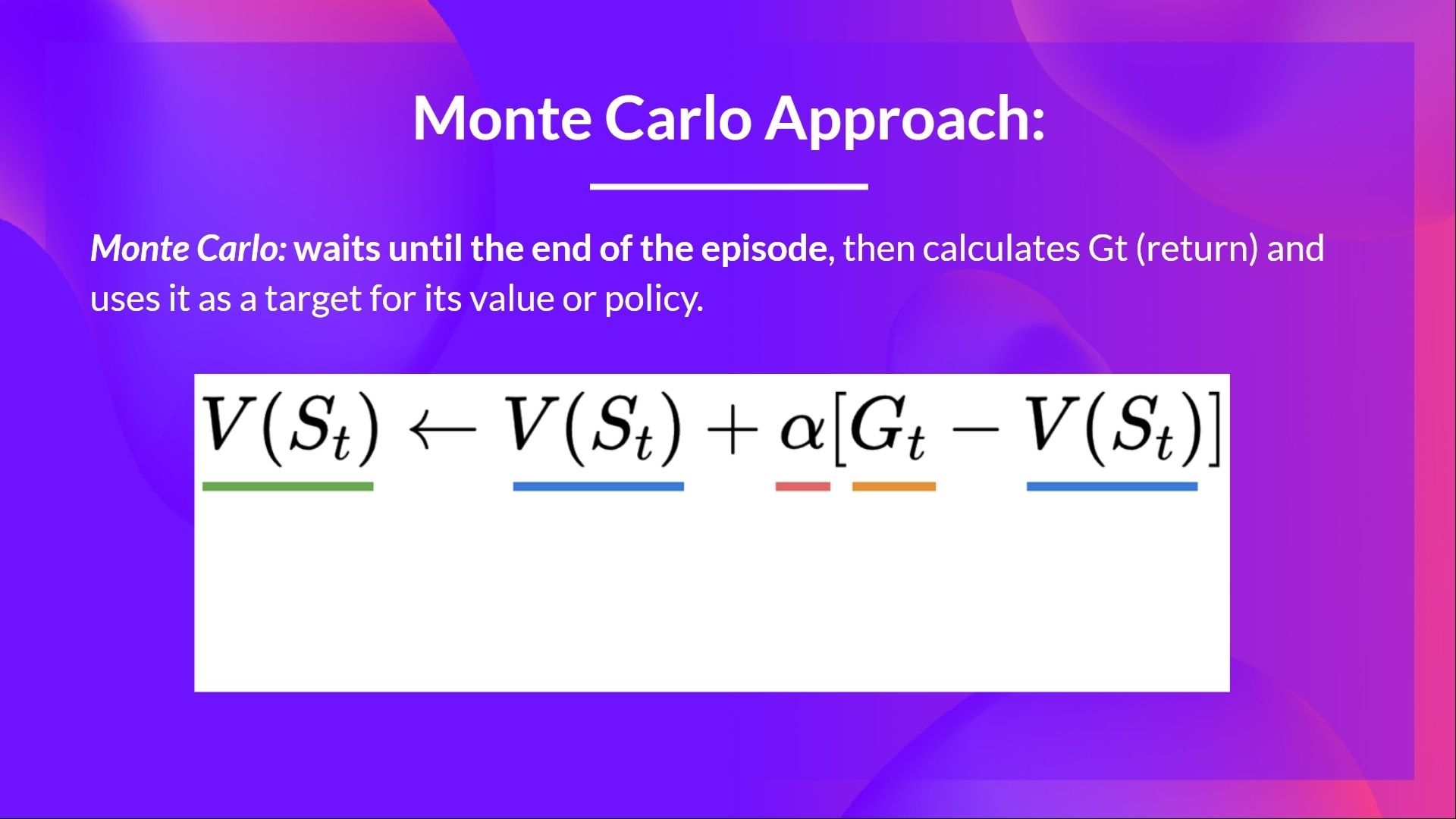

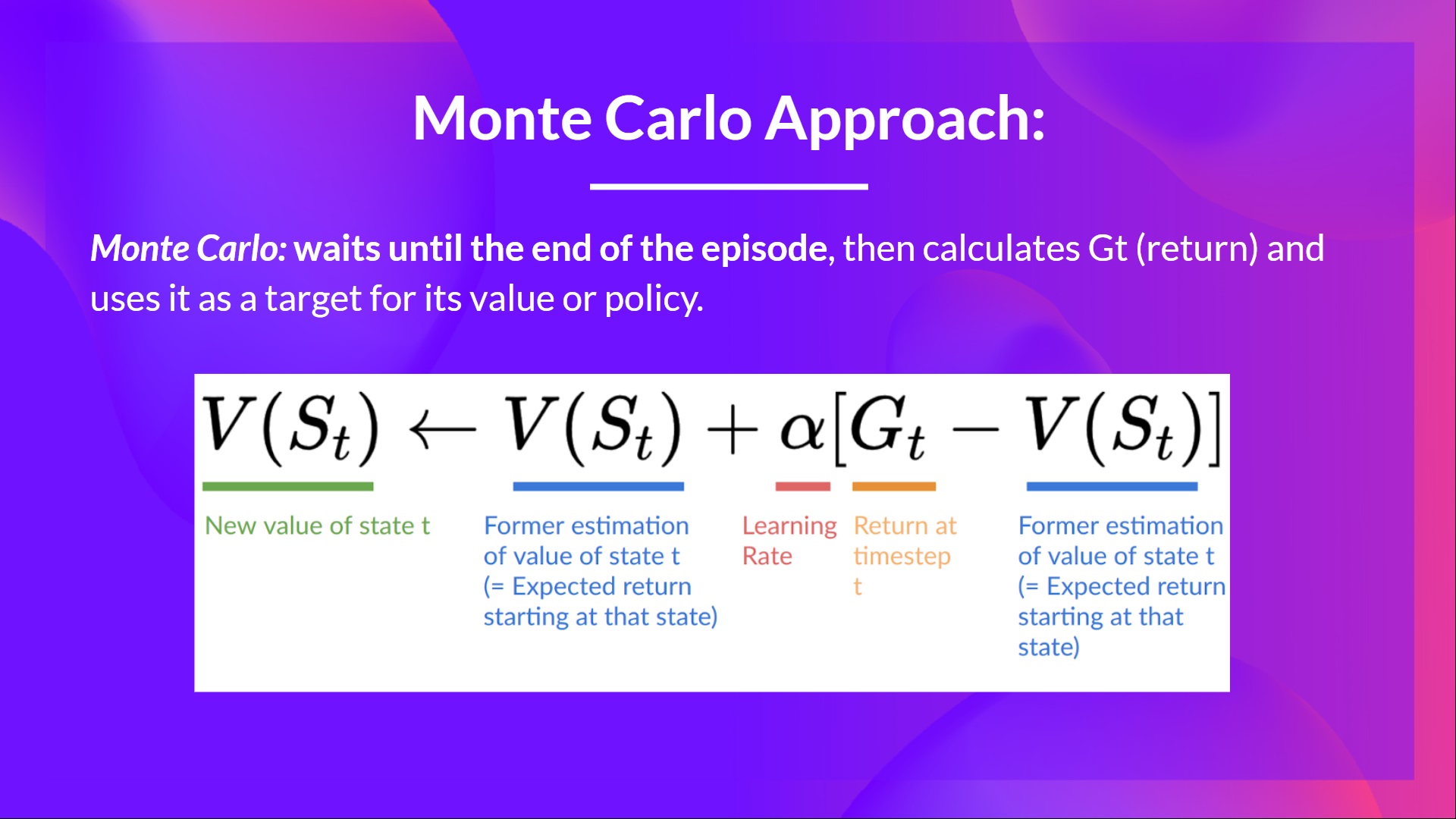

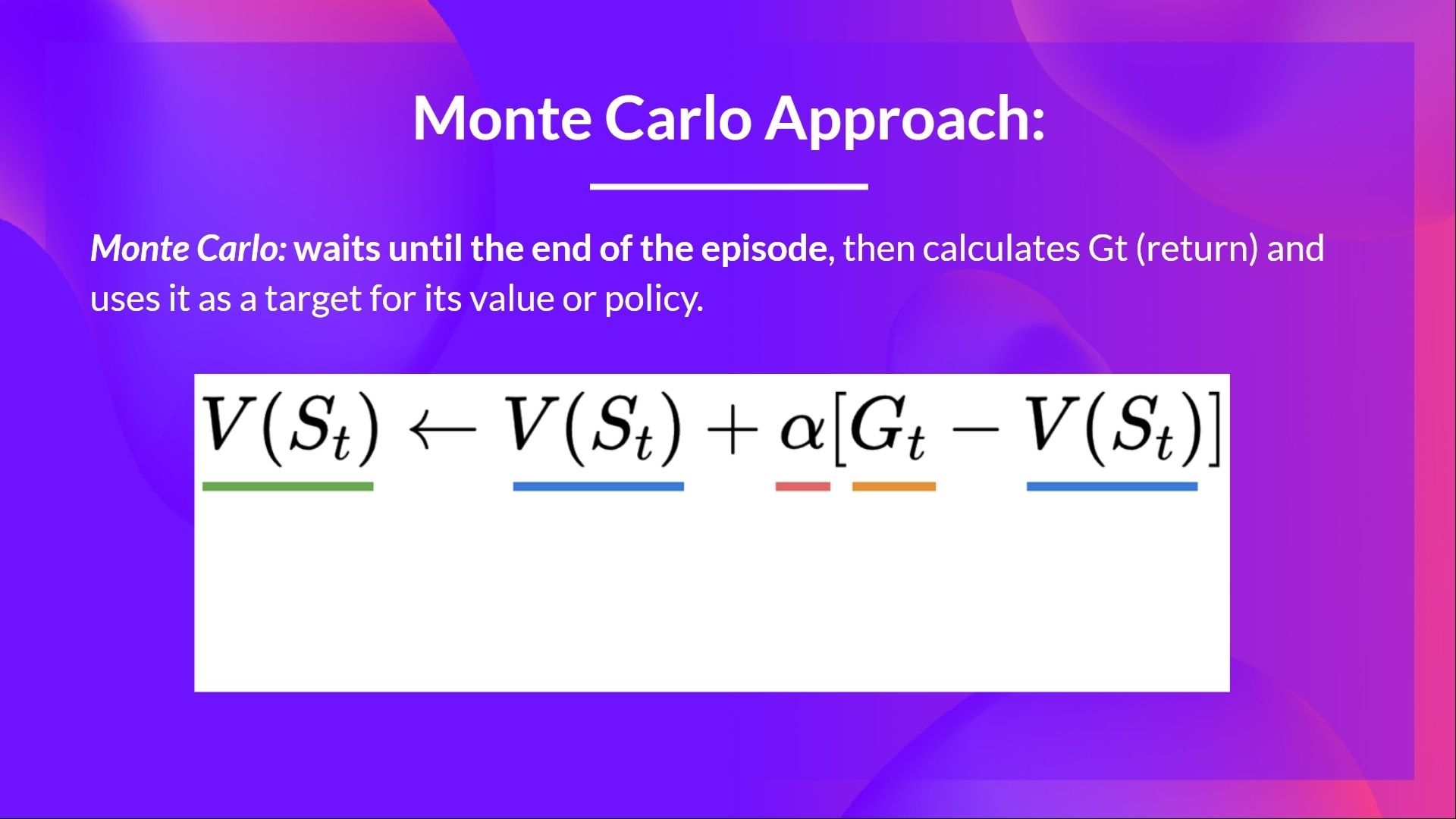

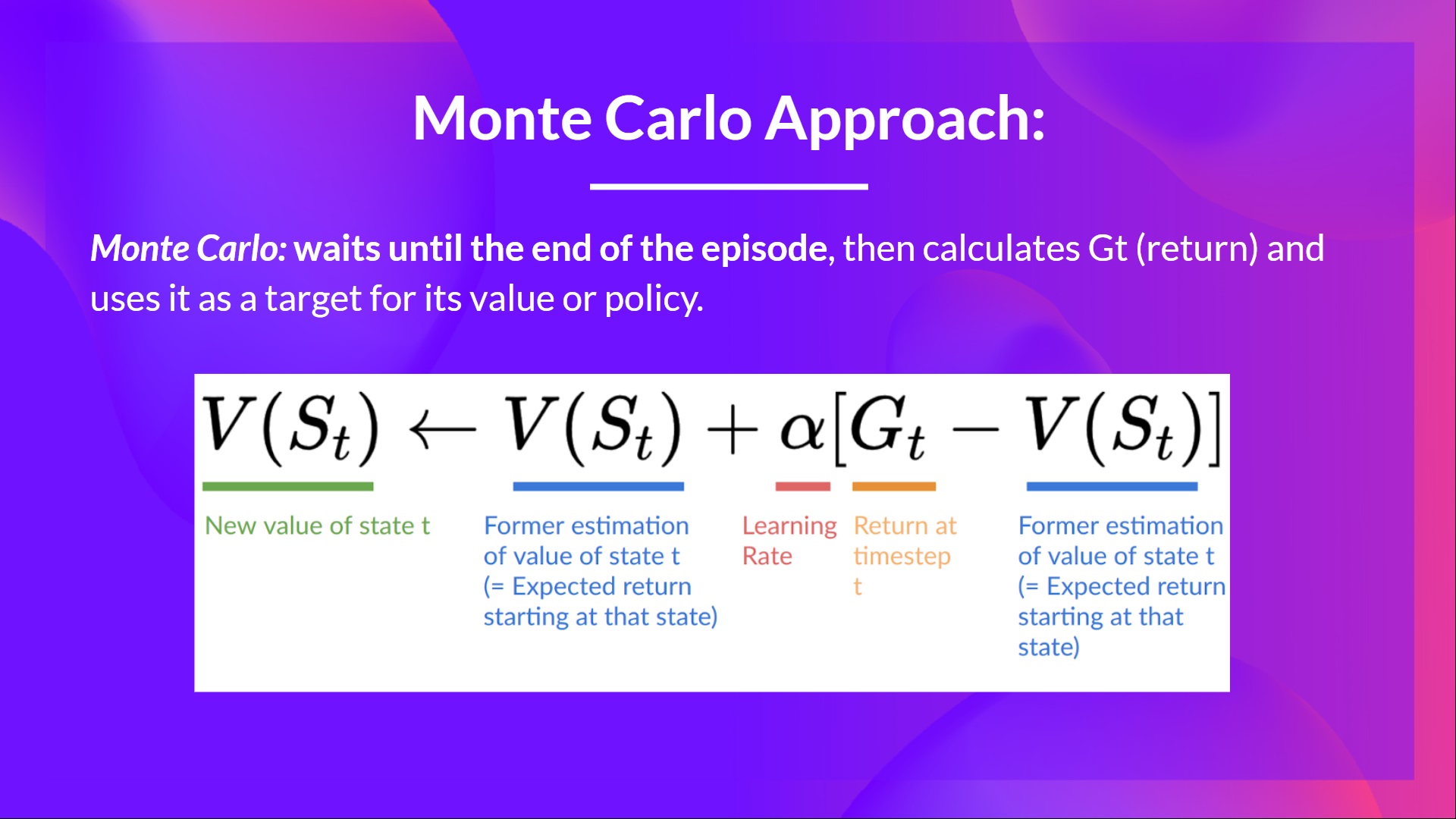

+## Monte Carlo: learning at the end of the episode [[monte-carlo]]

+

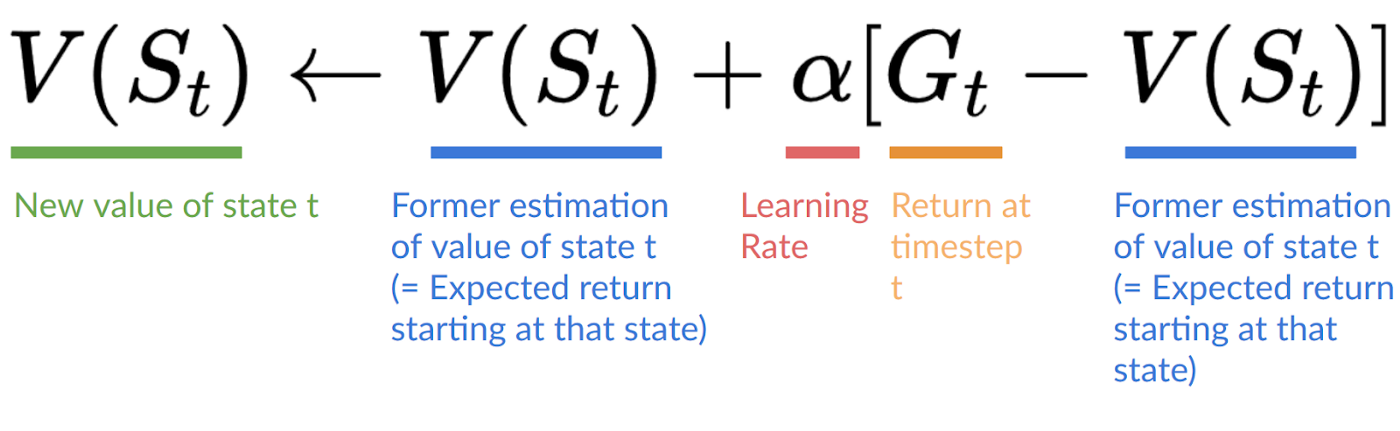

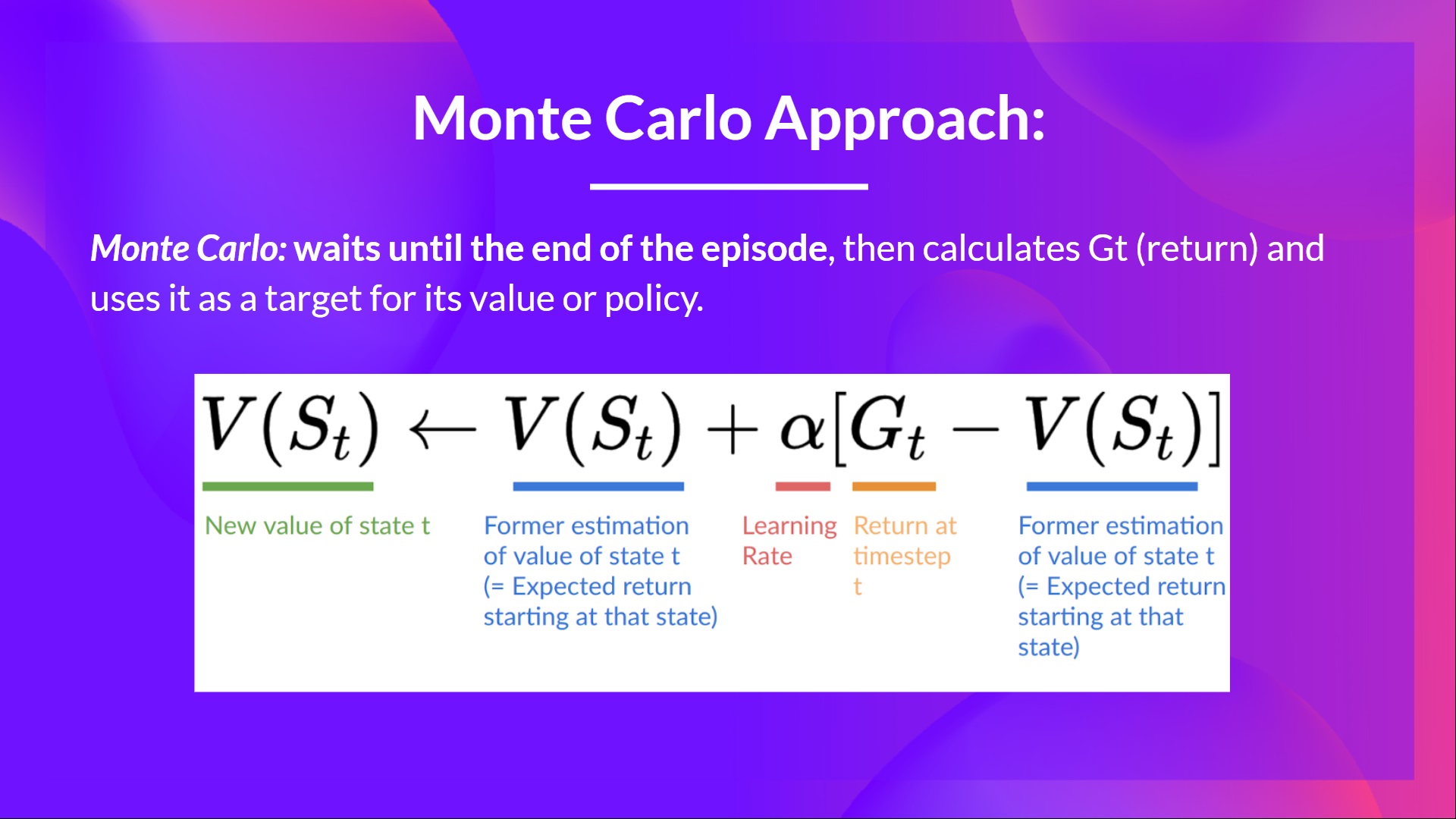

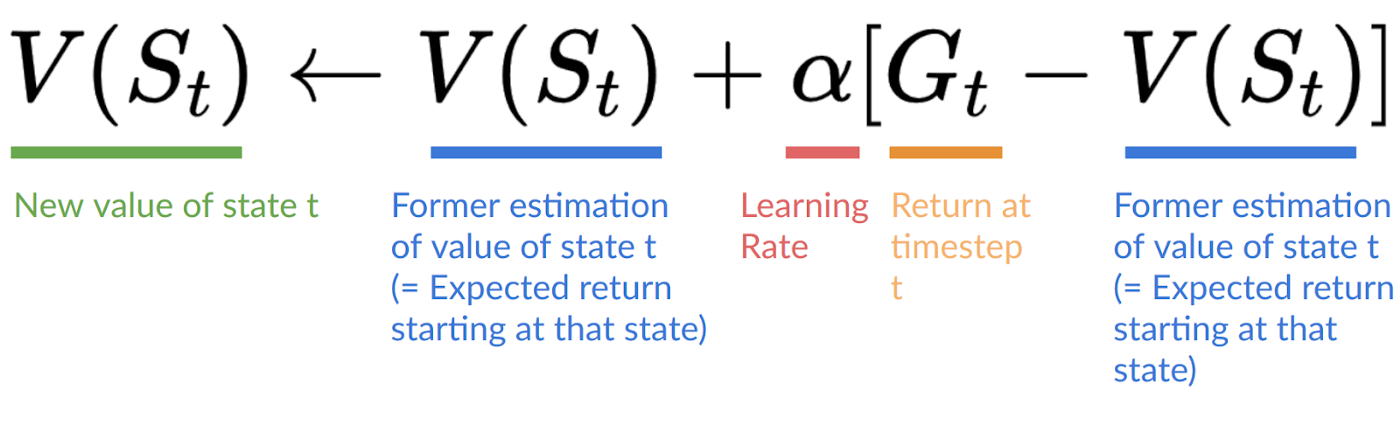

+Monte Carlo waits until the end of the episode, calculates \\(G_t\\) (return) and uses it as **a target for updating \\(V(S_t)\\).**

+

+So it requires a **complete episode of interaction before updating our value function.**

+
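Written out with a learning rate \\(\alpha\\) (called lr in the examples of this section), the Monte Carlo update moves the current estimate toward the observed return:

$$
V(S_t) \leftarrow V(S_t) + \alpha \left[ G_t - V(S_t) \right]
$$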

+

+

+Concretely, we will:

+

+- Learn about **value-based methods**.

+- Learn about the **differences between Monte Carlo and Temporal Difference Learning**.

+- Study and implement **our first RL algorithm**: Q-Learning.s

+

+This unit is **fundamental if you want to be able to work on Deep Q-Learning**: the first Deep RL algorithm that played Atari games and beat the human level on some of them (breakout, space invaders…).

+

+So let's get started! 🚀

diff --git a/units/en/unit2/mc-vs-td.mdx b/units/en/unit2/mc-vs-td.mdx

new file mode 100644

index 0000000..e78ee78

--- /dev/null

+++ b/units/en/unit2/mc-vs-td.mdx

@@ -0,0 +1,126 @@

+# Monte Carlo vs Temporal Difference Learning [[mc-vs-td]]

+

+The last thing we need to talk about before diving into Q-Learning is the two ways of learning.

+

+Remember that an RL agent **learns by interacting with its environment.** The idea is that **using the experience taken**, given the reward it gets, will **update its value or policy.**

+

+Monte Carlo and Temporal Difference Learning are two different **strategies on how to train our value function or our policy function.** Both of them **use experience to solve the RL problem.**

+

+On one hand, Monte Carlo uses **an entire episode of experience before learning.** On the other hand, Temporal Difference uses **only a step ( \\(S_t, A_t, R_{t+1}, S_{t+1}\\) ) to learn.**

+

+We'll explain both of them **using a value-based method example.**

+

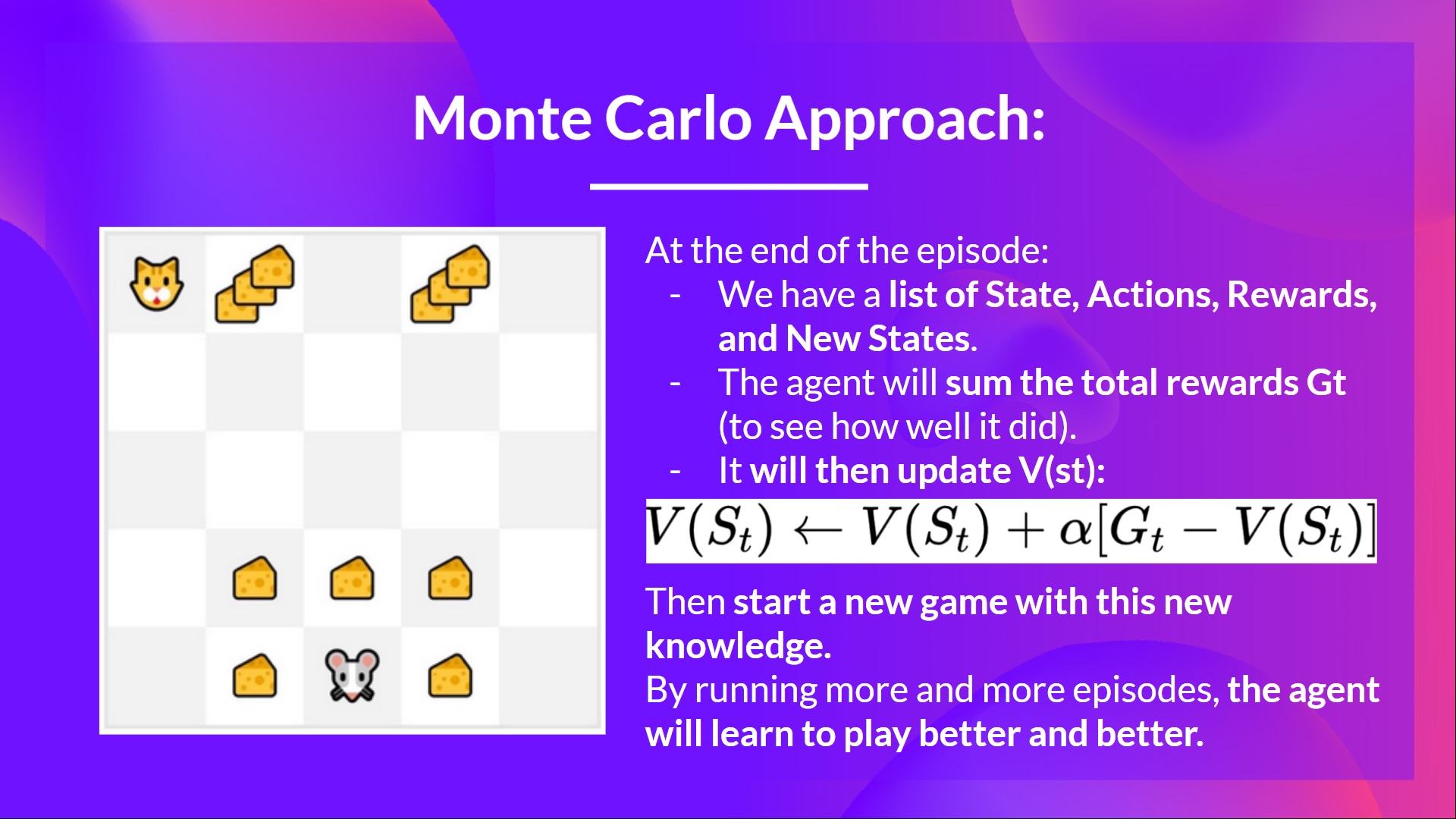

+## Monte Carlo: learning at the end of the episode [[monte-carlo]]

+

+Monte Carlo waits until the end of the episode, calculates \\(G_t\\) (return) and uses it as **a target for updating \\(V(S_t)\\).**

+

+So it requires a **complete entire episode of interaction before updating our value function.**

+

+  +

+

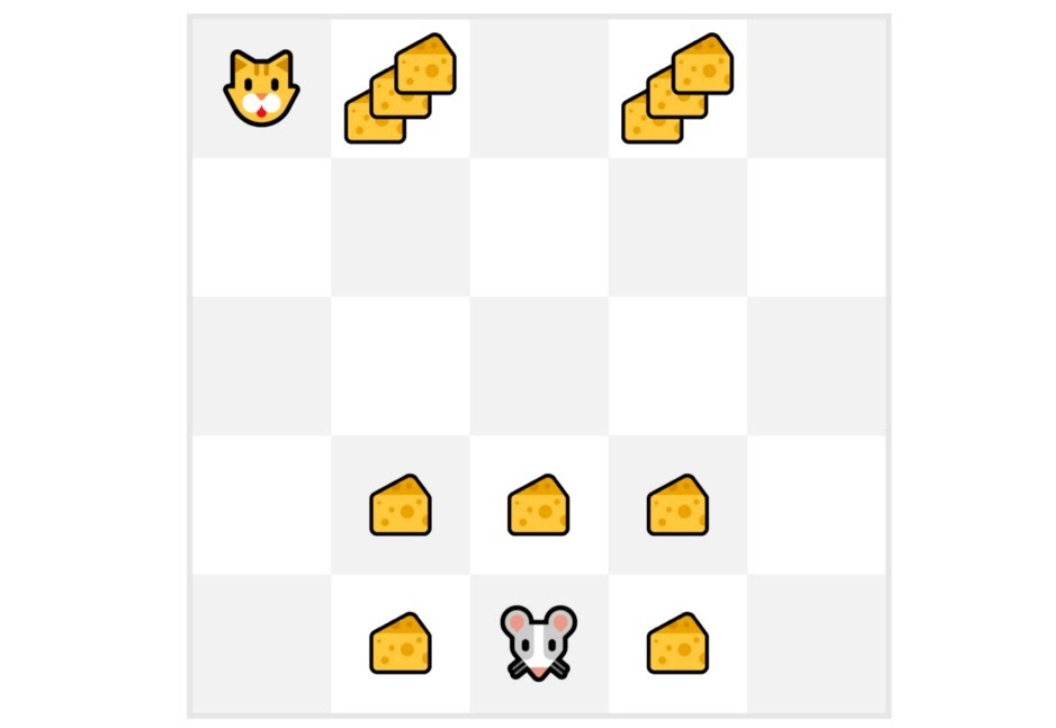

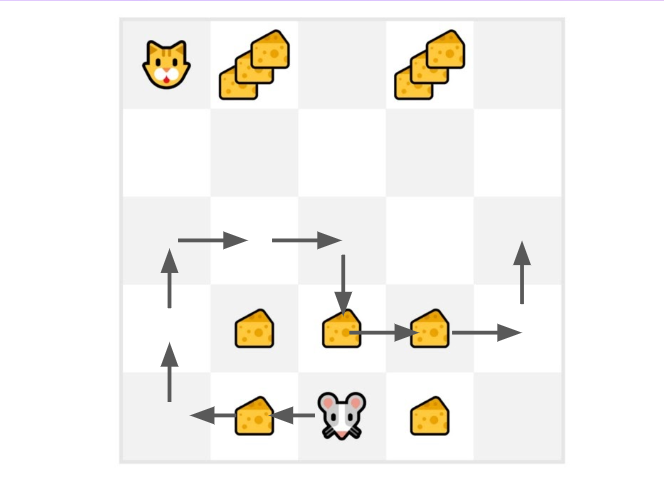

+If we take an example:

+


+- We always start the episode **at the same starting point.**

+- **The agent takes actions using the policy**. For instance, using an Epsilon Greedy Strategy, a policy that alternates between exploration (random actions) and exploitation.

+- We get **the reward and the next state.**

+- We terminate the episode if the cat eats the mouse or if the mouse moves > 10 steps.

+

+- At the end of the episode, **we have a list of states, actions, rewards, and next states.**

+- **The agent will sum the total rewards \\(G_t\\)** (to see how well it did).

+- It will then **update \\(V(S_t)\\) based on the formula** \\(V(S_t) \leftarrow V(S_t) + lr * [G_t - V(S_t)]\\).

+


+- Then **start a new game with this new knowledge**

+

+By running more and more episodes, **the agent will learn to play better and better.**

+

+

+

+- Then **start a new game with this new knowledge**

+

+By running more and more episodes, **the agent will learn to play better and better.**

+

+  +

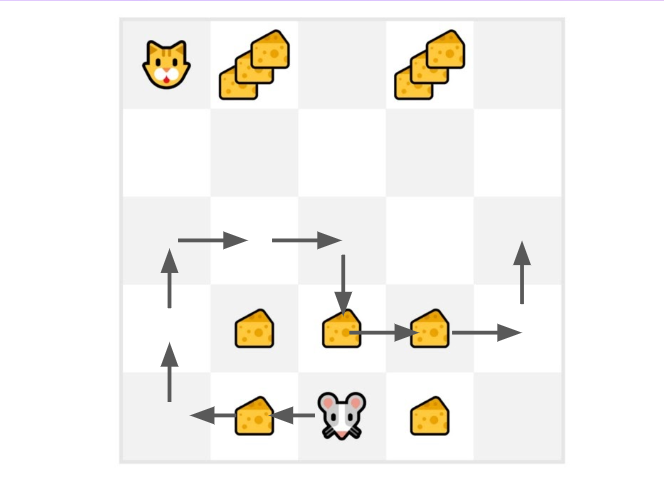

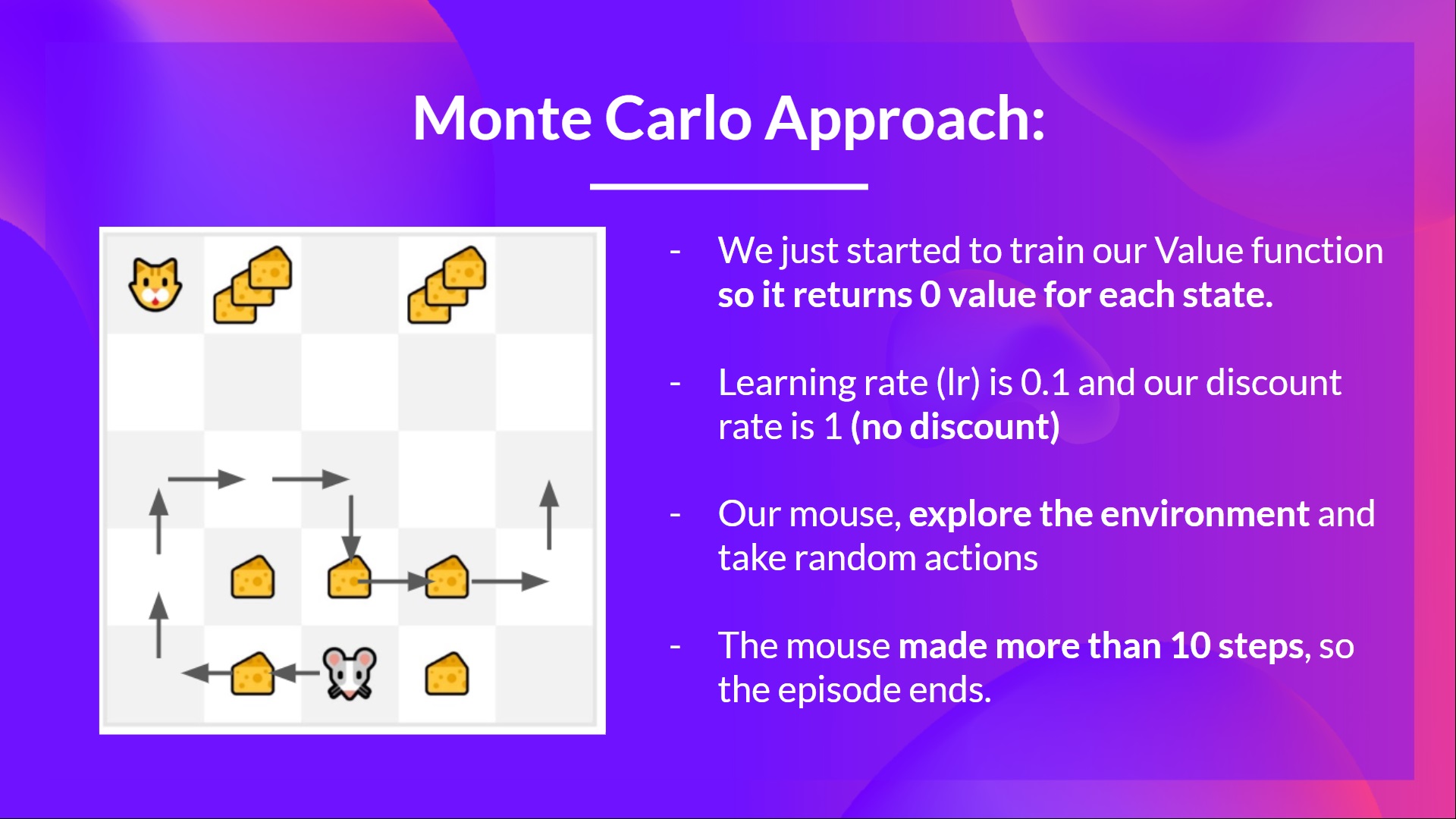

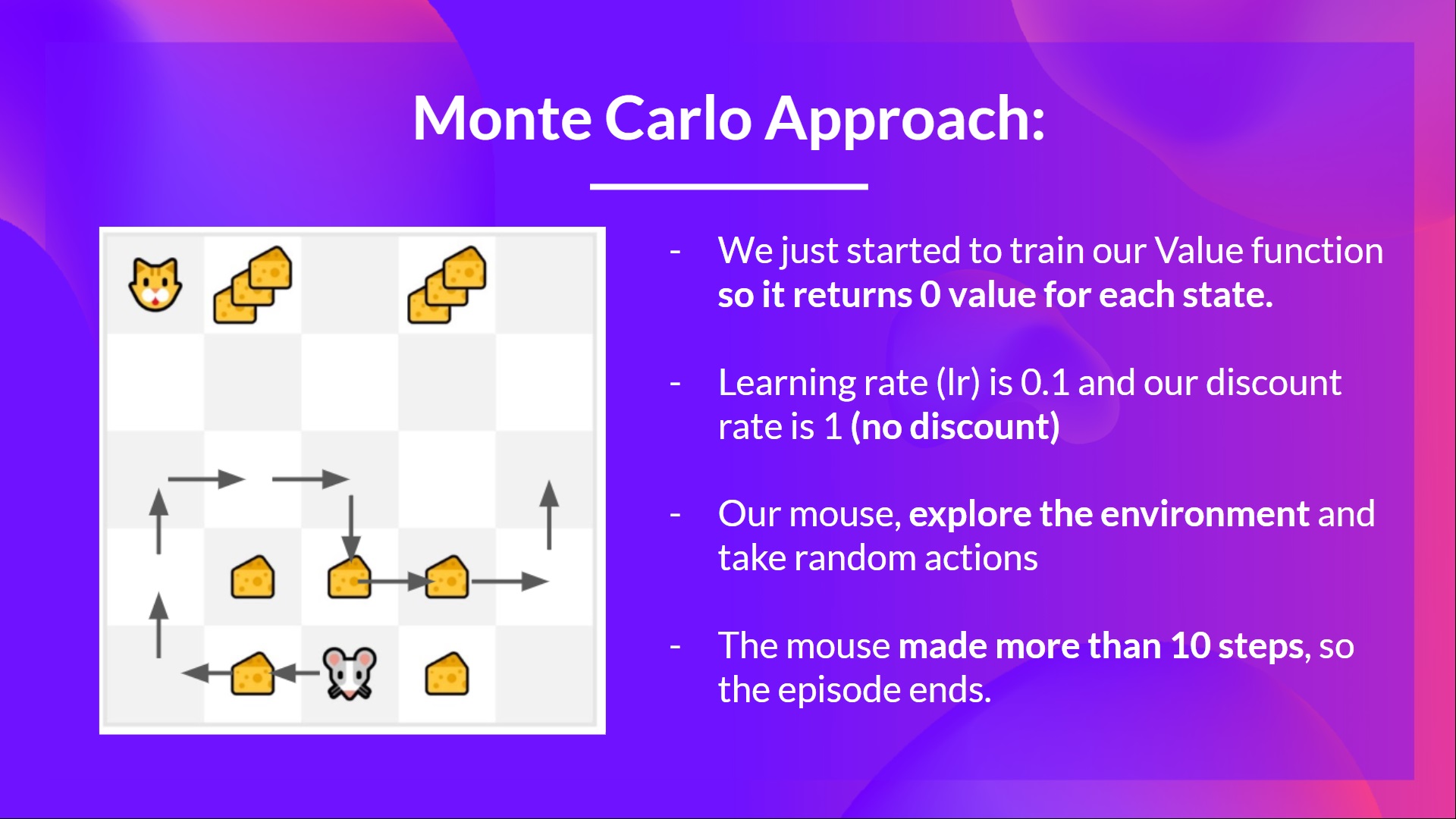

+For instance, if we train a state-value function using Monte Carlo:

+

+- We just started to train our Value function, **so it returns 0 value for each state**

+- Our learning rate (lr) is 0.1 and our discount rate is 1 (= no discount)

+- Our mouse **explores the environment and takes random actions**

+


+- The mouse made more than 10 steps, so the episode ends.

+

+

+

+

+- The mouse made more than 10 steps, so the episode ends .

+

+  +

+

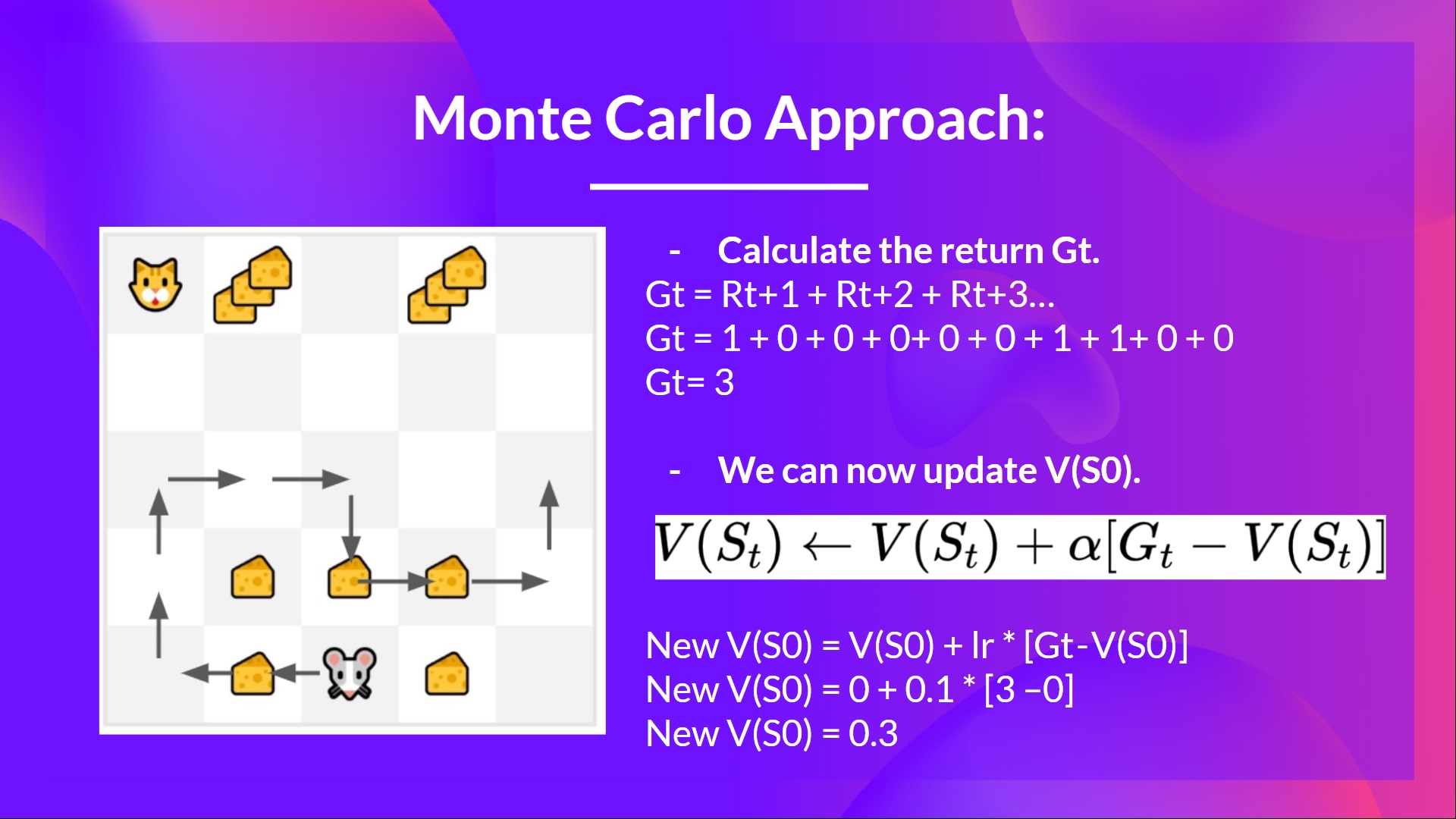

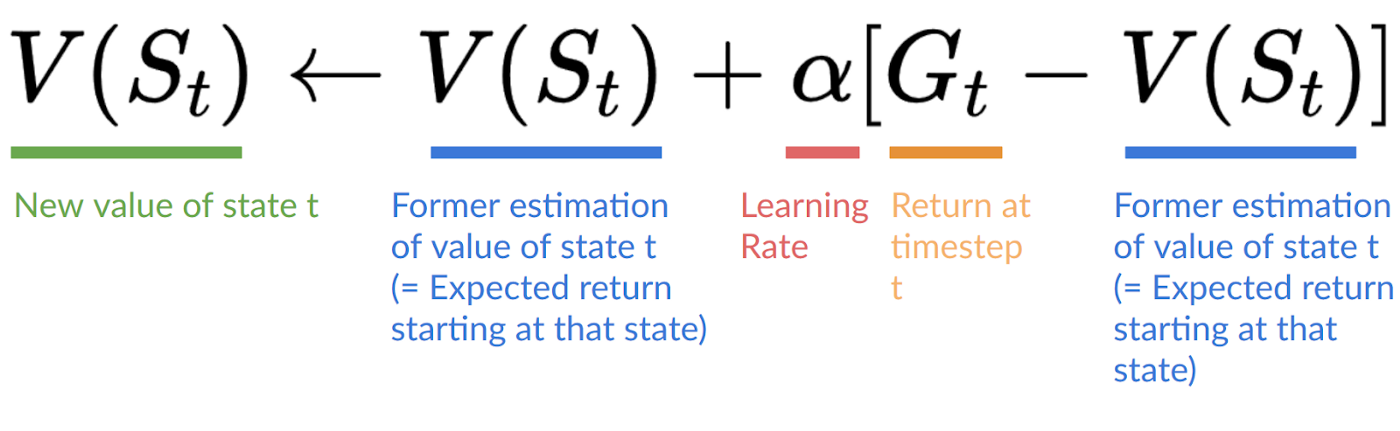

+- We have a list of states, actions, rewards, and next states; **we need to calculate the return \\(G_t\\)**

+- \\(G_t = R_{t+1} + R_{t+2} + R_{t+3} + \dots\\) (for simplicity, we don’t discount the rewards).

+- \\(G_t = 1 + 0 + 0 + 0 + 0 + 0 + 1 + 1 + 0 + 0\\)

+- \\(G_t = 3\\)

+- We can now update \\(V(S_0)\\):

+


+- New \\(V(S_0) = V(S_0) + lr * [G_t - V(S_0)]\\)

+- New \\(V(S_0) = 0 + 0.1 * [3 - 0]\\)

+- New \\(V(S_0) = 0.3\\)

+
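The same Monte Carlo arithmetic as a minimal Python sketch. The reward list is the one from the example above; the function names are our own, chosen for illustration.

```python
def discounted_return(rewards, gamma=1.0):
    """G_t = R_{t+1} + gamma * R_{t+2} + gamma^2 * R_{t+3} + ..."""
    g = 0.0
    # Accumulate from the last reward backwards: G = r + gamma * G.
    for r in reversed(rewards):
        g = r + gamma * g
    return g


def monte_carlo_update(value, g, lr=0.1):
    """Move the current estimate V(S_t) toward the observed return G_t."""
    return value + lr * (g - value)


episode_rewards = [1, 0, 0, 0, 0, 0, 1, 1, 0, 0]
g = discounted_return(episode_rewards)  # gamma = 1: no discount
v_s0 = monte_carlo_update(0.0, g)       # V(S_0) starts at 0
print(g, v_s0)
```

Note that the update can only run once the whole reward list exists, which is exactly why Monte Carlo has to wait for the end of the episode.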

+

+

+

+- New \\(V(S_0) = V(S_0) + lr * [G_t — V(S_0)]\\)

+- New \\(V(S_0) = 0 + 0.1 * [3 – 0]\\)

+- New \\(V(S_0) = 0.3\\)

+

+

+  +

+

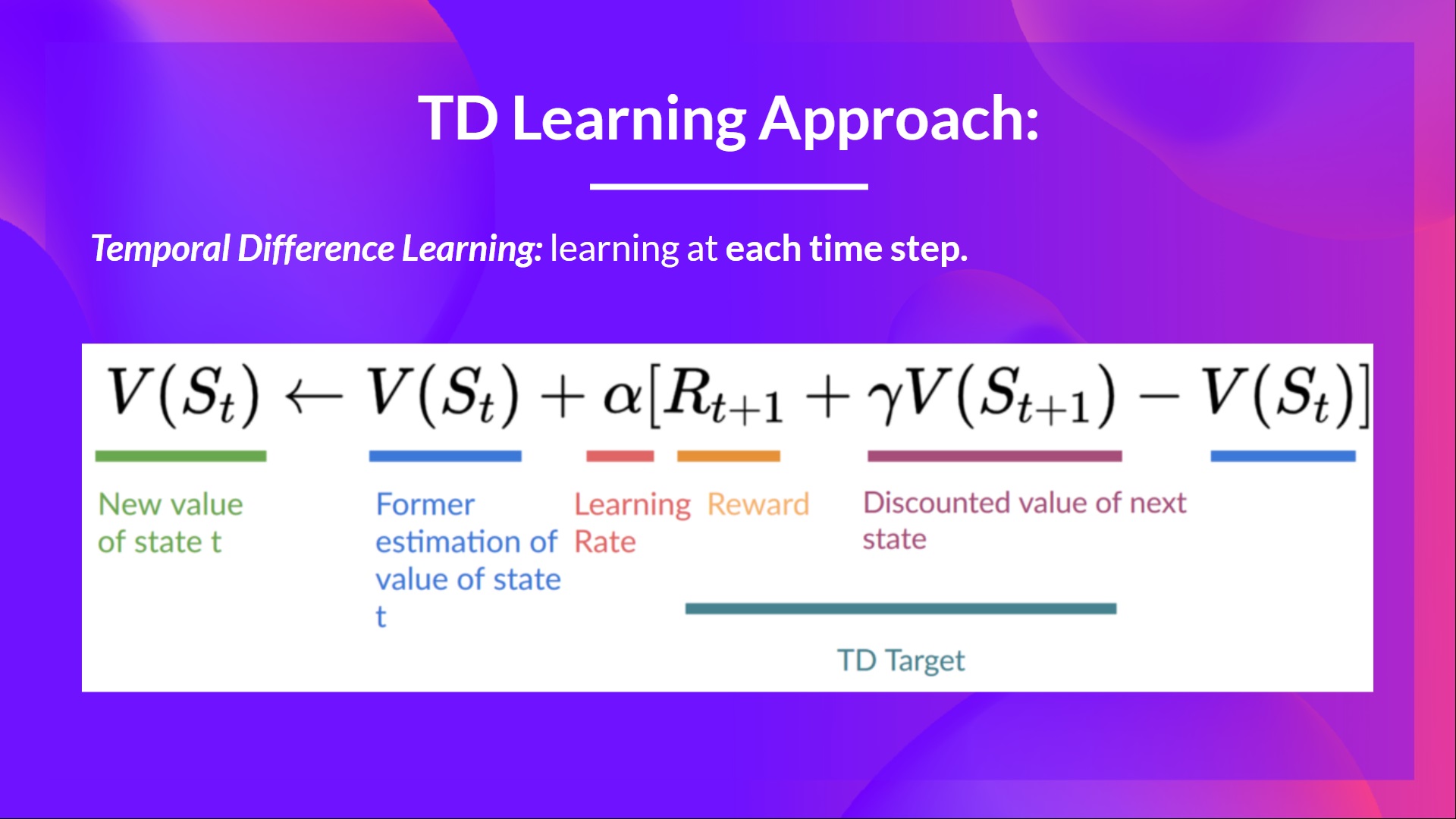

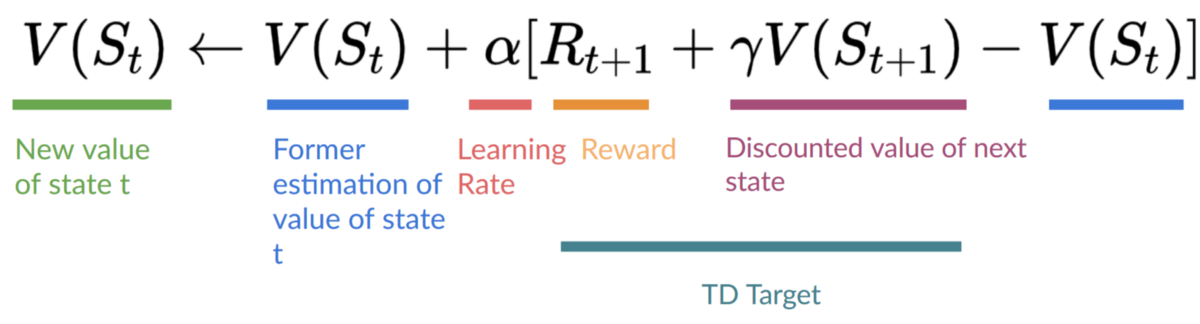

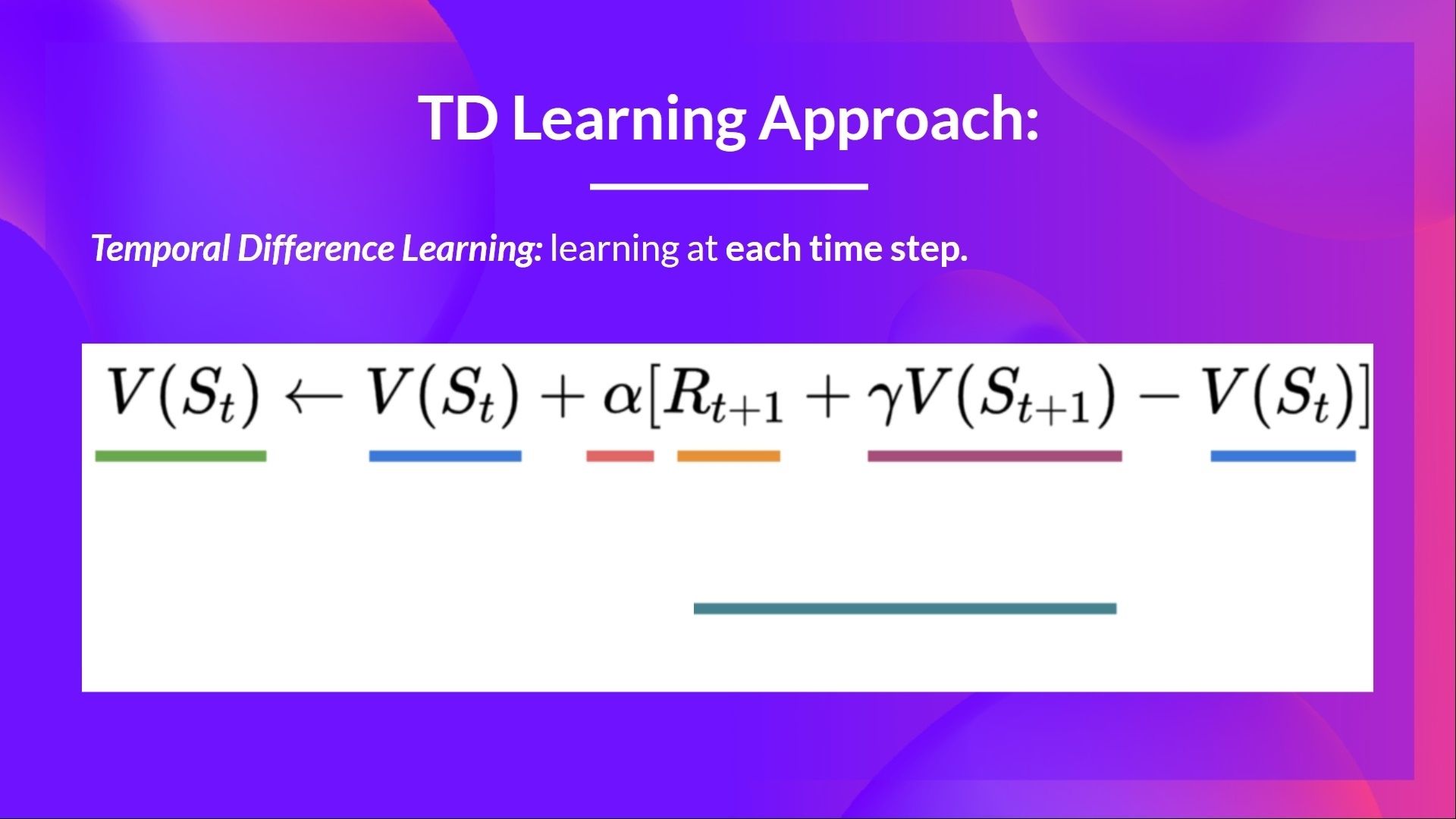

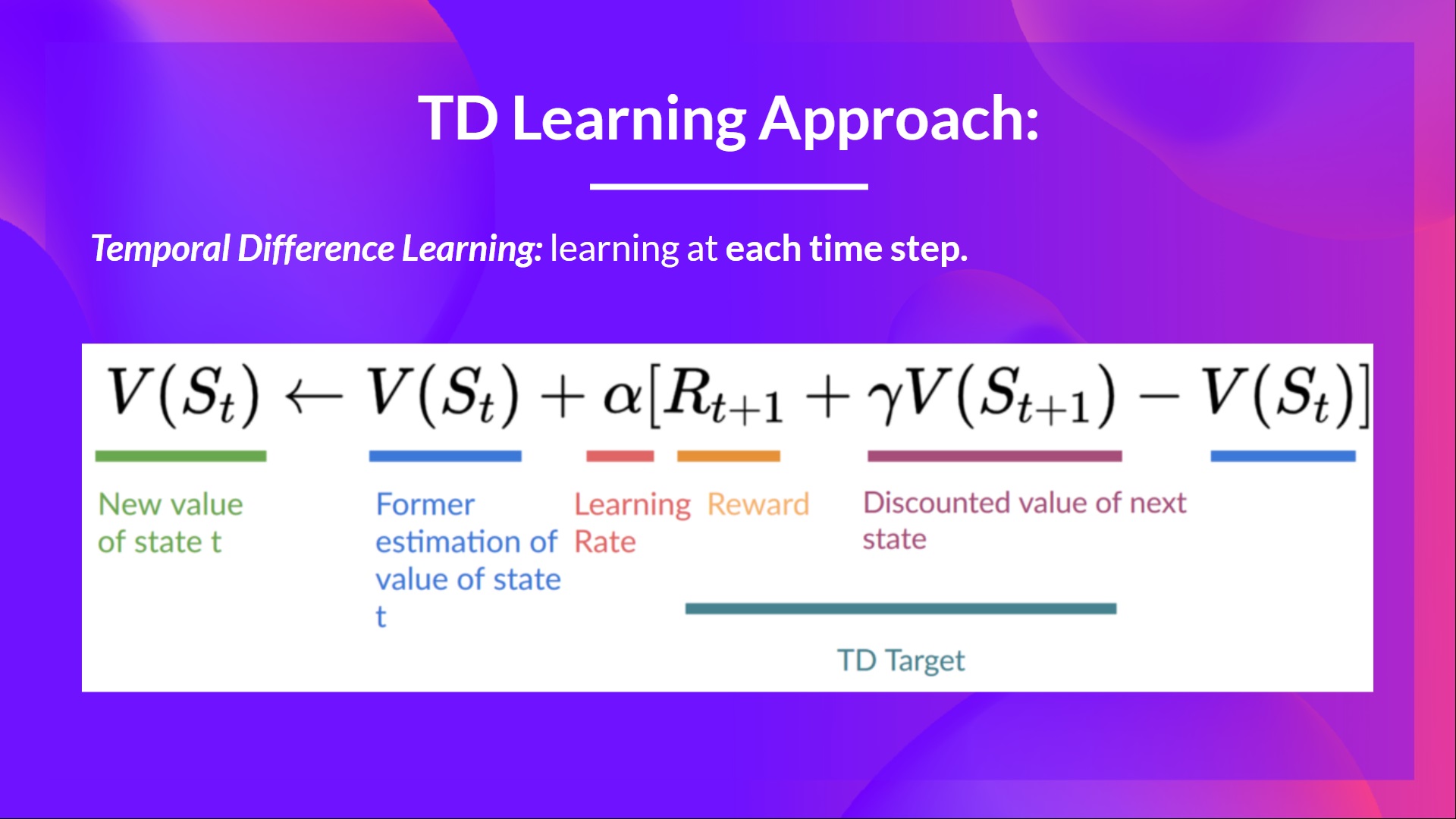

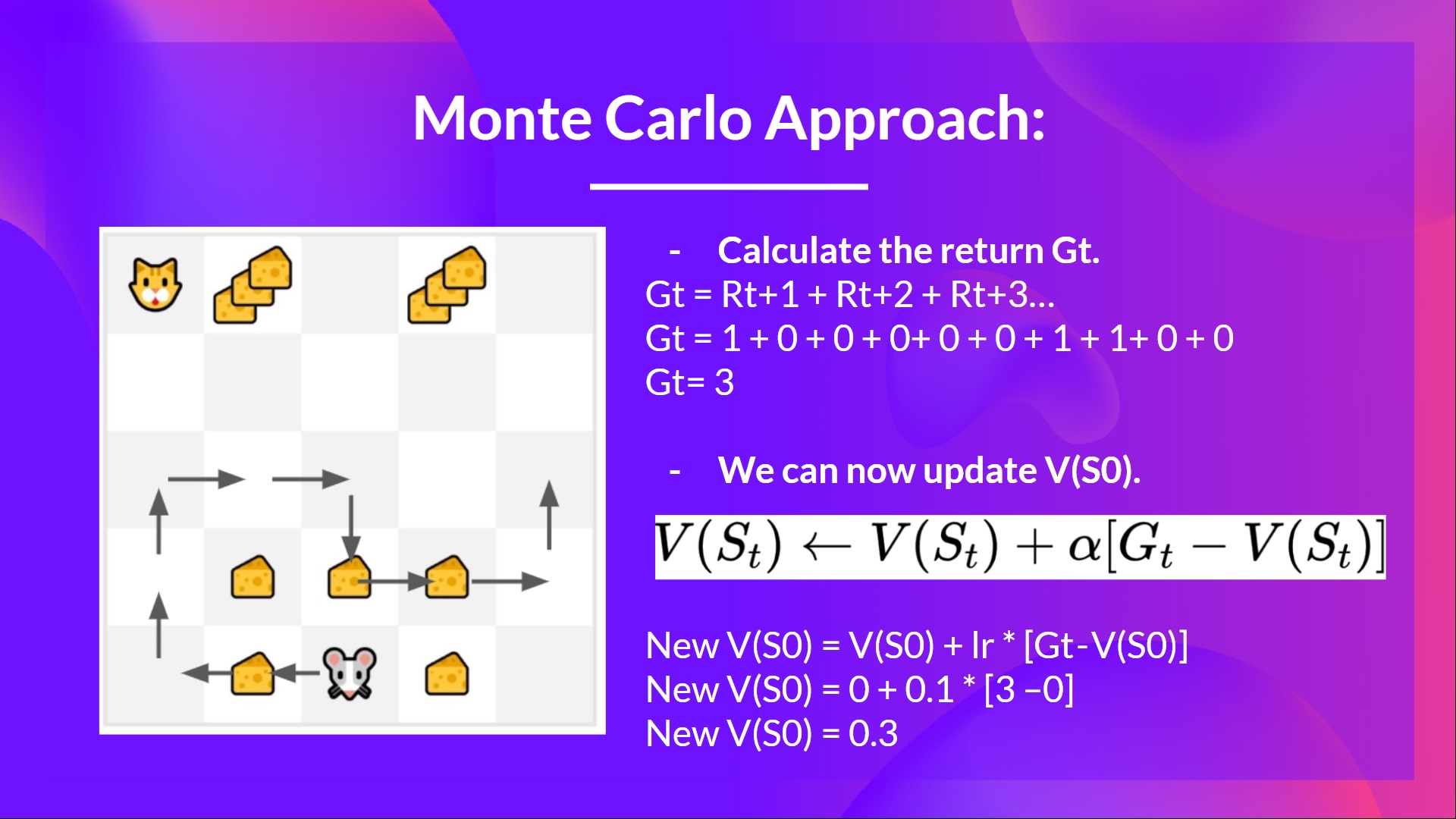

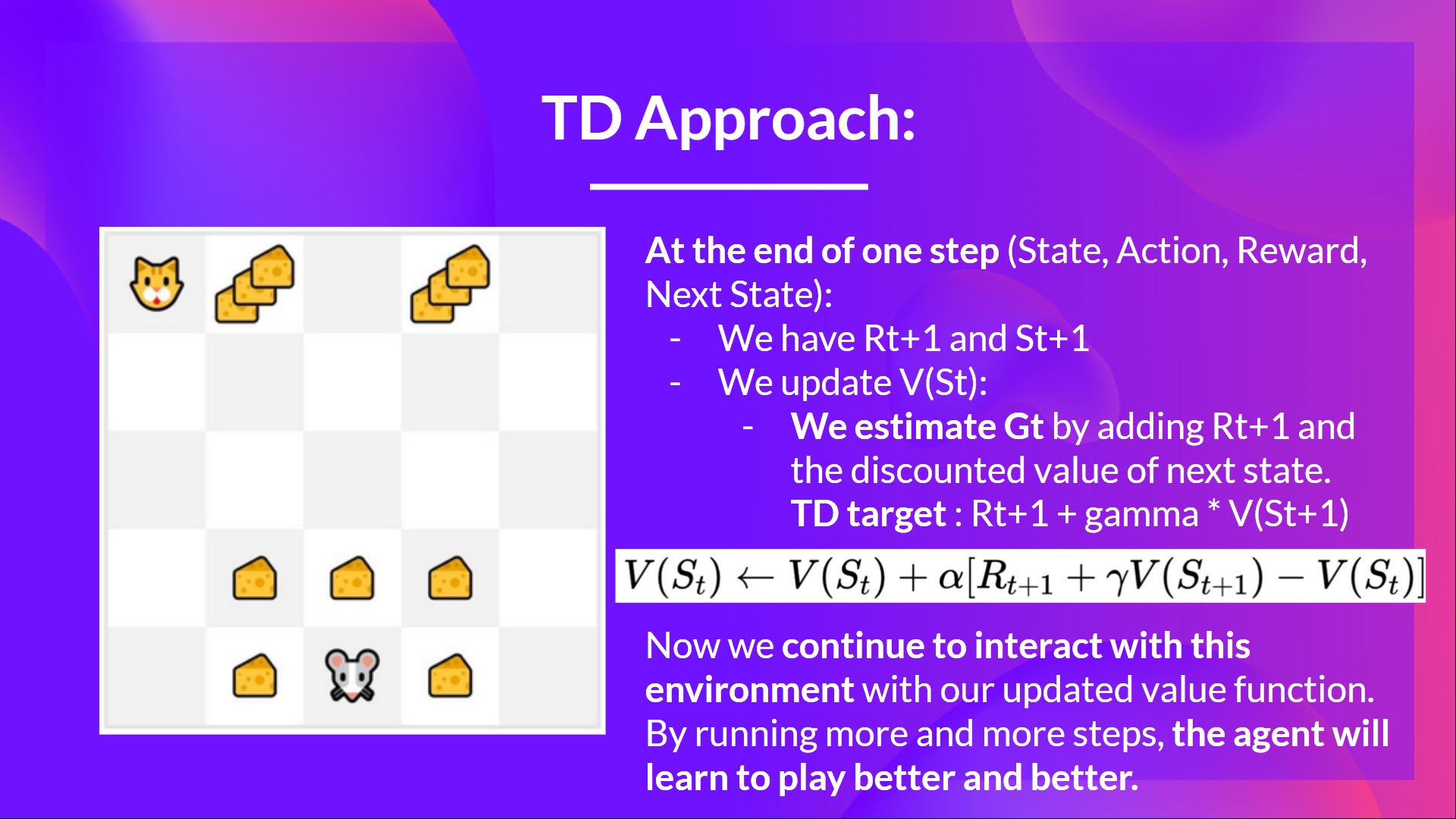

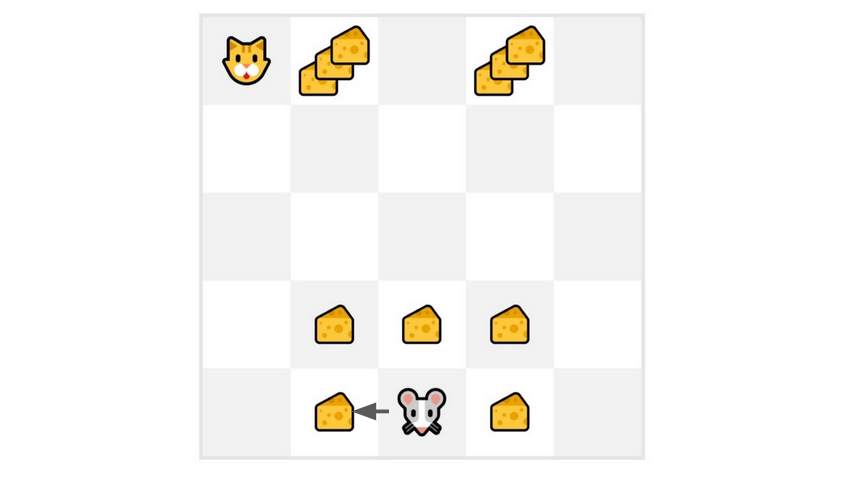

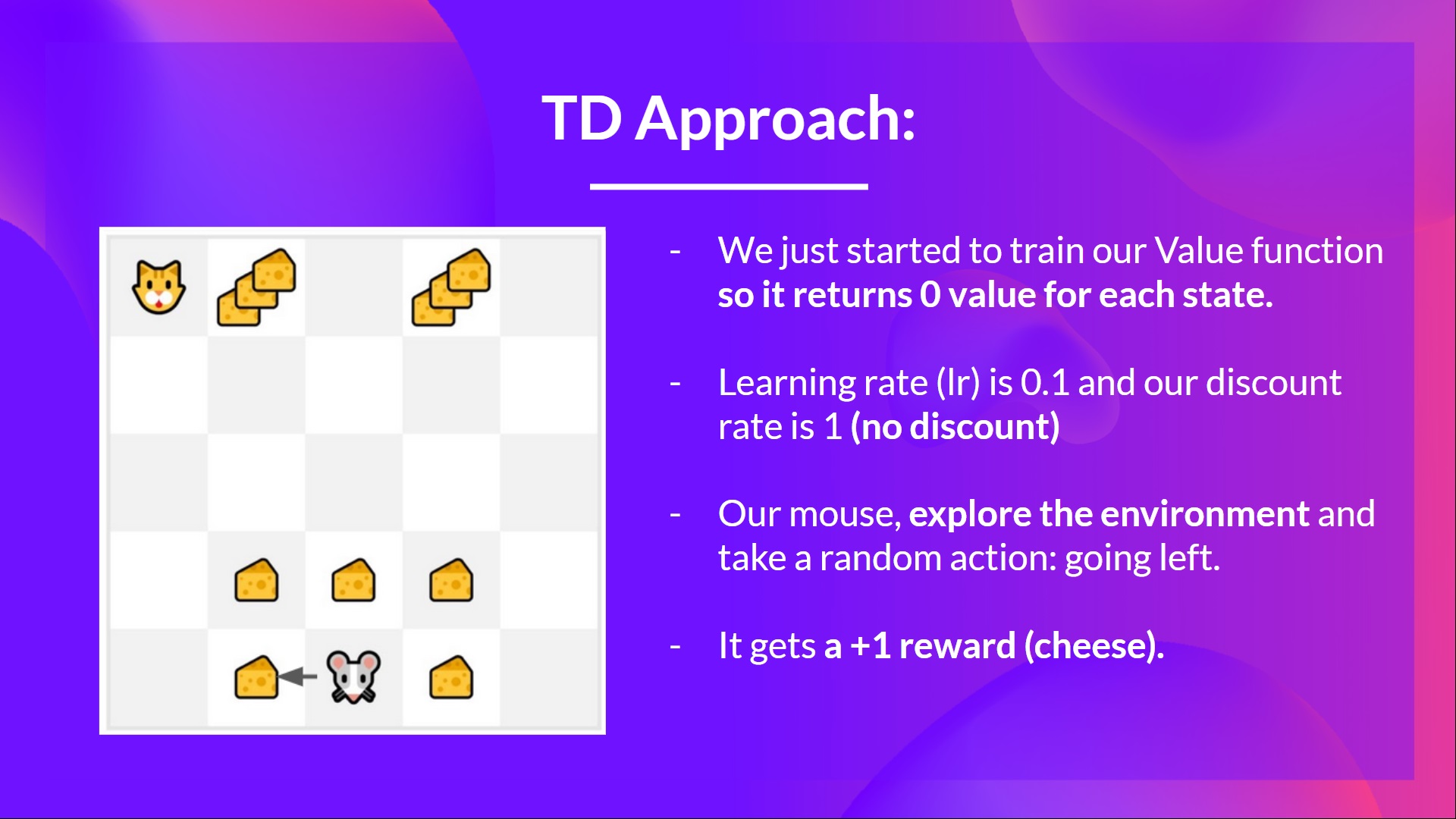

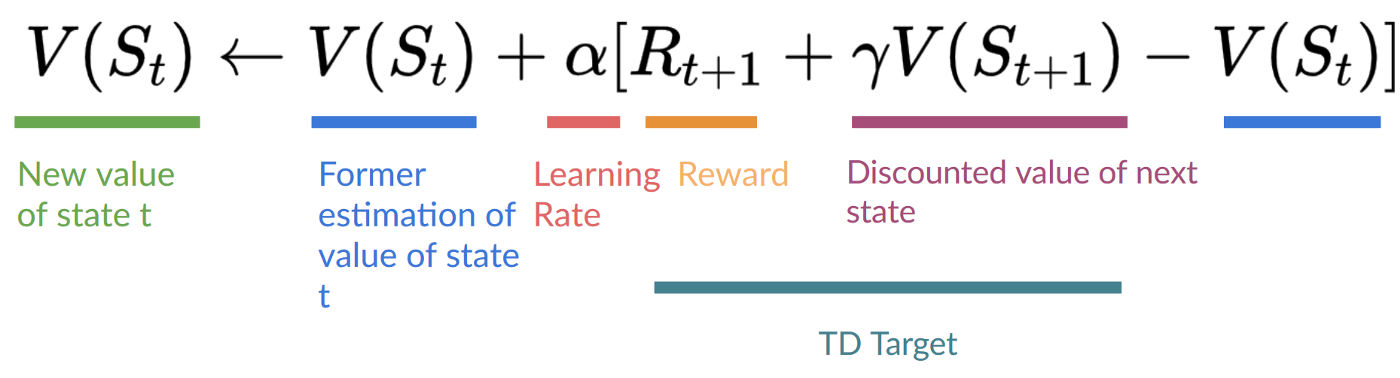

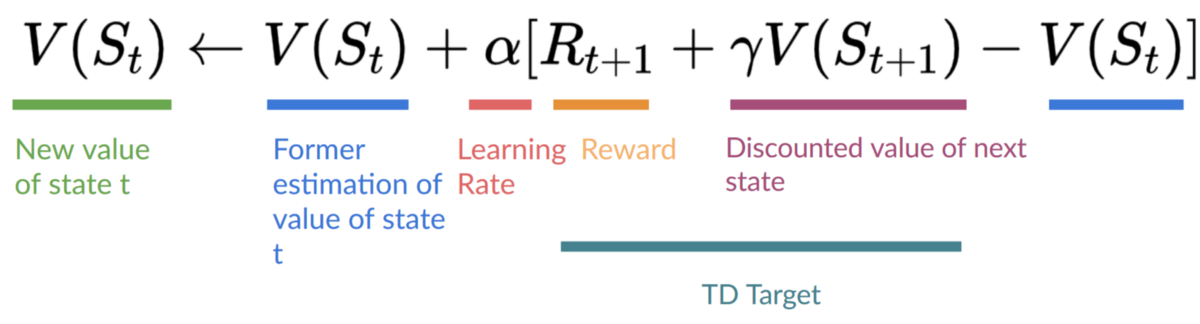

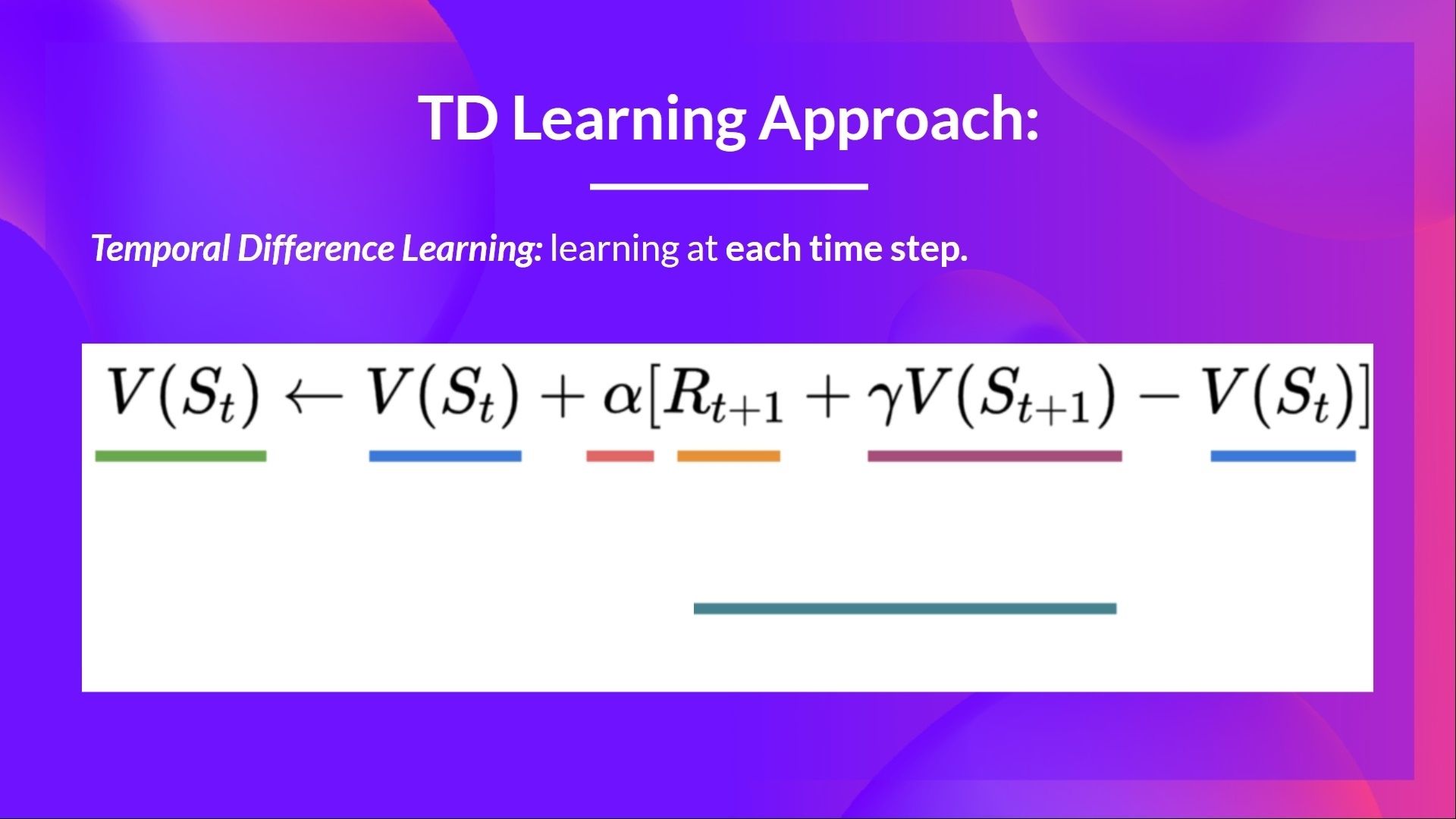

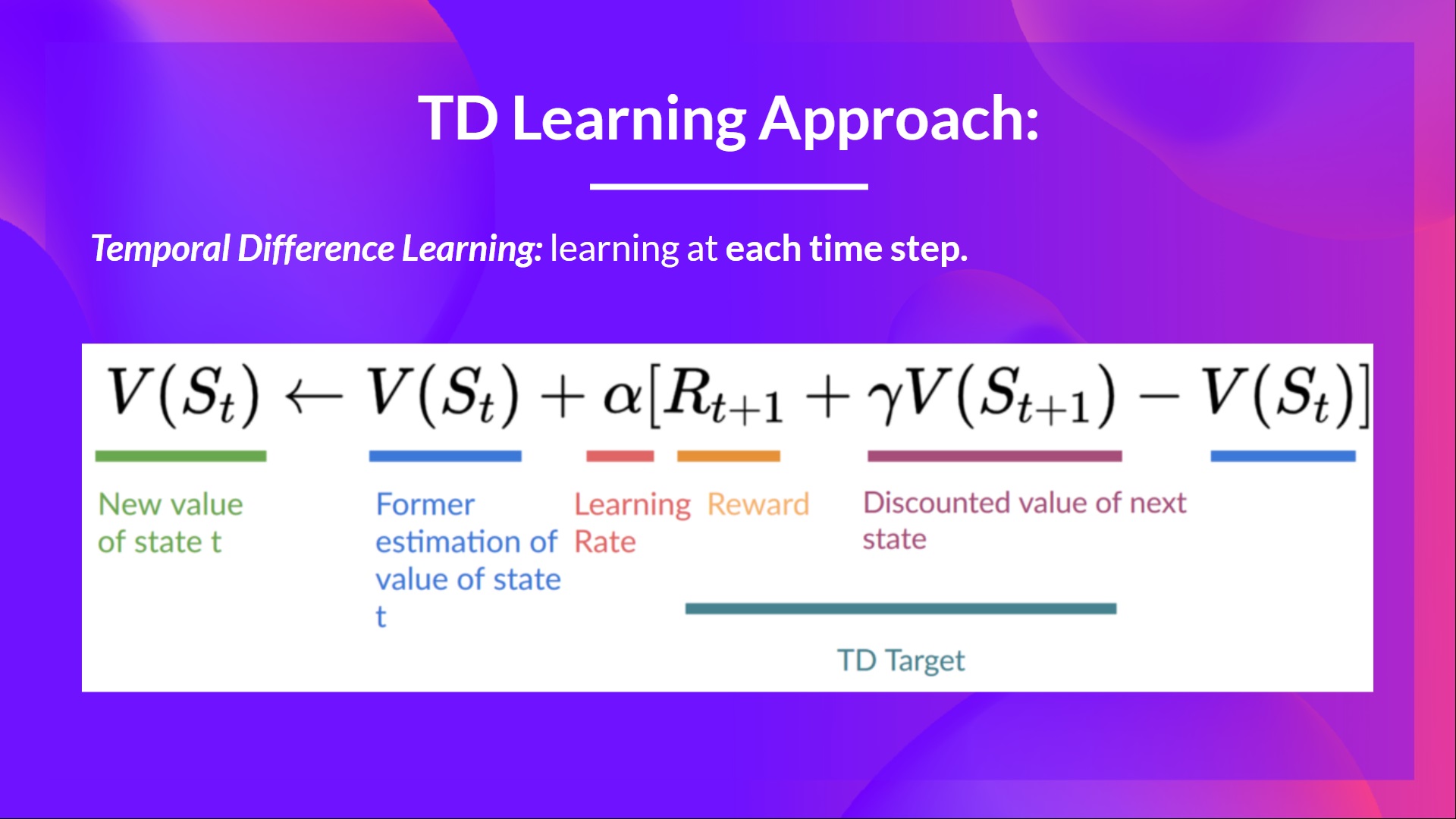

+## Temporal Difference Learning: learning at each step [[td-learning]]

+

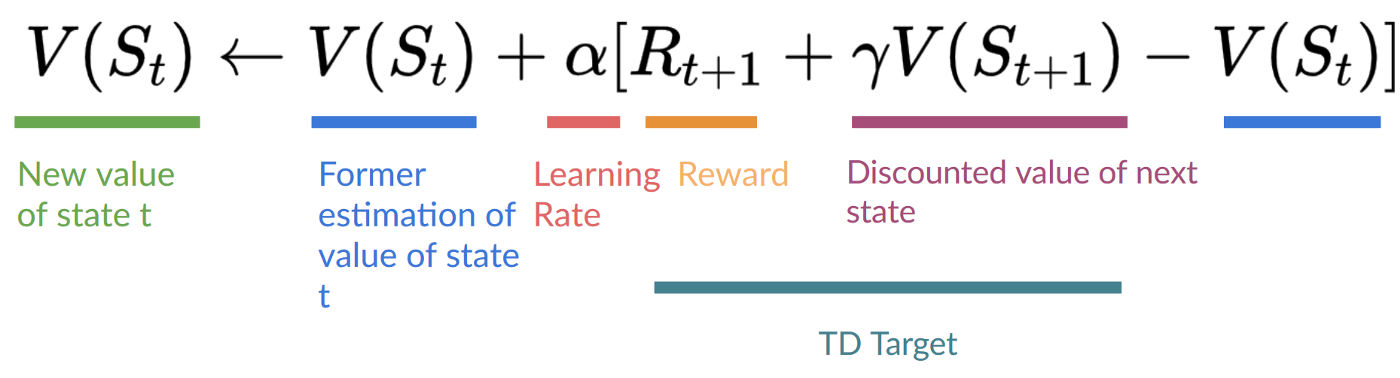

+**Temporal Difference, on the other hand, waits for only one interaction (one step) \\(S_{t+1}\\)** to form a TD target and update \\(V(S_t)\\) using \\(R_{t+1}\\) and \\(\gamma * V(S_{t+1})\\).

+

+The idea with **TD is to update the \\(V(S_t)\\) at each step.**

+

+But because we didn't play during an entire episode, we don't have \\(G_t\\) (the return). Instead, **we estimate \\(G_t\\) by adding \\(R_{t+1}\\) and the discounted value of the next state.**

+

+This is called bootstrapping. It's called this **because TD bases its update, in part, on an existing estimate \\(V(S_{t+1})\\) and not on a complete sample \\(G_t\\).**

+
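In the same notation as the Monte Carlo update, the TD(0) update replaces \\(G_t\\) with the TD target \\(R_{t+1} + \gamma V(S_{t+1})\\):

$$
V(S_t) \leftarrow V(S_t) + \alpha \left[ R_{t+1} + \gamma V(S_{t+1}) - V(S_t) \right]
$$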


+This method is called TD(0) or **one-step TD (update the value function after any individual step).**

+

+

+

+

+This method is called TD(0) or **one-step TD (update the value function after any individual step).**

+

+  +

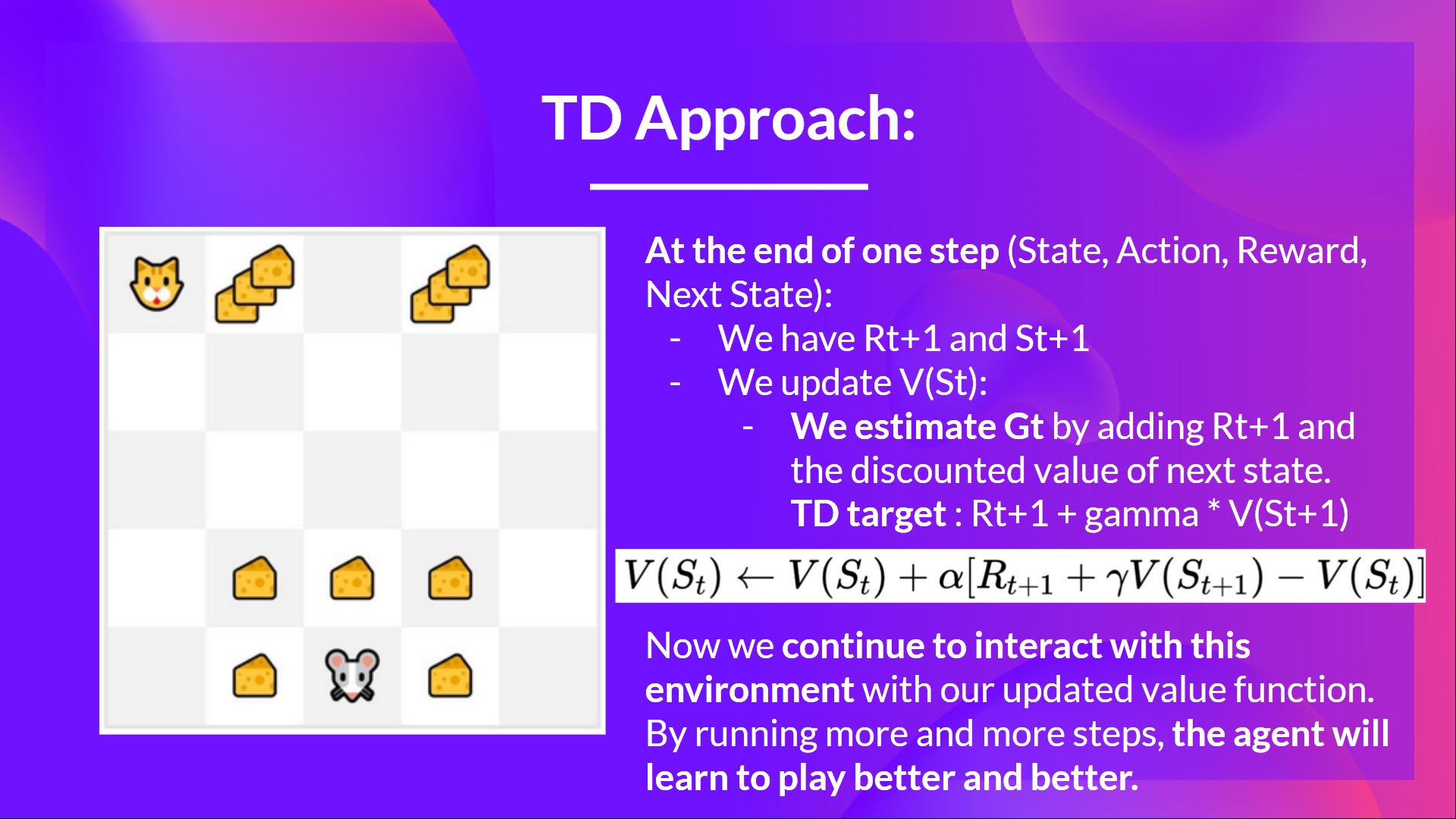

+If we take the same example:

+

+

+

+If we take the same example,

+

+  +

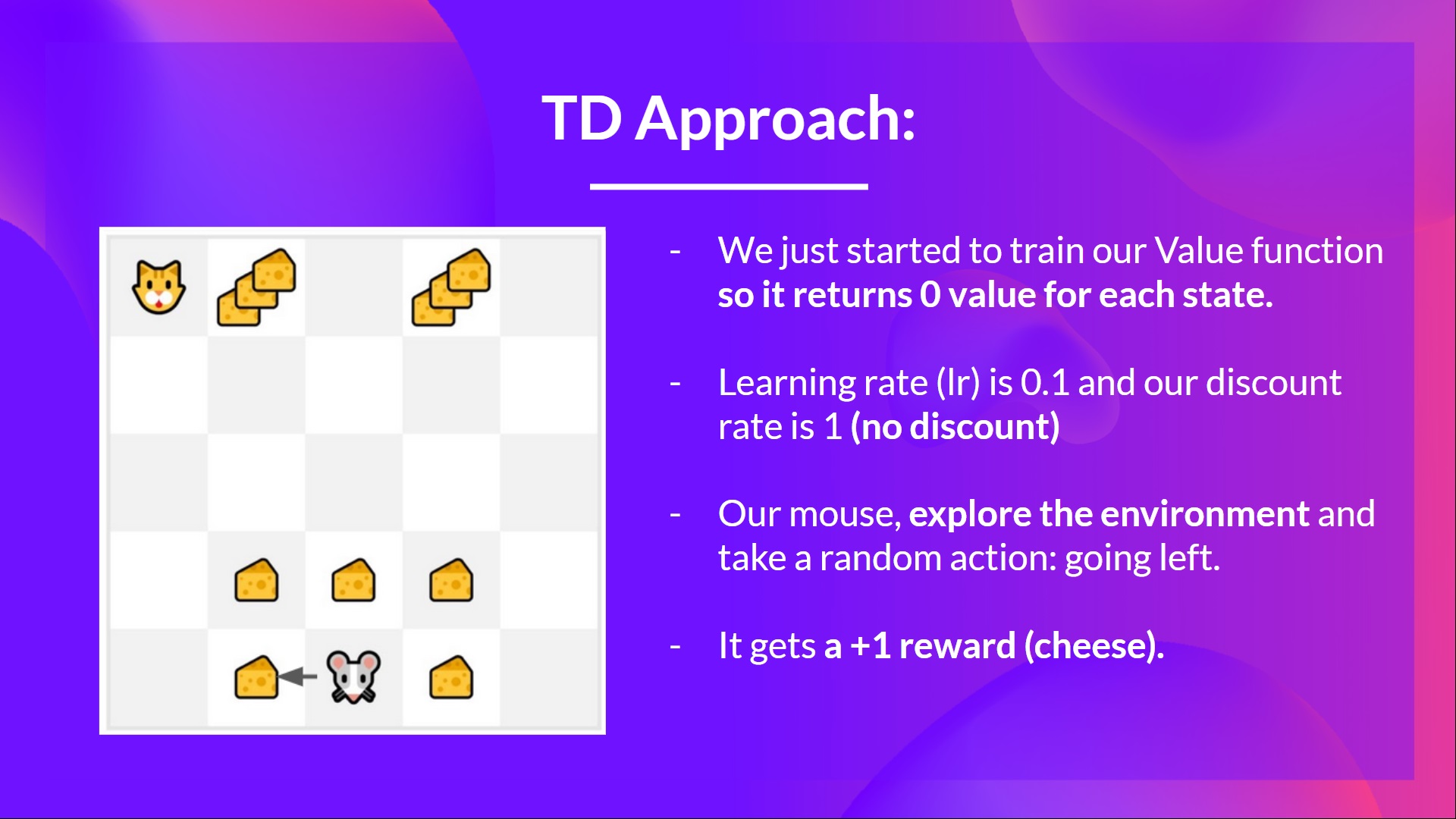

+- We just started to train our Value function, so it returns 0 value for each state.

+- Our learning rate (lr) is 0.1, and our discount rate is 1 (no discount).

+- Our mouse explores the environment and takes a random action: **going to the left**

+- It gets a reward \\(R_{t+1} = 1\\) since **it eats a piece of cheese**

+

+

+

+- We just started to train our Value function, so it returns 0 value for each state.

+- Our learning rate (lr) is 0.1, and our discount rate is 1 (no discount).

+- Our mouse explore the environment and take a random action: **going to the left**

+- It gets a reward \\(R_{t+1} = 1\\) since **it eats a piece of cheese**

+

+  +

+

+

+

+

+  +

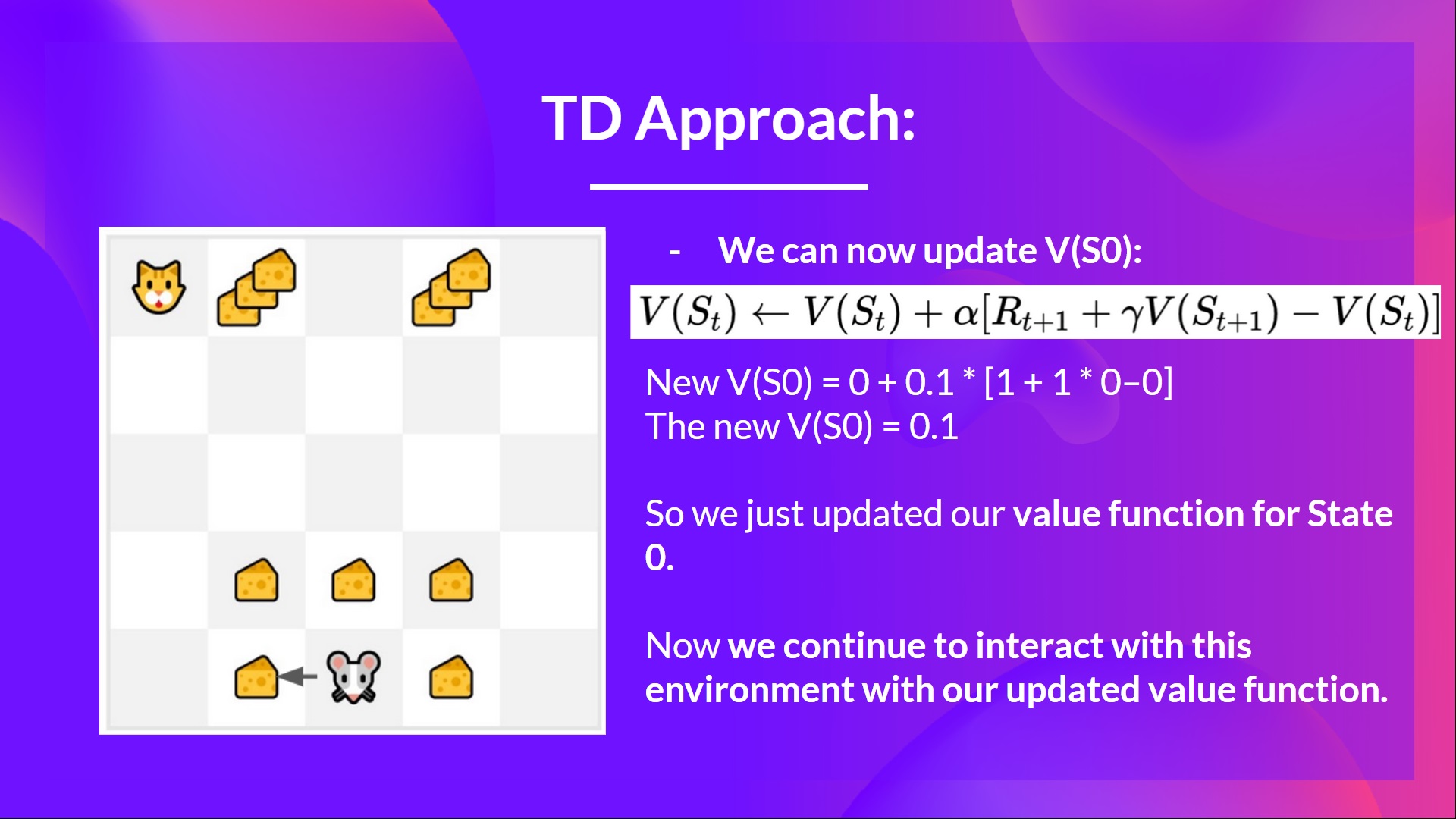

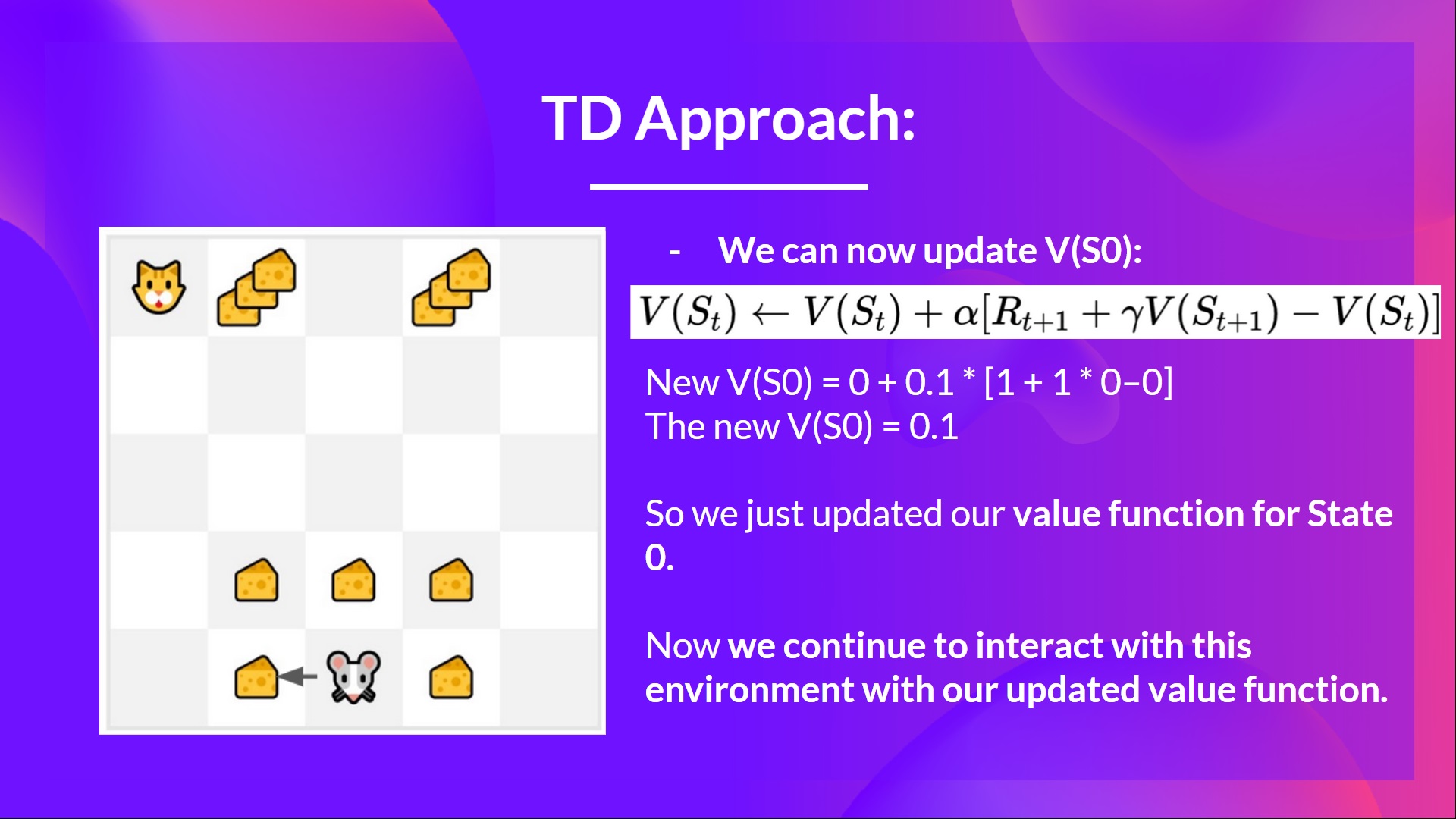

+We can now update \\(V(S_0)\\):

+

+New \\(V(S_0) = V(S_0) + lr * [R_1 + \gamma * V(S_1) - V(S_0)]\\)

+

+New \\(V(S_0) = 0 + 0.1 * [1 + 1 * 0 - 0]\\)

+

+New \\(V(S_0) = 0.1\\)

+

+So we just updated our value function for State 0.

+

+Now we **continue to interact with this environment with our updated value function.**

+
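A minimal Python sketch of this single TD(0) update with the numbers above. Storing the values in a dictionary and naming the states `"S0"` and `"S1"` are illustrative assumptions.

```python
def td0_update(values, state, reward, next_state, lr=0.1, gamma=1.0):
    """One TD(0) step: move V(state) toward the TD target r + gamma * V(next)."""
    td_target = reward + gamma * values[next_state]
    td_error = td_target - values[state]
    values[state] += lr * td_error
    return values[state]


# Value function just initialized: 0 for every state.
values = {"S0": 0.0, "S1": 0.0}
# The mouse goes left from S0, eats a piece of cheese (reward 1), lands in S1.
new_v = td0_update(values, "S0", reward=1, next_state="S1")
print(new_v)
```

Unlike the Monte Carlo version, this function needs only one transition, so it can be called after every step while the episode is still running.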

+

+

+We can now update \\(V(S_0)\\):

+

+New \\(V(S_0) = V(S_0) + lr * [R_1 + gamma * V(S_1) - V(S_0)]\\)

+

+New \\(V(S_0) = 0 + 0.1 * [1 + 1 * 0–0]\\)

+

+New \\(V(S_0) = 0.1\\)

+

+So we just updated our value function for State 0.

+

+Now we **continue to interact with this environment with our updated value function.**

+

+  +

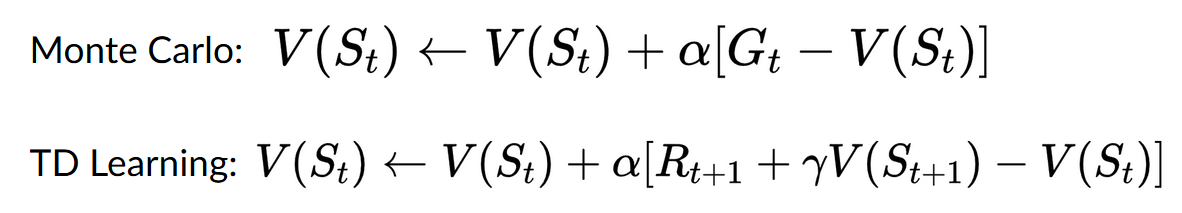

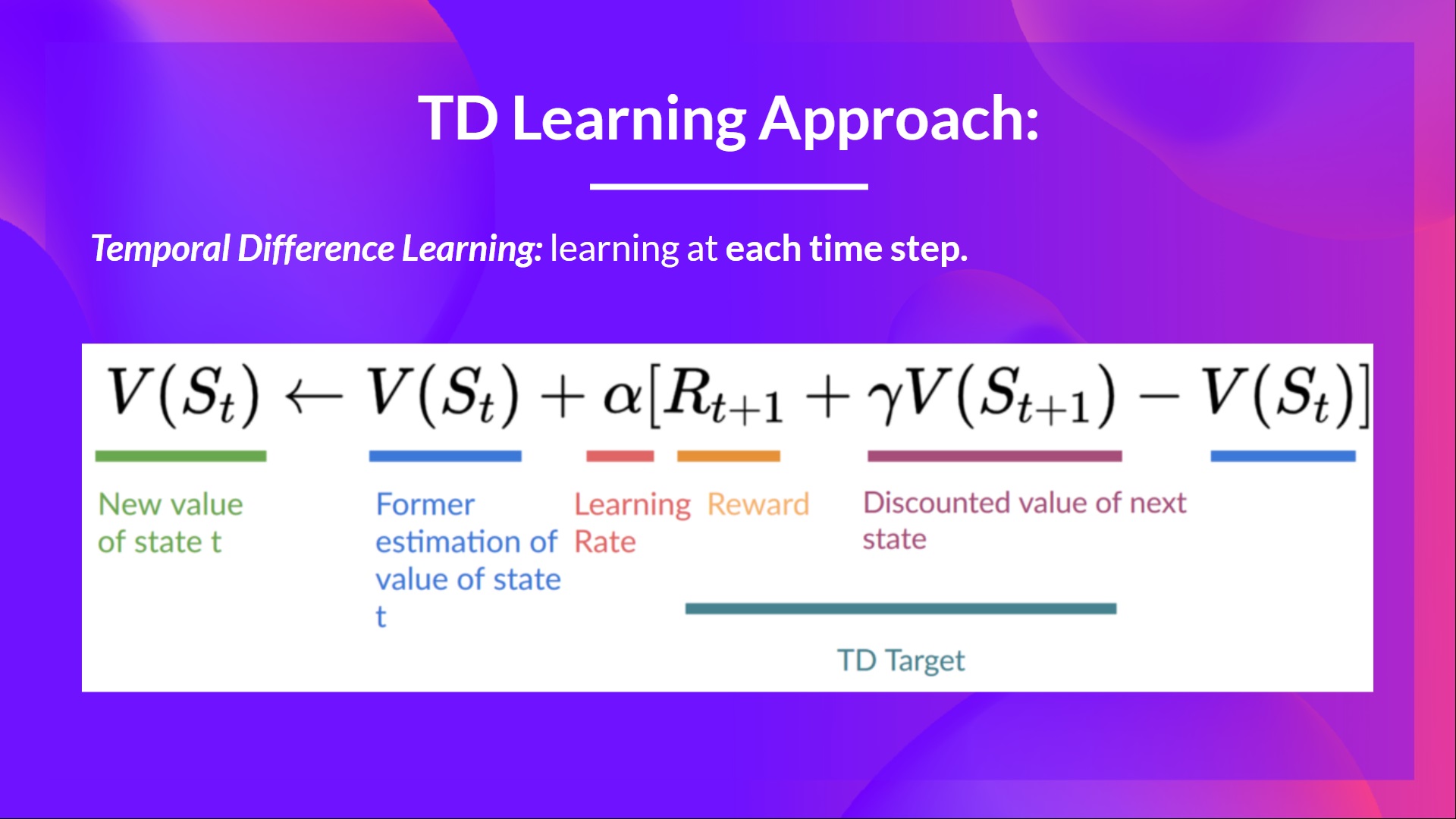

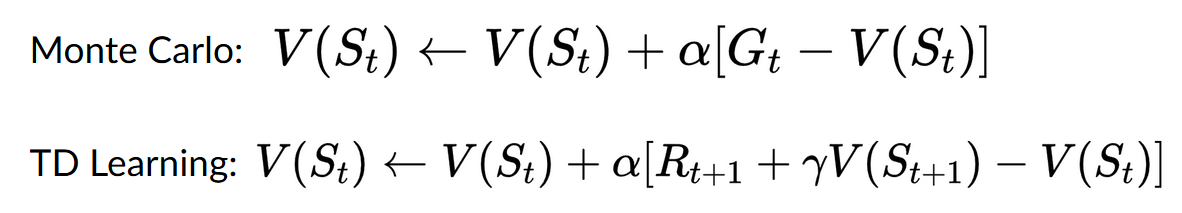

+ If we summarize:

+

+ - With *Monte Carlo*, we update the value function from a complete episode, and so we **use the actual accurate discounted return of this episode.**

+ - With *TD Learning*, we update the value function from a step, so we replace \\(G_t\\) that we don't have with **an estimated return called TD target.**

+
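To see the two targets side by side, this sketch computes both for the first state of a hypothetical three-step episode (the rewards and current value estimates are made up for illustration). The Monte Carlo target uses every reward actually received; the TD target uses one reward plus the current estimate of the next state.

```python
gamma = 1.0
rewards = [1, 0, 2]        # R_1, R_2, R_3 of a hypothetical episode
values = [0.5, 0.8, 0.3]   # current estimates V(S_0), V(S_1), V(S_2)

# Monte Carlo target for S_0: the full return of the episode.
mc_target = sum(gamma ** k * r for k, r in enumerate(rewards))

# TD target for S_0: immediate reward + discounted estimate of the next state.
td_target = rewards[0] + gamma * values[1]

print(mc_target, td_target)
```

Either target would then be plugged into \\(V(S_0) \leftarrow V(S_0) + lr * [\text{target} - V(S_0)]\\); only the target differs between the two methods.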


diff --git a/units/en/unit2/q-learning-example.mdx b/units/en/unit2/q-learning-example.mdx

new file mode 100644

index 0000000..62e9be3

--- /dev/null

+++ b/units/en/unit2/q-learning-example.mdx

@@ -0,0 +1,83 @@

+# A Q-Learning example [[q-learning-example]]

+

+To better understand Q-Learning, let's take a simple example:

+

+

diff --git a/units/en/unit2/q-learning-example.mdx b/units/en/unit2/q-learning-example.mdx

new file mode 100644

index 0000000..62e9be3

--- /dev/null

+++ b/units/en/unit2/q-learning-example.mdx

@@ -0,0 +1,83 @@

+# A Q-Learning example [[q-learning-example]]

+

+To better understand Q-Learning, let's take a simple example:

+

+ +

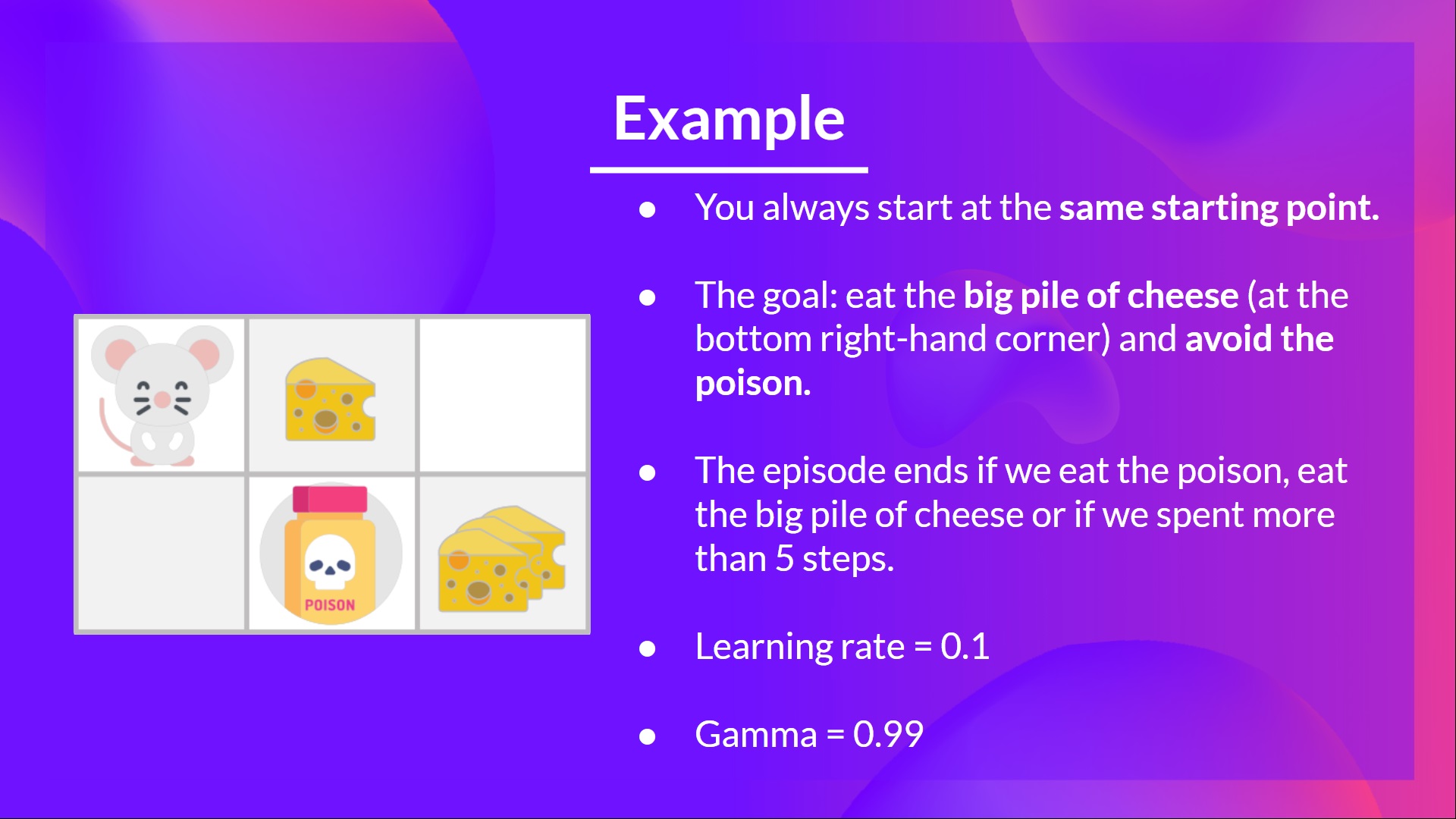

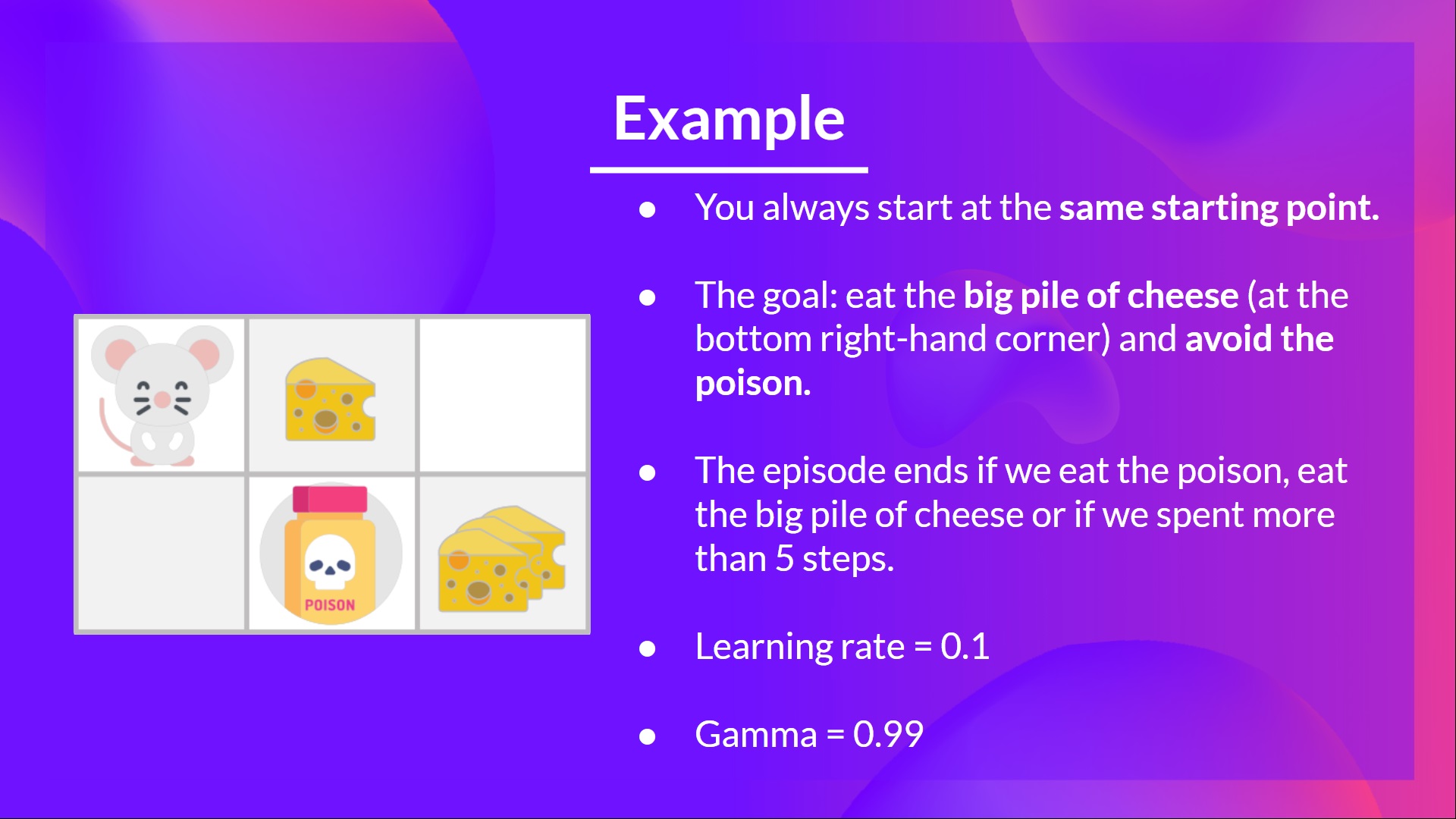

+- You're a mouse in this tiny maze. You always **start at the same starting point.**

+- The goal is **to eat the big pile of cheese at the bottom right-hand corner** and avoid the poison. After all, who doesn't like cheese?

+- The episode ends if we eat the poison, **eat the big pile of cheese, or spend more than five steps.**

+- The learning rate is 0.1

+- The gamma (discount rate) is 0.99

+

+

+

+- You're a mouse in this tiny maze. You always **start at the same starting point.**

+- The goal is **to eat the big pile of cheese at the bottom right-hand corner** and avoid the poison. After all, who doesn't like cheese?

+- The episode ends if we eat the poison, **eat the big pile of cheese or if we spent more than five steps.**

+- The learning rate is 0.1

+- The gamma (discount rate) is 0.99

+

+ +

+

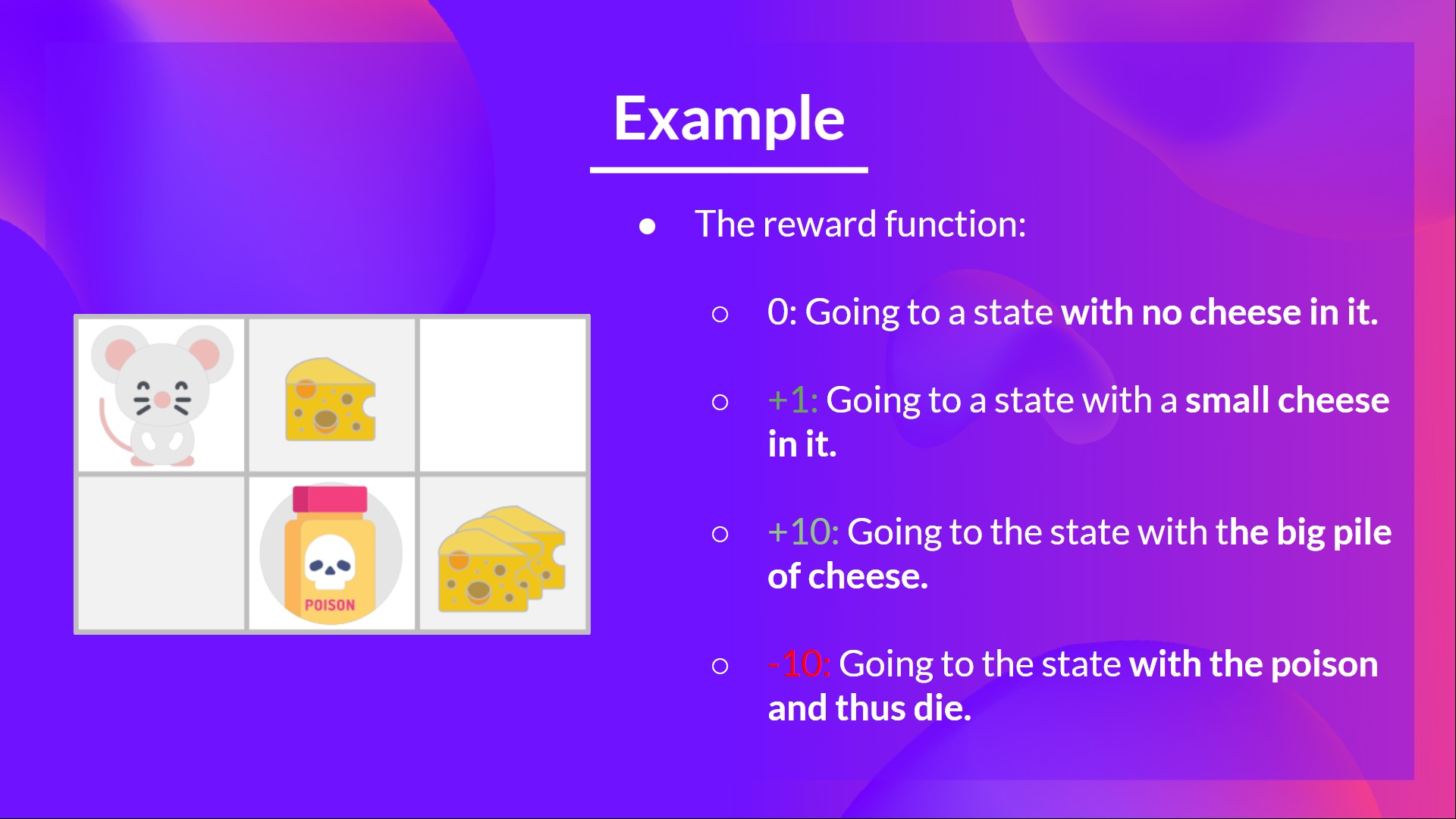

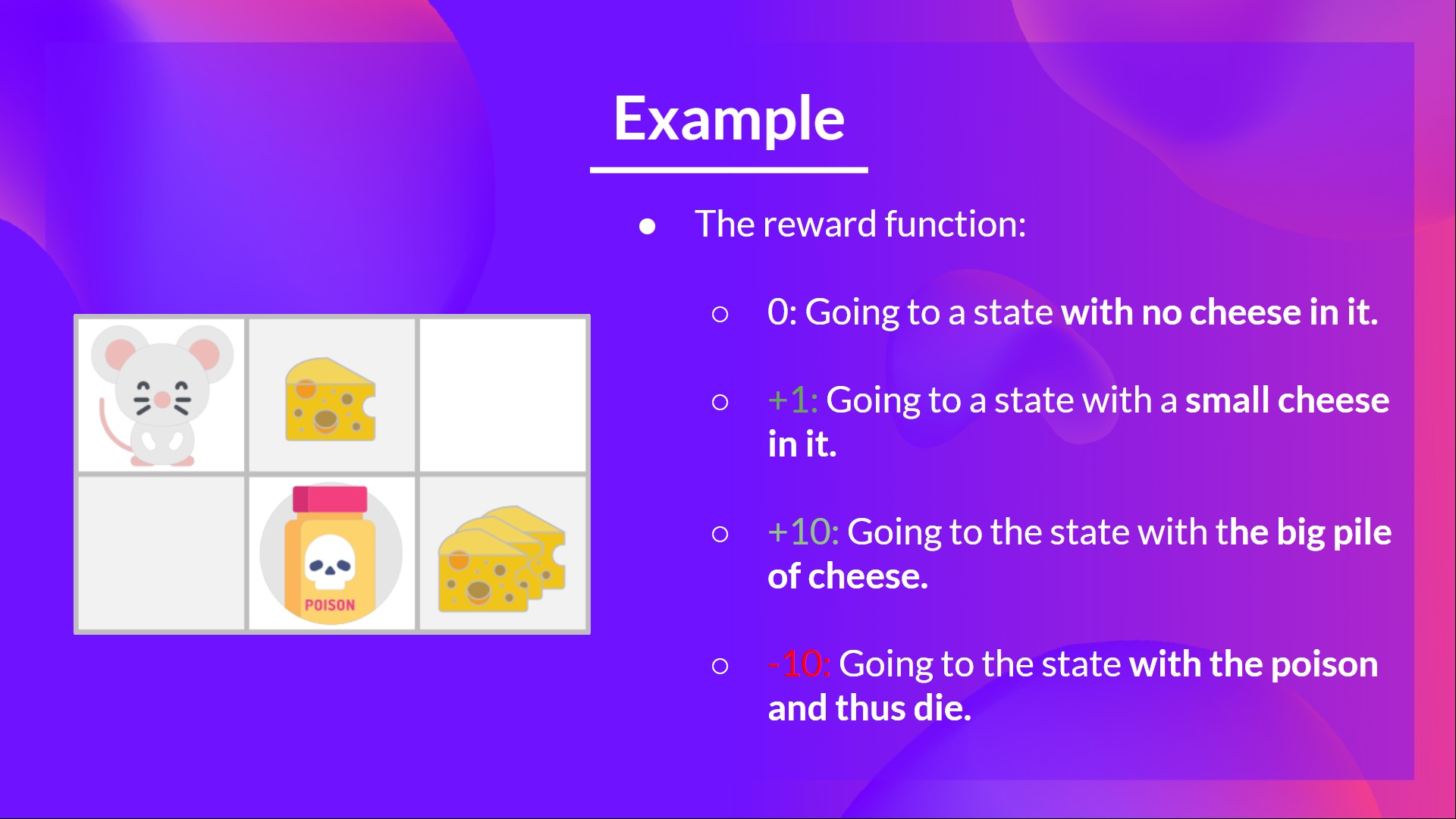

+The reward function goes like this:

+

+- **+0:** Going to a state with no cheese in it.

+- **+1:** Going to a state with a small cheese in it.

+- **+10:** Going to the state with the big pile of cheese.

+- **-10:** Going to the state with the poison and thus dying.

+- **+0:** If we spend more than five steps.

+


+To train our agent to have an optimal policy (so a policy that goes right, right, down), **we will use the Q-Learning algorithm**.

+

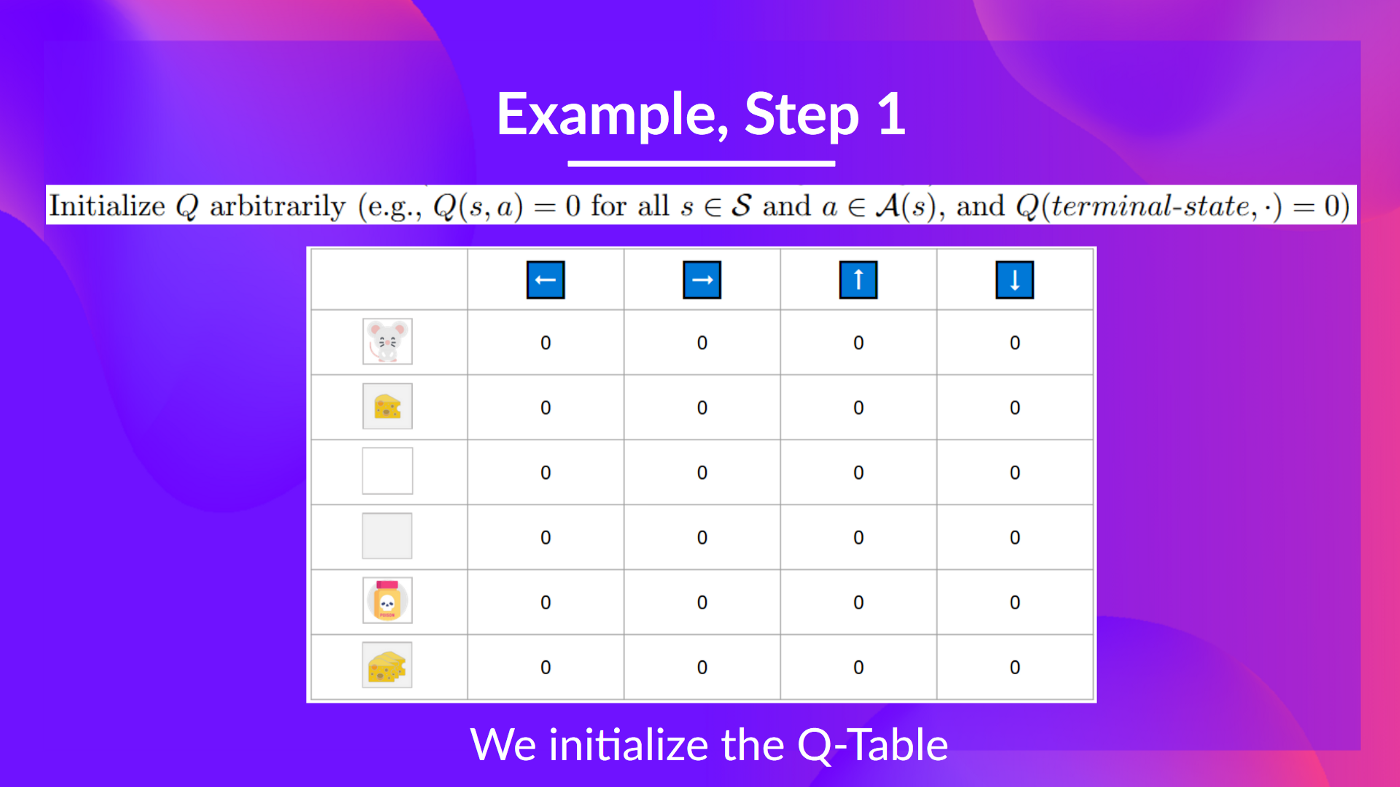

+## Step 1: We initialize the Q-Table [[step1]]

+
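A Q-Table has one row per state and one column per action, and at the start of training every entry is 0. A minimal Python sketch, assuming a maze with 6 states and 4 actions (these sizes are an assumption for illustration; the text does not state them):

```python
N_STATES = 6   # assumed number of maze cells (illustrative)
N_ACTIONS = 4  # assumed actions: left, right, up, down (illustrative)

# One row per state, one column per action; every Q-value starts at 0.
q_table = [[0.0] * N_ACTIONS for _ in range(N_STATES)]
print(q_table[0])
```

The list comprehension (rather than `[[0.0] * N_ACTIONS] * N_STATES`) gives each state its own row, so updating one Q-value does not silently change the same entry in every other row.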

+

+

+To train our agent to have an optimal policy (so a policy that goes right, right, down), **we will use the Q-Learning algorithm**.

+

+## Step 1: We initialize the Q-Table [[step1]]

+

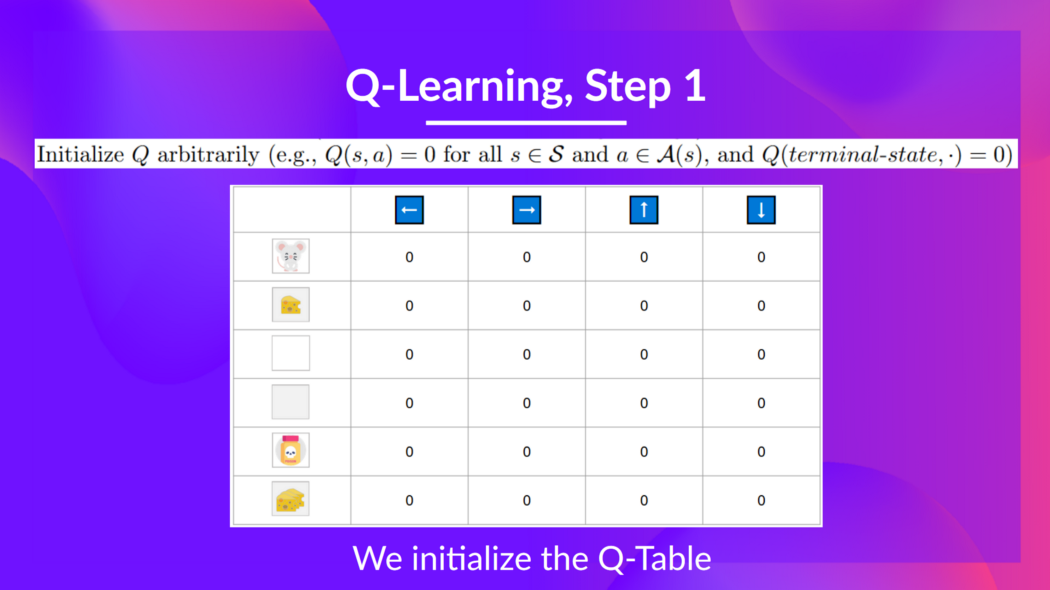

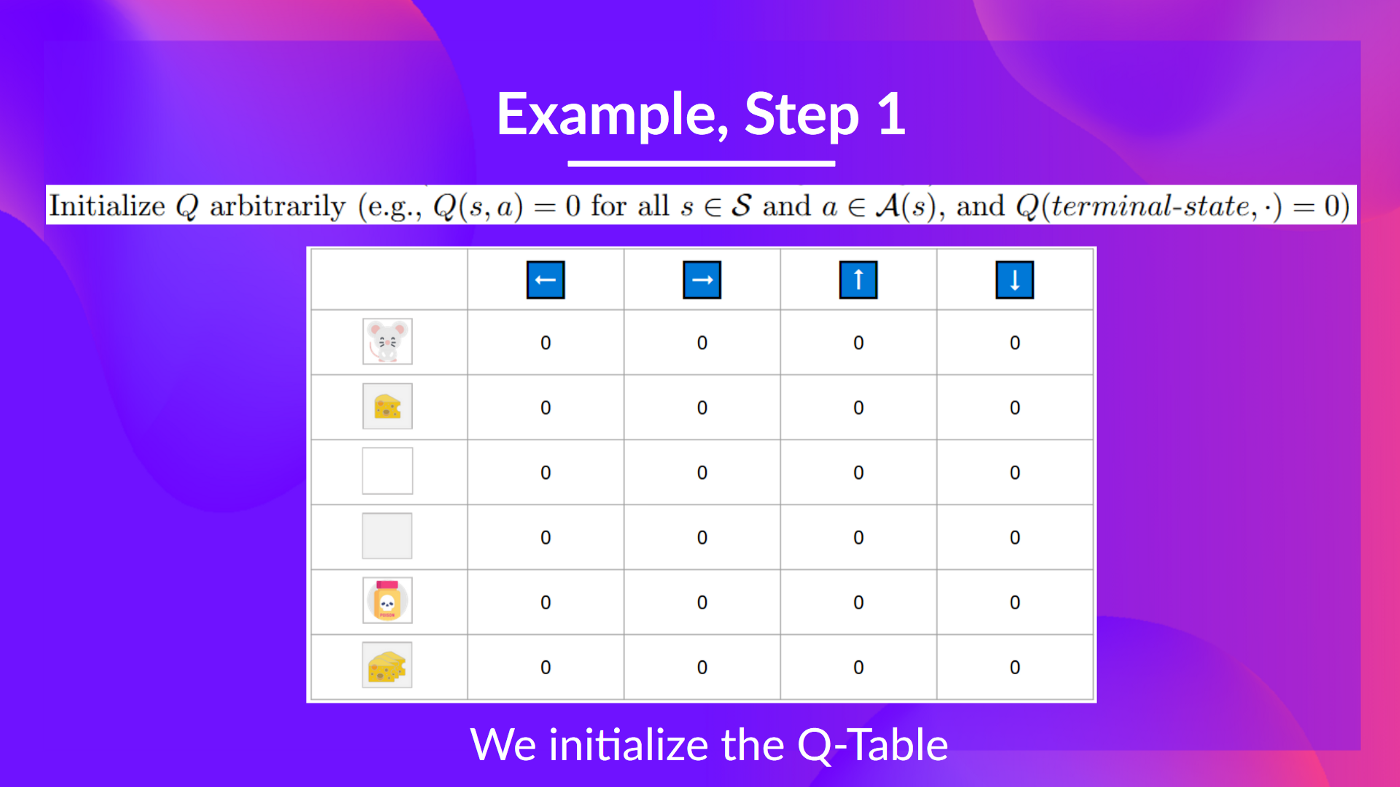

+ +

+So, for now, **our Q-Table is useless**; we need **to train our Q-function using the Q-Learning algorithm.**

+

+Let's do it for 2 training timesteps:

+

+Training timestep 1:

+

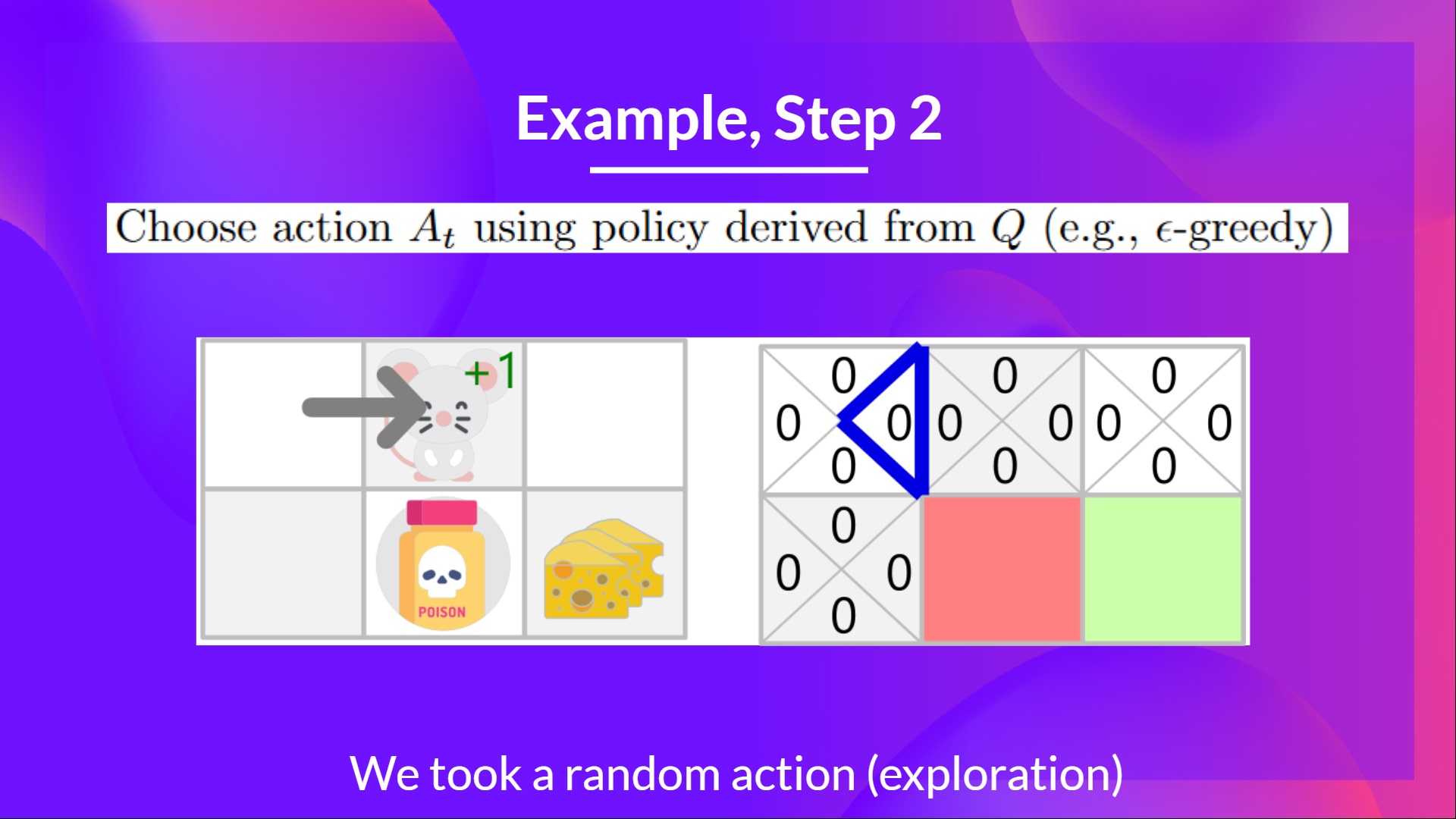

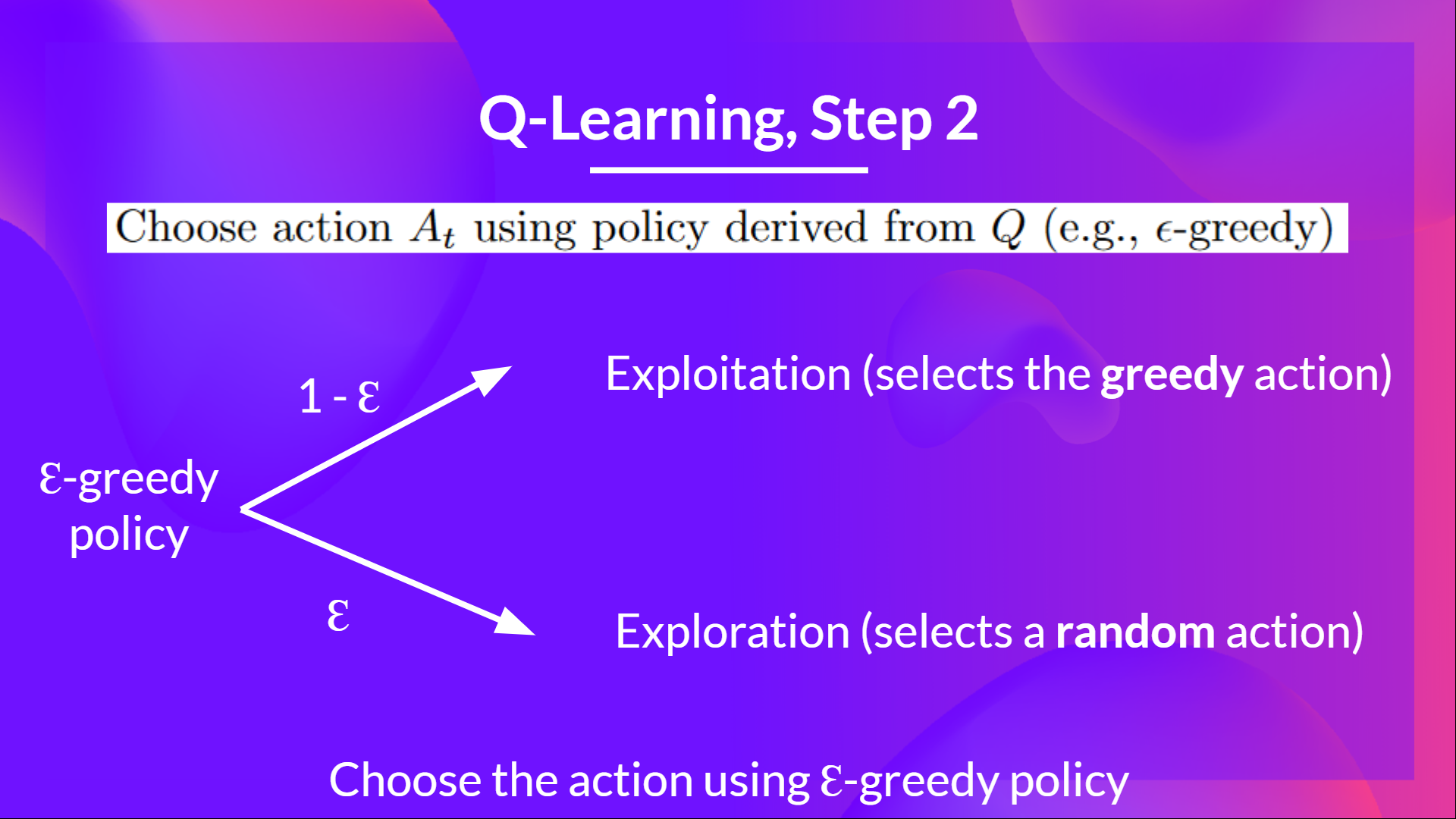

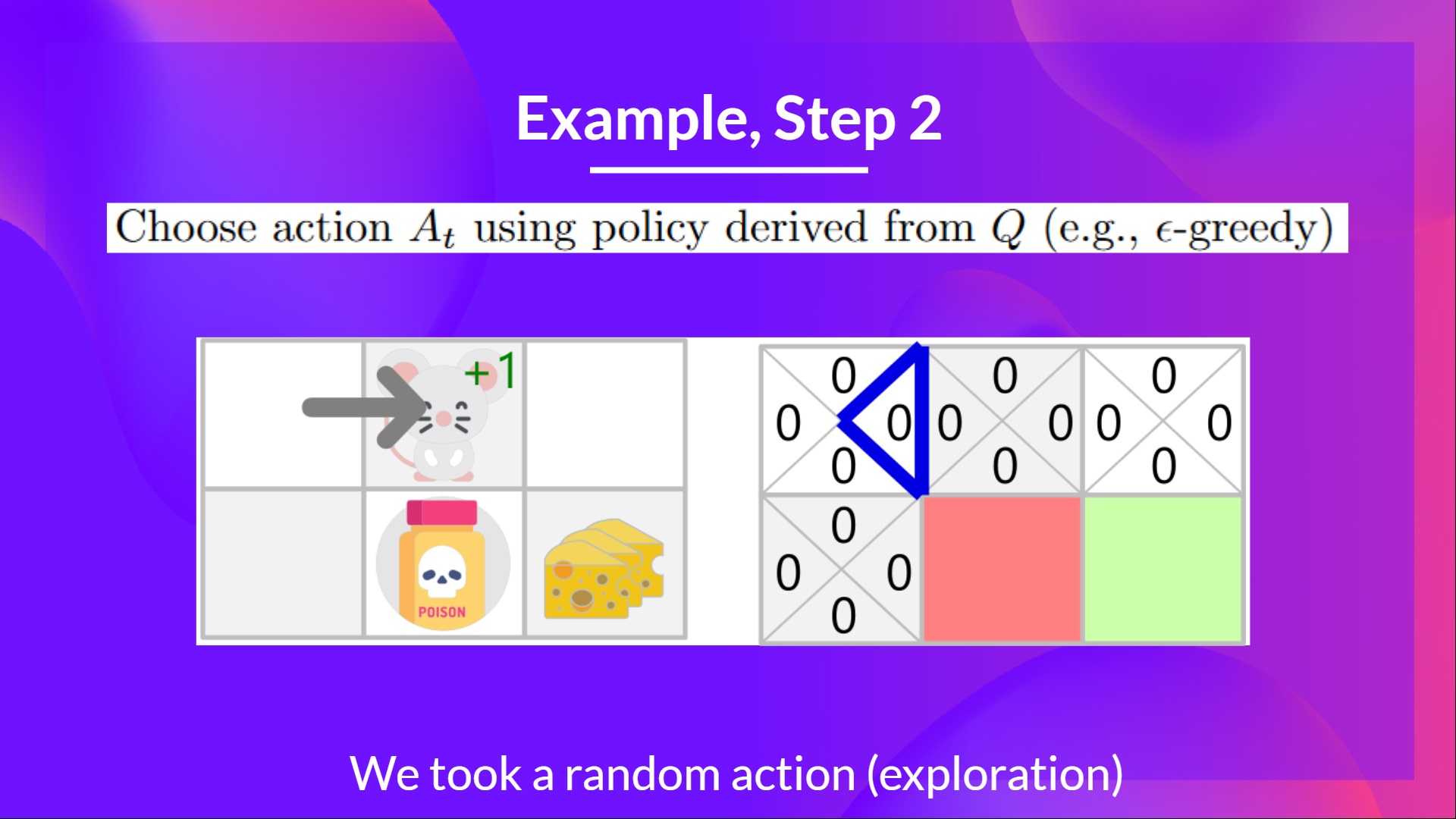

+## Step 2: Choose action using Epsilon Greedy Strategy [[step2]]

+

+Because epsilon is big (= 1.0), I take a random action; in this case, I go right.

+
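The epsilon-greedy choice can be sketched in a few lines of Python. This helper is illustrative: its name and the use of Python's `random` module are our choices, not the course's implementation. With probability epsilon it explores with a uniformly random action; otherwise it exploits the action with the highest Q-value.

```python
import random


def epsilon_greedy_action(q_row, epsilon, rng=random):
    """Pick an action index from one row of the Q-Table.

    With probability `epsilon`, explore (uniform random action);
    otherwise exploit (action with the highest Q-value).
    """
    if rng.random() < epsilon:
        return rng.randrange(len(q_row))
    return max(range(len(q_row)), key=lambda a: q_row[a])


rng = random.Random(0)
q_row = [0.0, 0.5, -1.0, 0.2]
# epsilon = 1.0: always a random action, as at the start of training.
print(epsilon_greedy_action(q_row, epsilon=1.0, rng=rng))
# epsilon = 0.0: always the greedy action (index 1 here).
print(epsilon_greedy_action(q_row, epsilon=0.0, rng=rng))
```

Decaying epsilon over training smoothly shifts this function from pure exploration to pure exploitation.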

+

+

+So, for now, **our Q-Table is useless**; we need **to train our Q-function using the Q-Learning algorithm.**

+

+Let's do it for 2 training timesteps:

+

+Training timestep 1:

+

+## Step 2: Choose action using Epsilon Greedy Strategy [[step2]]

+

+Because epsilon is big = 1.0, I take a random action, in this case, I go right.

+

+ +

+

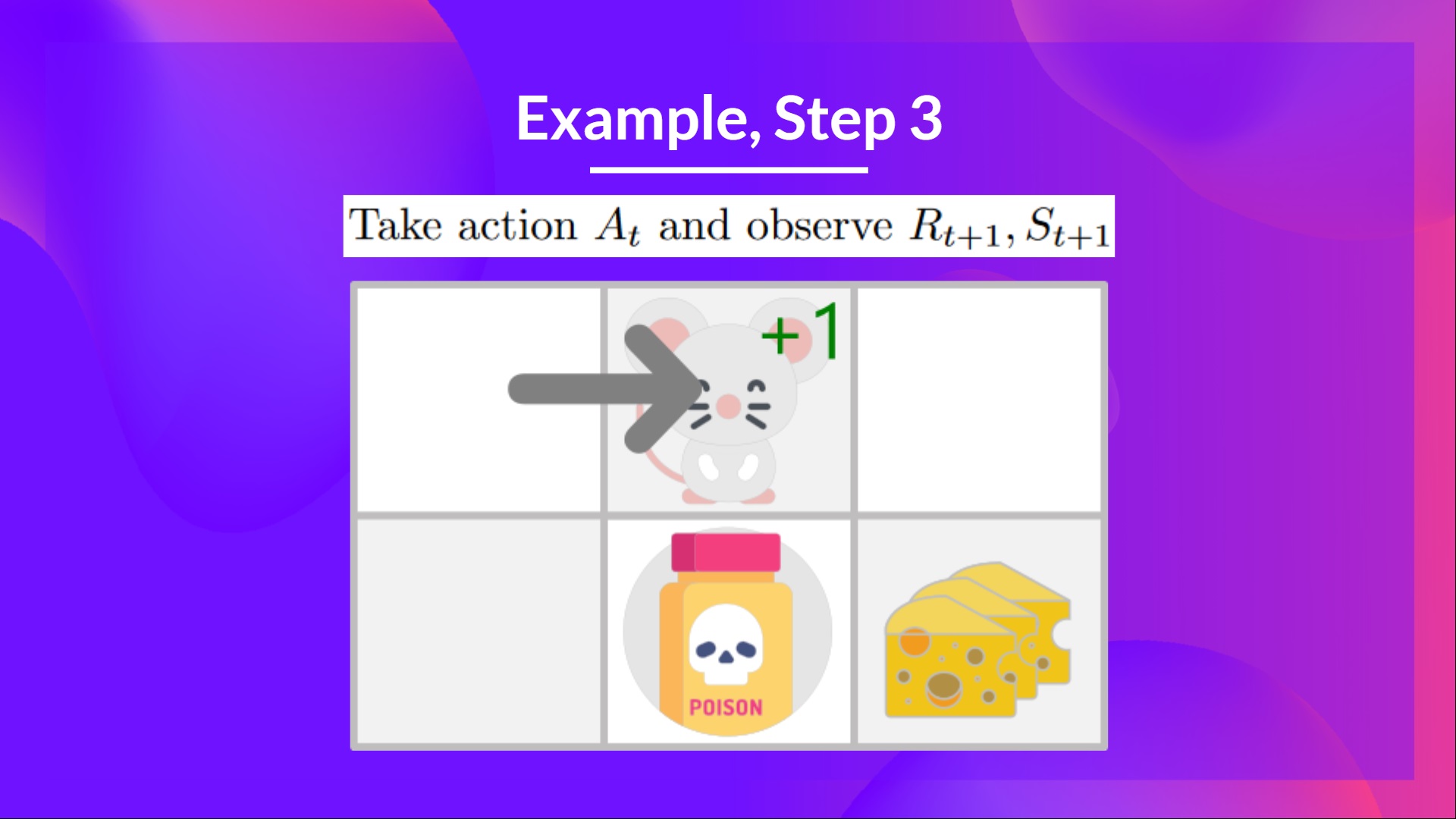

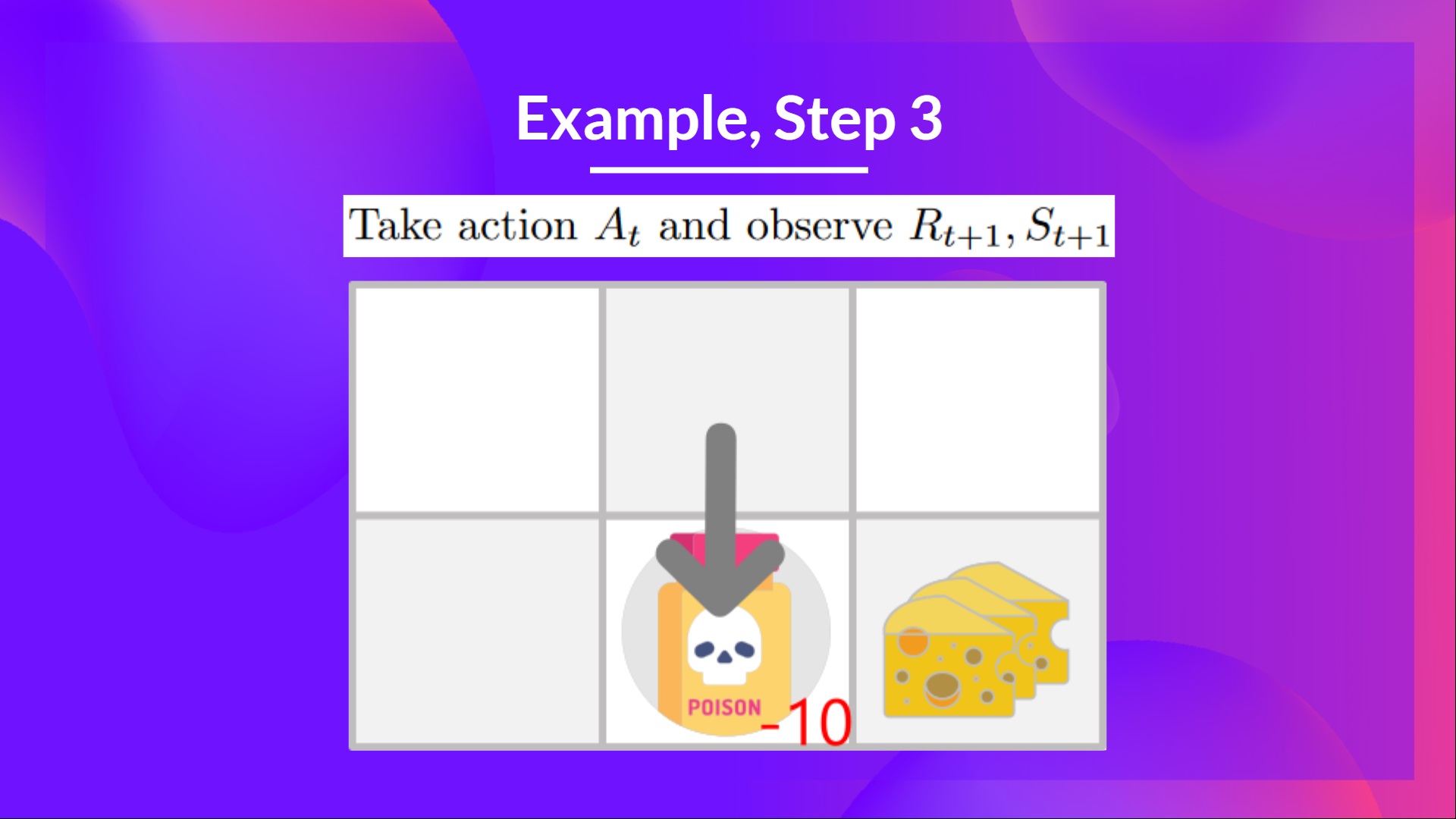

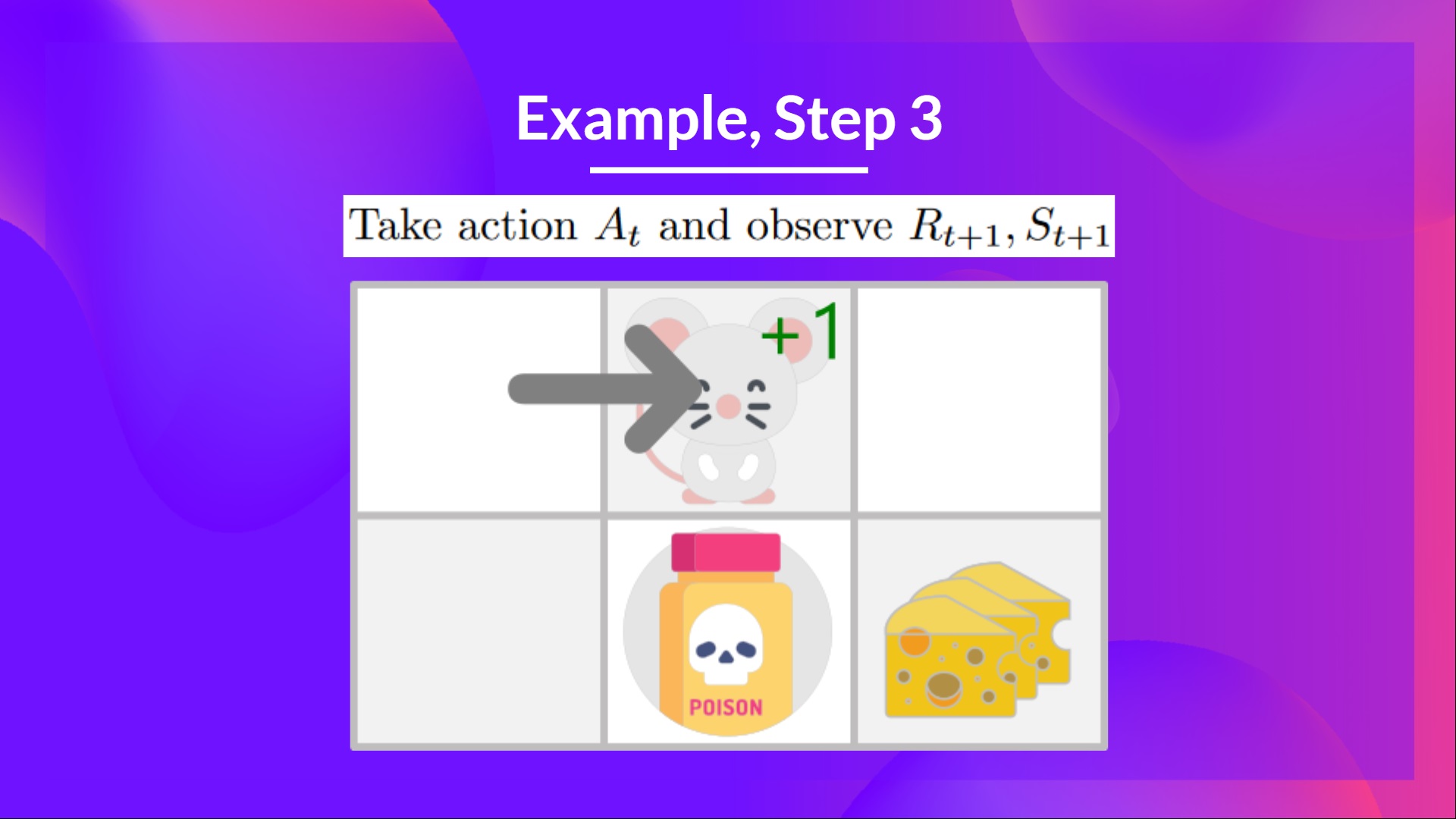

+## Step 3: Perform action At, get Rt+1 and St+1 [[step3]]

+

+By going right, I've got a small cheese, so \\(R_{t+1} = 1\\), and I'm in a new state.

+

+

+

+

+

+## Step 3: Perform action At, gets Rt+1 and St+1 [[step3]]

+

+By going right, I've got a small cheese, so \\(R_{t+1} = 1\\), and I'm in a new state.

+

+

+ +

+

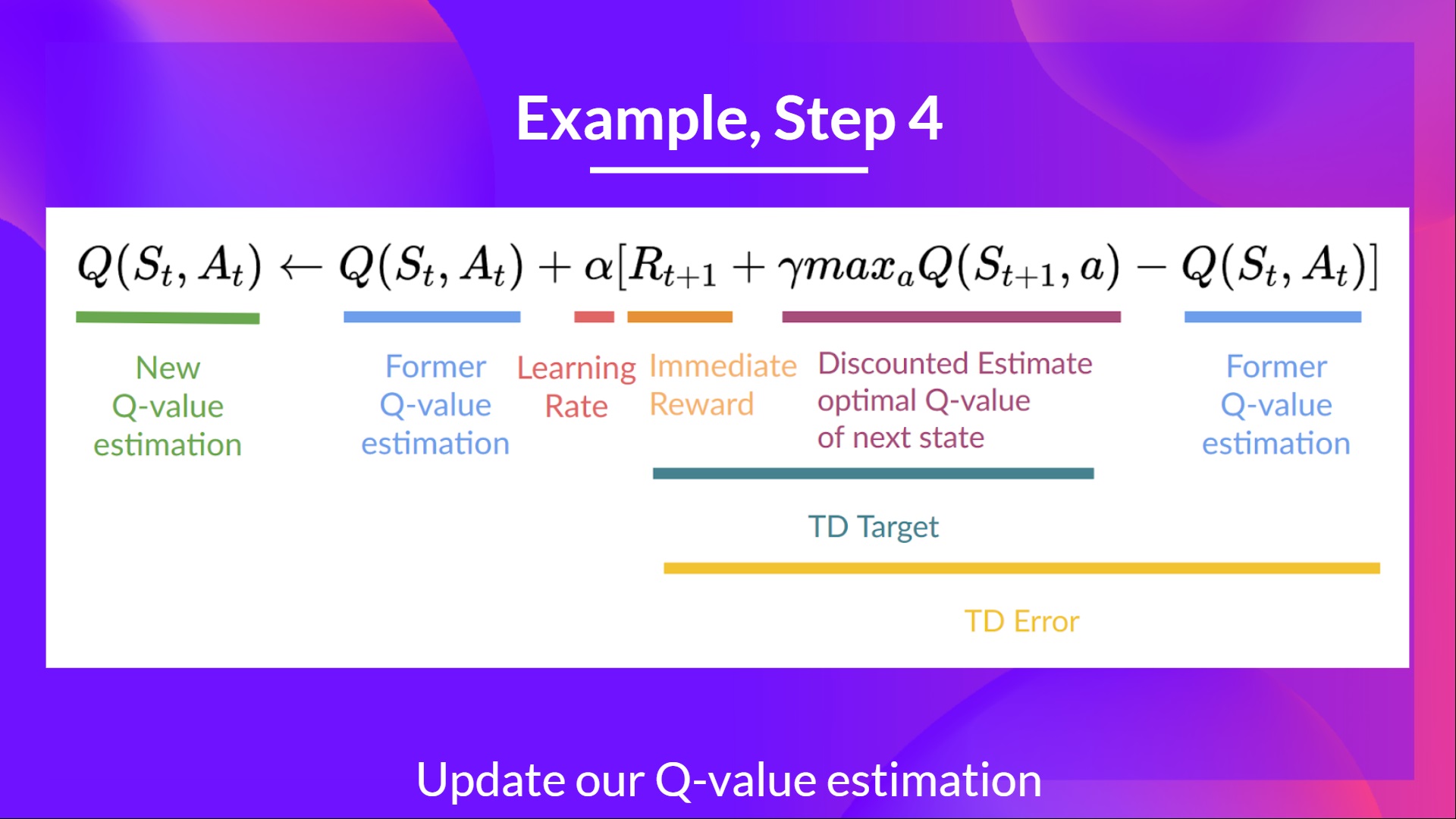

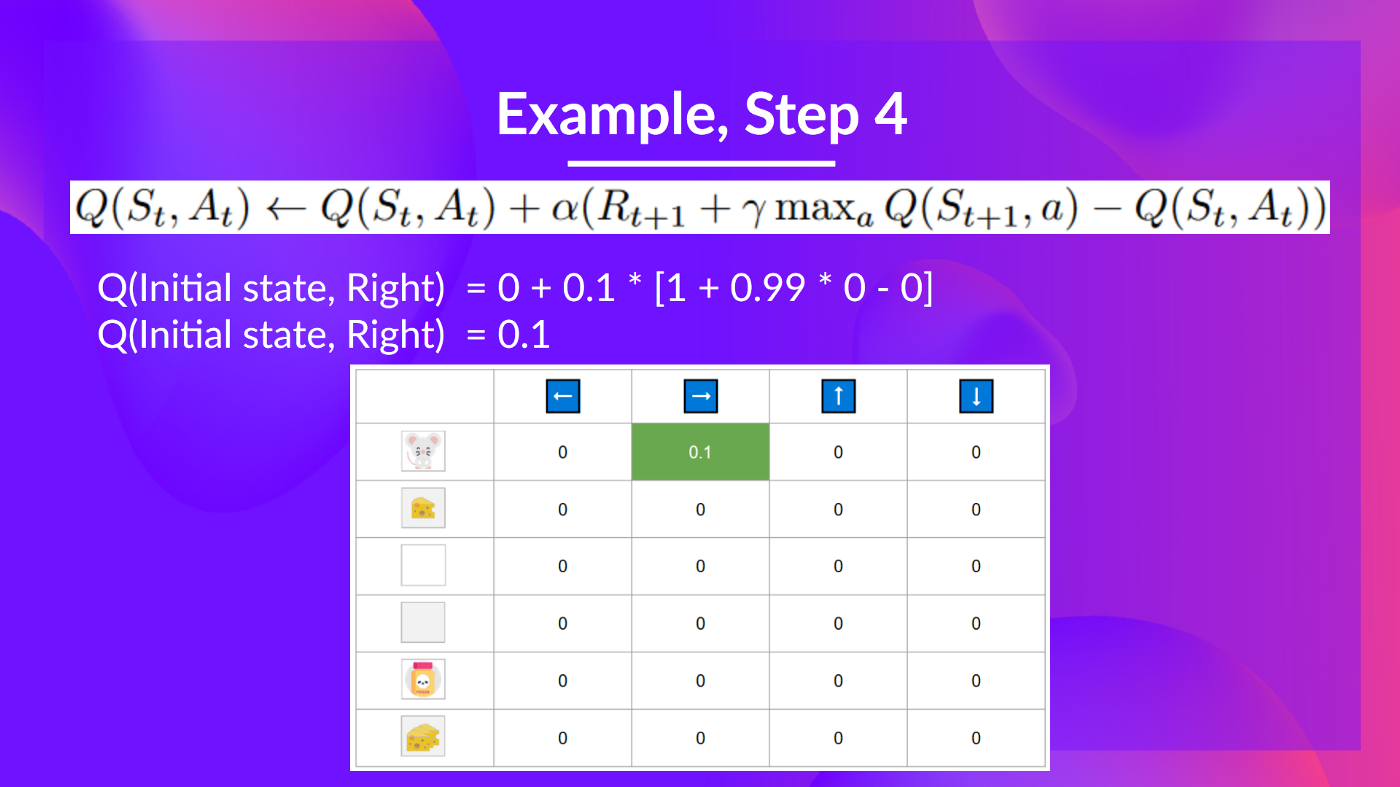

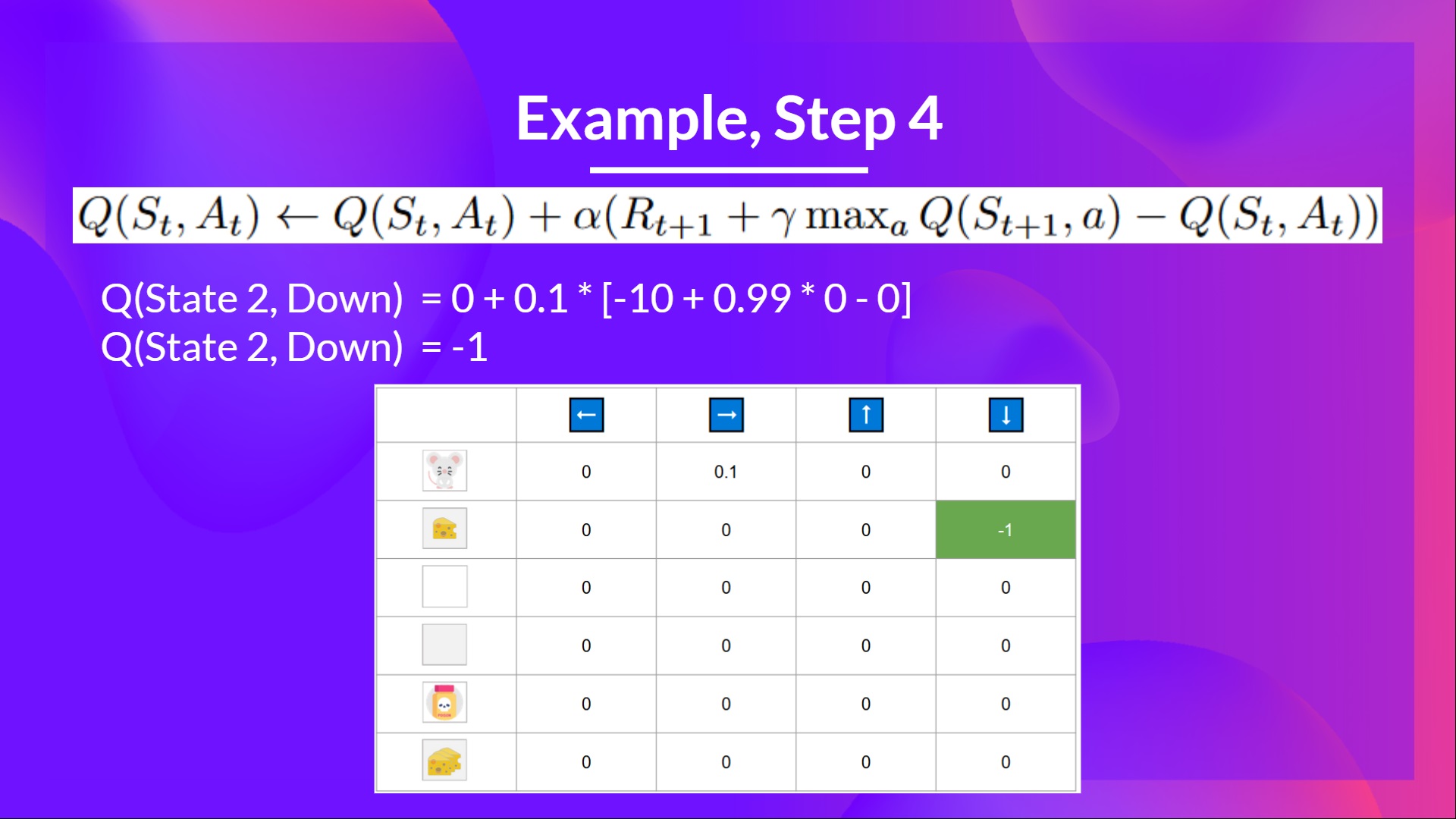

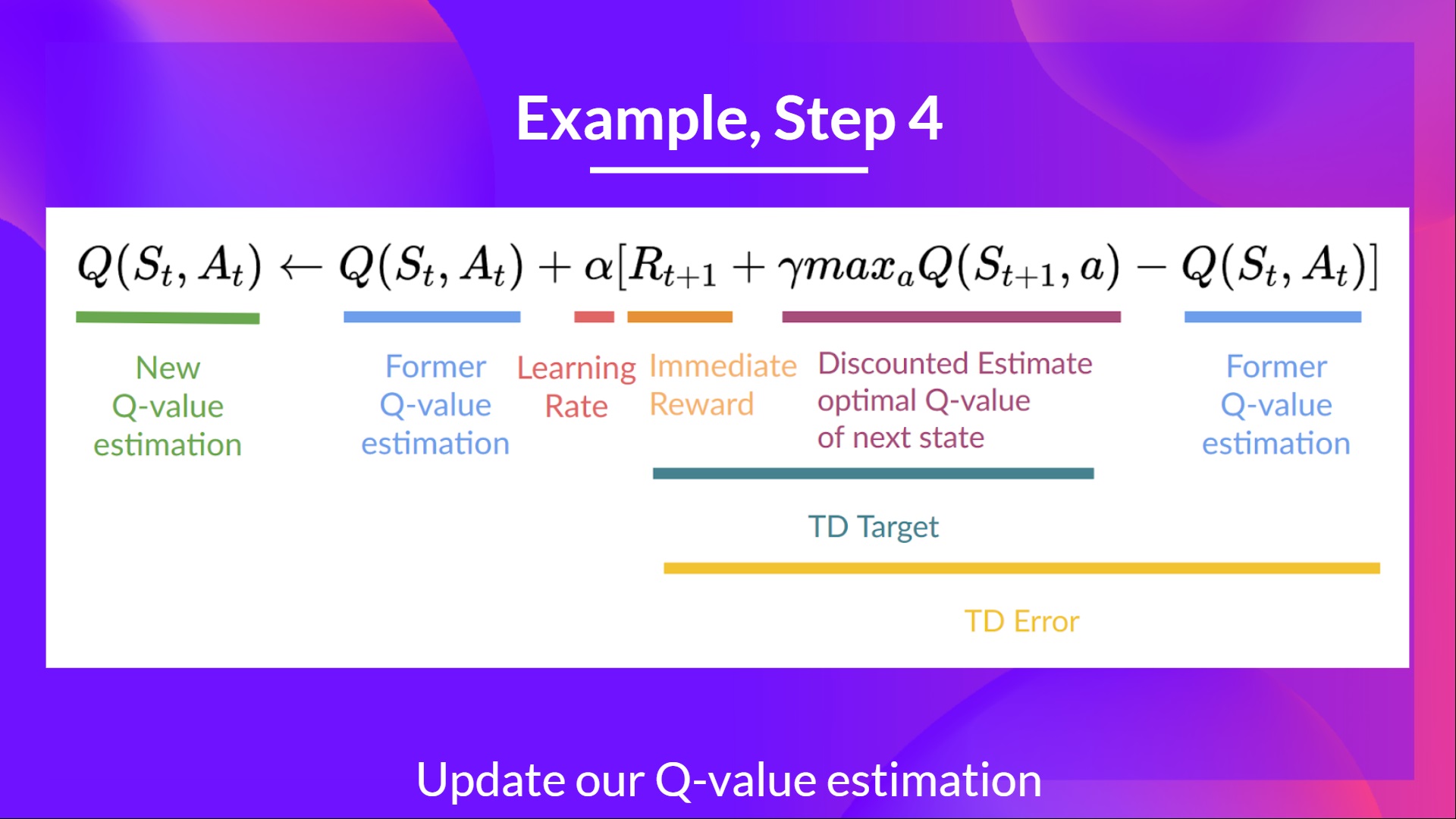

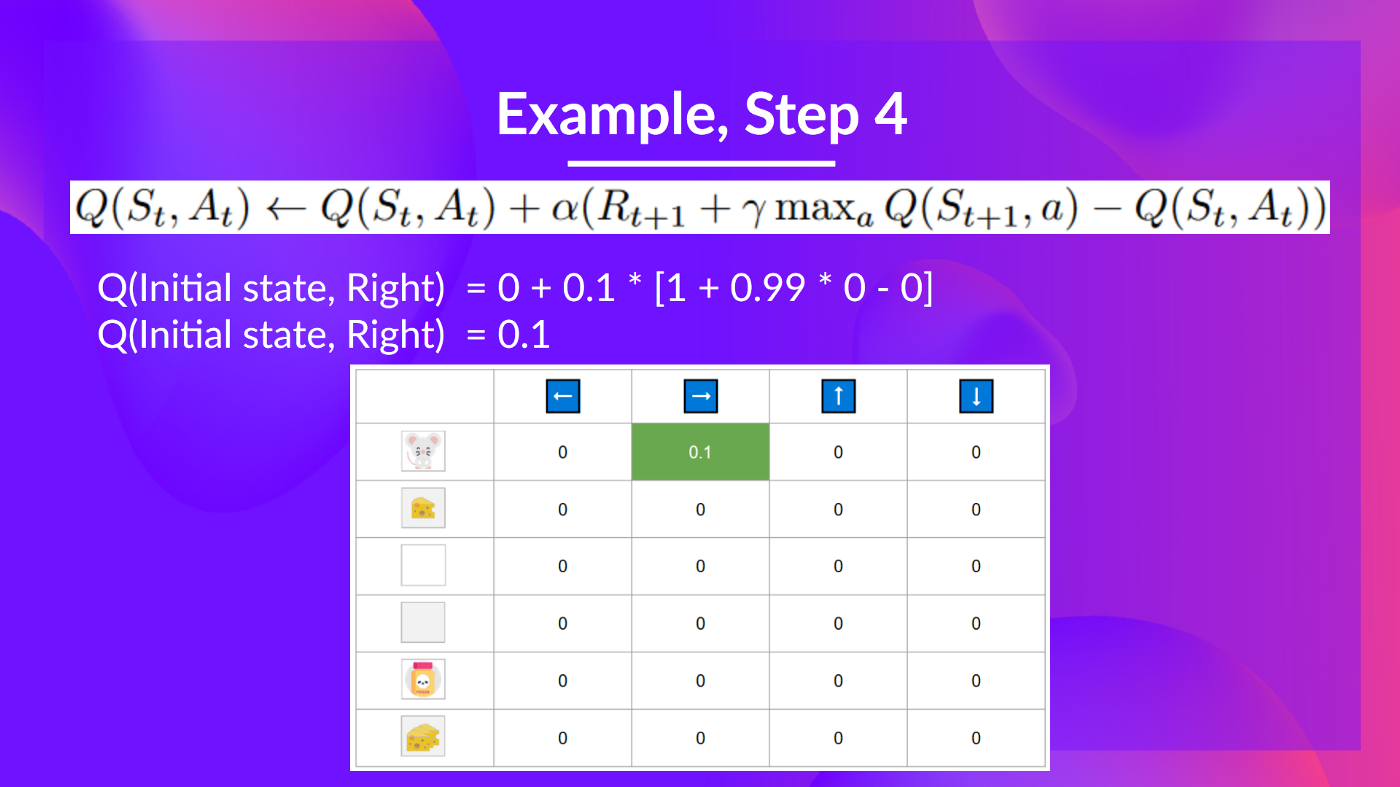

+## Step 4: Update Q(St, At) [[step4]]

+

+We can now update \\(Q(S_t, A_t)\\) using our formula.

+
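The Q-Learning update rule, in its standard form, is \\(Q(S_t, A_t) \leftarrow Q(S_t, A_t) + lr * [R_{t+1} + \gamma * \max_a Q(S_{t+1}, a) - Q(S_t, A_t)]\\). A minimal Python sketch applying it to this step with the learning rate (0.1) and gamma (0.99) of the example; the 6 x 4 table size and the state and action indices are hypothetical.

```python
LR = 0.1      # learning rate from the example
GAMMA = 0.99  # discount rate from the example


def q_learning_update(q_table, state, action, reward, next_state):
    """Q(s, a) <- Q(s, a) + lr * [r + gamma * max_a' Q(s', a') - Q(s, a)]."""
    td_target = reward + GAMMA * max(q_table[next_state])
    td_error = td_target - q_table[state][action]
    q_table[state][action] += LR * td_error
    return q_table[state][action]


# Hypothetical layout: 6 states x 4 actions, all Q-values initialized to 0.
q_table = [[0.0] * 4 for _ in range(6)]
START, RIGHT, NEXT_STATE = 0, 1, 1  # illustrative indices
# Going right gives a small cheese: reward 1.
print(q_learning_update(q_table, START, RIGHT, reward=1, next_state=NEXT_STATE))
```

With every Q-value still 0, this gives 0 + 0.1 * (1 + 0.99 * 0 - 0) = 0.1.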


+Training timestep 2:

+

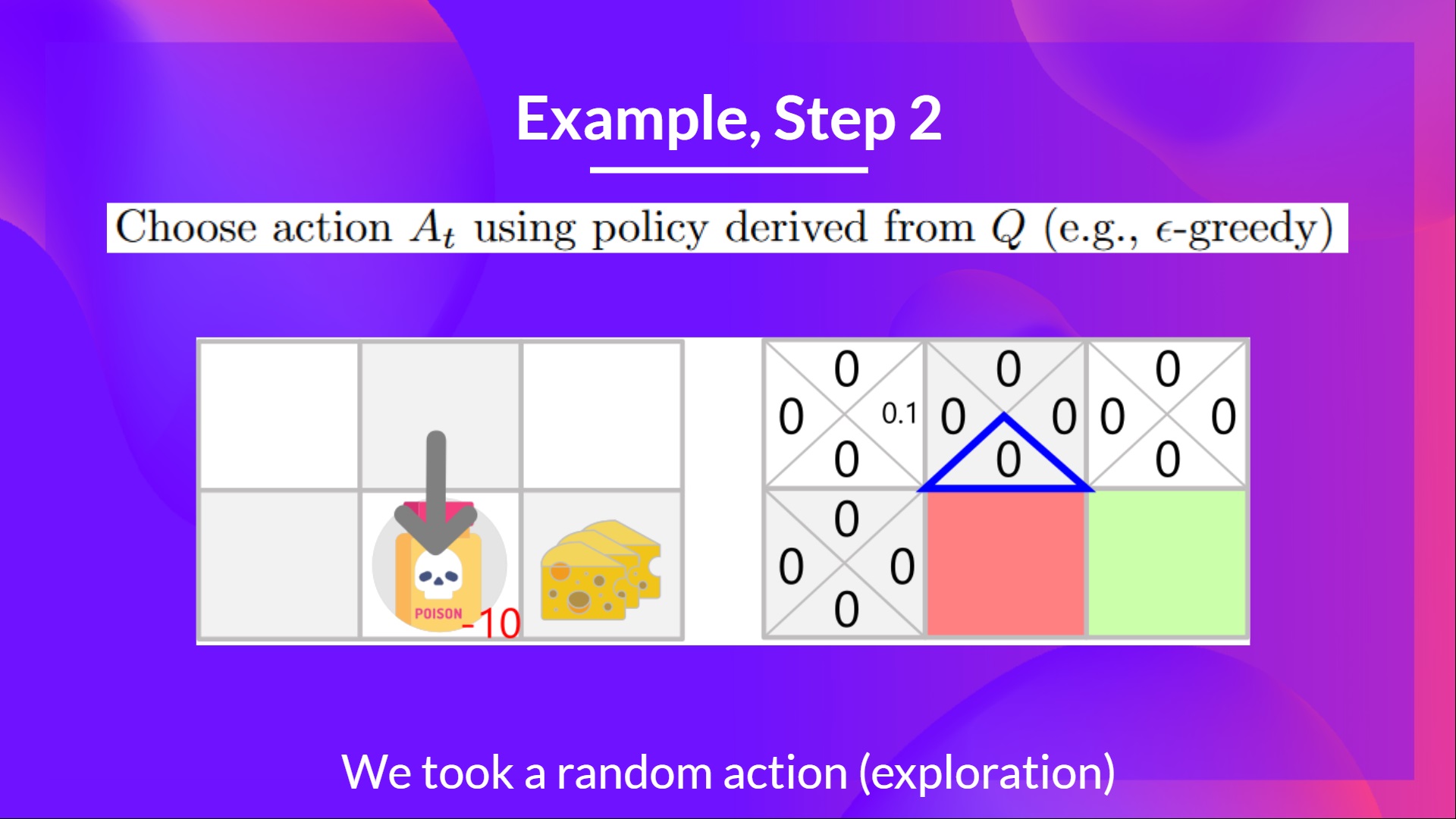

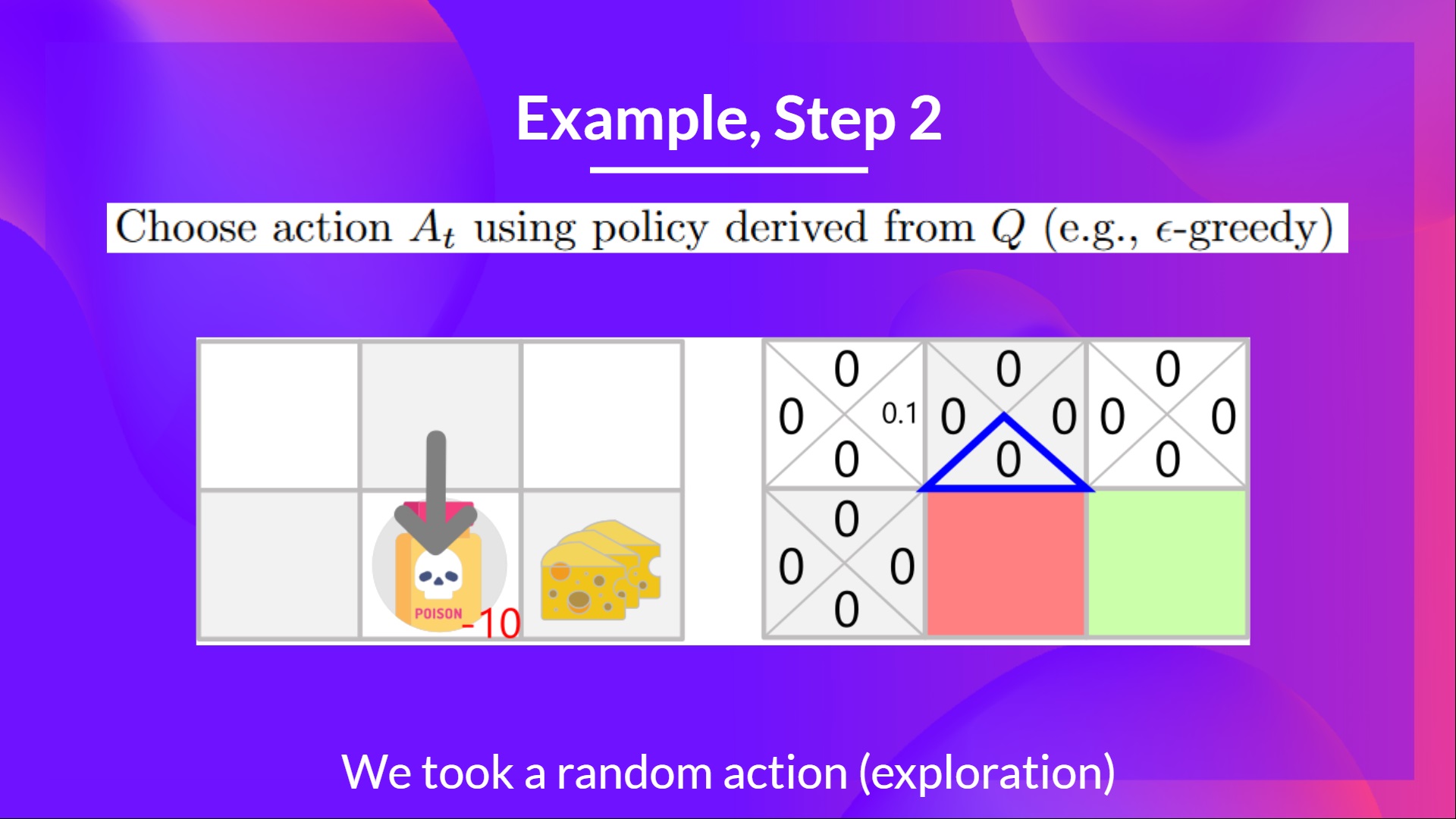

+## Step 2: Choose action using Epsilon Greedy Strategy [[step2-2]]

+

+**I take a random action again, since epsilon is still big (0.99)** (we decay it a little because, as training progresses, we want less and less exploration).

+

+I took action down. **Not a good action since it leads me to the poison.**

+

+

+

+Training timestep 2:

+

+## Step 2: Choose action using Epsilon Greedy Strategy [[step2-2]]

+

+**I take a random action again, since epsilon is big 0.99** (since we decay it a little bit because as the training progress, we want less and less exploration).

+

+I took action down. **Not a good action since it leads me to the poison.**

+

+ +

+

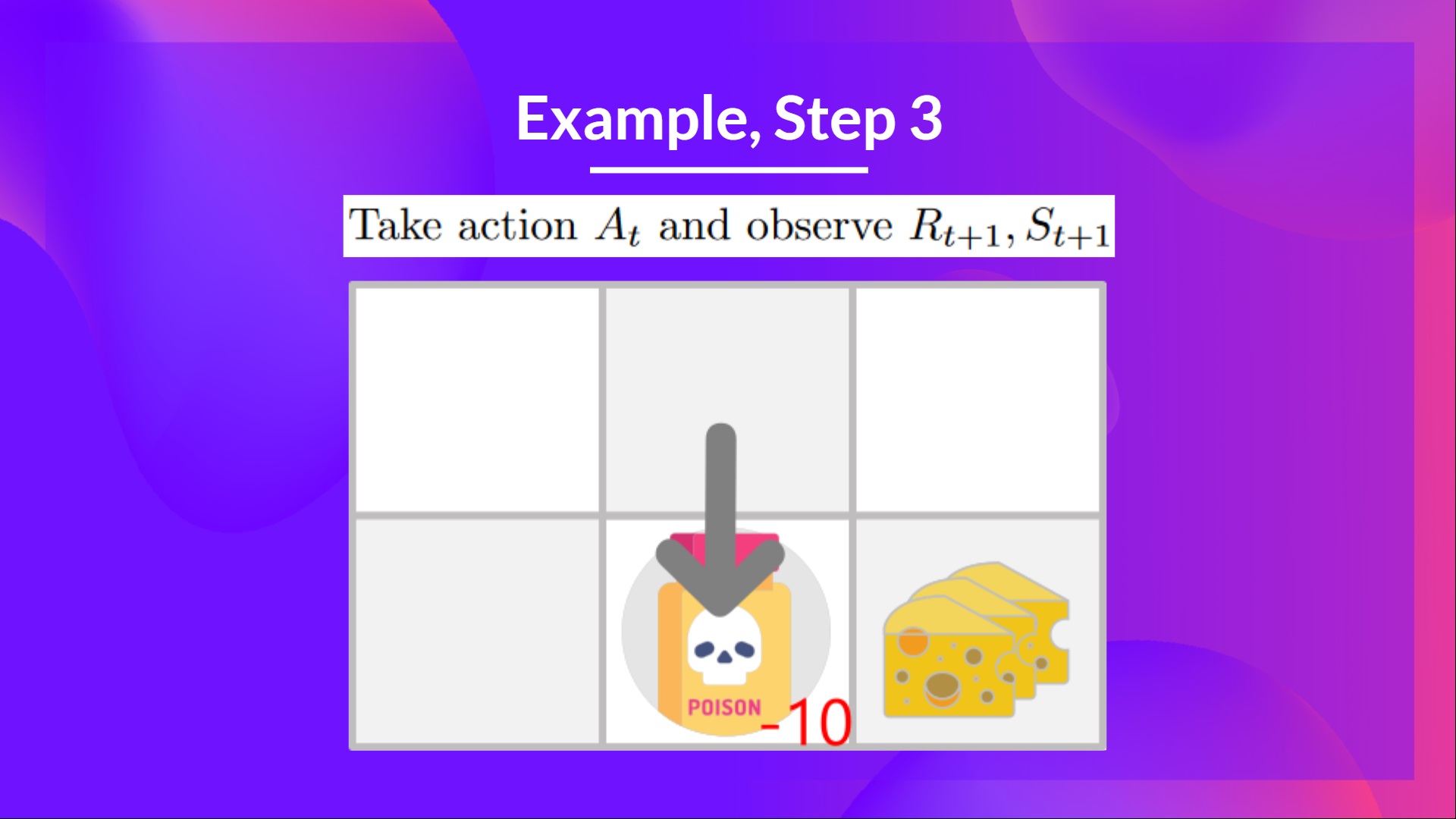

+## Step 3: Perform action At, get Rt+1 and St+1 [[step3-3]]

+

+Because I go to the poison state, **I get \\(R_{t+1} = -10\\), and I die.**

+

+

+

+

+## Step 3: Perform action At, gets \Rt+1 and St+1 [[step3-3]]

+

+Because I go to the poison state, **I get \\(R_{t+1} = -10\\), and I die.**

+

+ +

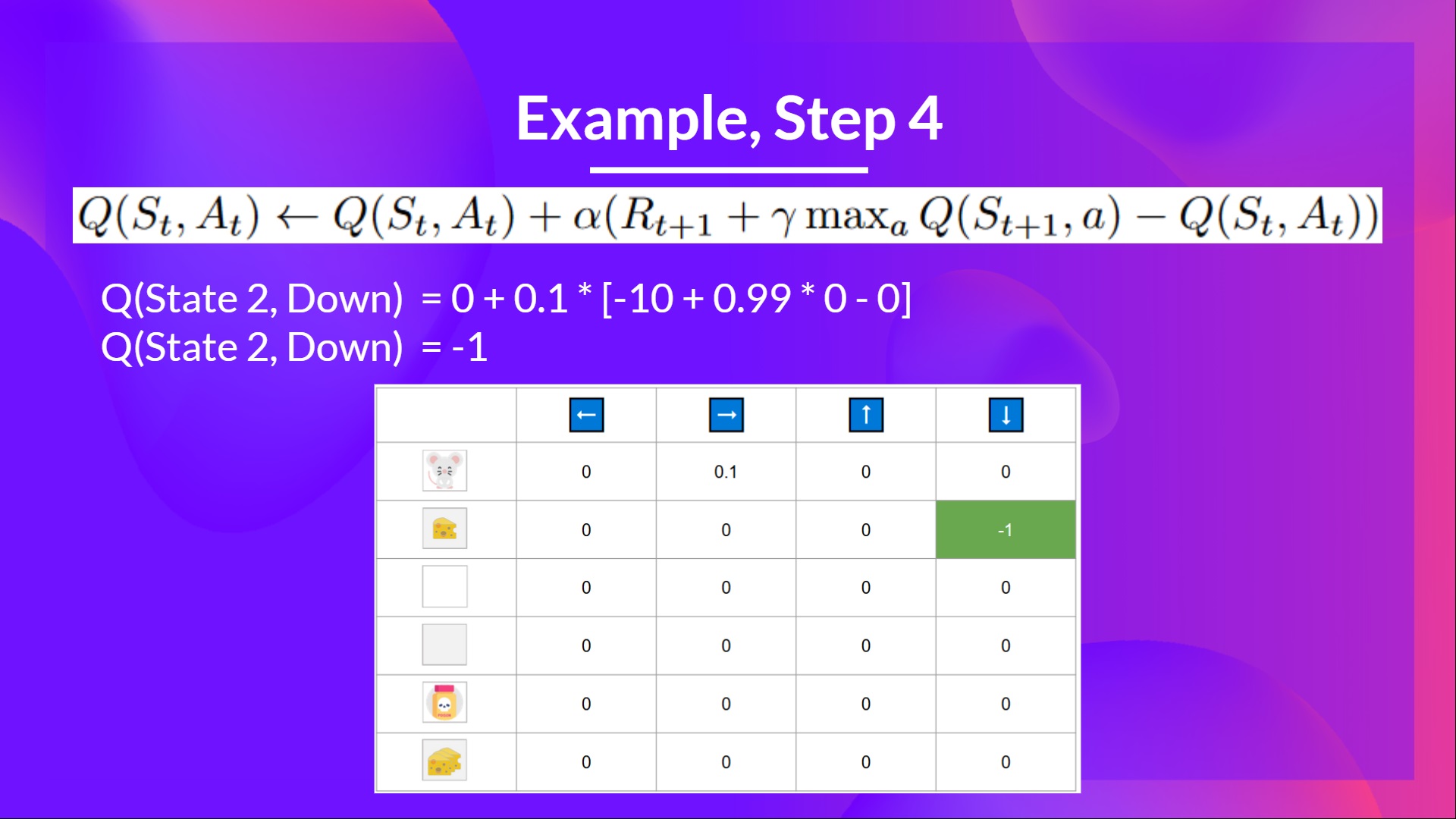

+## Step 4: Update Q(St, At) [[step4-4]]

+
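A self-contained Python sketch of this second update, using the Q-Learning rule with the learning rate (0.1) and gamma (0.99) of the example. The 6 x 4 table size and the state and action indices are hypothetical. Because the poison ends the episode, the sketch treats the next state as terminal, so the TD target is just the reward.

```python
LR = 0.1      # learning rate from the example
GAMMA = 0.99  # discount rate from the example


def q_learning_update(q_table, state, action, reward, next_state, done):
    """Q-Learning update; for a terminal next state the TD target is just r."""
    future = 0.0 if done else GAMMA * max(q_table[next_state])
    td_target = reward + future
    q_table[state][action] += LR * (td_target - q_table[state][action])
    return q_table[state][action]


# Hypothetical 6 x 4 Q-Table.
q_table = [[0.0] * 4 for _ in range(6)]
DOWN = 3                          # illustrative action index
STATE_AFTER_RIGHT, POISON = 1, 4  # illustrative state indices

# Going down leads to the poison: reward -10, and the episode ends.
print(q_learning_update(q_table, STATE_AFTER_RIGHT, DOWN, reward=-10,
                        next_state=POISON, done=True))
```

The update gives 0 + 0.1 * (-10 - 0) = -1, so the table now marks "down" from that state as a bad action.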


+Because we're dead, we start a new episode. But what we see here is that **with two exploration steps, my agent became smarter.**

+

+As we continue exploring and exploiting the environment and updating Q-values using the TD target, **the Q-Table will give us better and better approximations. And thus, at the end of the training, we'll get an estimate of the optimal Q-Function.**

diff --git a/units/en/unit2/q-learning.mdx b/units/en/unit2/q-learning.mdx

new file mode 100644

index 0000000..8447e4c

--- /dev/null

+++ b/units/en/unit2/q-learning.mdx

@@ -0,0 +1,153 @@

+# Introducing Q-Learning [[q-learning]]

+## What is Q-Learning? [[what-is-q-learning]]

+

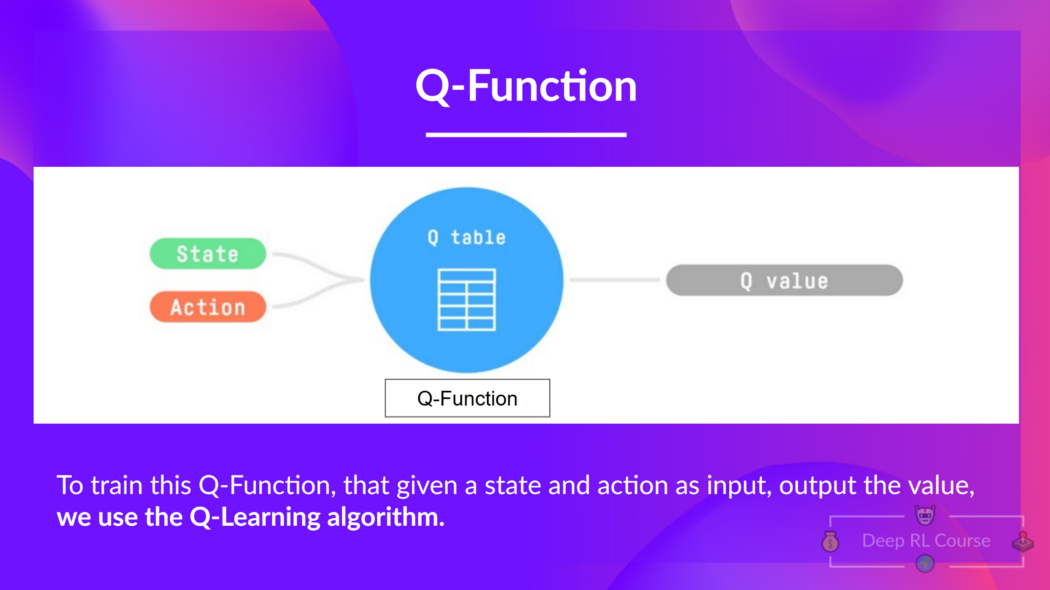

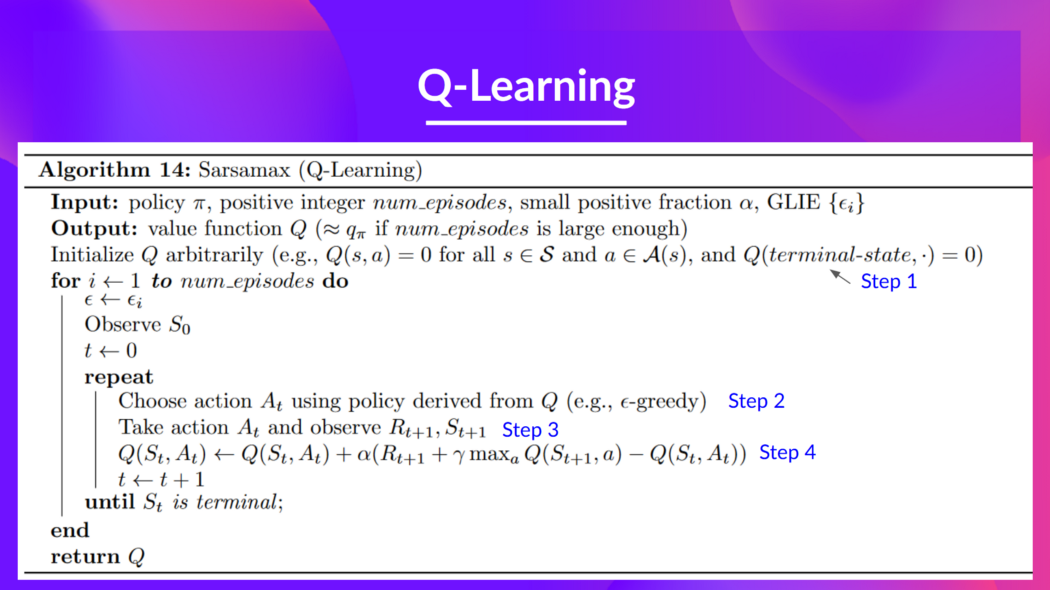

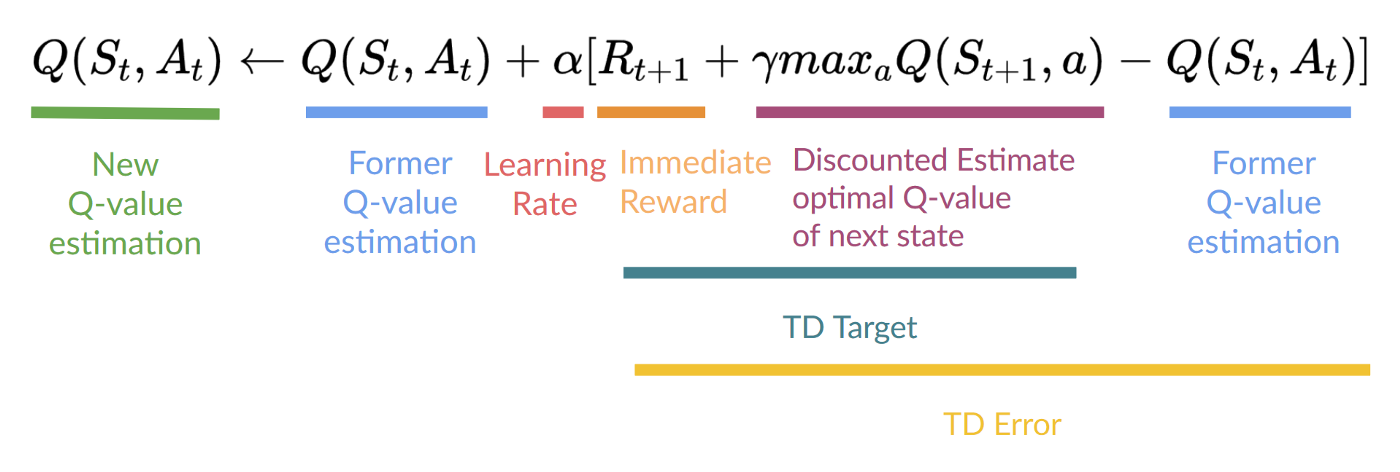

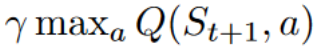

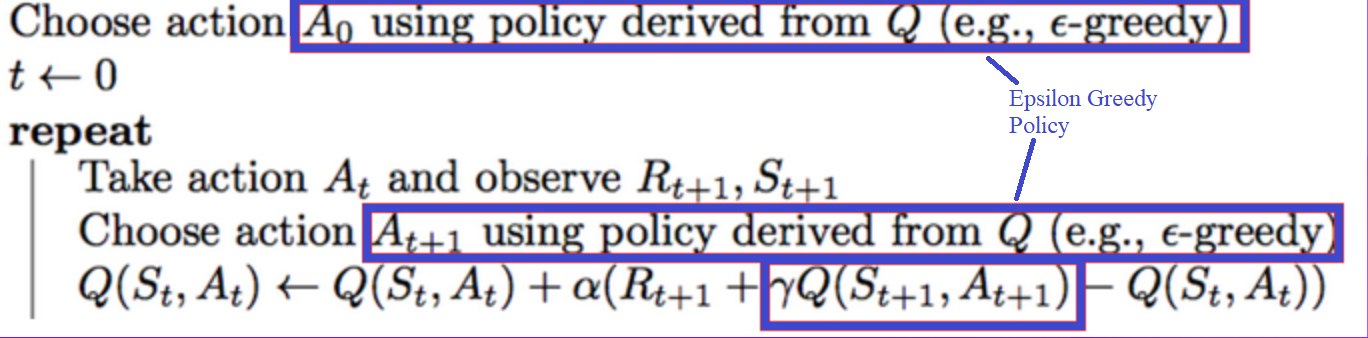

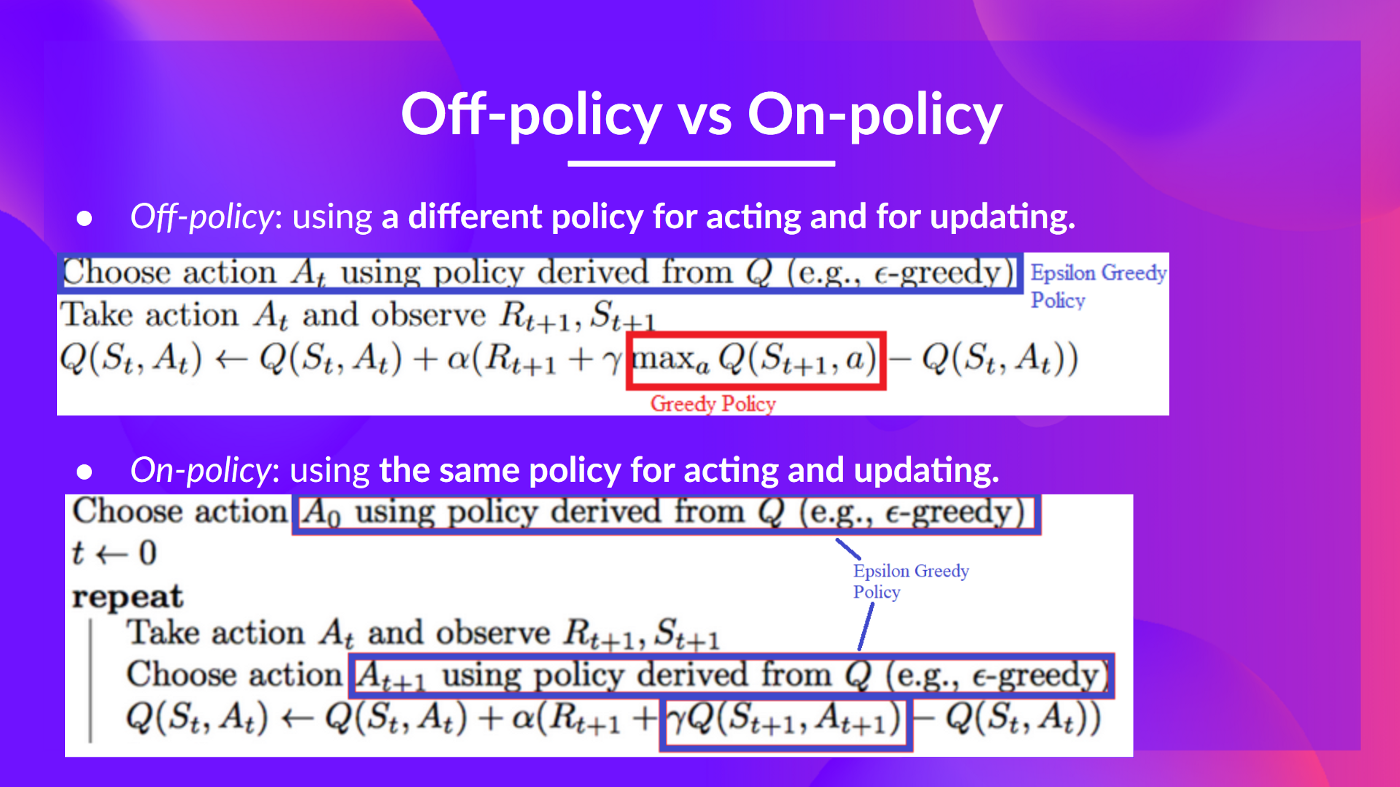

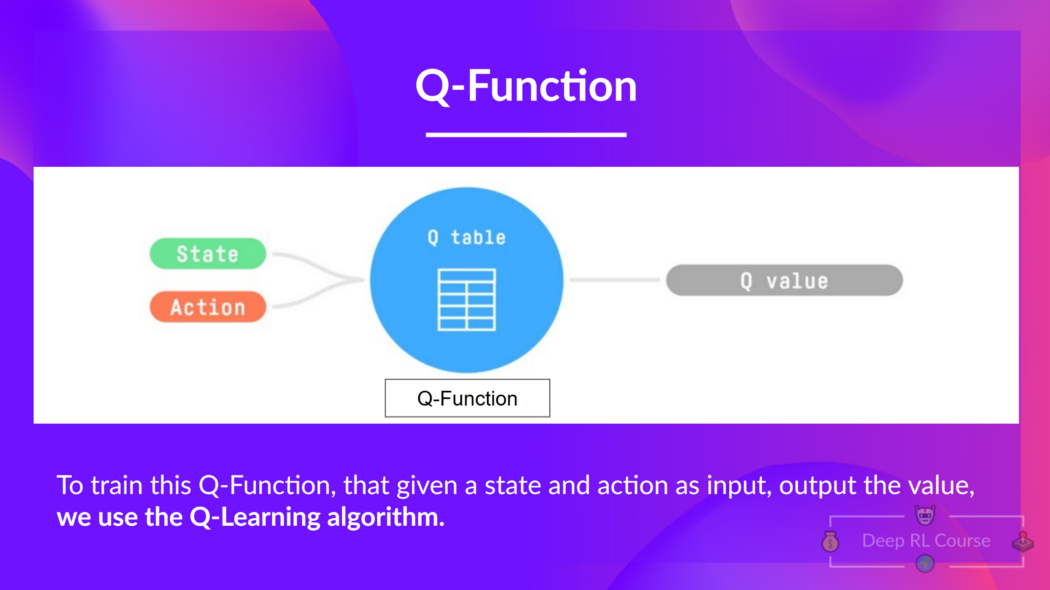

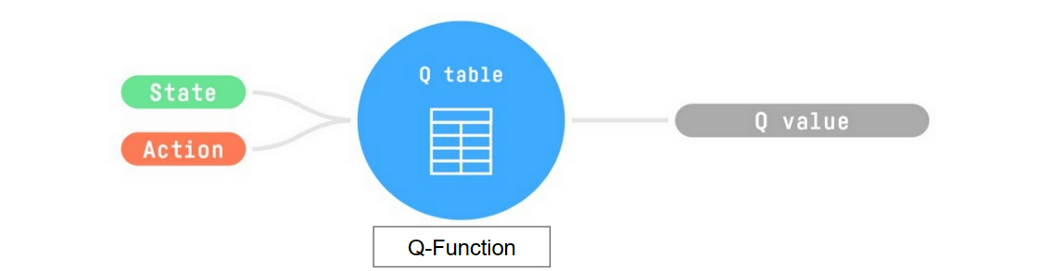

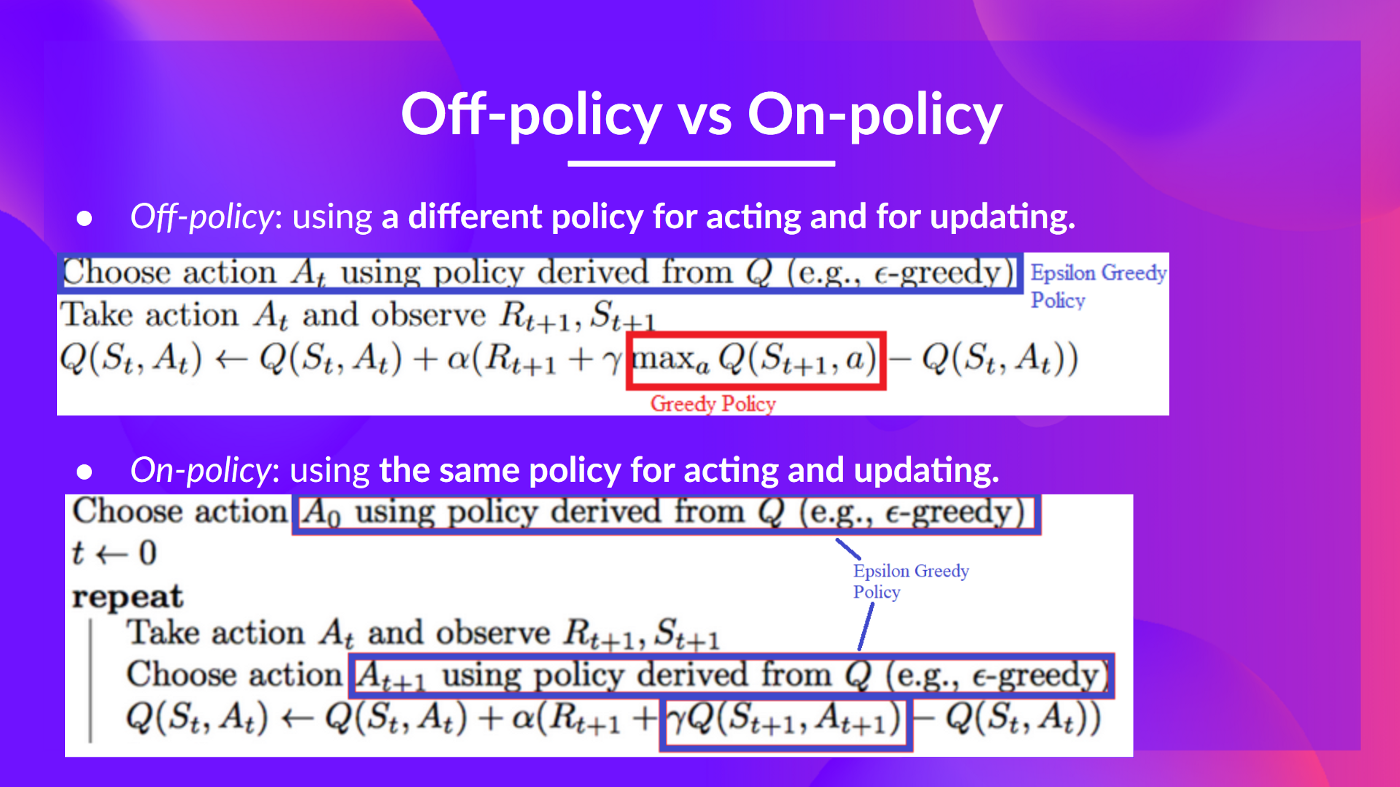

+Q-Learning is an **off-policy value-based method that uses a TD approach to train its action-value function:**

+

+- *Off-policy*: we'll talk about that at the end of this chapter.

+- *Value-based method*: finds the optimal policy indirectly by training a value or action-value function that will tell us **the value of each state or each state-action pair.**

+- *Uses a TD approach:* **updates its action-value function at each step instead of at the end of the episode.**

+

+**Q-Learning is the algorithm we use to train our Q-Function**, an **action-value function** that determines the value of being at a particular state and taking a specific action at that state.


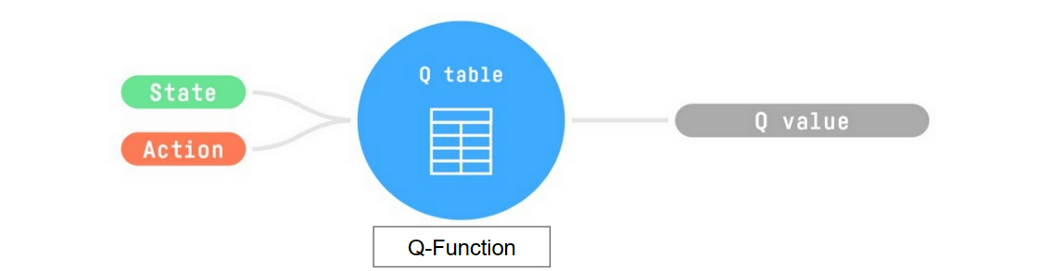

+Given a state and action, our Q-function outputs a state-action value (also called a Q-value).

+

+

+The **Q comes from "the Quality" of that action at that state.**

+

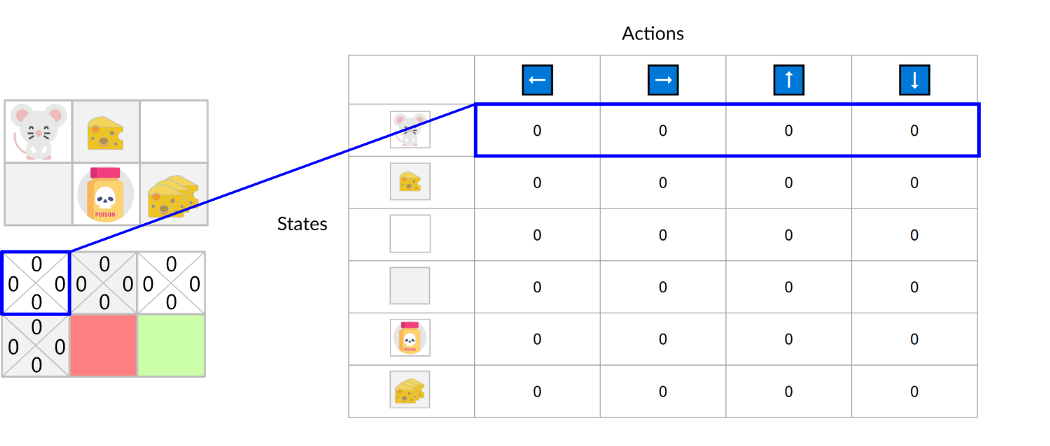

+Internally, our Q-function has **a Q-table, a table where each cell corresponds to a state-action pair value.** Think of this Q-table as **the memory or cheat sheet of our Q-function.**

+

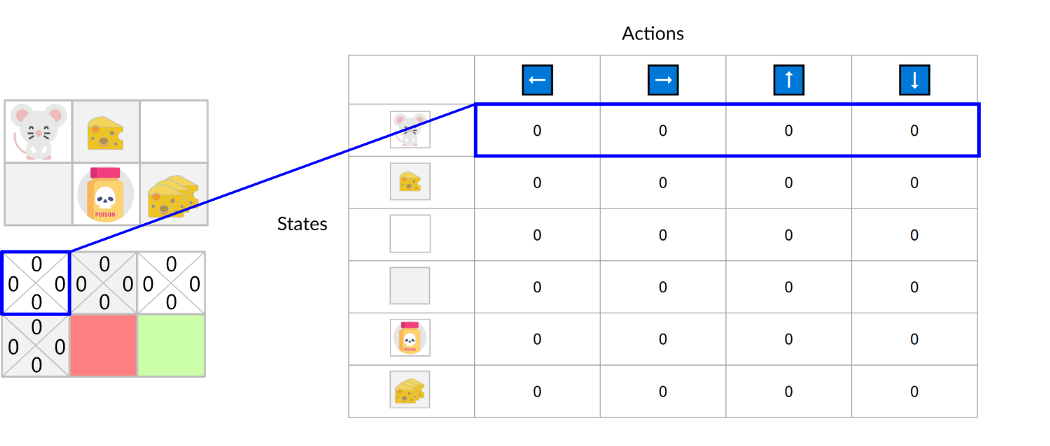

+If we take this maze example:


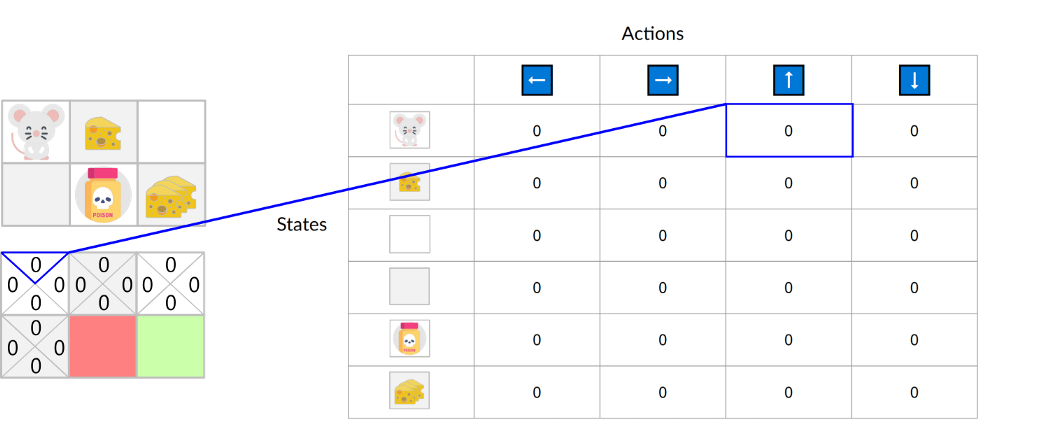


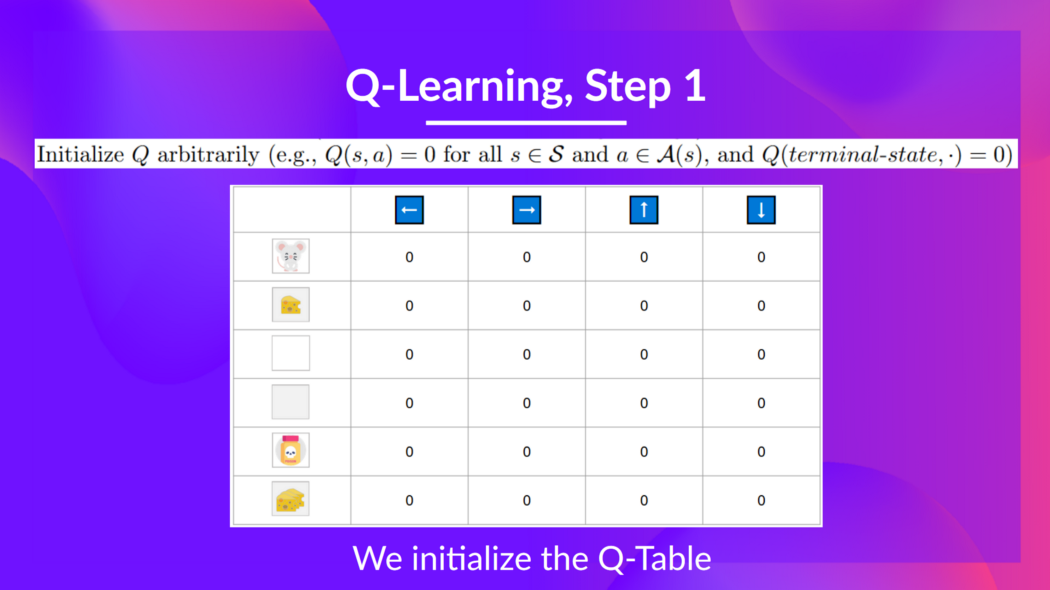

+The Q-Table is initialized, which is why all values are 0. This table **contains, for each state, the four state-action values** (one per possible action).


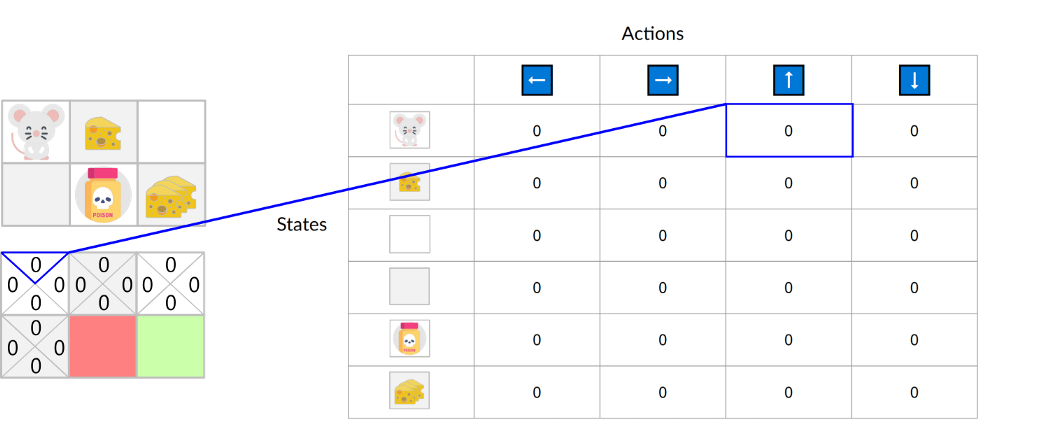


+Here we see that the **state-action value of the initial state and going up is 0:**


+Therefore, the Q-function contains a Q-table **that holds the value of each state-action pair.** Given a state and action, **our Q-function looks up the corresponding value in its Q-table.**
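A minimal sketch of this lookup, assuming a small maze with 4 states and the four actions up, down, left, right (the sizes and the action order are assumptions for illustration). The Q-table is a NumPy array with one row per state and one column per action, and the Q-function simply indexes into it:

```python
import numpy as np

# Hypothetical sizes: 4 states and 4 actions.
ACTIONS = ["up", "down", "left", "right"]
n_states, n_actions = 4, len(ACTIONS)

# One row per state, one column per action; all values start at 0.
q_table = np.zeros((n_states, n_actions))

def q_function(state: int, action: str) -> float:
    """Return Q(state, action) by looking it up in the Q-table."""
    return float(q_table[state, ACTIONS.index(action)])

print(q_function(0, "up"))  # 0.0
```

A dictionary keyed by `(state, action)` would work just as well; an array is convenient when states and actions are small integers.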


+Given a state and action pair, our Q-function looks up the state-action pair value (the Q-value) in its Q-table.

+

+

+If we recap, *Q-Learning* **is the RL algorithm that:**

+

+- Trains a *Q-function* (an **action-value function**), which internally is a *Q-table* **that contains all the state-action pair values.**

+- Given a state and action, our Q-function **looks up the corresponding value in its Q-table.**

+- When the training is done, **we have an optimal Q-function, which means we have an optimal Q-table.**

+- And if we **have an optimal Q-function**, we **have an optimal policy** since we **know, for each state, the best action to take.**
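Reading the policy off a Q-table amounts to taking, for each state, the action with the highest value in that state's row. A sketch with made-up Q-values (the numbers are illustrative, not taken from the maze above):

```python
import numpy as np

# Illustrative Q-table: 3 states x 4 actions (up, down, left, right).
q_table = np.array([
    [0.0, -1.0, 0.5, 2.0],
    [1.0, 0.0, 0.0, 0.0],
    [-3.0, 4.0, 0.0, 1.0],
])

def greedy_policy(q_table: np.ndarray, state: int) -> int:
    """Return the index of the action with the highest Q-value in `state`."""
    return int(np.argmax(q_table[state]))

print([greedy_policy(q_table, s) for s in range(len(q_table))])  # [3, 0, 1]
```

`np.argmax` returns the first index in case of ties, so with an all-zero table this policy would always pick the first action.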


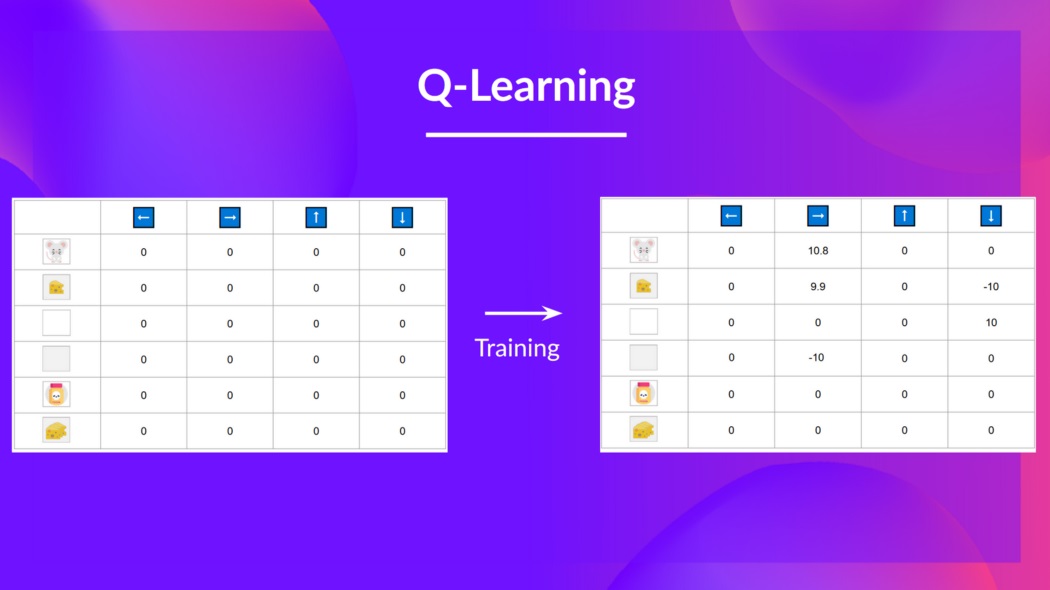

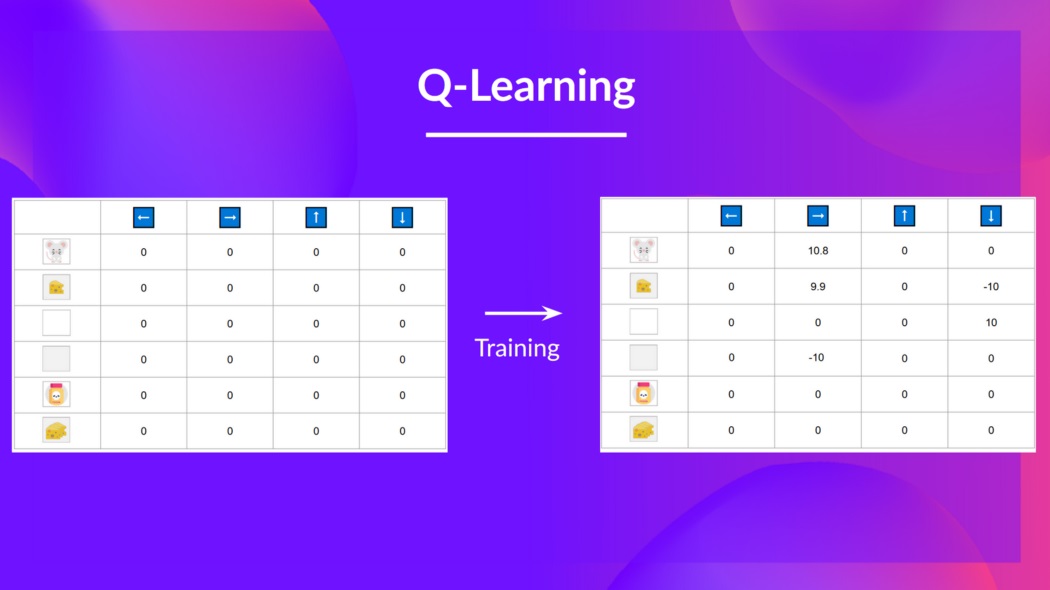

+But at the beginning, **our Q-Table is useless since it gives arbitrary values for each state-action pair** (most of the time, we initialize the Q-Table with 0s). As we **explore the environment and update our Q-Table, it will give us better and better approximations.**


+We see here that with training, our Q-Table gets better: thanks to it, we can know the value of each state-action pair.

+

+

+So now that we understand what Q-Learning, Q-Function, and Q-Table are, **let's dive deeper into the Q-Learning algorithm**.

+

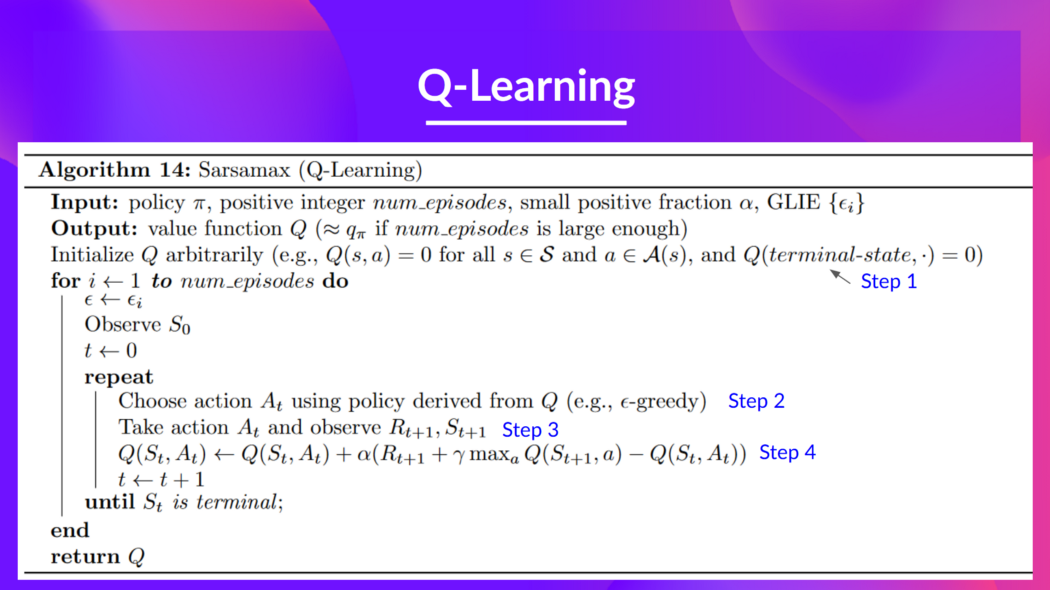

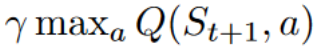

+## The Q-Learning algorithm [[q-learning-algo]]

+

+This is the Q-Learning pseudocode; let's study each part and **see how it works with a simple example before implementing it.** Don't be intimidated by it; it's simpler than it looks! We'll go over each step.


+### Step 1: We initialize the Q-Table [[step1]]


+We need to initialize the Q-Table for each state-action pair. **Most of the time, we initialize with values of 0.**

+

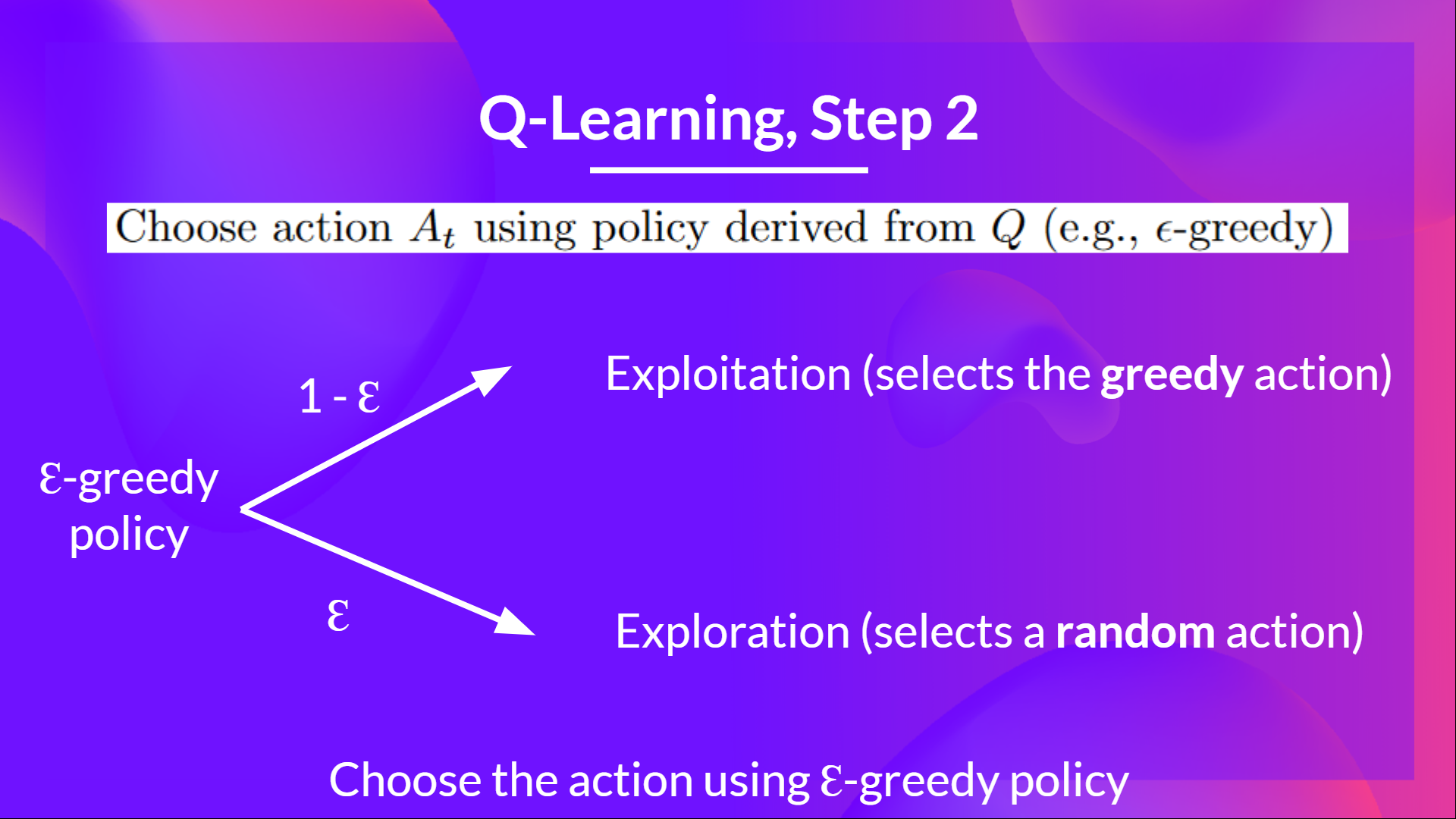

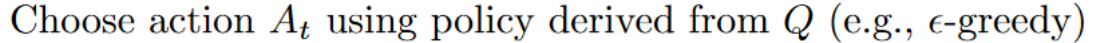

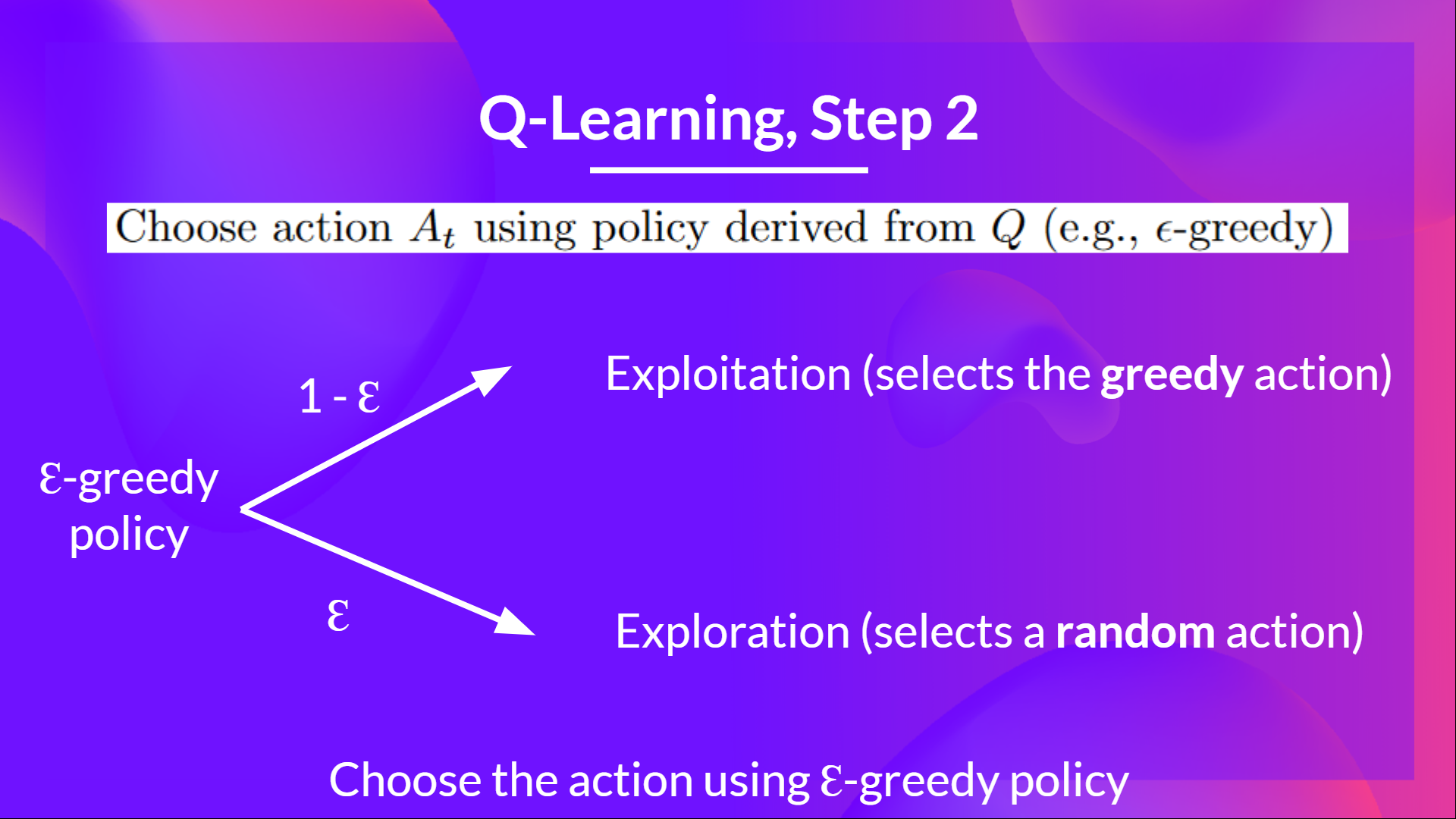

+### Step 2: Choose action using Epsilon Greedy Strategy [[step2]]


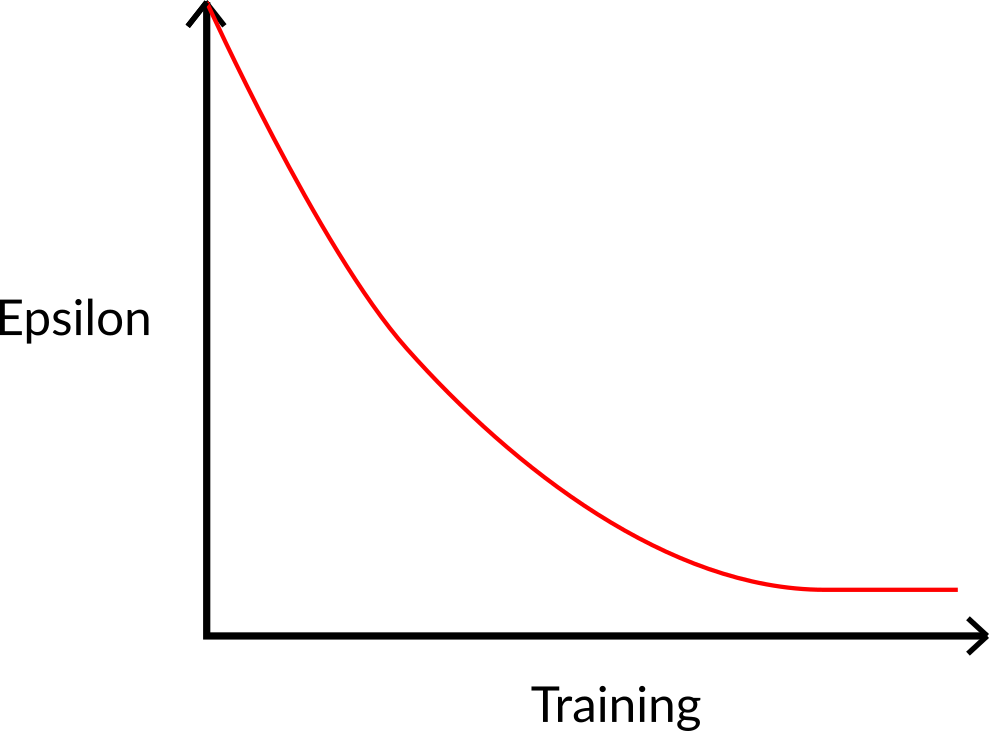

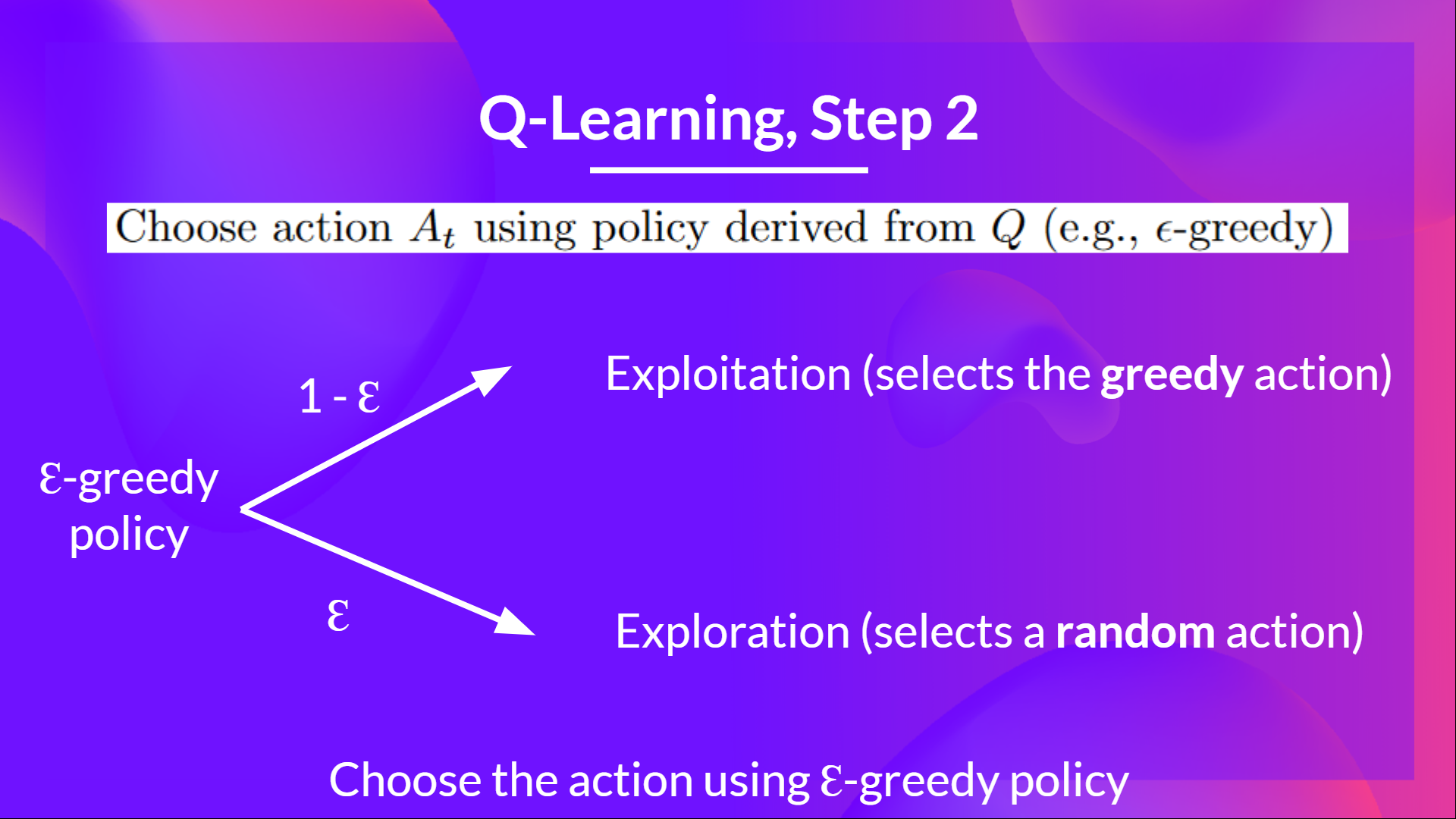

+Epsilon Greedy Strategy is a policy that handles the exploration/exploitation trade-off.

+

+The idea is that we define epsilon ɛ, starting at ɛ = 1.0:

+

+- *With probability 1 - ɛ*: we do **exploitation** (our agent selects the action with the highest state-action pair value).

+- *With probability ɛ*: **we do exploration** (we try a random action).

+

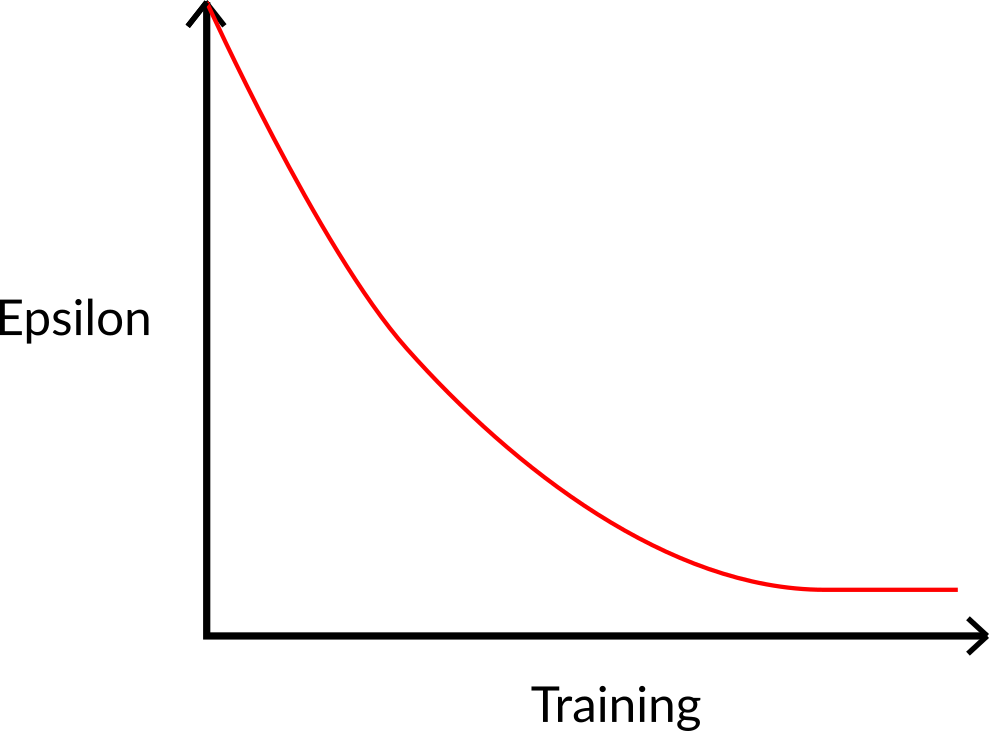

+At the beginning of the training, **the probability of doing exploration will be huge since ɛ is very high, so most of the time, we'll explore.** But as the training goes on, and consequently our **Q-Table gets better and better in its estimations, we progressively reduce the epsilon value** since we will need less and less exploration and more exploitation.
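A sketch of epsilon-greedy action selection plus a decaying ɛ. The exponential schedule and its parameters (`min_epsilon`, `max_epsilon`, `decay_rate`) are assumptions for illustration; this section only says that ɛ starts at 1.0 and is progressively reduced:

```python
import numpy as np

rng = np.random.default_rng(seed=0)

def epsilon_greedy_policy(q_table: np.ndarray, state: int, epsilon: float) -> int:
    """Explore with probability epsilon, otherwise exploit the Q-table."""
    if rng.random() < epsilon:
        return int(rng.integers(q_table.shape[1]))  # exploration: random action
    return int(np.argmax(q_table[state]))  # exploitation: greedy action

def decayed_epsilon(episode: int, min_epsilon: float = 0.05,
                    max_epsilon: float = 1.0, decay_rate: float = 0.005) -> float:
    """Hypothetical schedule: starts at max_epsilon and decays toward min_epsilon."""
    return min_epsilon + (max_epsilon - min_epsilon) * np.exp(-decay_rate * episode)

q_table = np.array([[0.0, 1.0, 0.0, 0.0]])
print(epsilon_greedy_policy(q_table, state=0, epsilon=0.0))  # 1
```

With `epsilon=0.0` the random draw can never fall below ɛ, so the call above always exploits and returns the argmax action.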


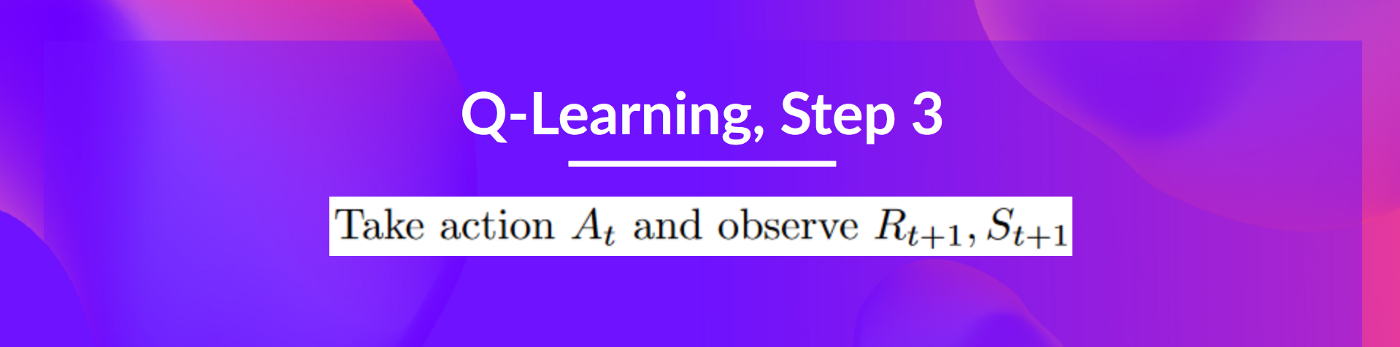

+### Step 3: Perform action At, get reward Rt+1 and next state St+1 [[step3]]


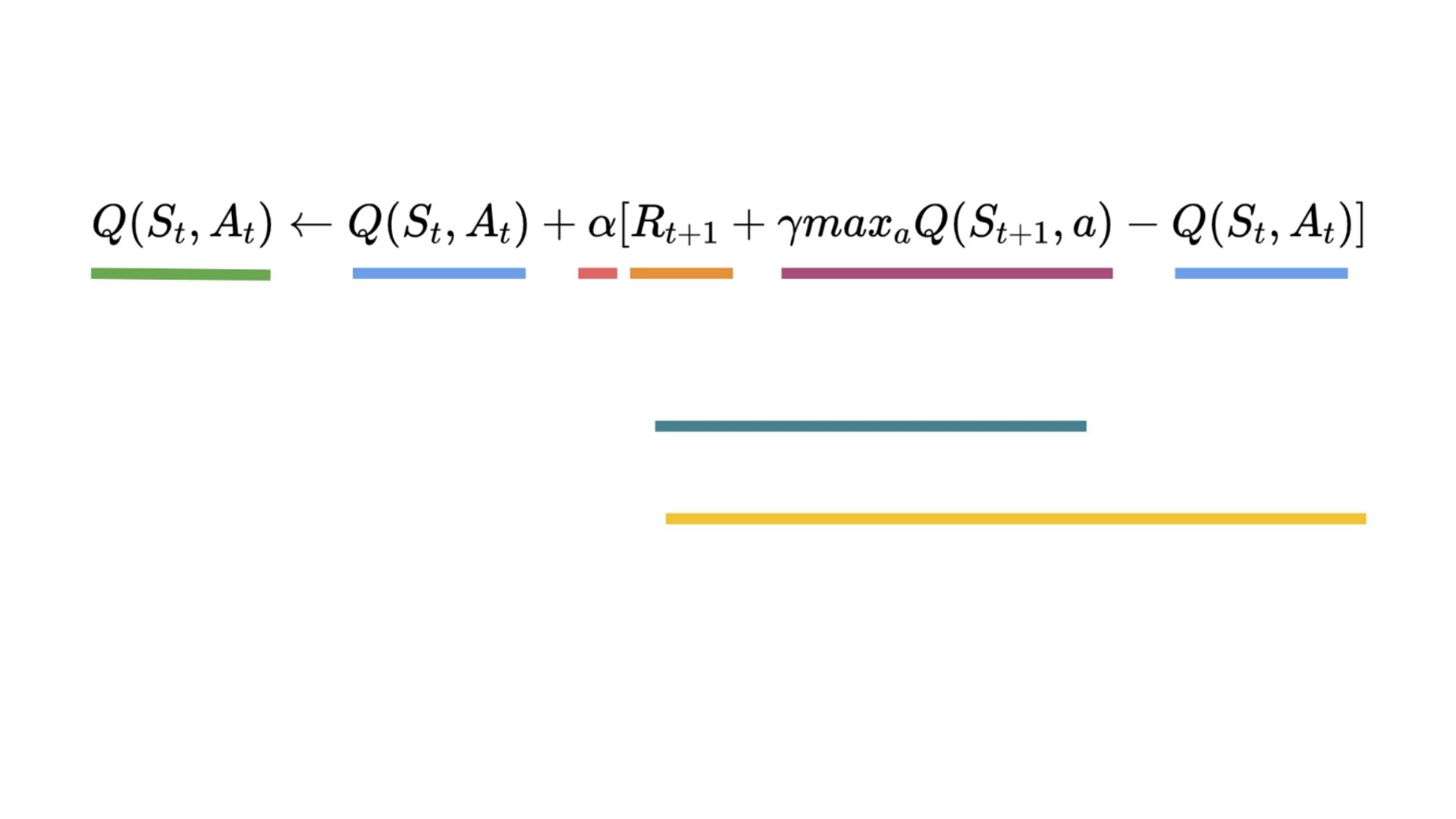

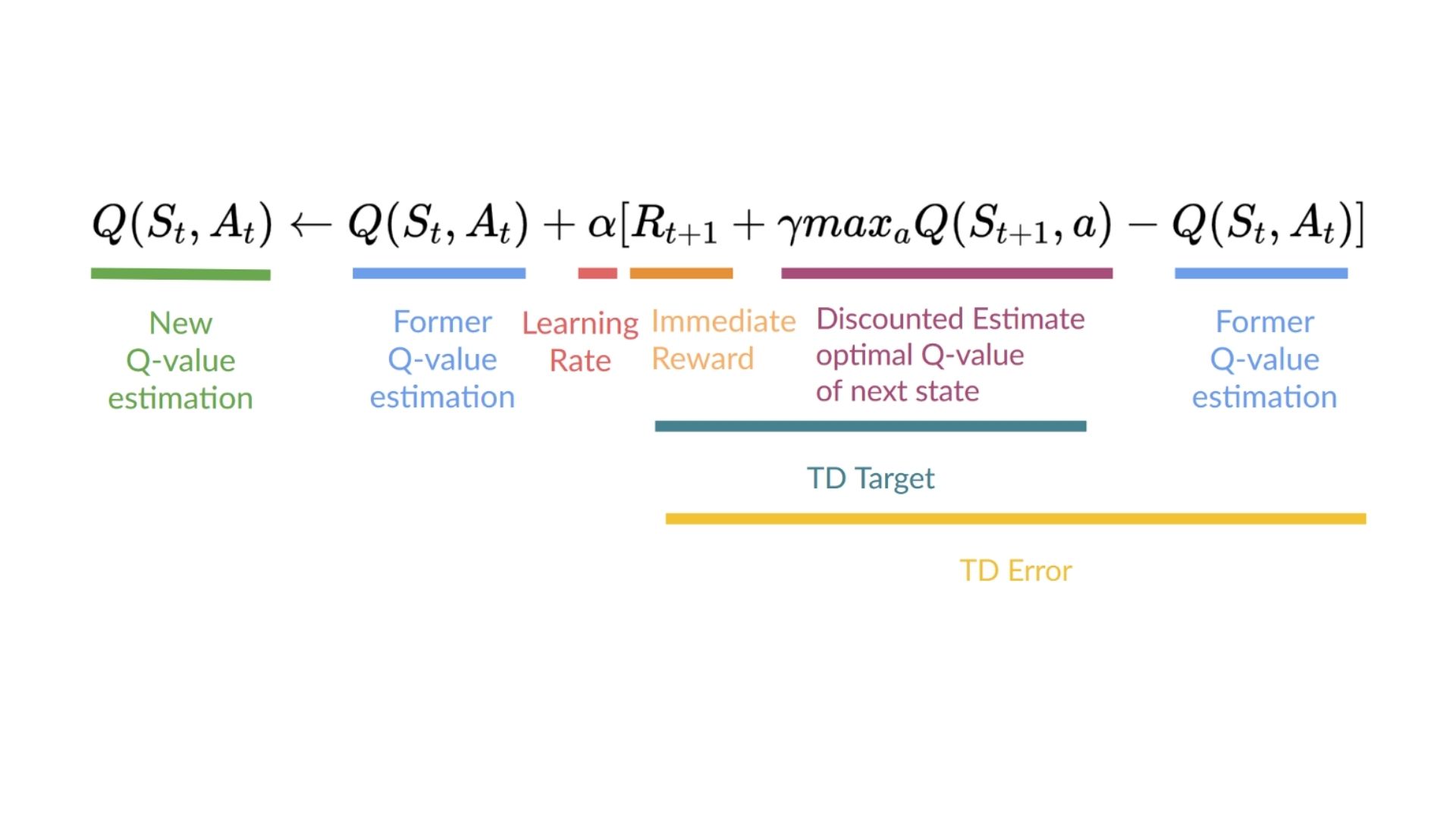

+### Step 4: Update Q(St, At) [[step4]]

+

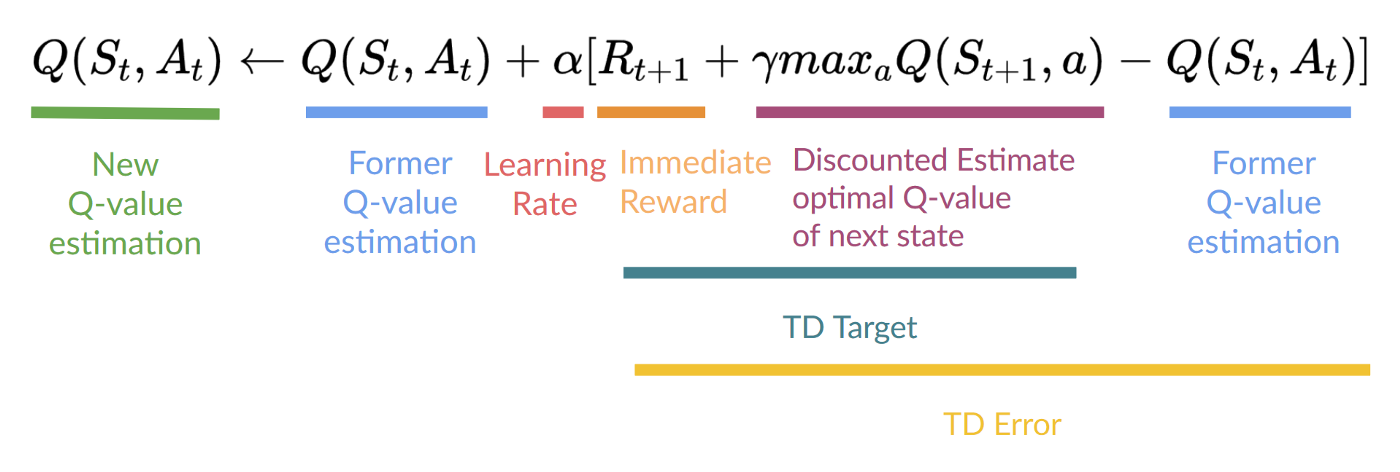

+Remember that in TD Learning, we update our policy or value function (depending on the RL method we choose) **after one step of the interaction.**

+

+To produce our TD target, **we use the immediate reward \\(R_{t+1}\\) plus the discounted value of the best state-action pair at the next state** (we call this bootstrapping).


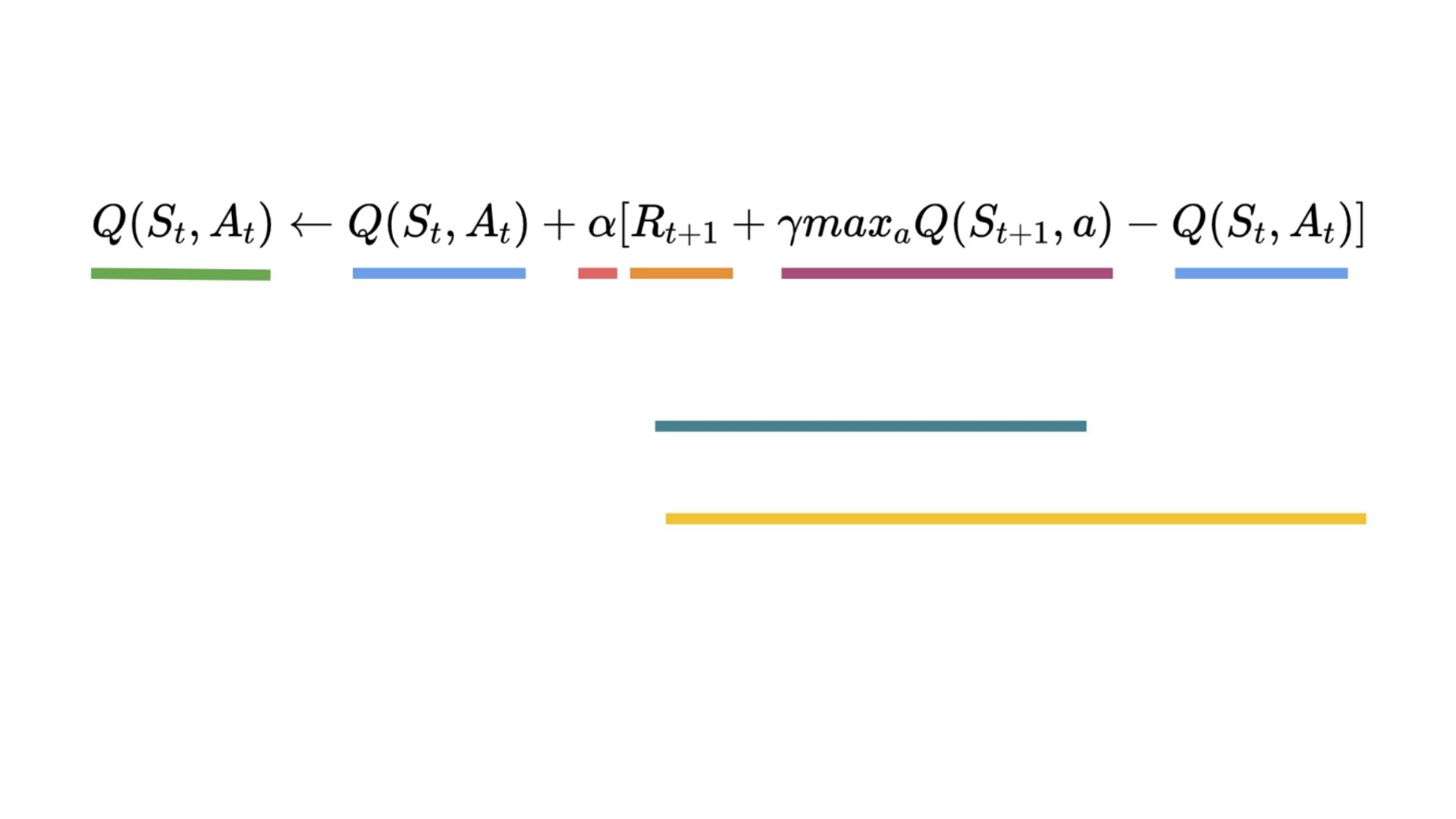

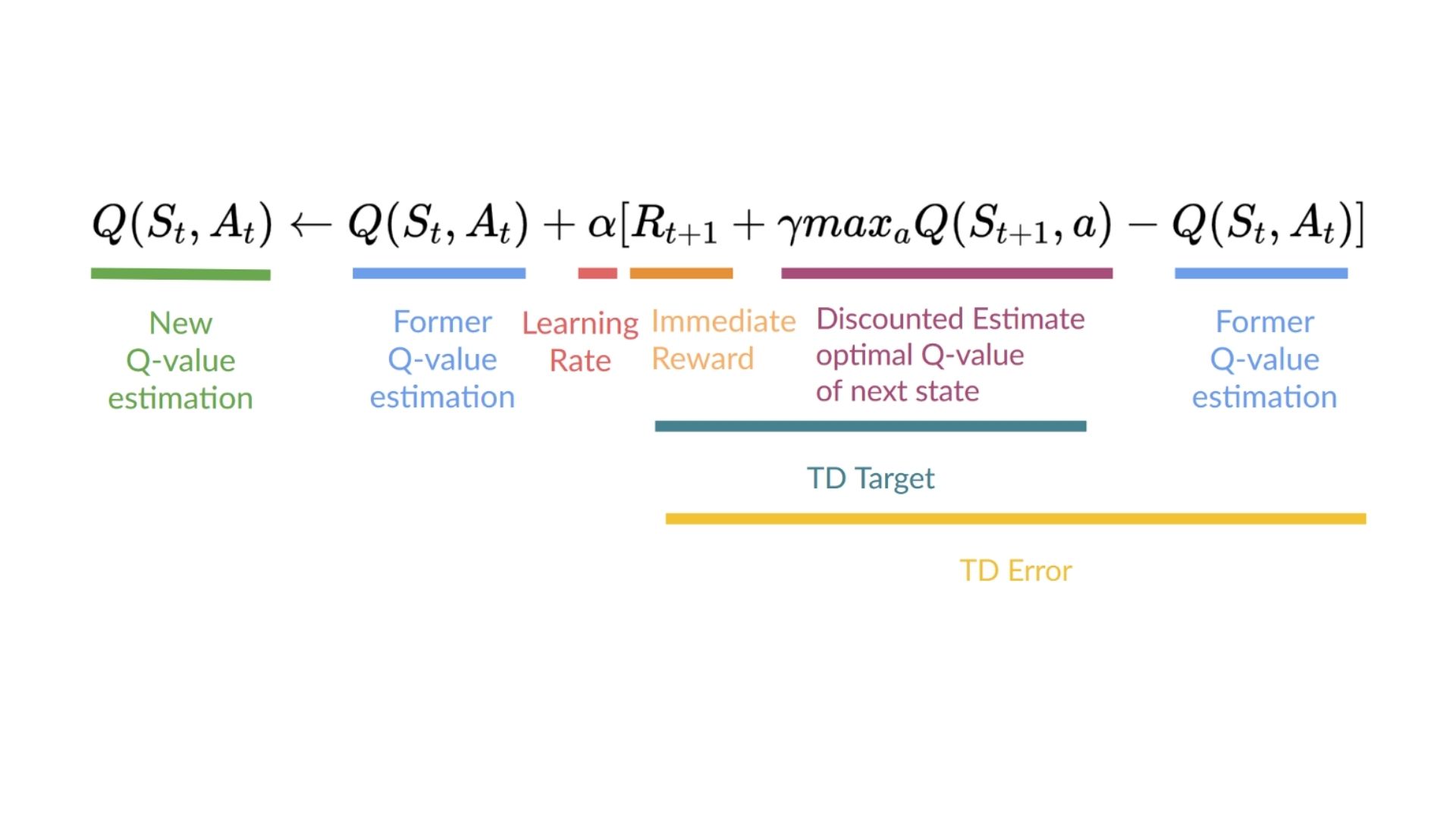

+Therefore, our \\(Q(S_t, A_t)\\) **update formula goes like this:**
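Written out in the notation of this section, with learning rate \\(\alpha\\) and discount rate \\(\gamma\\), this is the standard Q-Learning update:

$$
Q(S_t, A_t) \leftarrow Q(S_t, A_t) + \alpha [R_{t+1} + \gamma \max_a Q(S_{t+1}, a) - Q(S_t, A_t)]
$$

The bracketed term is the TD error: the TD target \\(R_{t+1} + \gamma \max_a Q(S_{t+1}, a)\\) minus the current estimate.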


+

+It means that to update our \\(Q(S_t, A_t)\\):

+

+- We need \\(S_t, A_t, R_{t+1}, S_{t+1}\\).

+- To update our Q-value at a given state-action pair, we use the TD target.

+

+How do we form the TD target?

+1. We obtain the reward \\(R_{t+1}\\) after taking the action.

+2. To get the **best next-state-action pair value**, we use a greedy policy to select the next best action. Note that this is not an epsilon-greedy policy: it always takes the action with the highest state-action value.

+

+Once this Q-value is updated, we start in the new state and select our action **using our epsilon-greedy policy again.**

+

+**This is why we say that Q-Learning is an off-policy algorithm.**
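A minimal sketch of this update step with NumPy, assuming the Q-table is a 2D array indexed by `[state, action]` and using illustrative defaults (`learning_rate=0.1`, `gamma=0.99`) that this section does not specify. It also assumes a `terminated` flag that drops the bootstrap term when the episode ends. The greedy `max` in the target is the off-policy part; the action itself would still be chosen epsilon-greedily:

```python
import numpy as np

def q_learning_update(q_table: np.ndarray, state: int, action: int, reward: float,
                      next_state: int, terminated: bool,
                      learning_rate: float = 0.1, gamma: float = 0.99) -> None:
    """Apply one Q-Learning update to q_table in place."""
    # Greedy (not epsilon-greedy) choice of the best next action: max_a Q(S_{t+1}, a).
    best_next_value = 0.0 if terminated else np.max(q_table[next_state])
    td_target = reward + gamma * best_next_value
    td_error = td_target - q_table[state, action]
    q_table[state, action] += learning_rate * td_error

q_table = np.zeros((2, 2))
q_table[1] = [0.0, 5.0]  # in state 1, action 1 currently looks best
q_learning_update(q_table, state=0, action=0, reward=1.0, next_state=1, terminated=False)
print(q_table[0, 0])
```

Here the target is 1 + 0.99 * 5, so Q(0, 0) moves a tenth of the way from 0 toward it.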

+

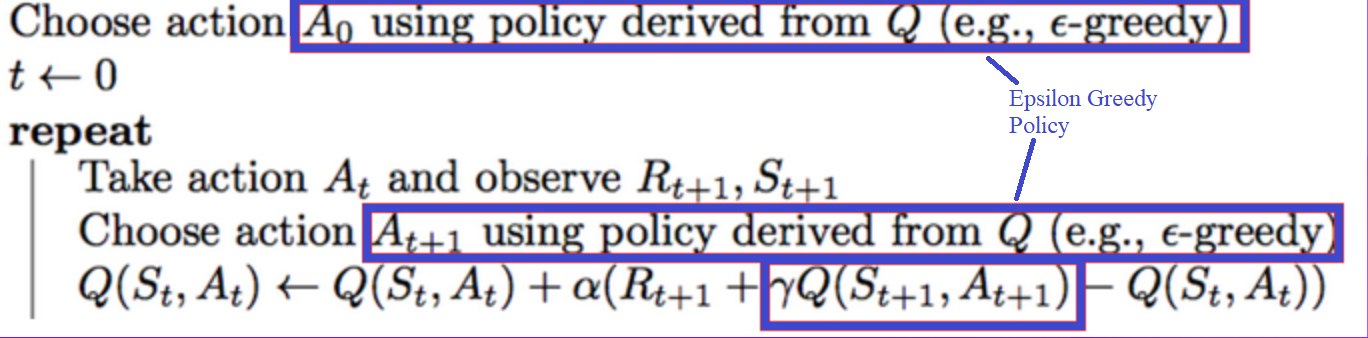

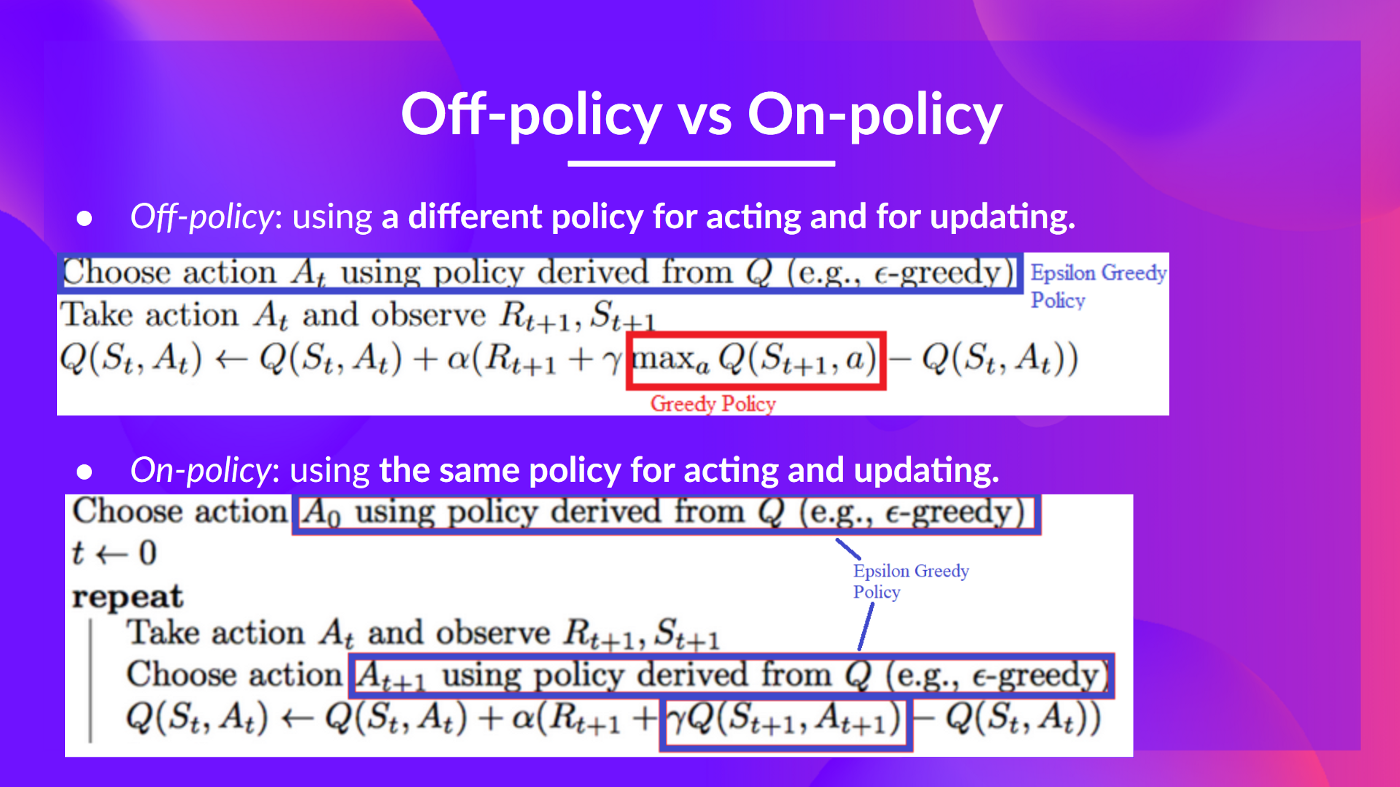

+## Off-policy vs On-policy [[off-vs-on]]

+

+The difference is subtle:

+

+- *Off-policy*: using **a different policy for acting and updating.**

+

+For instance, in Q-Learning, the epsilon-greedy policy (acting policy) is different from the greedy policy that is **used to select the best next-state action value to update our Q-value (updating policy).**


+Acting policy

+

+

+This acting policy is different from the policy we use during the training (updating) part:


+Updating policy

+

+

+- *On-policy:* using the **same policy for acting and updating.**

+

+For instance, with Sarsa, another value-based algorithm, **the epsilon-greedy policy (not a greedy policy) selects the next state-action pair used in the update.**


+Sarsa
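To make the contrast concrete, here is a sketch of the two TD targets side by side, using a made-up 2x2 Q-table and an assumed `gamma=0.99`. Q-Learning bootstraps from the best next action; Sarsa bootstraps from the next action the epsilon-greedy policy actually picked (here we simply pretend it explored and picked action 0):

```python
import numpy as np

def q_learning_target(q_table: np.ndarray, reward: float, next_state: int,
                      gamma: float = 0.99) -> float:
    """Off-policy: bootstrap from the greedy (max) next action."""
    return reward + gamma * float(np.max(q_table[next_state]))

def sarsa_target(q_table: np.ndarray, reward: float, next_state: int,
                 next_action: int, gamma: float = 0.99) -> float:
    """On-policy: bootstrap from the next action the acting policy chose."""
    return reward + gamma * float(q_table[next_state, next_action])

q_table = np.array([[0.0, 0.0], [2.0, 10.0]])
print(q_learning_target(q_table, reward=0.0, next_state=1))  # bootstraps from Q(1, 1) = 10.0
print(sarsa_target(q_table, reward=0.0, next_state=1, next_action=0))  # bootstraps from Q(1, 0) = 2.0
```

The update rule around the target is the same in both algorithms; only the choice of next action inside the target differs.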


diff --git a/units/en/unit2/quiz1.mdx b/units/en/unit2/quiz1.mdx

new file mode 100644

index 0000000..cc5692d

--- /dev/null

+++ b/units/en/unit2/quiz1.mdx

@@ -0,0 +1,105 @@

+# First Quiz [[quiz1]]

+

+The best way to learn and [to avoid the illusion of competence](https://www.coursera.org/lecture/learning-how-to-learn/illusions-of-competence-BuFzf) **is to test yourself.** This will help you to find **where you need to reinforce your knowledge**.

+

+

+### Q1: What are the two main approaches to find an optimal policy?

+

+

+

+

+

+### Q2: What is the Bellman Equation?

+

+

+


+Solution

+

+**The Bellman equation is a recursive equation** that works like this: instead of calculating the return from scratch for each state, we can consider the value of any state as:

+

+\\(R_{t+1} + \gamma * V(S_{t+1})\\)

+The immediate reward + the discounted value of the state that follows

+

+

+

+### Q3: Define each part of the Bellman Equation

+


+

+

+

+

+

+Solution

+


+

+

+

+

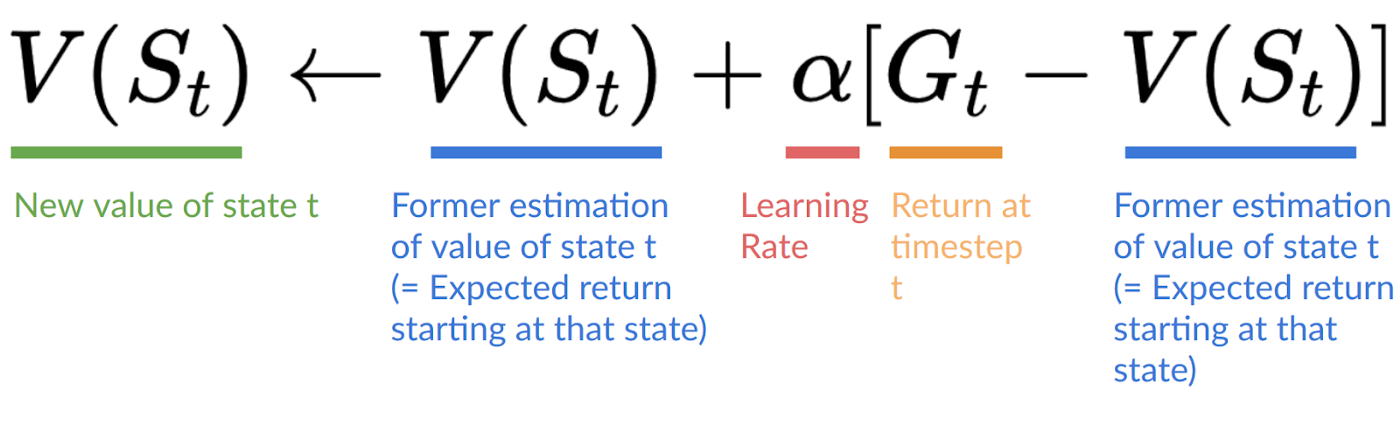

+### Q4: What is the difference between Monte Carlo and Temporal Difference learning methods?

+

+

+

+### Q5: Define each part of the Temporal Difference learning formula

+


+

+

+

+Solution

+


+

+

+

+

+

+### Q6: Define each part of the Monte Carlo learning formula

+


+

+

+

+Solution

+


+

+

+

+

+Congrats on finishing this quiz 🥳! If you missed some elements, take time to read the chapter again to reinforce (😏) your knowledge.

diff --git a/units/en/unit2/quiz2.mdx b/units/en/unit2/quiz2.mdx

new file mode 100644

index 0000000..9d96d74

--- /dev/null

+++ b/units/en/unit2/quiz2.mdx

@@ -0,0 +1,97 @@

+# Second Quiz [[quiz2]]

+

+The best way to learn and [to avoid the illusion of competence](https://www.coursera.org/lecture/learning-how-to-learn/illusions-of-competence-BuFzf) **is to test yourself.** This will help you to find **where you need to reinforce your knowledge**.

+

+

+### Q1: What is Q-Learning?

+

+

+

+

+### Q2: What is a Q-Table?

+

+

+

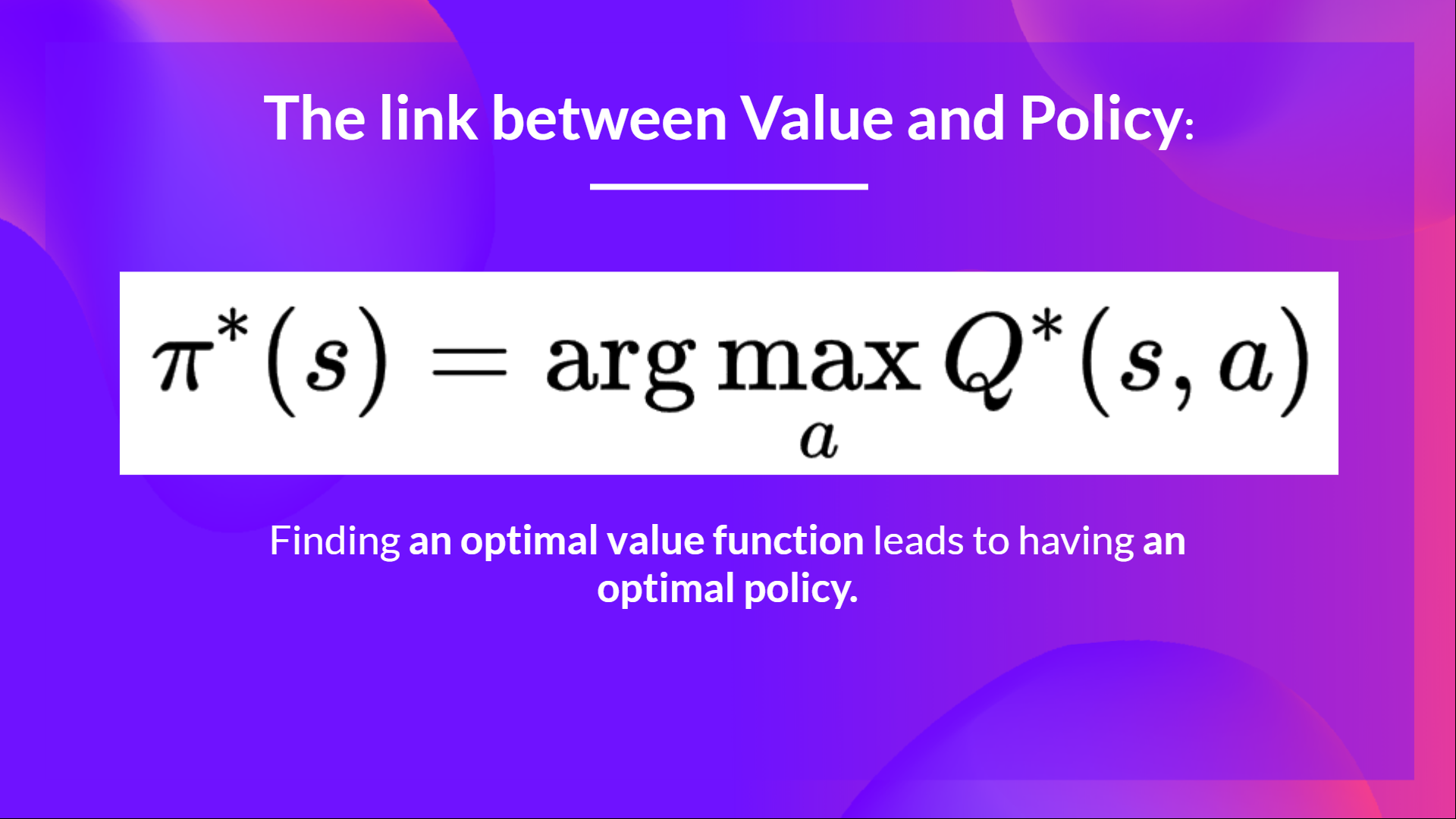

+### Q3: Why does having an optimal Q-function Q* mean we have an optimal policy?

+

+

+Solution

+

+Because with an optimal Q-function, we know, for each state, the best action to take.

+


+

+

+

+

+### Q4: Can you explain what the Epsilon-Greedy Strategy is?

+

+

+Solution

+Epsilon Greedy Strategy is a policy that handles the exploration/exploitation trade-off.

+

+The idea is that we define epsilon ɛ, starting at ɛ = 1.0:

+

+- With *probability 1 - ɛ*: we do exploitation (our agent selects the action with the highest state-action pair value).

+- With *probability ɛ*: we do exploration (we try a random action).

+


+

+

+

+

+

+

+### Q5: How do we update the Q-value of a state-action pair?


+

+

+

+Solution


+

+

+

+

+

+

+### Q6: What's the difference between on-policy and off-policy?

+

+

+Solution


+

+

+Congrats on finishing this quiz 🥳! If you missed some elements, take time to read the chapter again to reinforce (😏) your knowledge.

diff --git a/units/en/unit2/summary1.mdx b/units/en/unit2/summary1.mdx

new file mode 100644

index 0000000..3a19d86

--- /dev/null

+++ b/units/en/unit2/summary1.mdx

@@ -0,0 +1,17 @@

+# Summary [[summary1]]

+

+Before diving into Q-Learning, let's summarize what we just learned.

+

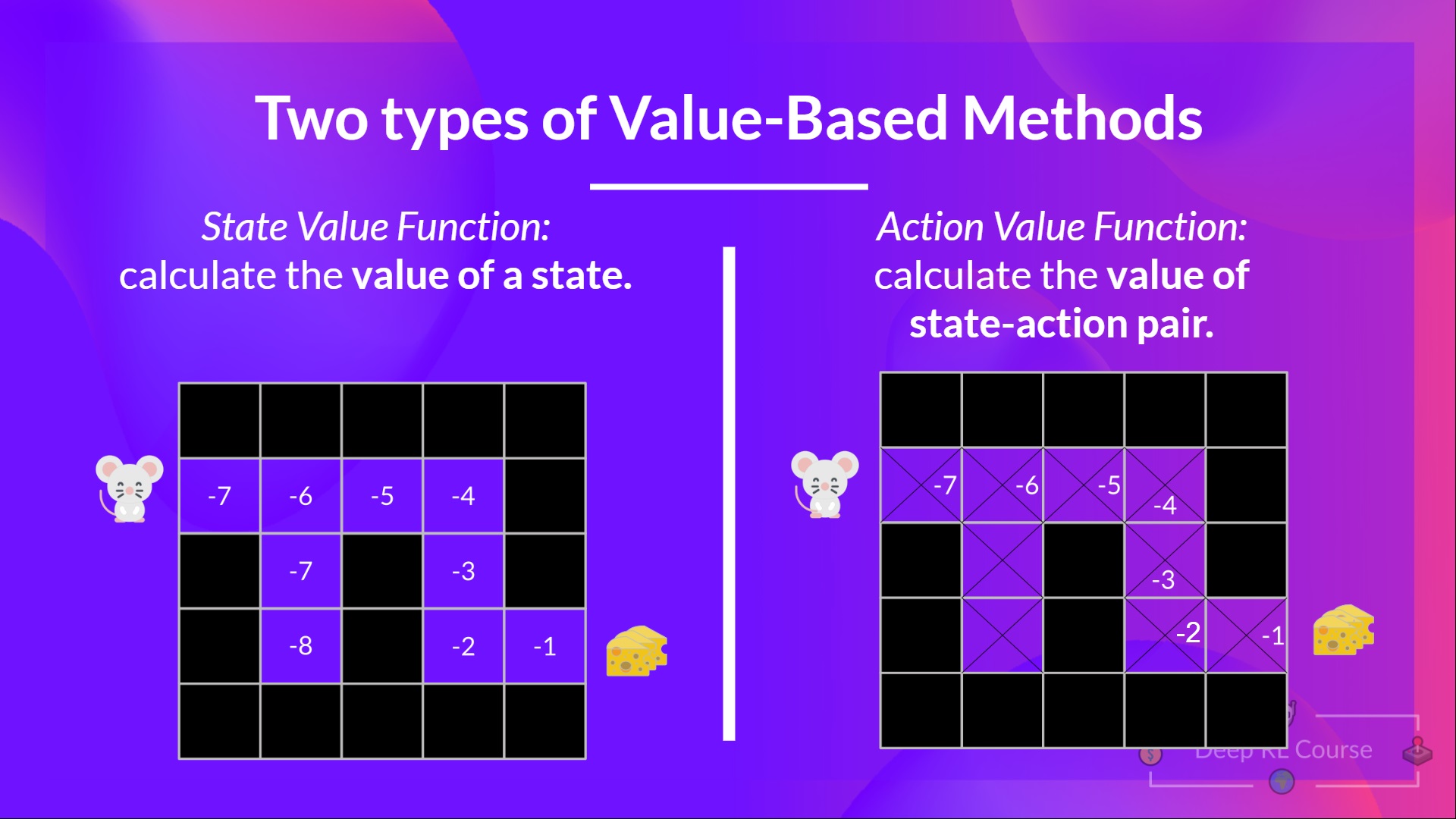

+We have two types of value-based functions:

+

+- State-Value function: outputs the expected return if **the agent starts at a given state and acts according to the policy forever after.**

+- Action-Value function: outputs the expected return if **the agent starts in a given state, takes a given action at that state** and then acts according to the policy forever after.

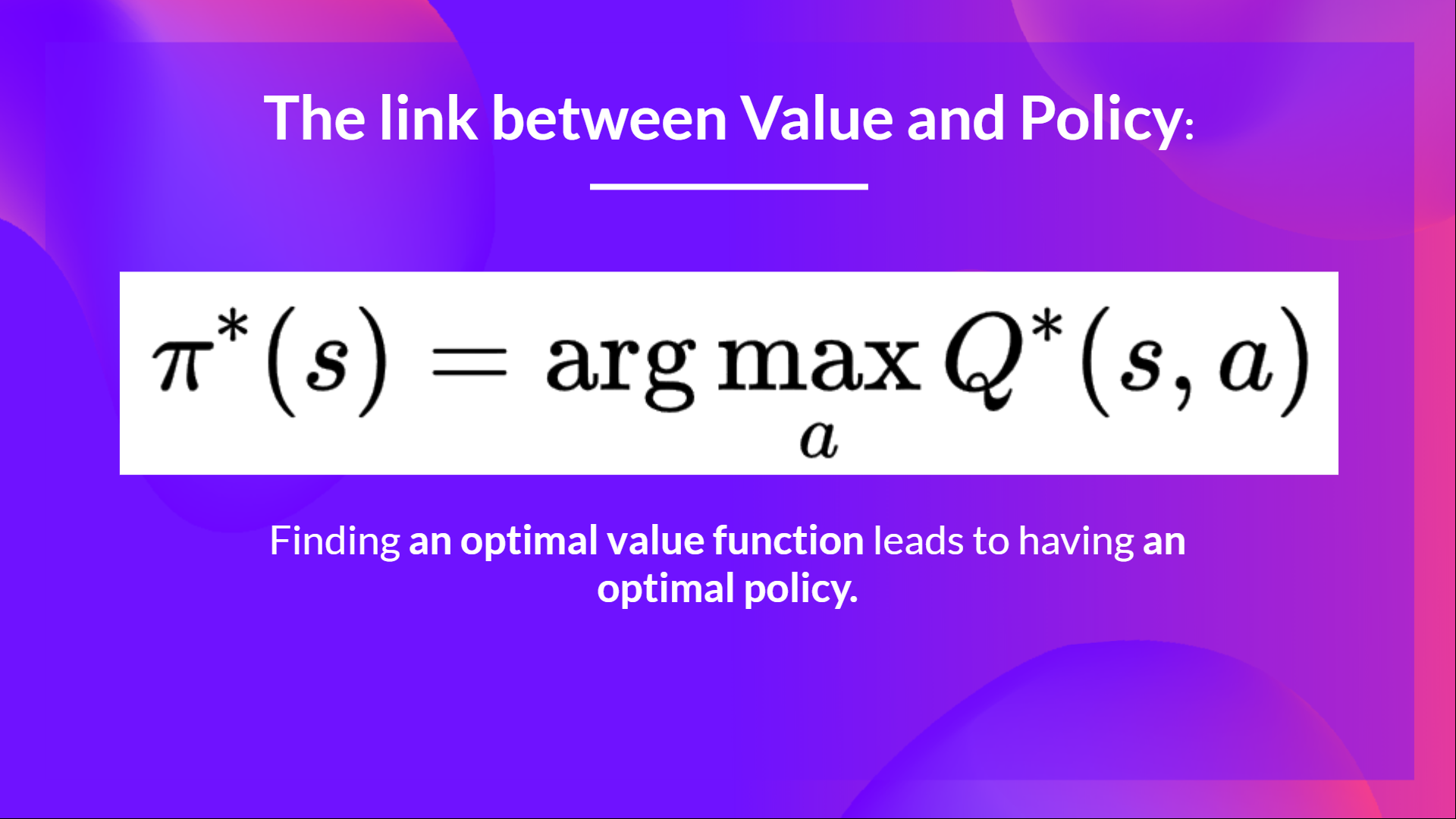

+- In value-based methods, **we define the policy by hand** because we don't train it; we train a value function. The idea is that if we have an optimal value function, we **will have an optimal policy.**

+

+There are two types of methods to learn a value function for a policy:

+

+- With *the Monte Carlo method*, we update the value function from a complete episode, and so we **use the actual discounted return of this episode.**

+- With *the TD Learning method,* we update the value function from a single step, so we replace \\(G_t\\), which we don't have yet, with **an estimated return called the TD target.**
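A sketch of the two update styles for a state-value function stored in a dictionary. The episode format (a list of `(state, reward)` pairs where the reward is \\(R_{t+1}\\)), the constant learning rate, and the terminal state fixed at value 0 are assumptions for illustration:

```python
def monte_carlo_update(values, episode, learning_rate=0.1, gamma=0.99):
    """Update V after a complete episode, using the actual returns G_t."""
    g = 0.0
    # Walk backwards so that G_t = R_{t+1} + gamma * G_{t+1} can be accumulated.
    for state, reward in reversed(episode):
        g = reward + gamma * g
        values[state] += learning_rate * (g - values[state])

def td0_update(values, state, reward, next_state, learning_rate=0.1, gamma=0.99):
    """Update V after a single step, using the TD target as an estimate of G_t."""
    td_target = reward + gamma * values[next_state]
    values[state] += learning_rate * (td_target - values[state])

mc_values = {"A": 0.0, "B": 0.0, "terminal": 0.0}
monte_carlo_update(mc_values, [("A", 0.0), ("B", 1.0)], learning_rate=1.0, gamma=1.0)
print(mc_values)  # {'A': 1.0, 'B': 1.0, 'terminal': 0.0}

td_values = {"A": 0.0, "B": 0.0, "terminal": 0.0}
td0_update(td_values, "A", 0.0, "B", learning_rate=1.0, gamma=1.0)  # V(B) is still 0
td0_update(td_values, "B", 1.0, "terminal", learning_rate=1.0, gamma=1.0)
print(td_values)  # {'A': 0.0, 'B': 1.0, 'terminal': 0.0}
```

On the same episode, Monte Carlo credits state A with the final reward right away, while TD(0) only propagates it back to A on a later pass, once V(B) has been updated.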

+

+

+

diff --git a/units/en/unit2/two-types-value-based-methods.mdx b/units/en/unit2/two-types-value-based-methods.mdx

new file mode 100644

index 0000000..47da6ef

--- /dev/null

+++ b/units/en/unit2/two-types-value-based-methods.mdx

@@ -0,0 +1,86 @@

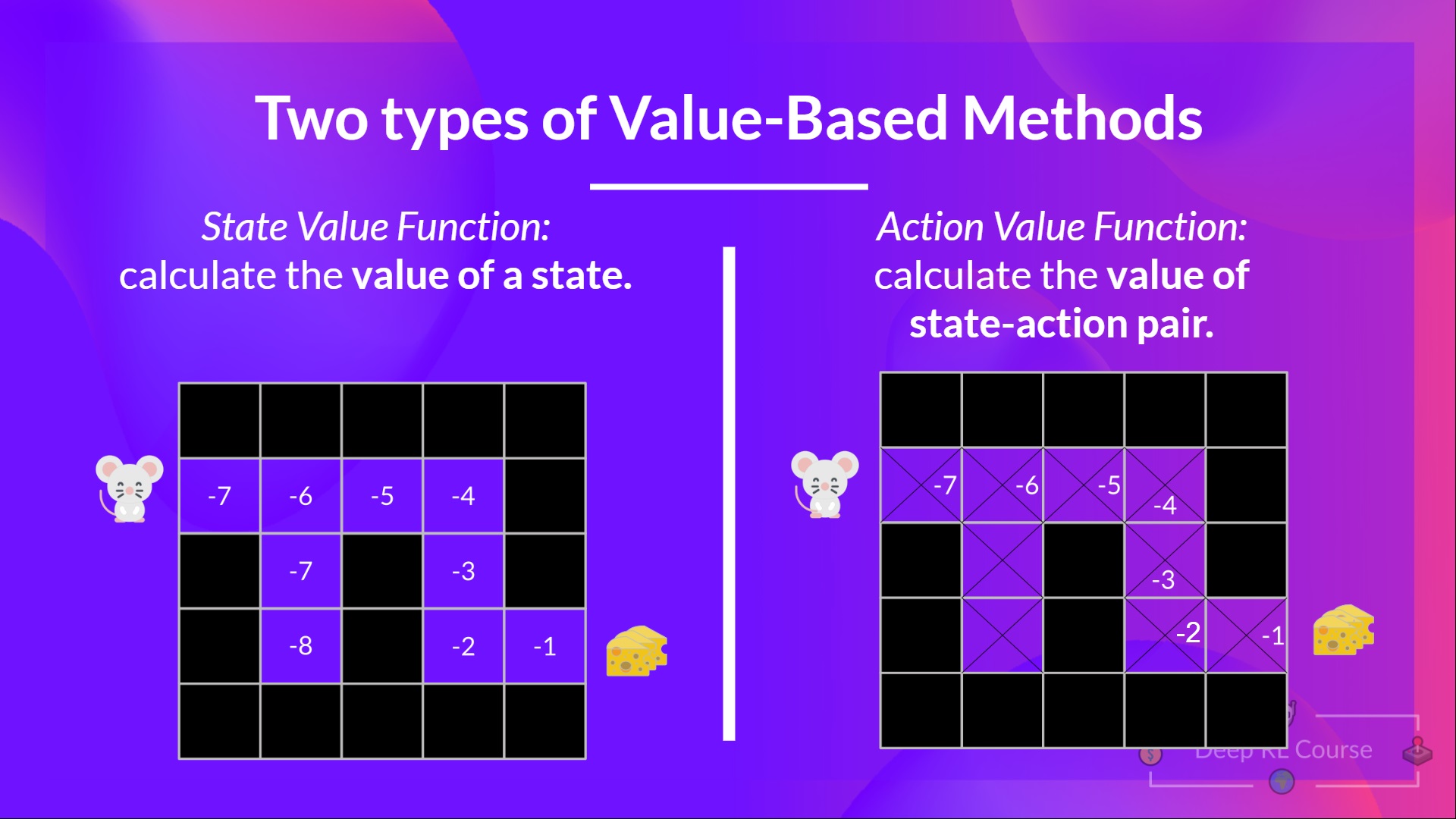

+# Two types of value-based methods [[two-types-value-based-methods]]

+

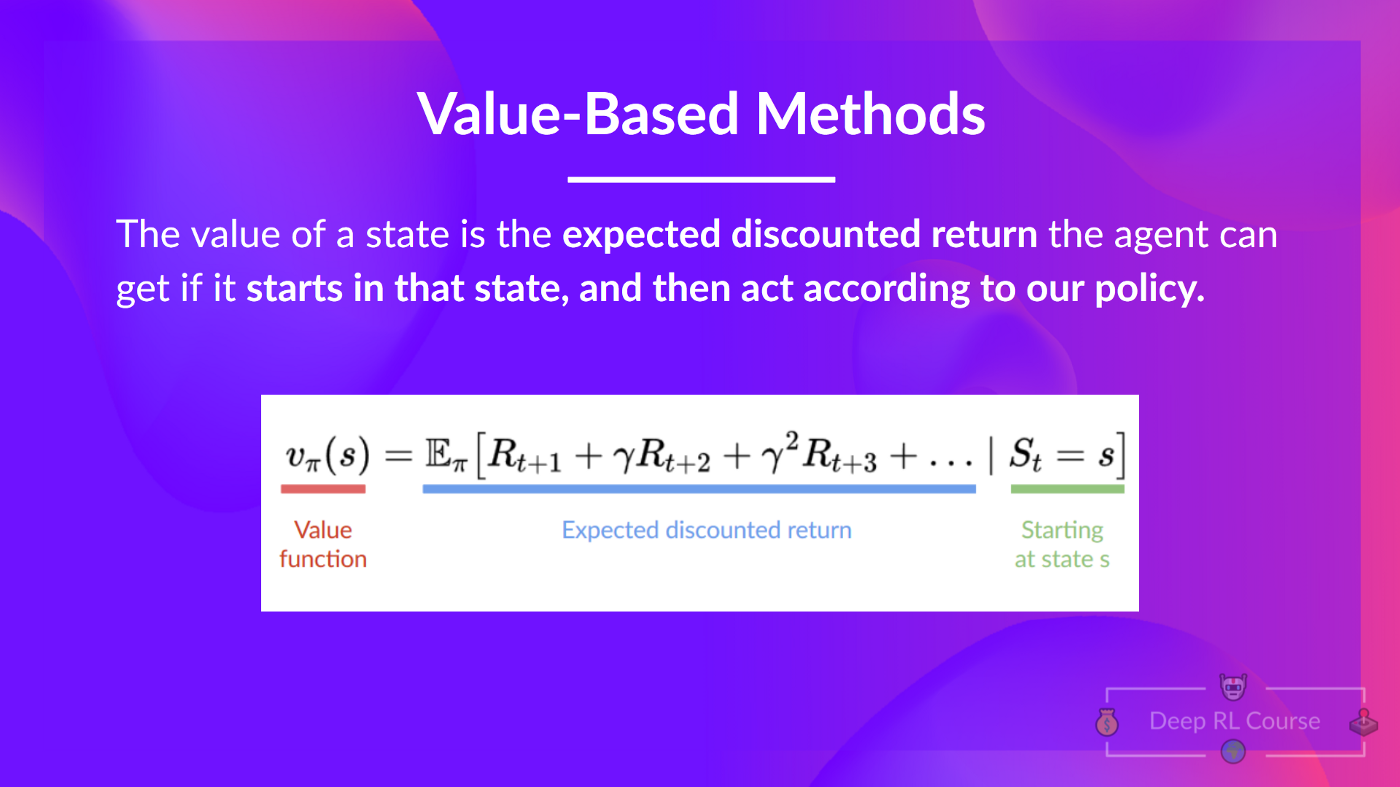

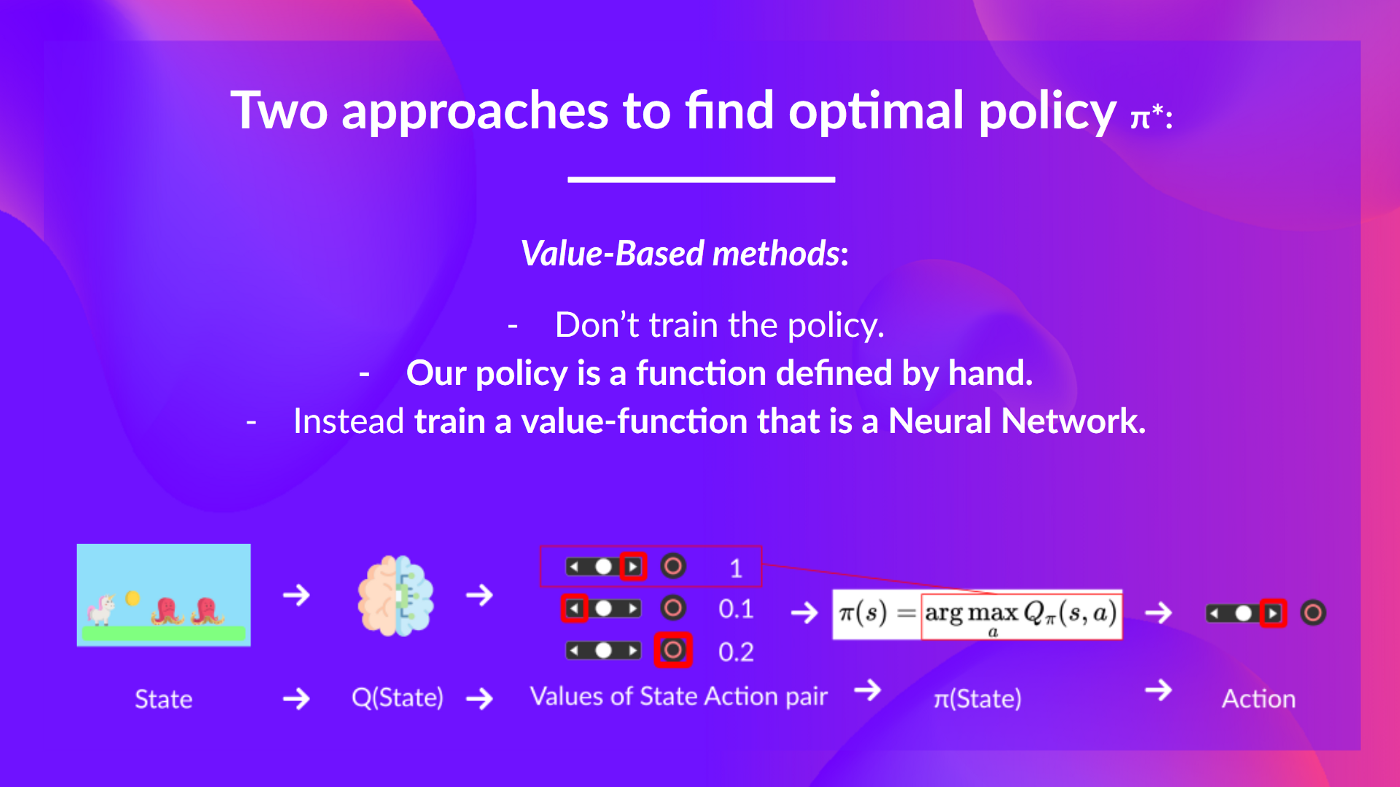

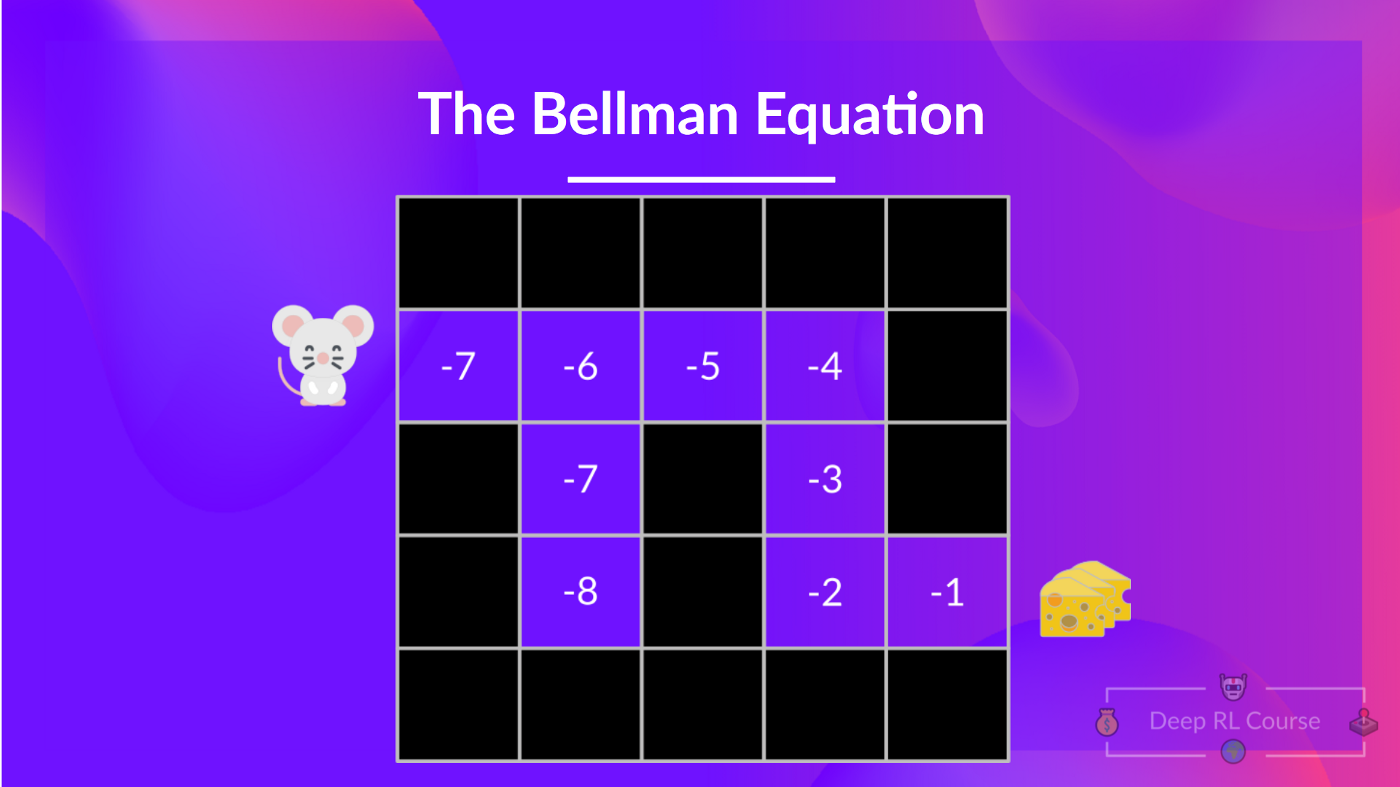

+In value-based methods, **we learn a value function** that **maps a state to the expected value of being at that state.**


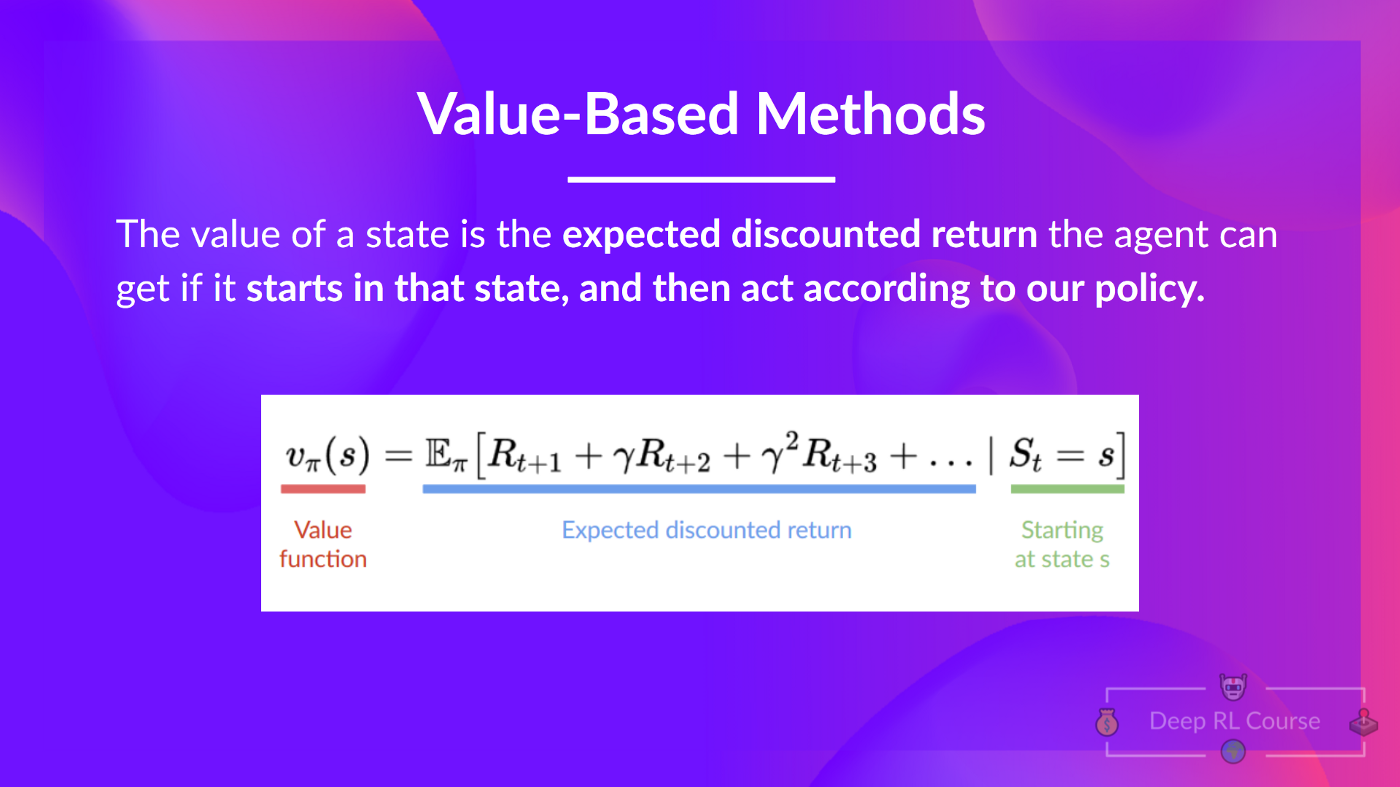

+The value of a state is the **expected discounted return** the agent can get if it **starts at that state and then acts according to our policy.**

+

+

+But what does it mean to act according to our policy? After all, we don't have a policy in value-based methods, since we train a value function and not a policy.

+

+

+Remember that the goal of an **RL agent is to have an optimal policy π.**

+

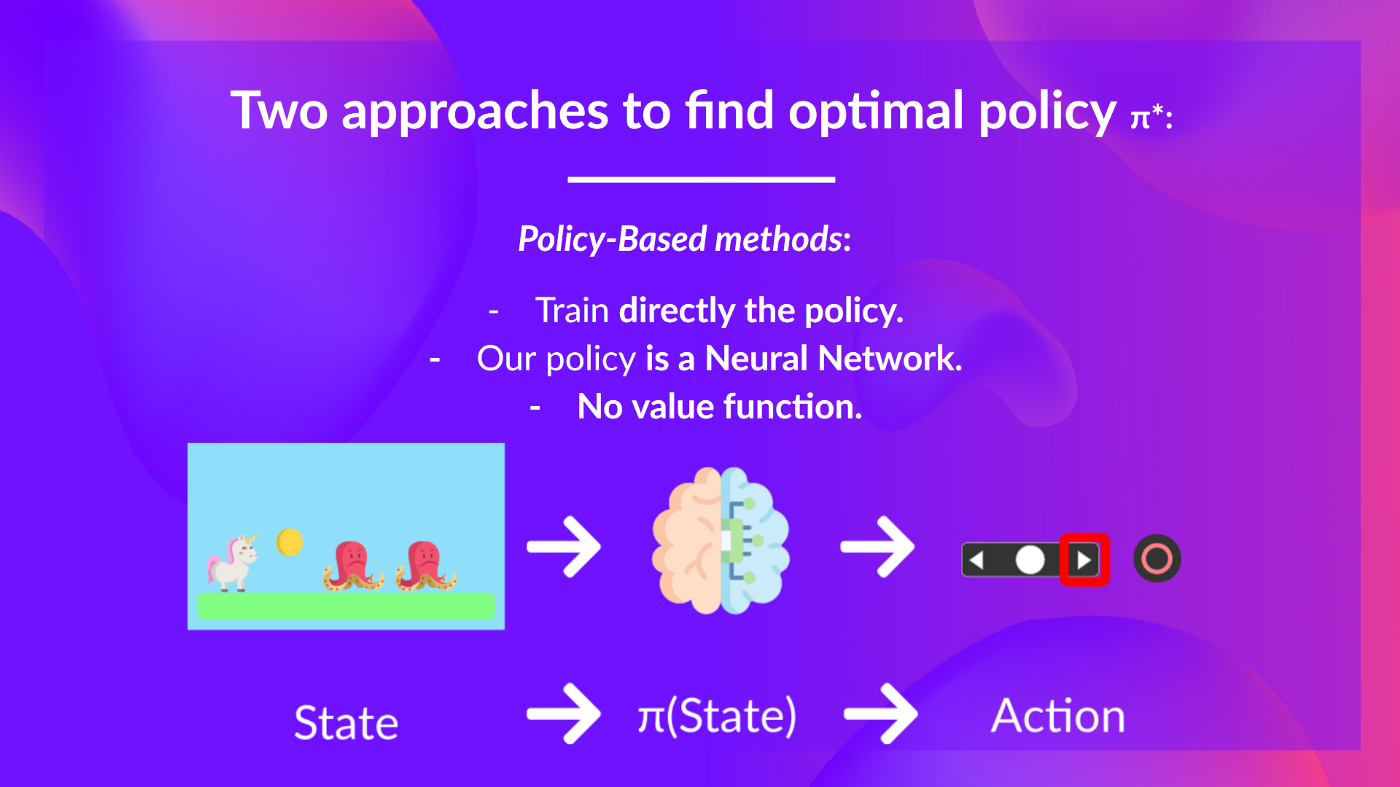

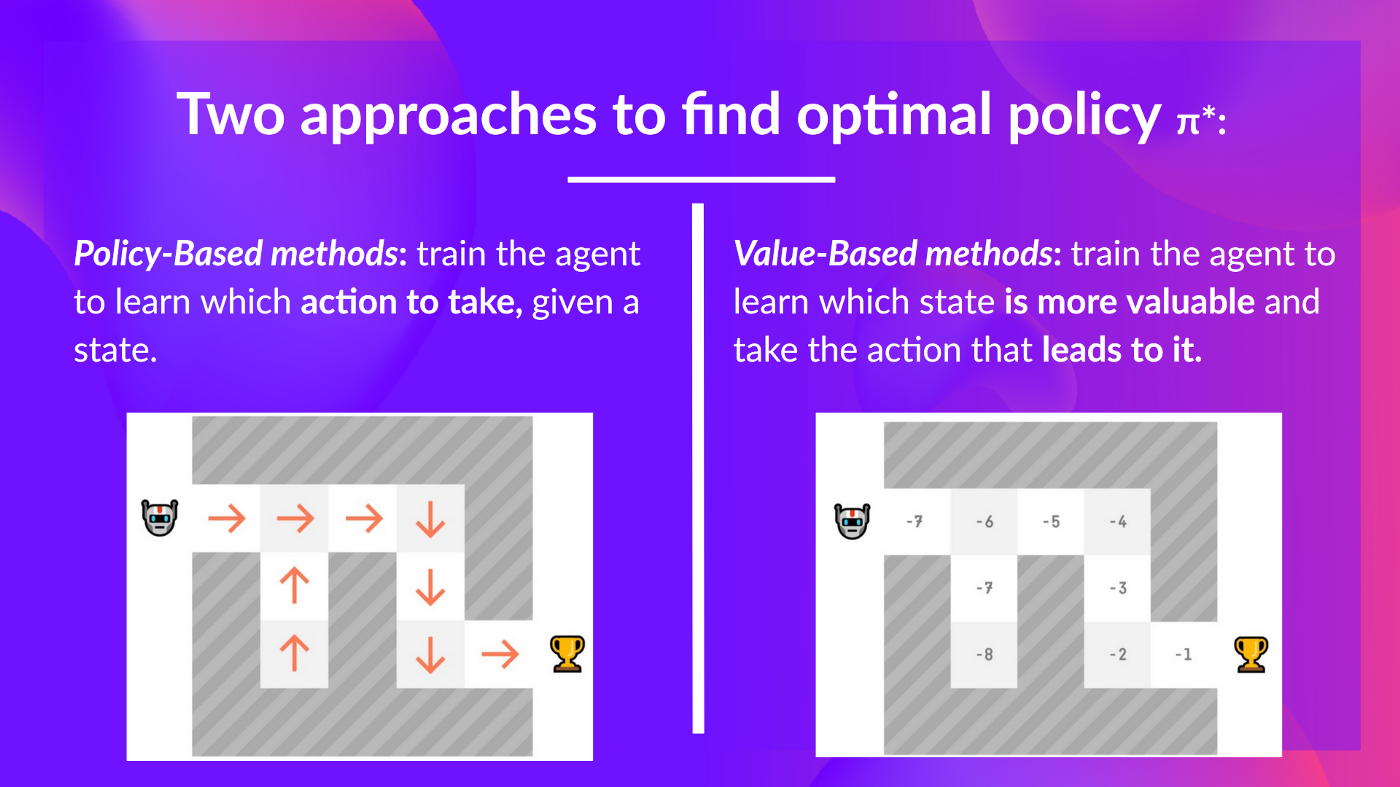

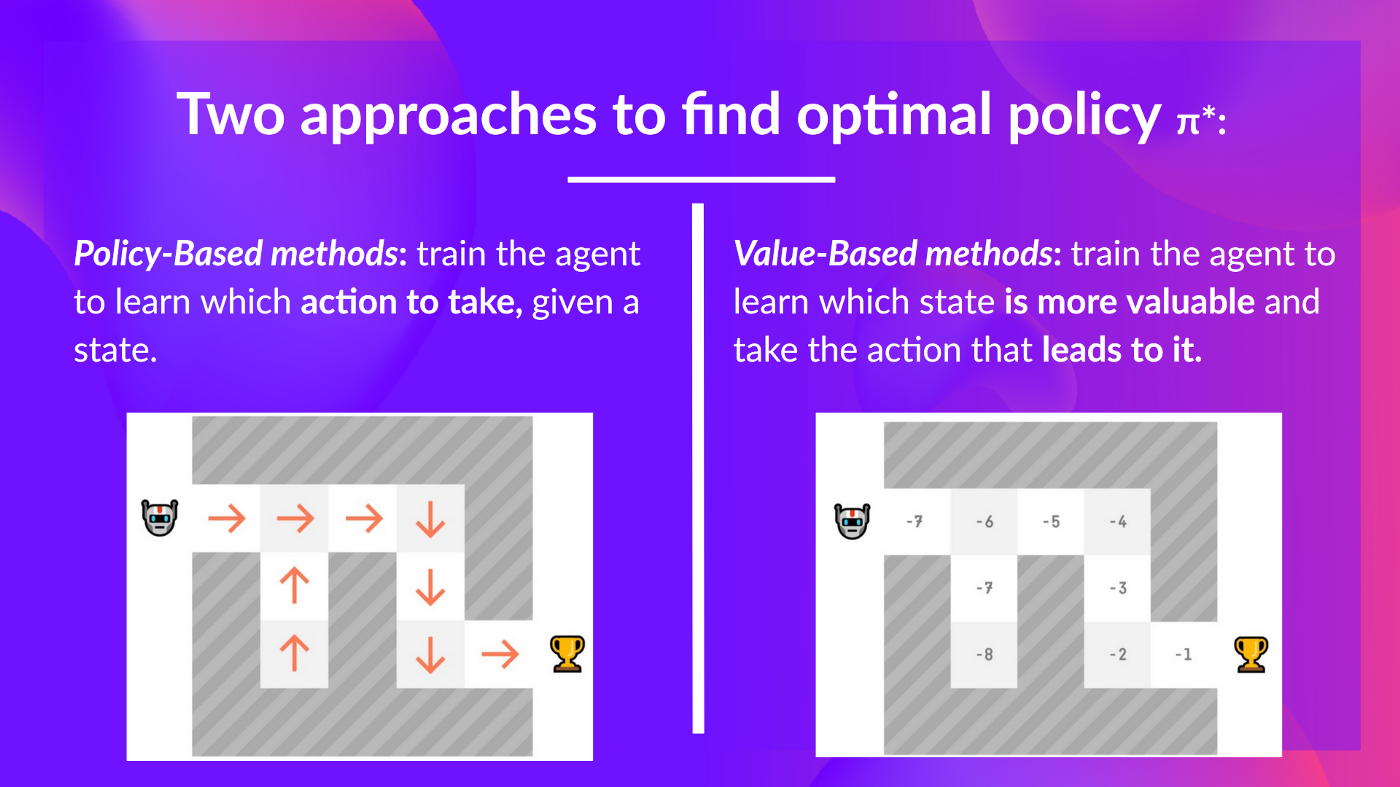

+To find the optimal policy, we learned about two different methods:

+

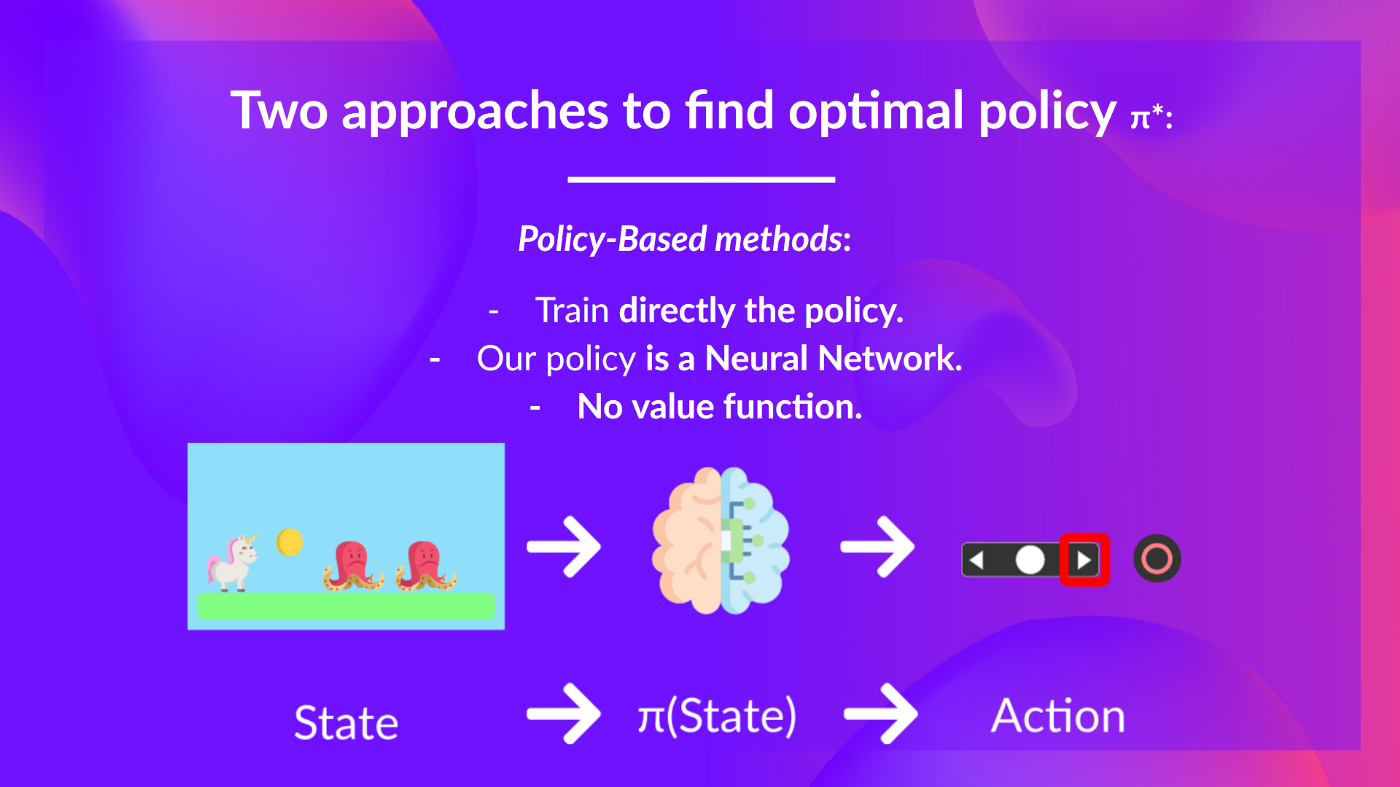

+- *Policy-based methods:* **Directly train the policy** to select what action to take given a state (or a probability distribution over actions at that state). In this case, we **don't have a value function.**


+The policy takes a state as input and outputs what action to take at that state (deterministic policy).

+

+And consequently, **we don't define by hand the behavior of our policy; it's the training that will define it.**

+

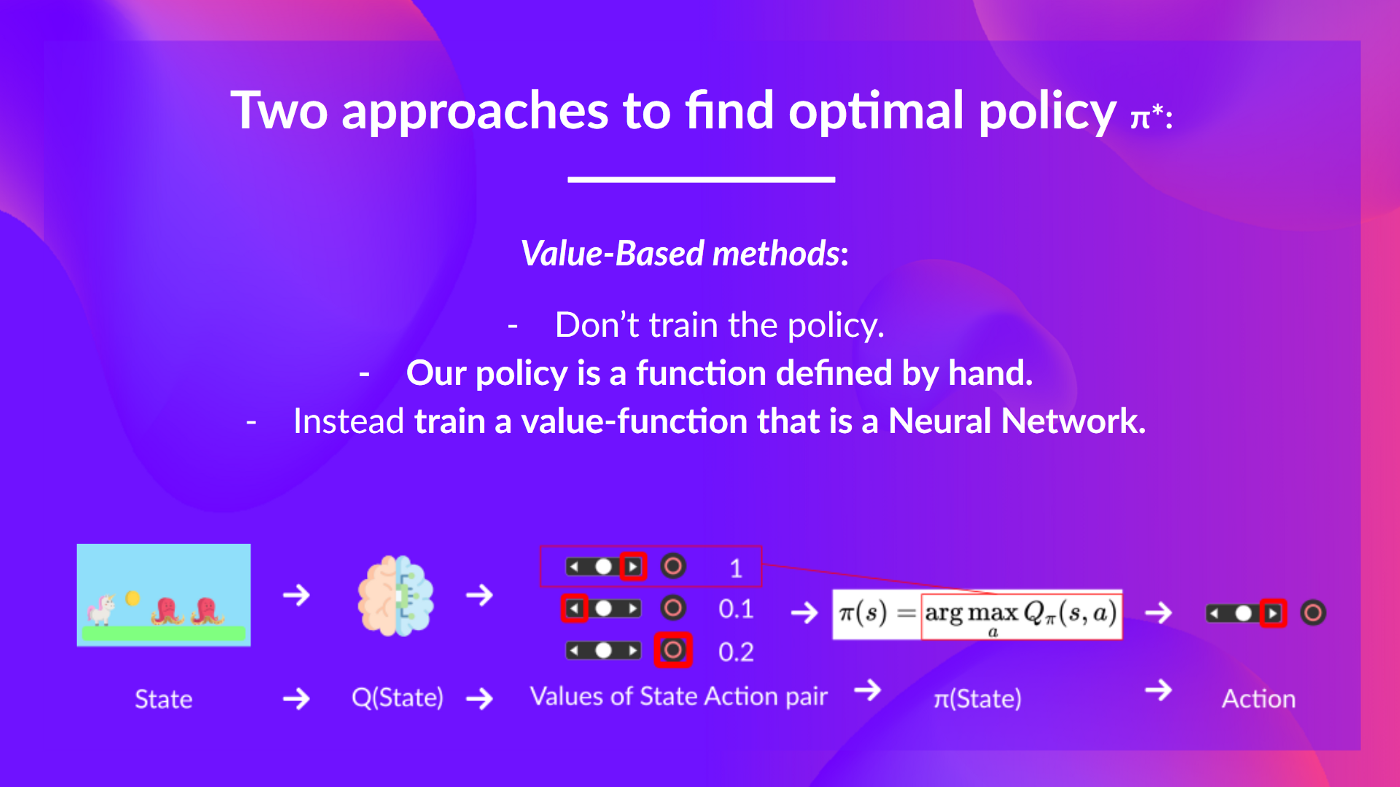

+- *Value-based methods:* **Indirectly, by training a value function** that outputs the value of a state or a state-action pair. Given this value function, our policy **will take an action.**

+

+Since the policy is not trained/learned, **we need to specify its behavior.** For instance, if we want a policy that, given the value function, will take actions that always lead to the biggest reward, **we'll create a Greedy Policy.**


+Given a state, our action-value function (that we train) outputs the value of each action at that state. Then, our pre-defined Greedy Policy selects the action with the highest value.

+

+

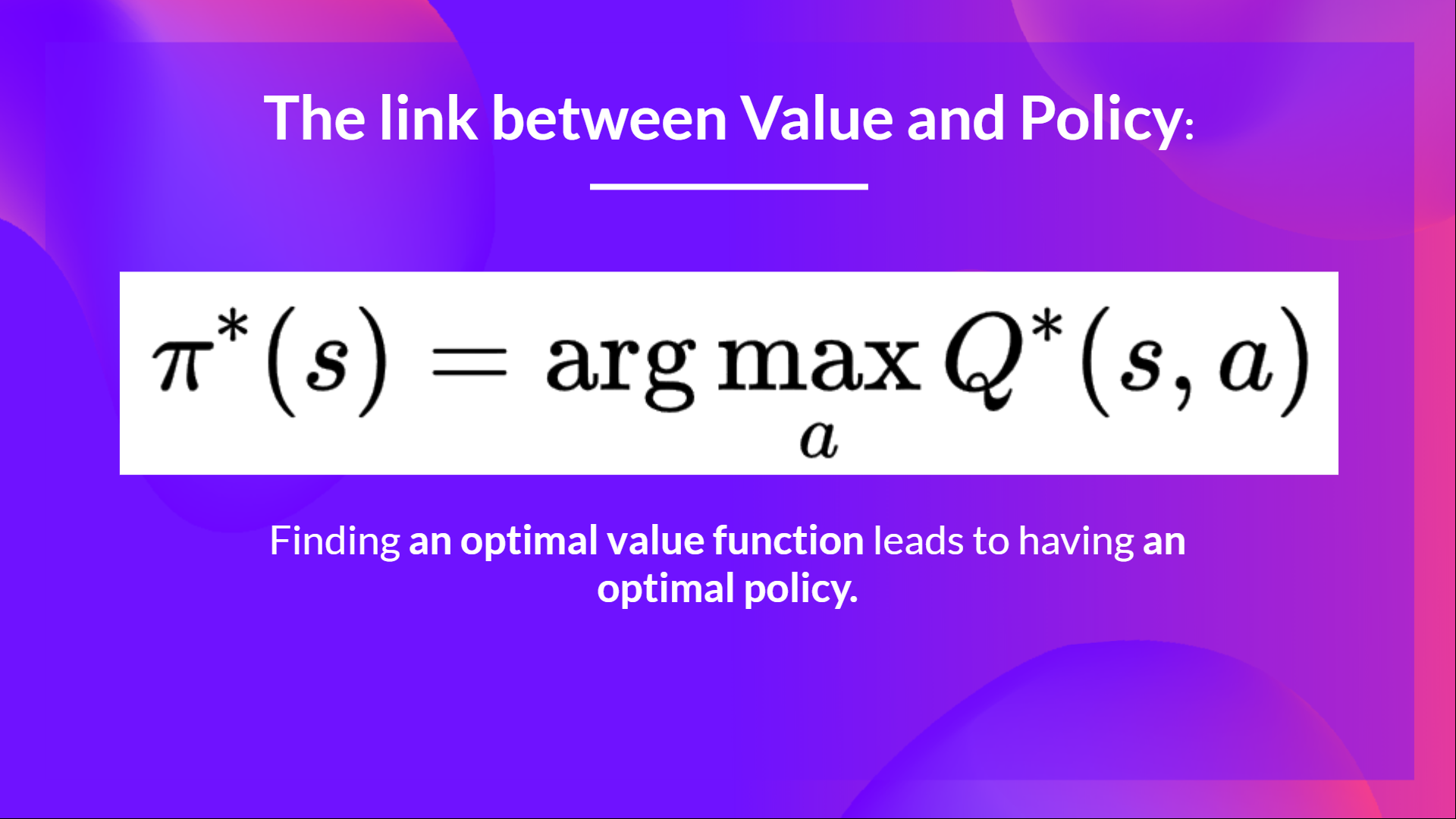

+Consequently, whatever method you use to solve your problem, **you will have a policy**. In the case of value-based methods, you don't train the policy: your policy **is just a simple pre-specified function** (for instance, Greedy Policy) that uses the values given by the value-function to select its actions.

+

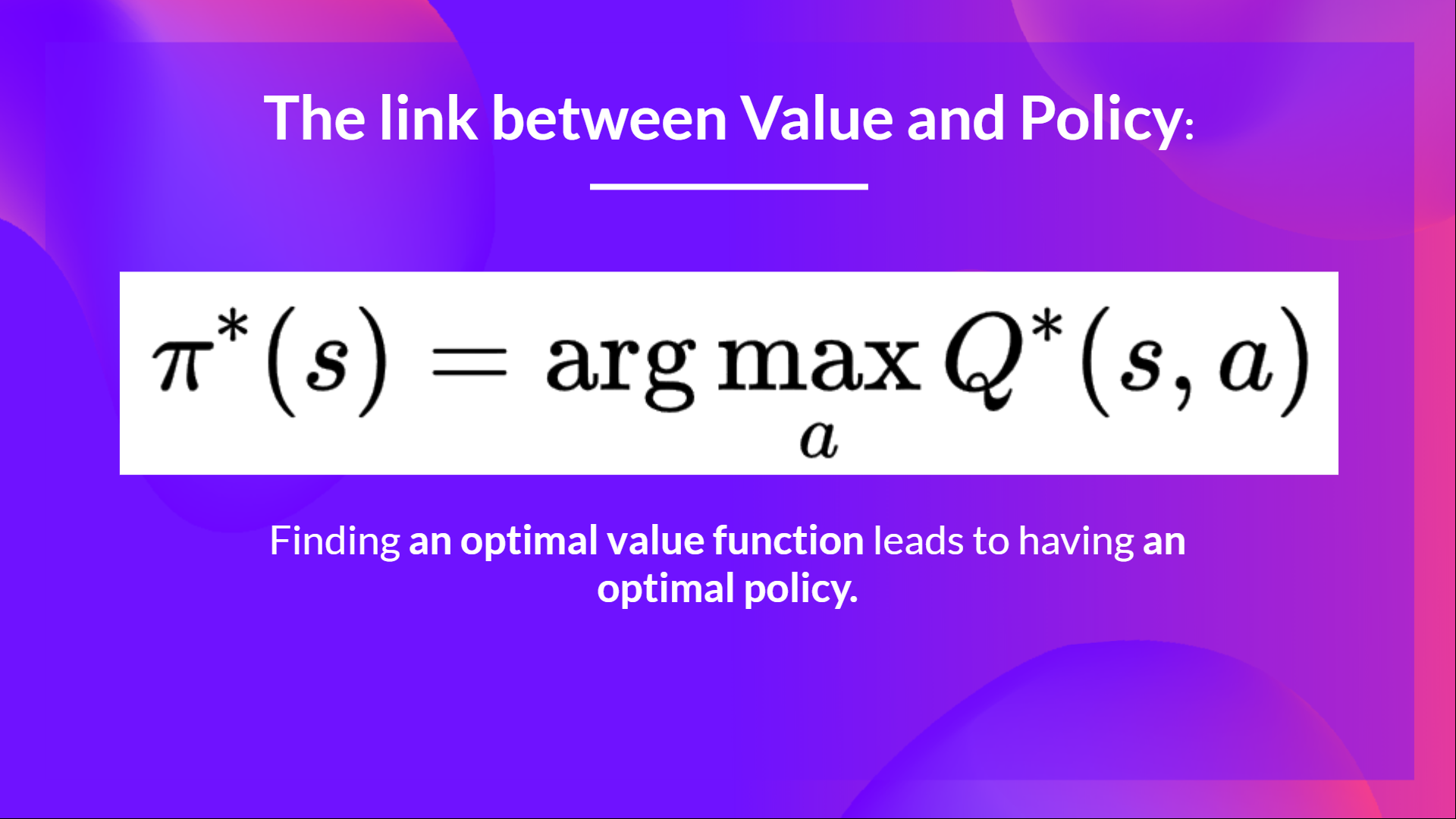

+So the difference is:

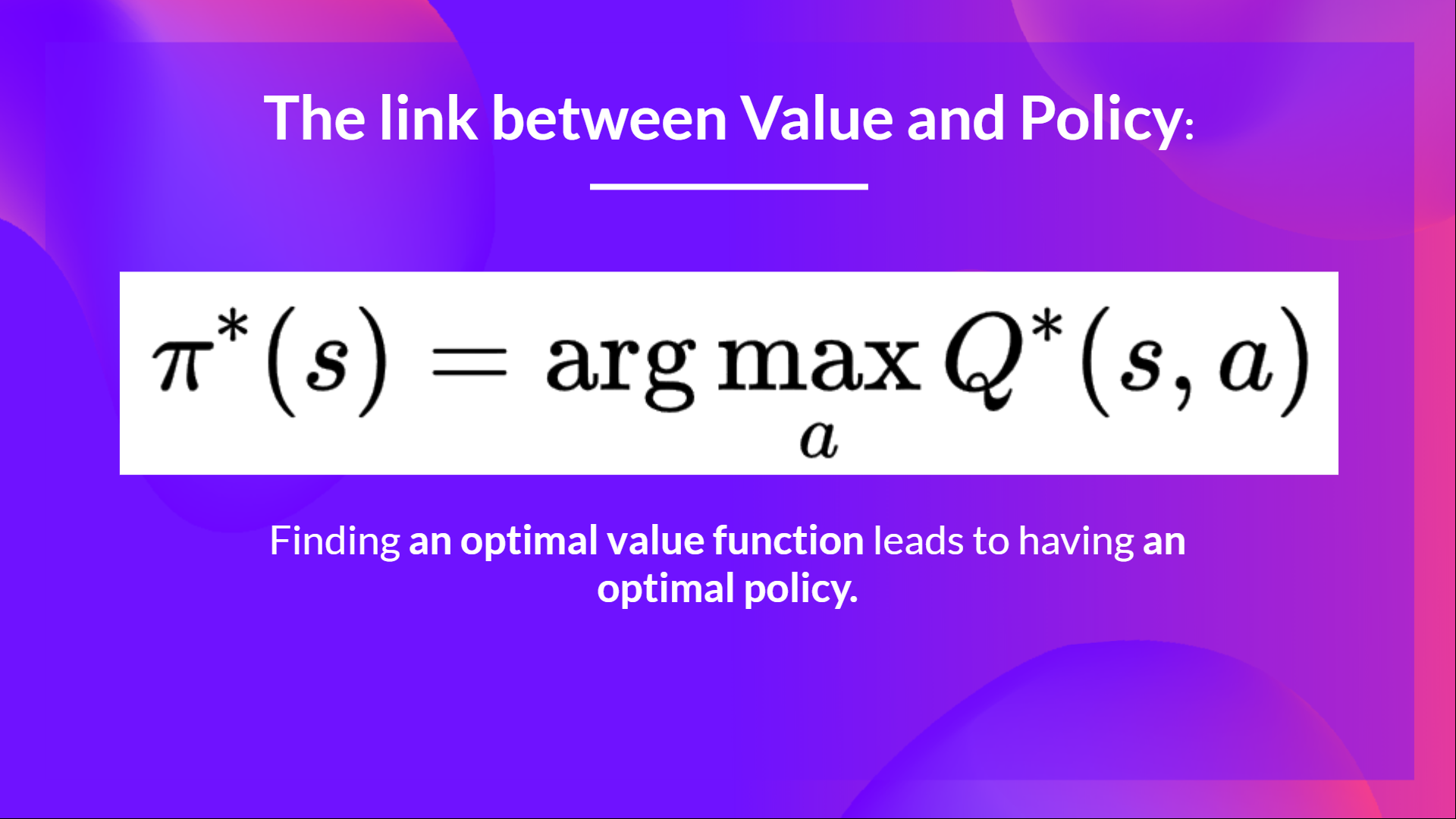

+

+- In policy-based, **the optimal policy is found by training the policy directly.**

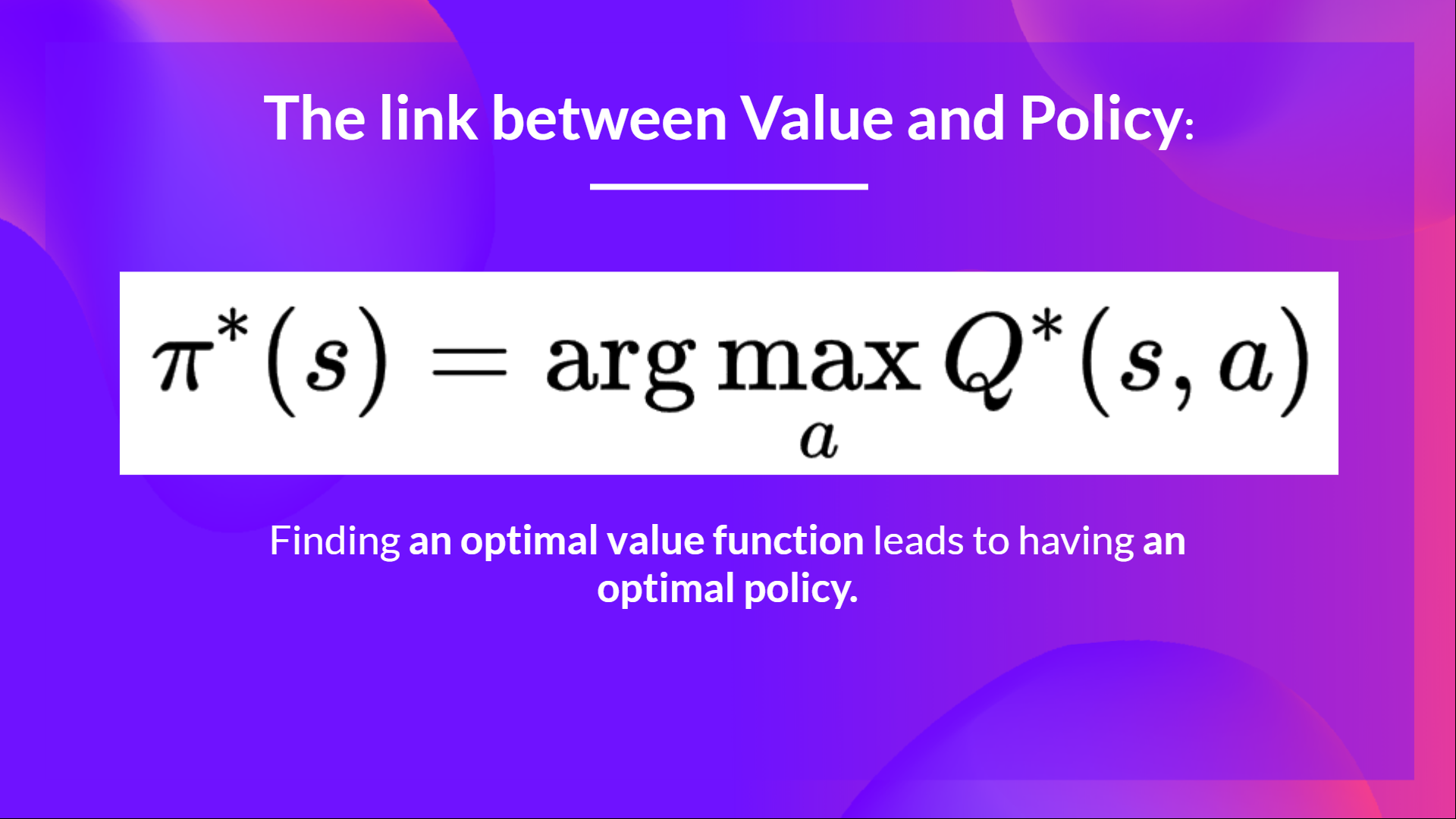

+- In value-based, **finding an optimal value function leads to having an optimal policy.**


+In fact, most of the time, in value-based methods, you'll use **an Epsilon-Greedy Policy** that handles the exploration/exploitation trade-off; we'll talk about it when we talk about Q-Learning in the second part of this unit.

+

+

+So, we have two types of value-based functions:

+

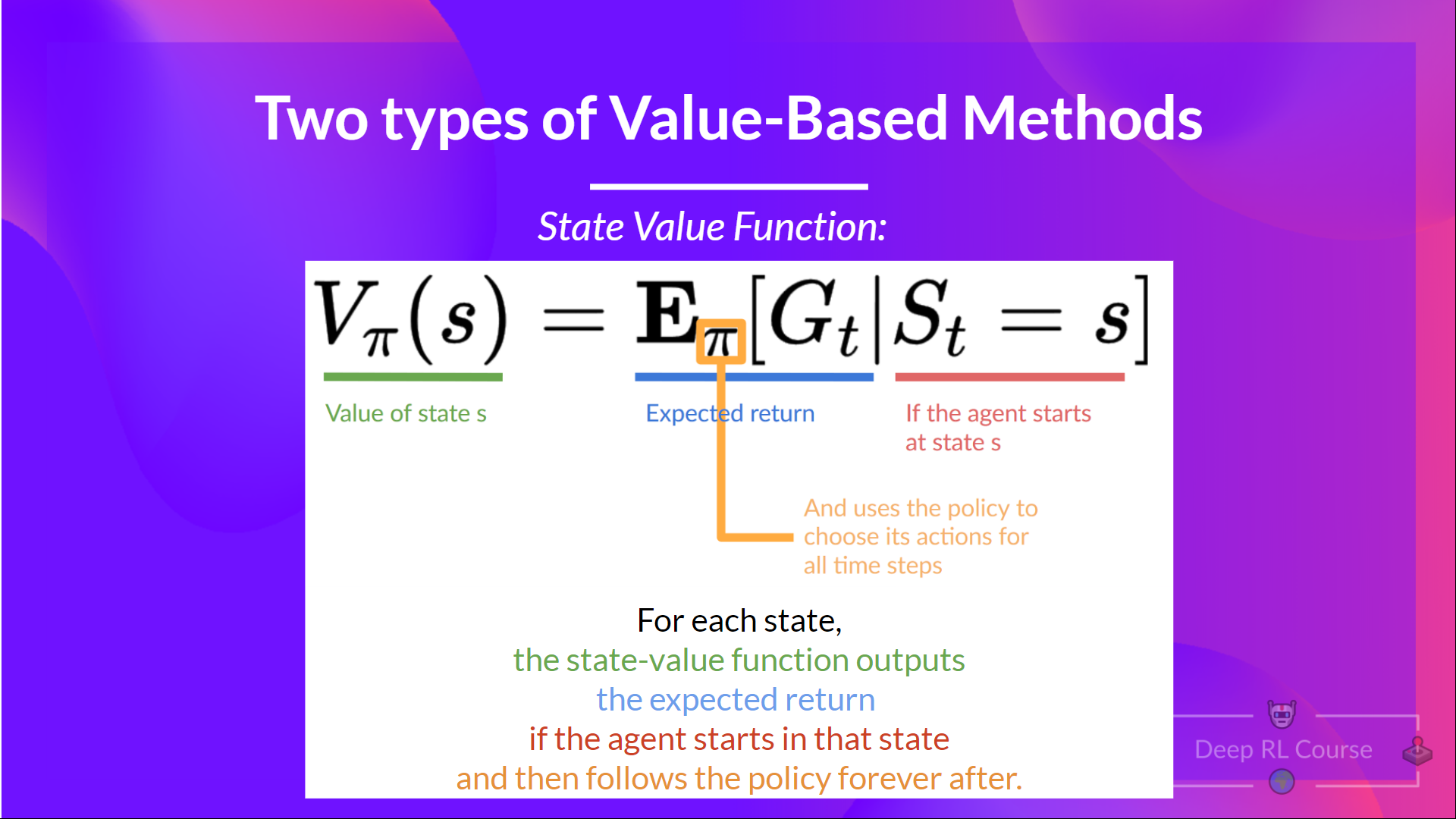

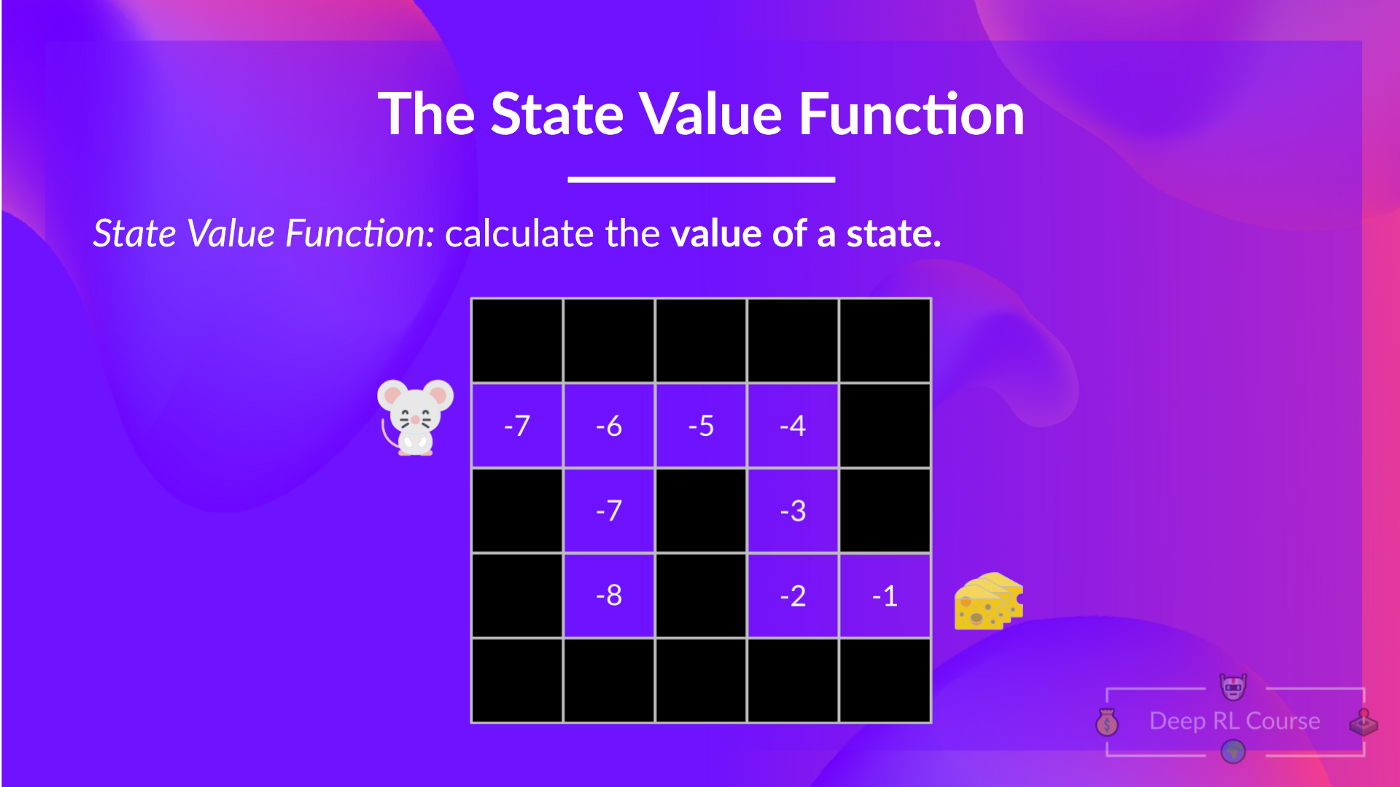

+## The State-Value function [[state-value-function]]

+

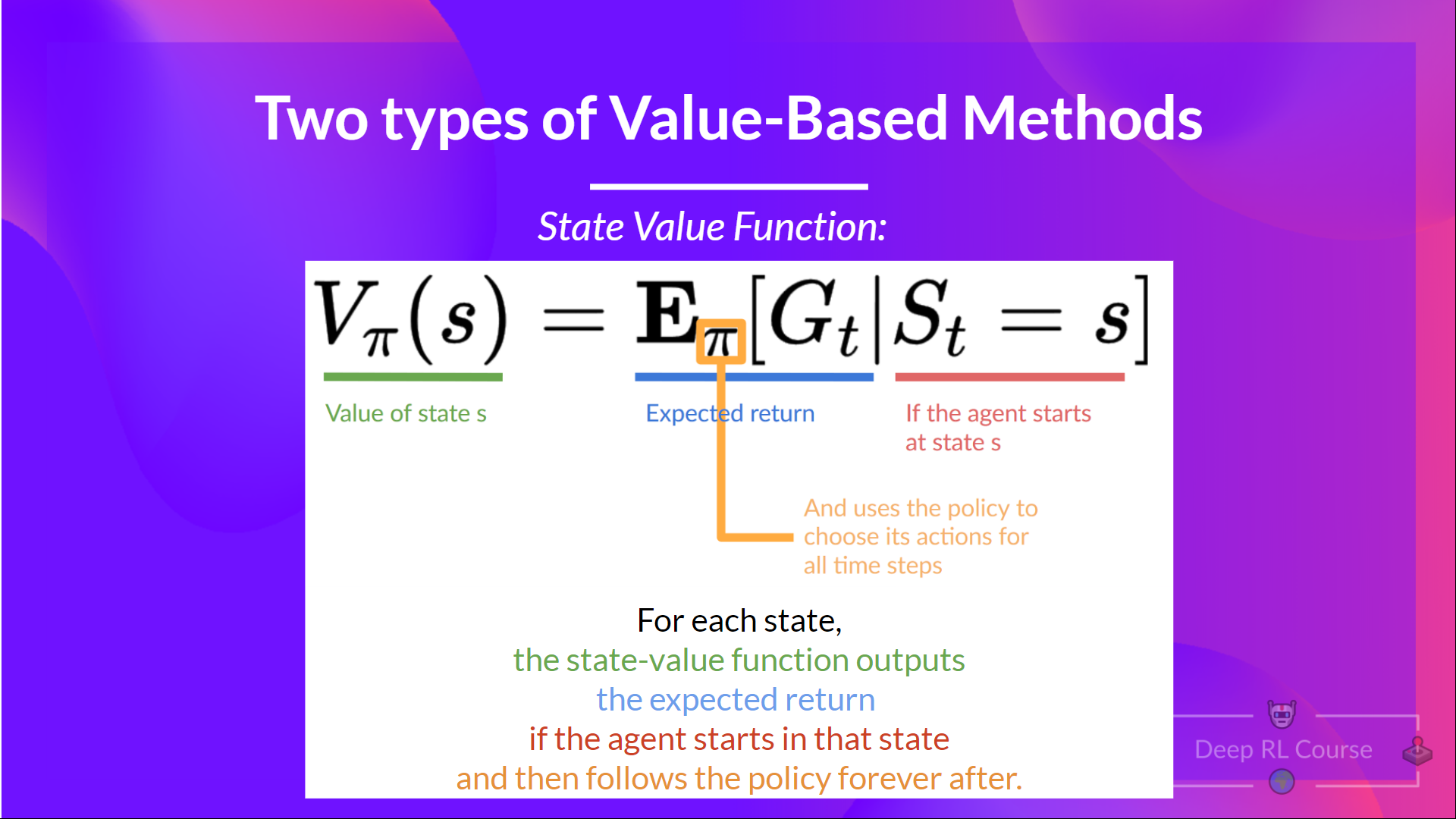

+We write the state value function under a policy π like this:
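In standard notation, where \\(G_t\\) is the (discounted) return from timestep \\(t\\) and the expectation is taken while following \\(\pi\\), this definition reads:

$$
V_{\pi}(s) = \mathbb{E}_{\pi}[G_t \mid S_t = s]
$$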


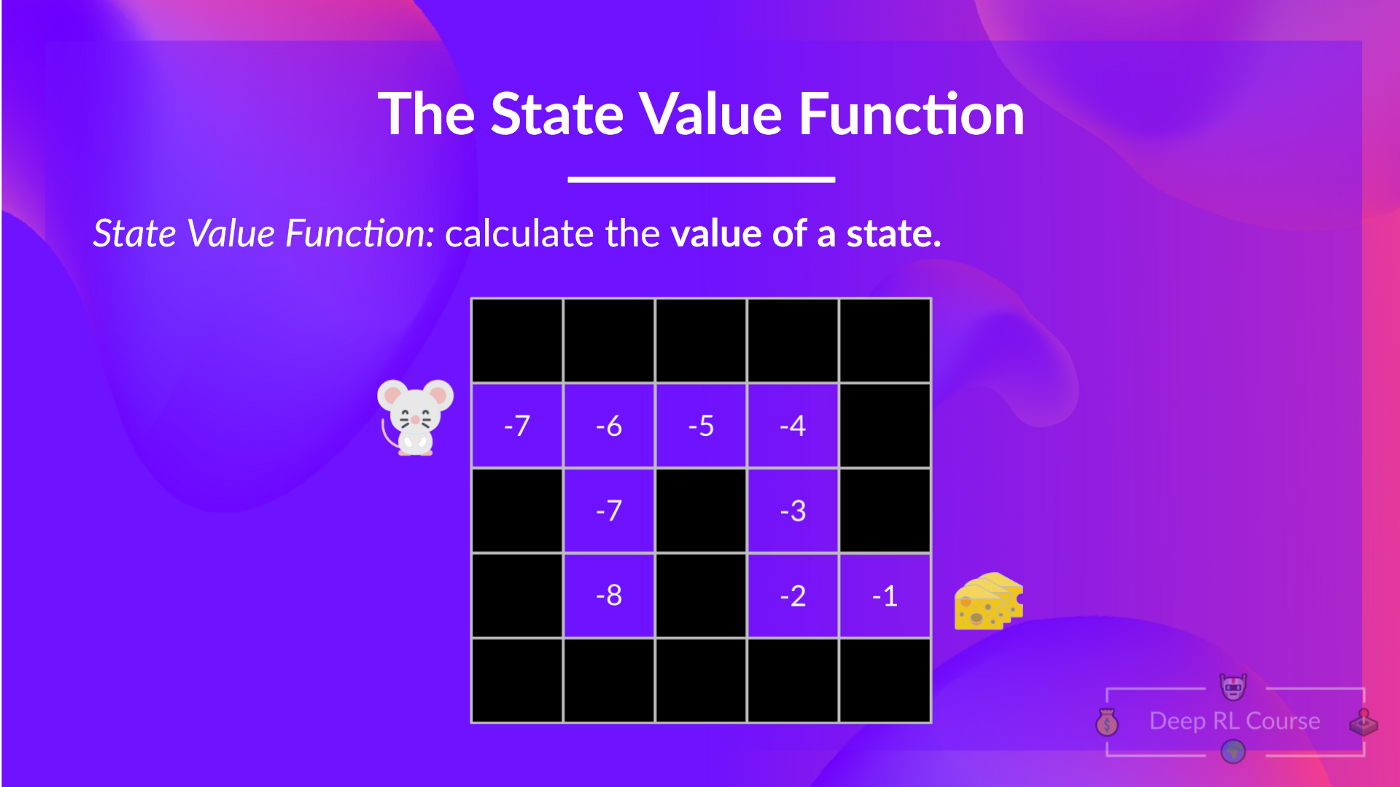

+For each state, the state-value function outputs the expected return if the agent **starts at that state,** and then follows the policy forever afterwards (for all future timesteps, if you prefer).


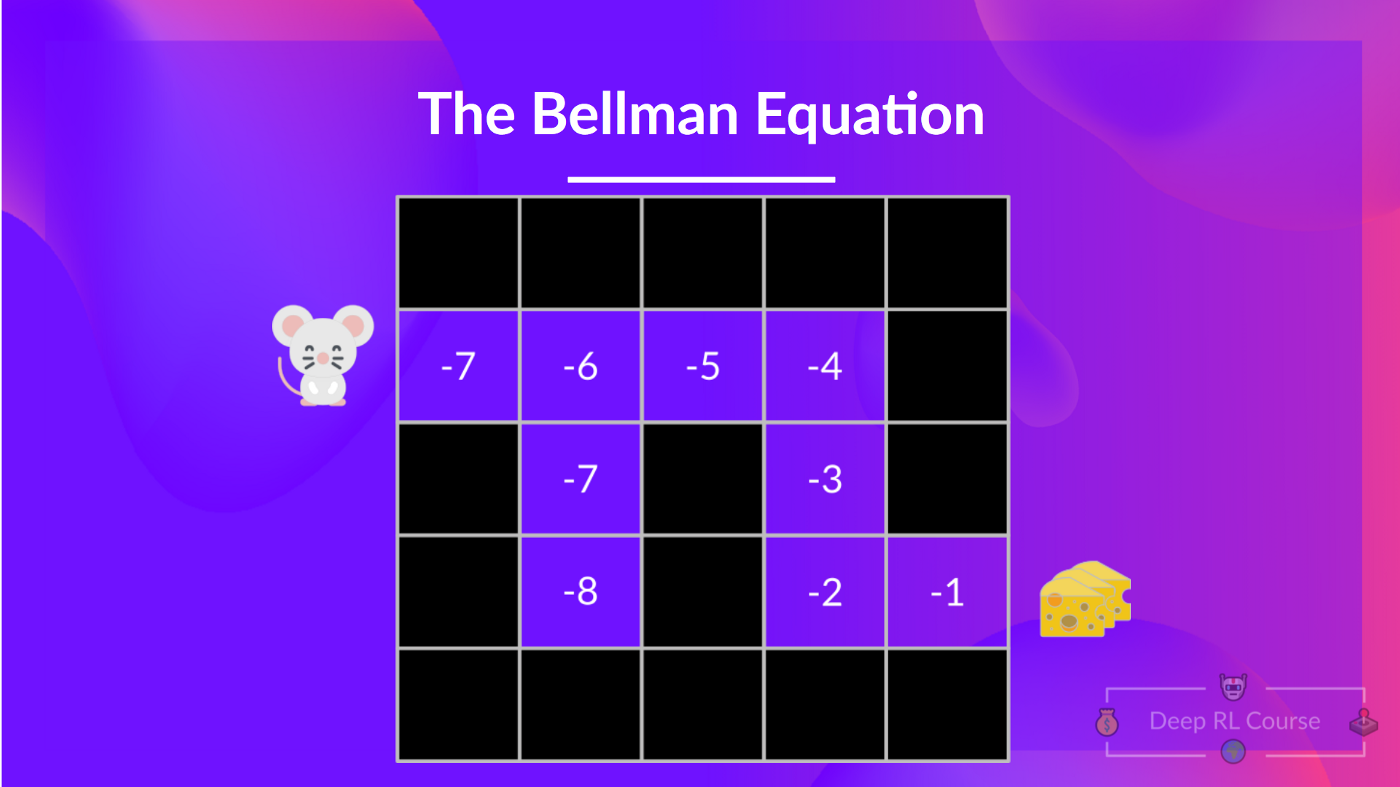

+If we take the state with value -7: it's the expected return starting at that state and taking actions according to our policy (a greedy policy): right, right, right, down, down, right, right.
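Assuming a reward of -1 for each step (an assumption here, since the figure is not reproduced) and no discounting, that -7 is just the sum of the rewards collected along the seven greedy moves:

```python
# The seven moves the greedy policy takes from this state.
greedy_path = ["right", "right", "right", "down", "down", "right", "right"]

# Assumed reward of -1 per step; no discounting (gamma = 1).
REWARD_PER_STEP = -1
gamma = 1.0

value = sum((gamma ** t) * REWARD_PER_STEP for t in range(len(greedy_path)))
print(value)  # -7.0
```

With `gamma` below 1, later steps would count for less and the value would be closer to 0.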

+

+

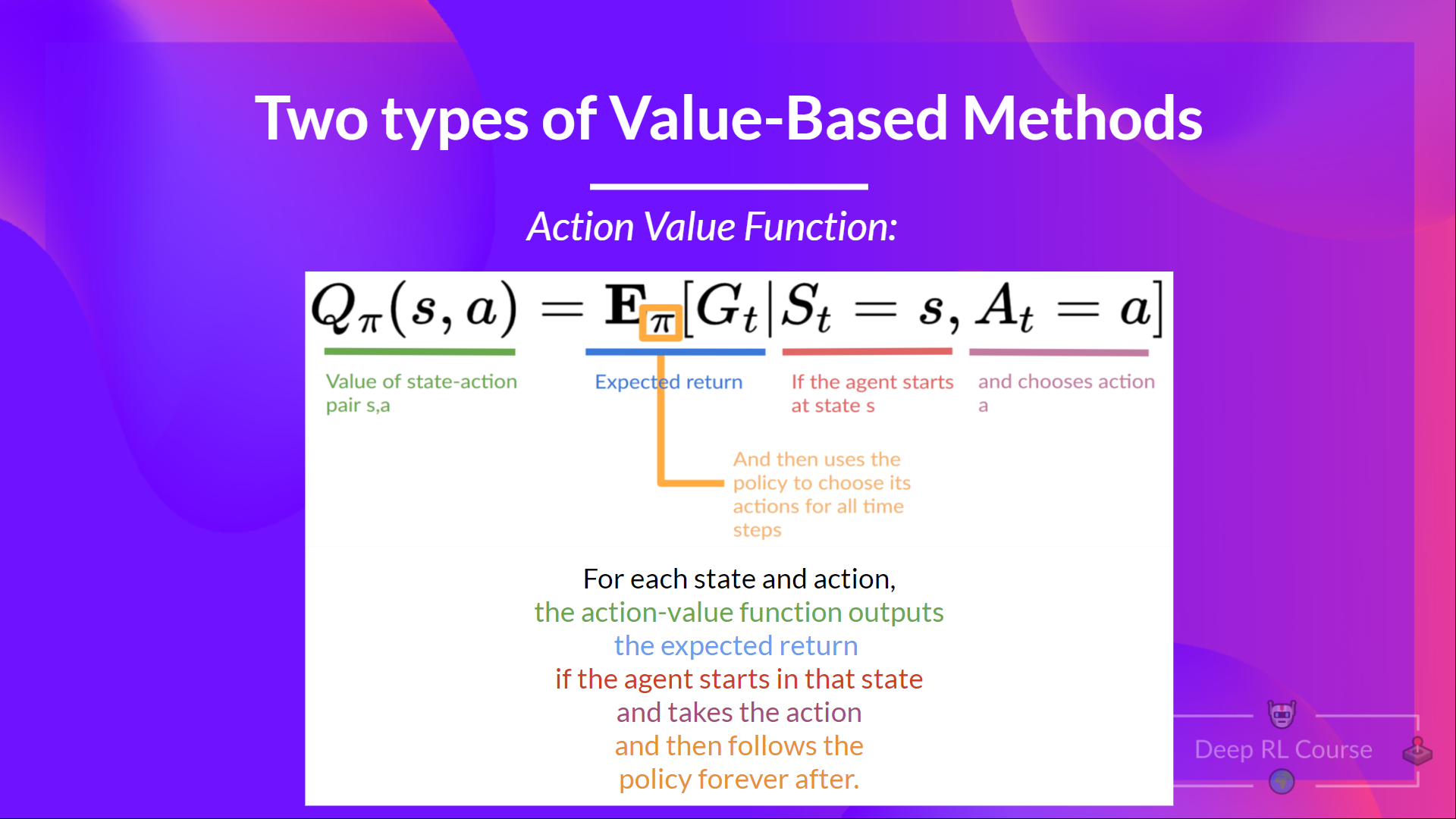

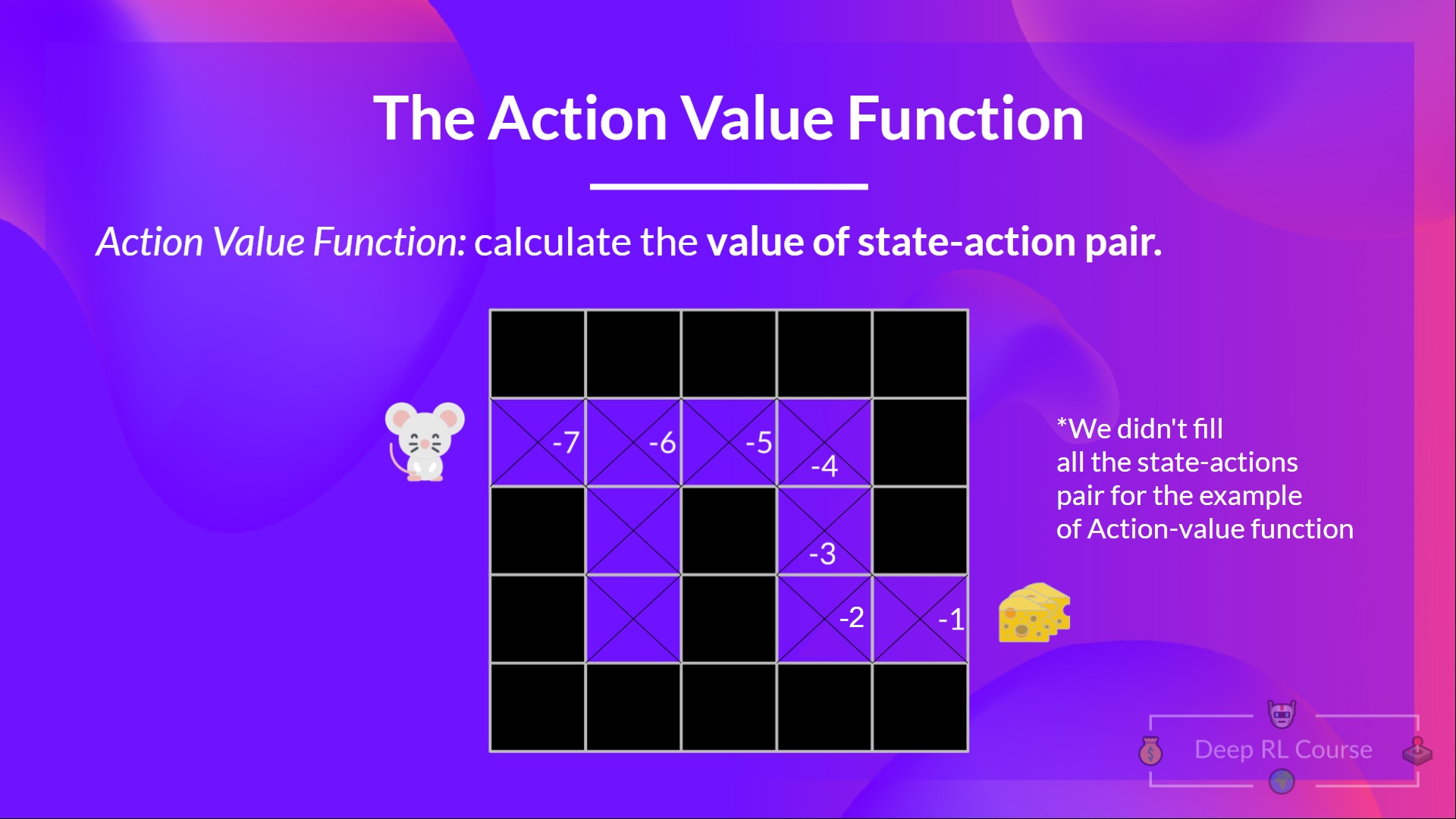

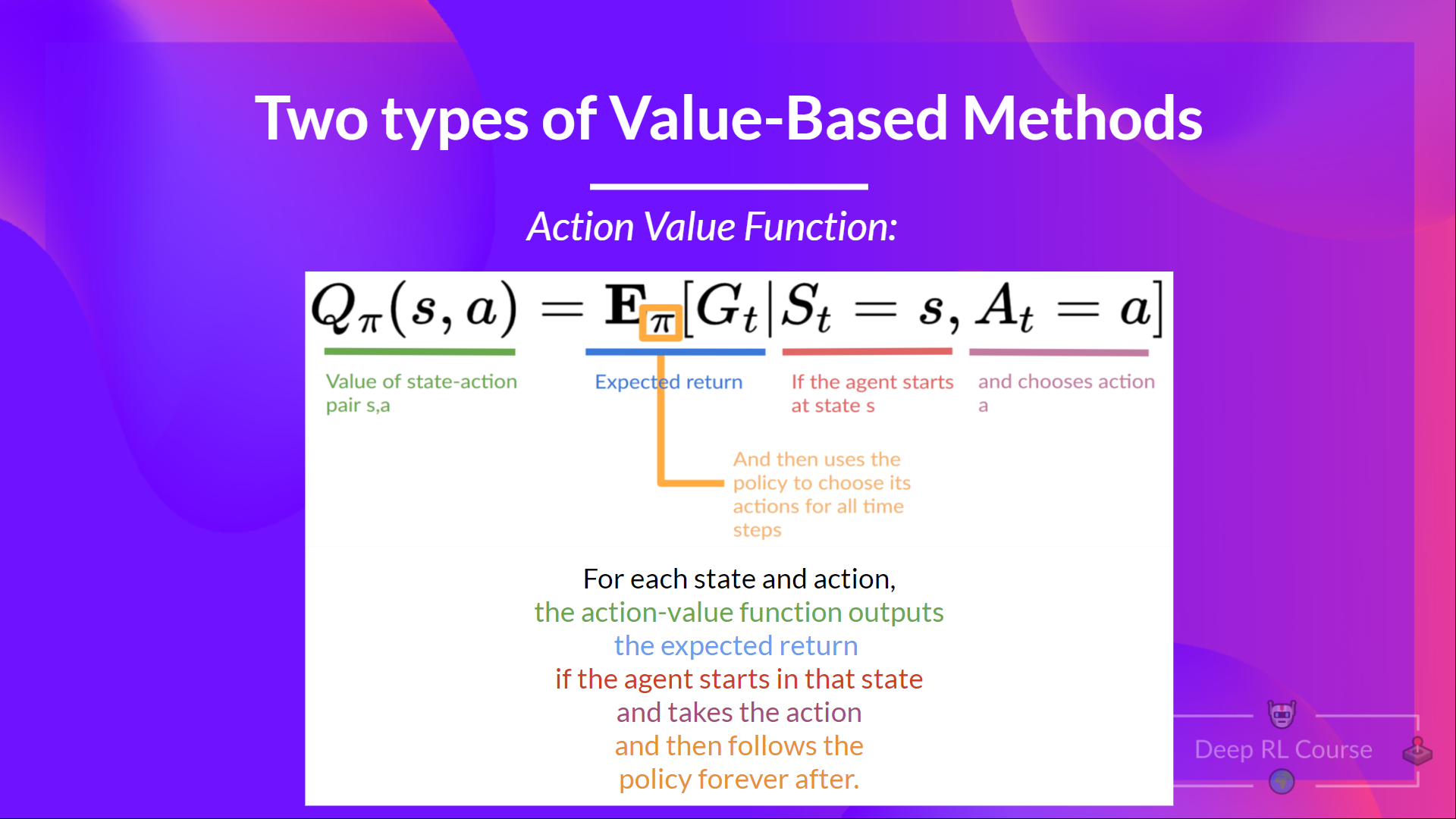

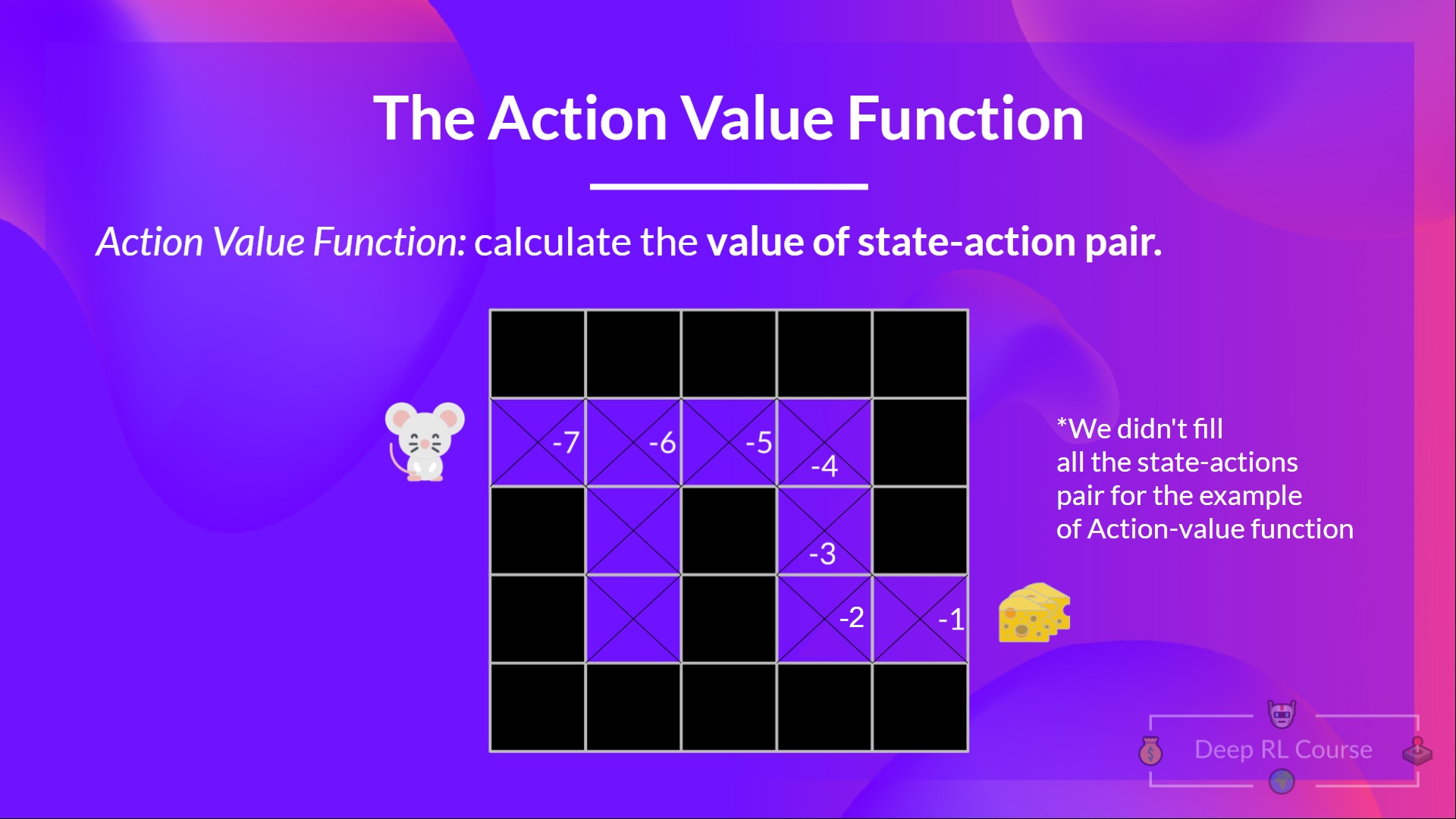

+## The Action-Value function [[action-value-function]]

+

+For each state-action pair, the action-value function **outputs the expected return** if the agent starts in that state, takes that action, and then follows the policy forever after.

+

+The value of taking action a in state s under a policy π is:
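In the same standard notation as the state-value function, the only change is that the first action is also fixed:

$$
Q_{\pi}(s, a) = \mathbb{E}_{\pi}[G_t \mid S_t = s, A_t = a]
$$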


+

+We see that the difference is:

+

+- In the state-value function, we calculate **the value of a state \\(S_t\\).**

+- In the action-value function, we calculate **the value of the state-action pair \\((S_t, A_t)\\), hence the value of taking that action at that state.**


+Note: we didn't fill in all the state-action pairs in the action-value function example.

+

+

+In either case, whatever value function we choose (state-value or action-value function), **the value is the expected return.**

+

+However, this implies that **to calculate EACH value of a state or a state-action pair, we need to sum all the rewards an agent can get if it starts at that state.**

+

+This can be a tedious process, and that's **where the Bellman equation comes to help us.**

diff --git a/units/en/unit2/what-is-rl.mdx b/units/en/unit2/what-is-rl.mdx

new file mode 100644

index 0000000..2c31486

--- /dev/null

+++ b/units/en/unit2/what-is-rl.mdx

@@ -0,0 +1,25 @@

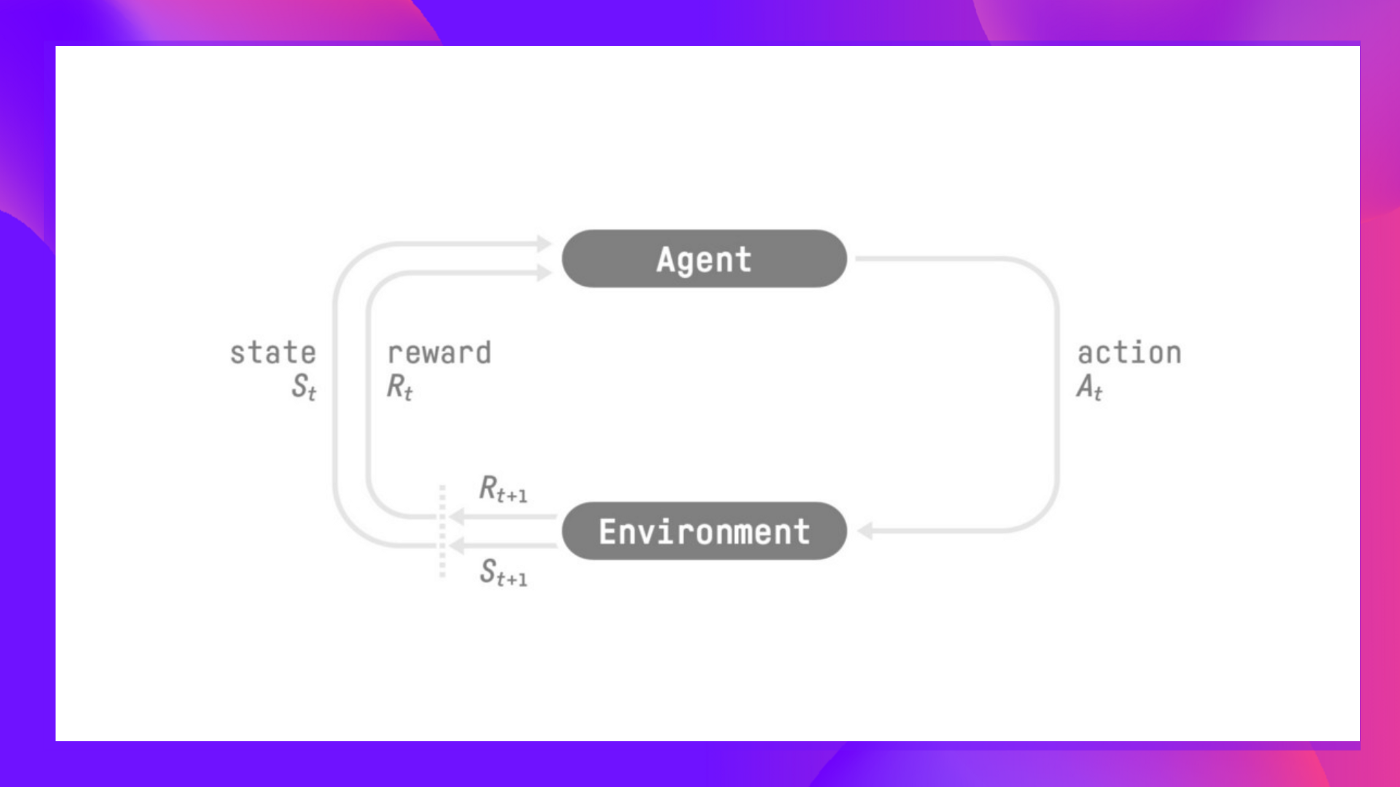

+# What is RL? A short recap [[what-is-rl]]

+

+In RL, we build an agent that can **make smart decisions**. For instance, an agent that **learns to play a video game.** Or a trading agent that **learns to maximize its benefits** by making smart decisions on **what stocks to buy and when to sell.**


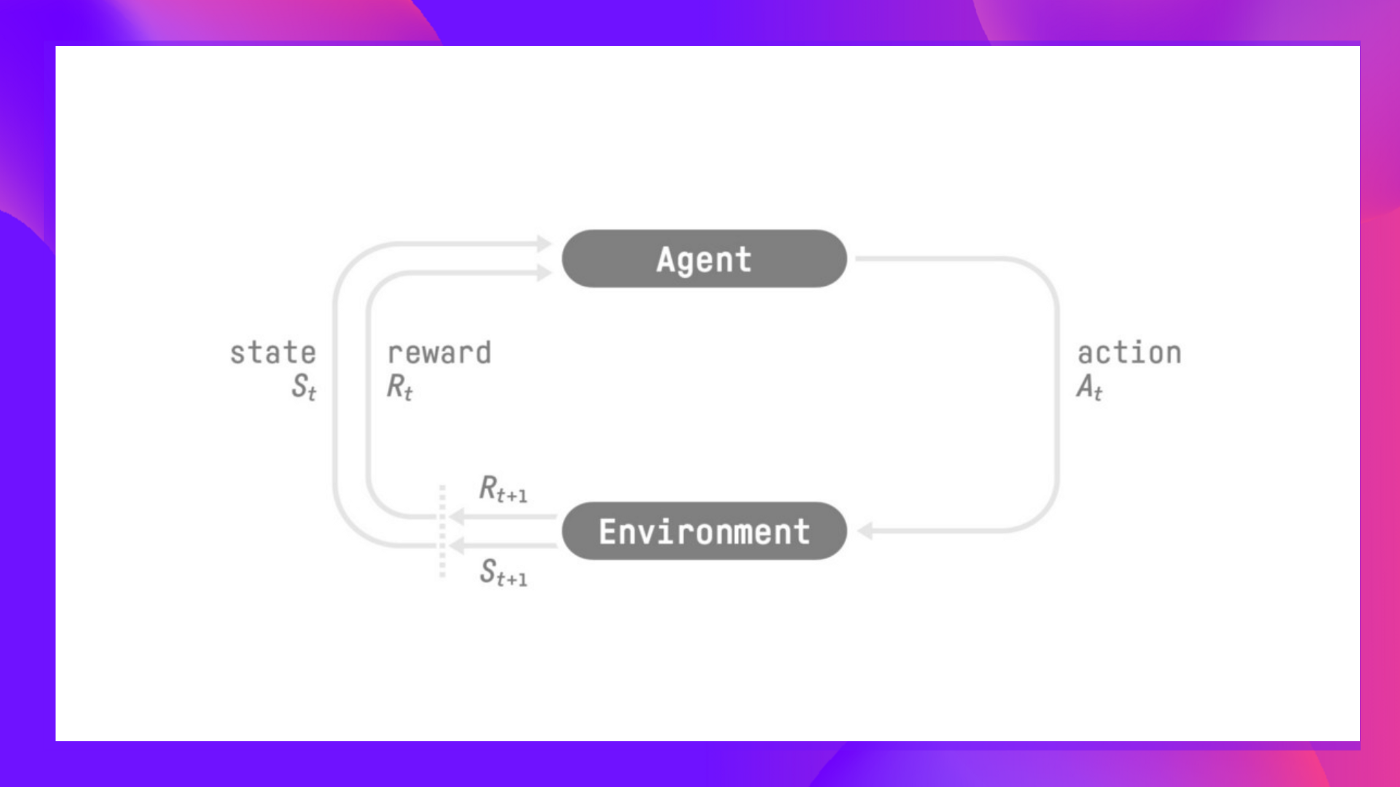

+But, to make intelligent decisions, our agent will learn from the environment by **interacting with it through trial and error** and receiving rewards (positive or negative) **as its only feedback.**

+

+Its goal **is to maximize its expected cumulative reward** (because of the reward hypothesis).

+

+**The agent's decision-making process is called the policy π:** given a state, a policy will output an action or a probability distribution over actions. That is, given an observation of the environment, a policy will provide an action (or a probability for each action) that the agent should take.


+**Our goal is to find an optimal policy π\***, i.e., a policy that leads to the best expected cumulative reward.

+

+And to find this optimal policy (hence solving the RL problem), there **are two main types of RL methods**:

+

+- *Policy-based methods*: **Train the policy directly** to learn which action to take given a state.

+- *Value-based methods*: **Train a value function** to learn **which state is more valuable** and use this value function **to take the action that leads to it.**


+And in this unit, **we'll dive deeper into the value-based methods.**


+With what we have learned so far, we know that to calculate \\(V(S_t)\\) (the value of a state), we need to calculate the return starting at that state and then following the policy forever after. **(The policy we define in the following example is a Greedy Policy; for simplification, we don't discount the reward.)**

+

+So to calculate \\(V(S_t)\\), we need to calculate the sum of the expected rewards. Hence:

+

+

+


+

+To calculate the value of State 1, we sum the rewards **the agent gets if it starts in State 1** and then follows the **policy for all the time steps.**

+

+This is equivalent to \\(V(S_{t})\\) = immediate reward \\(R_{t+1}\\) + discounted value of the next state \\(\gamma * V(S_{t+1})\\).

+

+

+

+


+

+In the interest of simplicity, here we don't discount, so \\(\gamma = 1\\).

+

+- The value of \\(V(S_{t+1})\\) = immediate reward \\(R_{t+2}\\) + discounted value of the next state (\\(\gamma * V(S_{t+2})\\)).

+- And so on.

+

+To recap, the idea of the Bellman equation is that instead of calculating each value as the sum of the expected return, **which is a long process**, we calculate the value as **the sum of the immediate reward + the discounted value of the state that follows.**
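A short sketch can check that the two ways of computing values agree. It assumes a single hypothetical episode with a deterministic policy and environment, so the "expected" return is just the sum along that one trajectory; `rewards[t]` stands for \\(R_{t+1}\\), the reward received after leaving \\(S_t\\):

```python
def value_by_summing(rewards: list[float], t: int, gamma: float = 1.0) -> float:
    """The long way: add up every (discounted) reward from step t to the end."""
    return sum(gamma ** k * r for k, r in enumerate(rewards[t:]))


def values_by_bellman(rewards: list[float], gamma: float = 1.0) -> list[float]:
    """The Bellman way: V(S_t) = R_{t+1} + gamma * V(S_{t+1}), filled in backwards."""
    values = [0.0] * (len(rewards) + 1)  # the terminal state is worth 0
    for t in reversed(range(len(rewards))):
        values[t] = rewards[t] + gamma * values[t + 1]
    return values[:-1]  # drop the terminal entry


# Hypothetical episode: three step penalties, then a reward for reaching the goal.
rewards = [-1.0, -1.0, -1.0, 10.0]
print(values_by_bellman(rewards))
print([value_by_summing(rewards, t) for t in range(len(rewards))])
```

The summing version re-adds the whole tail for every state, while the Bellman version reuses the value of the next state, so each value costs one addition.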

+

+Before going to the next section, think about the role of gamma in the Bellman equation. What happens if the value of gamma is very low (e.g. 0.1 or even 0)? What happens if the value is 1? What happens if the value is very high, such as a million?
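One way to explore this question is to compute the discounted return \\(R_{t+1} + \gamma R_{t+2} + \gamma^2 R_{t+3} + \dots\\) of the same hypothetical episode for several values of gamma. The reward values below are illustrative assumptions:

```python
def discounted_return(rewards: list[float], gamma: float) -> float:
    """G = R_1 + gamma * R_2 + gamma^2 * R_3 + ..."""
    return sum(gamma ** k * r for k, r in enumerate(rewards))


# Hypothetical episode: small step penalties, then a big reward at the end.
rewards = [-1.0, -1.0, -1.0, 10.0]

for gamma in (0.0, 0.1, 0.9, 1.0, 1_000_000.0):
    print(f"gamma={gamma}: G={discounted_return(rewards, gamma)}")
```

Comparing the printed returns shows how much weight the final reward receives as gamma grows.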

diff --git a/units/en/unit2/conclusion.mdx b/units/en/unit2/conclusion.mdx

new file mode 100644

index 0000000..f271ce0

--- /dev/null

+++ b/units/en/unit2/conclusion.mdx

@@ -0,0 +1,19 @@

+# Conclusion [[conclusion]]

+

+Congrats on finishing this chapter! There was a lot of information. And congrats on finishing the tutorials. You’ve just implemented your first RL agent from scratch and shared it on the Hub 🥳.

+

+When you study a new architecture, implementing it from scratch **is important to understand how it works.**

+

+It’s **normal if you still feel confused** by all these elements. **This was the same for me and for everyone who has studied RL.**

+

+Take time to really grasp the material before continuing.

+

+

+In the next chapter, we’re going to dive deeper by studying our first Deep Reinforcement Learning algorithm based on Q-Learning: Deep Q-Learning. And you'll train a **DQN agent with RL-Baselines3 Zoo to play Atari Games**.

+

+

+

+

+


+

+


+

+

+### Keep Learning, stay awesome 🤗

diff --git a/units/en/unit2/hands-on.mdx b/units/en/unit2/hands-on.mdx

new file mode 100644

index 0000000..d683cac

--- /dev/null

+++ b/units/en/unit2/hands-on.mdx

@@ -0,0 +1,14 @@

+# Hands-on [[hands-on]]

+

+Now that we've studied the Q-Learning algorithm, let's implement it from scratch and train our Q-Learning agent in two environments:

+1. [Frozen-Lake-v1 (non-slippery and slippery version)](https://www.gymlibrary.dev/environments/toy_text/frozen_lake/) ☃️: our agent will need to **go from the starting state (S) to the goal state (G)** by walking only on frozen tiles (F) and avoiding holes (H).

+2. [An autonomous taxi](https://www.gymlibrary.dev/environments/toy_text/taxi/) 🚖 will need **to learn to navigate** a city to **transport its passengers from point A to point B.**

+

+

+

+

+


+Thanks to a [leaderboard](https://huggingface.co/spaces/huggingface-projects/Deep-Reinforcement-Learning-Leaderboard), you'll be able to compare your results with other classmates and exchange best practices to improve your agent's scores. Who will win the challenge for Unit 2?

+

+

+**To start the hands-on, click on the Open In Colab button** 👇:

+

+[]()

diff --git a/units/en/unit2/introduction.mdx b/units/en/unit2/introduction.mdx

new file mode 100644

index 0000000..409f025

--- /dev/null

+++ b/units/en/unit2/introduction.mdx

@@ -0,0 +1,26 @@

+# Introduction to Q-Learning [[introduction-q-learning]]

+

+

+


+

+In the first unit of this class, we learned about Reinforcement Learning (RL), the RL process, and the different methods to solve an RL problem. We also **trained our first agents and uploaded them to the Hugging Face Hub.**

+

+In this unit, we're going to **dive deeper into one of the Reinforcement Learning methods: value-based methods** and study our first RL algorithm: **Q-Learning.**

+

+We'll also **implement our first RL agent from scratch**, a Q-Learning agent, and will train it in two environments:

+

+1. Frozen-Lake-v1 (non-slippery version): where our agent will need to **go from the starting state (S) to the goal state (G)** by walking only on frozen tiles (F) and avoiding holes (H).

+2. An autonomous taxi: where our agent will need **to learn to navigate** a city to **transport its passengers from point A to point B.**

+

+

+

+

+


+Concretely, we will:

+

+- Learn about **value-based methods**.

+- Learn about the **differences between Monte Carlo and Temporal Difference Learning**.

+- Study and implement **our first RL algorithm**: Q-Learning.

+

+This unit is **fundamental if you want to be able to work on Deep Q-Learning**: the first Deep RL algorithm that played Atari games and surpassed human-level performance on some of them (Breakout, Space Invaders, …).

+

+So let's get started! 🚀

diff --git a/units/en/unit2/mc-vs-td.mdx b/units/en/unit2/mc-vs-td.mdx

new file mode 100644

index 0000000..e78ee78

--- /dev/null

+++ b/units/en/unit2/mc-vs-td.mdx

@@ -0,0 +1,126 @@

+# Monte Carlo vs Temporal Difference Learning [[mc-vs-td]]

+

+The last thing we need to talk about before diving into Q-Learning is the two learning strategies.

+

+Remember that an RL agent **learns by interacting with its environment.** The idea is that the agent **uses the experience it collects**, together with the reward it gets, **to update its value or policy.**

+

+Monte Carlo and Temporal Difference Learning are two different **strategies on how to train our value function or our policy function.** Both of them **use experience to solve the RL problem.**

+

+On one hand, Monte Carlo uses **an entire episode of experience before learning.** On the other hand, Temporal Difference uses **only a single step (\\(S_t, A_t, R_{t+1}, S_{t+1}\\)) to learn.**
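To show what learning from a single step can look like for state values, here is a minimal sketch of the standard TD(0) update \\(V(S_t) \leftarrow V(S_t) + \alpha [R_{t+1} + \gamma V(S_{t+1}) - V(S_t)]\\). The learning rate `alpha`, the `done` flag, and the state names are assumptions for illustration; the action \\(A_t\\) is not needed to update a state value:

```python
def td0_update(V: dict[str, float], s: str, r: float, s_next: str,
               alpha: float = 0.1, gamma: float = 1.0, done: bool = False) -> float:
    """Updates V[s] in place from one transition and returns the TD error."""
    next_value = 0.0 if done else V[s_next]  # a terminal next state is worth 0
    td_target = r + gamma * next_value       # bootstrapped estimate of the return
    td_error = td_target - V[s]
    V[s] += alpha * td_error
    return td_error


# Hypothetical values and one observed transition A -> B with reward 1.
V = {"A": 0.0, "B": 0.5}
td0_update(V, "A", r=1.0, s_next="B", alpha=0.1, gamma=0.9)
print(V)
```

The target uses the current estimate `V[s_next]` instead of the real return, which is why TD can update right after each step.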

+

+We'll explain both of them **using a value-based method example.**

+

+## Monte Carlo: learning at the end of the episode [[monte-carlo]]

+

+Monte Carlo waits until the end of the episode, calculates \\(G_t\\) (return) and uses it as **a target for updating \\(V(S_t)\\).**
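A minimal sketch of this idea, assuming the common incremental form \\(V(S_t) \leftarrow V(S_t) + \alpha [G_t - V(S_t)]\\) with a learning rate `alpha` and the every-visit variant (both are assumptions, not stated above). The update only runs once the complete episode is available:

```python
def mc_update(V: dict[str, float], episode: list[tuple[str, float]],
              alpha: float = 0.1, gamma: float = 1.0) -> None:
    """Every-visit Monte Carlo: updates V only once the whole episode is known.

    `episode` holds (S_t, R_{t+1}) pairs in the order they were experienced.
    """
    G = 0.0
    # Walk backwards so that G_t = R_{t+1} + gamma * G_{t+1} reuses the later return.
    for state, reward in reversed(episode):
        G = reward + gamma * G
        V[state] += alpha * (G - V[state])  # move V(S_t) toward the target G_t


# Hypothetical finished episode: s0 -> s1 -> s2 -> terminal.
V = {"s0": 0.0, "s1": 0.0, "s2": 0.0}
mc_update(V, [("s0", -1.0), ("s1", -1.0), ("s2", 10.0)])
print(V)
```

Unlike the one-step TD sketch, the target here is the actual return \\(G_t\\) observed in the episode, so no estimate of the next state is involved.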

+