mirror of

https://github.com/huggingface/deep-rl-class.git

synced 2026-02-08 12:54:32 +08:00

Merge branch 'main' into ThomasSimonini/A2C


@@ -13,7 +13,7 @@ This repository contains the Deep Reinforcement Learning Course mdx files and no

|

||||

<br>

|

||||

<br>

|

||||

|

||||

# The documentation below is for v1.0 (depreciated)

|

||||

# The documentation below is for v1.0 (deprecated)

|

||||

|

||||

We're launching a **new version (v2.0) of the course starting December the 5th,**

|

||||

|

||||

@@ -26,7 +26,7 @@ The syllabus 📚: https://simoninithomas.github.io/deep-rl-course

|

||||

<br>

|

||||

<br>

|

||||

|

||||

# The documentation below is for v1.0 (depreciated)

|

||||

# The documentation below is for v1.0 (deprecated)

|

||||

|

||||

In this free course, you will:

|

||||

|

||||

|

||||

@@ -509,7 +509,7 @@

|

||||

"\n",

|

||||

"This step is the simplest:\n",

|

||||

"\n",

|

||||

"- Open the game Huggy in your browser: https://huggingface.co/spaces/ThomasSimonini/Huggy\n",

|

||||

"- Open the game Huggy in your browser: https://singularite.itch.io/huggy\n",

|

||||

"\n",

|

||||

"- Click on Play with my Huggy model\n",

|

||||

"\n",

|

||||

@@ -569,4 +569,4 @@

|

||||

},

|

||||

"nbformat": 4,

|

||||

"nbformat_minor": 0

|

||||

}

|

||||

}

|

||||

|

||||

5

notebooks/unit1/requirements-unit1.txt

Normal file


@@ -0,0 +1,5 @@

|

||||

stable-baselines3[extra]

|

||||

box2d

|

||||

box2d-kengz

|

||||

huggingface_sb3

|

||||

pyglet==1.5.1

|

||||

@@ -230,15 +230,6 @@

|

||||

"execution_count": null,

|

||||

"outputs": []

|

||||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"source": [

|

||||

"TODO CHANGE LINK OF THE REQUIREMENTS"

|

||||

],

|

||||

"metadata": {

|

||||

"id": "32e3NPYgH5ET"

|

||||

}

|

||||

},

|

||||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": null,

|

||||

@@ -247,7 +238,7 @@

|

||||

},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

"!pip install -r https://huggingface.co/spaces/ThomasSimonini/temp-space-requirements/raw/main/requirements/requirements-unit1.txt"

|

||||

"!pip install -r https://raw.githubusercontent.com/huggingface/deep-rl-class/main/notebooks/unit1/requirements-unit1.txt"

|

||||

]

|

||||

},

|

||||

{

|

||||

@@ -1155,4 +1146,4 @@

|

||||

},

|

||||

"nbformat": 4,

|

||||

"nbformat_minor": 0

|

||||

}

|

||||

}

|

||||

|

||||

@@ -127,7 +127,7 @@

|

||||

"source": [

"# Let's train a Deep Q-Learning agent playing Atari's Space Invaders 👾 and upload it to the Hub.\n",

|

||||

"\n",

|

||||

"To validate this hands-on for the certification process, you need to push your trained model to the Hub and **get a result of >= 500**.\n",

|

||||

"To validate this hands-on for the certification process, you need to push your trained model to the Hub and **get a result of >= 200**.\n",

|

||||

"\n",

|

||||

"To find your result, go to the leaderboard and find your model, **the result = mean_reward - std of reward**\n",

|

||||

"\n",

|

||||
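The rule quoted in this hunk (`result = mean_reward - std of reward`) can be checked locally before pushing a model. The sketch below is only an illustration: the `leaderboard_result` helper and the sample rewards are hypothetical, and it assumes the population standard deviation, since the leaderboard's exact evaluation code is not shown in this diff.

```python
from statistics import mean, pstdev


def leaderboard_result(episode_rewards):
    """Return mean_reward - std_reward for a list of per-episode rewards."""
    mean_reward = mean(episode_rewards)
    # Assumption: population standard deviation (divide by N, not N - 1).
    std_reward = pstdev(episode_rewards)
    return mean_reward - std_reward


# Hypothetical rewards from five evaluation episodes.
rewards = [250.0, 300.0, 350.0, 300.0, 300.0]
result = leaderboard_result(rewards)
print(f"result = {result:.2f}, reaches 200: {result >= 200}")
```

A high mean with a large spread can still fall below the threshold, which is why the standard deviation is subtracted.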

@@ -799,4 +799,4 @@

|

||||

},

|

||||

"nbformat": 4,

|

||||

"nbformat_minor": 0

|

||||

}

|

||||

}

|

||||

|

||||

6

notebooks/unit4/requirements-unit4.txt

Normal file


@@ -0,0 +1,6 @@

|

||||

gym

|

||||

git+https://github.com/ntasfi/PyGame-Learning-Environment.git

|

||||

git+https://github.com/qlan3/gym-games.git

|

||||

huggingface_hub

|

||||

imageio-ffmpeg

|

||||

pyyaml==6.0

|

||||

1614

notebooks/unit4/unit4.ipynb

Normal file


File diff suppressed because it is too large


844

notebooks/unit5/unit5.ipynb

Normal file


@@ -0,0 +1,844 @@

|

||||

{

|

||||

"cells": [

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {

|

||||

"id": "view-in-github",

|

||||

"colab_type": "text"

|

||||

},

|

||||

"source": [

|

||||

"<a href=\"https://colab.research.google.com/github/huggingface/deep-rl-class/blob/ThomasSimonini%2FMLAgents/notebooks/unit5/unit5.ipynb\" target=\"_parent\"><img src=\"https://colab.research.google.com/assets/colab-badge.svg\" alt=\"Open In Colab\"/></a>"

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {

|

||||

"id": "2D3NL_e4crQv"

|

||||

},

|

||||

"source": [

|

||||

"# Unit 5: An Introduction to ML-Agents\n",

|

||||

"\n"

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"source": [

|

||||

"<img src=\"https://huggingface.co/datasets/huggingface-deep-rl-course/course-images/resolve/main/en/unit7/thumbnail.png\" alt=\"Thumbnail\"/>\n",

|

||||

"\n",

|

||||

"In this notebook, you'll learn about ML-Agents and train two agents.\n",

|

||||

"\n",

|

||||

"- The first one will learn to **shoot snowballs onto spawning targets**.\n",

"- The second will need to press a button to spawn a pyramid, then navigate to the pyramid, knock it over, **and move to the gold brick at the top**. To do that, it will need to explore its environment, and we will use a technique called curiosity.\n",

|

||||

"\n",

"After that, you'll be able **to watch your agents playing directly in your browser**.\n",

|

||||

"\n",

|

||||

"For more information about the certification process, check this section 👉 https://huggingface.co/deep-rl-course/en/unit0/introduction#certification-process"

|

||||

],

|

||||

"metadata": {

|

||||

"id": "97ZiytXEgqIz"

|

||||

}

|

||||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"source": [

|

||||

"⬇️ Here is an example of what **you will achieve at the end of this unit.** ⬇️\n"

|

||||

],

|

||||

"metadata": {

|

||||

"id": "FMYrDriDujzX"

|

||||

}

|

||||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"source": [

|

||||

"<img src=\"https://huggingface.co/datasets/huggingface-deep-rl-course/course-images/resolve/main/en/unit7/pyramids.gif\" alt=\"Pyramids\"/>\n",

|

||||

"\n",

|

||||

"<img src=\"https://huggingface.co/datasets/huggingface-deep-rl-course/course-images/resolve/main/en/unit7/snowballtarget.gif\" alt=\"SnowballTarget\"/>"

|

||||

],

|

||||

"metadata": {

|

||||

"id": "cBmFlh8suma-"

|

||||

}

|

||||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"source": [

|

||||

"### 🎮 Environments: \n",

|

||||

"\n",

|

||||

"- [Pyramids](https://github.com/Unity-Technologies/ml-agents/blob/main/docs/Learning-Environment-Examples.md#pyramids)\n",

|

||||

"- SnowballTarget\n",

|

||||

"\n",

|

||||

"### 📚 RL-Library: \n",

|

||||

"\n",

|

||||

"- [ML-Agents (HuggingFace Experimental Version)](https://github.com/huggingface/ml-agents)\n",

|

||||

"\n",

"⚠ We're going to use an experimental version of ML-Agents where you can push Unity ML-Agents models to the Hub and load them from the Hub, so **you need to install the same version**."

|

||||

],

|

||||

"metadata": {

|

||||

"id": "A-cYE0K5iL-w"

|

||||

}

|

||||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"source": [

|

||||

"We're constantly trying to improve our tutorials, so **if you find some issues in this notebook**, please [open an issue on the GitHub Repo](https://github.com/huggingface/deep-rl-class/issues)."

|

||||

],

|

||||

"metadata": {

|

||||

"id": "qEhtaFh9i31S"

|

||||

}

|

||||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"source": [

|

||||

"## Objectives of this notebook 🏆\n",

|

||||

"\n",

|

||||

"At the end of the notebook, you will:\n",

|

||||

"\n",

"- Understand how **ML-Agents**, the environment library, works.\n",

|

||||

"- Be able to **train agents in Unity Environments**.\n"

|

||||

],

|

||||

"metadata": {

|

||||

"id": "j7f63r3Yi5vE"

|

||||

}

|

||||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"source": [

|

||||

"## This notebook is from the Deep Reinforcement Learning Course\n",

|

||||

"<img src=\"https://huggingface.co/datasets/huggingface-deep-rl-course/course-images/resolve/main/en/notebooks/deep-rl-course-illustration.jpg\" alt=\"Deep RL Course illustration\"/>"

|

||||

],

|

||||

"metadata": {

|

||||

"id": "viNzVbVaYvY3"

|

||||

}

|

||||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {

|

||||

"id": "6p5HnEefISCB"

|

||||

},

|

||||

"source": [

|

||||

"In this free course, you will:\n",

|

||||

"\n",

|

||||

"- 📖 Study Deep Reinforcement Learning in **theory and practice**.\n",

|

||||

"- 🧑💻 Learn to **use famous Deep RL libraries** such as Stable Baselines3, RL Baselines3 Zoo, CleanRL and Sample Factory 2.0.\n",

|

||||

"- 🤖 Train **agents in unique environments** \n",

|

||||

"\n",

"And more! Check 📚 the syllabus 👉 https://huggingface.co/deep-rl-course/communication/publishing-schedule\n",

|

||||

"\n",

|

||||

"Don’t forget to **<a href=\"http://eepurl.com/ic5ZUD\">sign up to the course</a>** (we are collecting your email to be able to **send you the links when each Unit is published and give you information about the challenges and updates).**\n",

|

||||

"\n",

|

||||

"\n",

|

||||

"The best way to keep in touch is to join our discord server to exchange with the community and with us 👉🏻 https://discord.gg/ydHrjt3WP5"

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {

|

||||

"id": "Y-mo_6rXIjRi"

|

||||

},

|

||||

"source": [

|

||||

"## Prerequisites 🏗️\n",

|

||||

"Before diving into the notebook, you need to:\n",

|

||||

"\n",

|

||||

"🔲 📚 **Study [what is ML-Agents and how it works by reading Unit 5](https://huggingface.co/deep-rl-course/unit5/introduction)** 🤗 "

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"source": [

|

||||

"# Let's train our agents 🚀\n",

|

||||

"\n",

|

||||

"The ML-Agents integration on the Hub is **still experimental**, some features will be added in the future. \n",

|

||||

"\n",

"But for now, **to validate this hands-on for the certification process, you just need to push your trained models to the Hub**. There are no results to attain to validate this one. But if you want to get nice results, you can try to attain:\n",

|

||||

"\n",

|

||||

"- For `Pyramids` : Mean Reward = 1.75\n",

|

||||

"- For `SnowballTarget` : Mean Reward = 15 or 30 targets hit in an episode.\n"

|

||||

],

|

||||

"metadata": {

|

||||

"id": "xYO1uD5Ujgdh"

|

||||

}

|

||||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"source": [

|

||||

"## Set the GPU 💪\n",

|

||||

"- To **accelerate the agent's training, we'll use a GPU**. To do that, go to `Runtime > Change Runtime type`\n",

|

||||

"\n",

|

||||

"<img src=\"https://huggingface.co/datasets/huggingface-deep-rl-course/course-images/resolve/main/en/notebooks/gpu-step1.jpg\" alt=\"GPU Step 1\">"

|

||||

],

|

||||

"metadata": {

|

||||

"id": "DssdIjk_8vZE"

|

||||

}

|

||||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"source": [

|

||||

"- `Hardware Accelerator > GPU`\n",

|

||||

"\n",

|

||||

"<img src=\"https://huggingface.co/datasets/huggingface-deep-rl-course/course-images/resolve/main/en/notebooks/gpu-step2.jpg\" alt=\"GPU Step 2\">"

|

||||

],

|

||||

"metadata": {

|

||||

"id": "sTfCXHy68xBv"

|

||||

}

|

||||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {

|

||||

"id": "an3ByrXYQ4iK"

|

||||

},

|

||||

"source": [

|

||||

"## Clone the repository and install the dependencies 🔽\n",

|

||||

"- We need to clone the repository, that **contains the experimental version of the library that allows you to push your trained agent to the Hub.**"

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": null,

|

||||

"metadata": {

|

||||

"id": "6WNoL04M7rTa"

|

||||

},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

"%%capture\n",

|

||||

"# Clone the repository\n",

|

||||

"!git clone --depth 1 https://github.com/huggingface/ml-agents/ "

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": null,

|

||||

"metadata": {

|

||||

"id": "d8wmVcMk7xKo"

|

||||

},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

"%%capture\n",

|

||||

"# Go inside the repository and install the package\n",

|

||||

"%cd ml-agents\n",

|

||||

"!pip3 install -e ./ml-agents-envs\n",

|

||||

"!pip3 install -e ./ml-agents"

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"source": [

|

||||

"## SnowballTarget ⛄\n",

|

||||

"\n",

"If you need a refresher on how this environment works, check this section 👉\n",

|

||||

"https://huggingface.co/deep-rl-course/unit5/snowball-target"

|

||||

],

|

||||

"metadata": {

|

||||

"id": "R5_7Ptd_kEcG"

|

||||

}

|

||||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {

|

||||

"id": "HRY5ufKUKfhI"

|

||||

},

|

||||

"source": [

|

||||

"### Download and move the environment zip file in `./training-envs-executables/linux/`\n",

|

||||

"- Our environment executable is in a zip file.\n",

|

||||

"- We need to download it and place it to `./training-envs-executables/linux/`\n",

"- We use a Linux executable because we use Colab, and Colab machines run Ubuntu (Linux)"

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": null,

|

||||

"metadata": {

|

||||

"id": "C9Ls6_6eOKiA"

|

||||

},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

"# Here, we create training-envs-executables and linux\n",

|

||||

"!mkdir ./training-envs-executables\n",

|

||||

"!mkdir ./training-envs-executables/linux"

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {

|

||||

"id": "jsoZGxr1MIXY"

|

||||

},

|

||||

"source": [

|

||||

"Download the file SnowballTarget.zip from https://drive.google.com/file/d/1YHHLjyj6gaZ3Gemx1hQgqrPgSS2ZhmB5 using `wget`. \n",

|

||||

"\n",

|

||||

"Check out the full solution to download large files from GDrive [here](https://bcrf.biochem.wisc.edu/2021/02/05/download-google-drive-files-using-wget/)"

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": null,

|

||||

"metadata": {

|

||||

"id": "QU6gi8CmWhnA"

|

||||

},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

"!wget --load-cookies /tmp/cookies.txt \"https://docs.google.com/uc?export=download&confirm=$(wget --quiet --save-cookies /tmp/cookies.txt --keep-session-cookies --no-check-certificate 'https://docs.google.com/uc?export=download&id=1YHHLjyj6gaZ3Gemx1hQgqrPgSS2ZhmB5' -O- | sed -rn 's/.*confirm=([0-9A-Za-z_]+).*/\\1\\n/p')&id=1YHHLjyj6gaZ3Gemx1hQgqrPgSS2ZhmB5\" -O ./training-envs-executables/linux/SnowballTarget.zip && rm -rf /tmp/cookies.txt"

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"source": [

"We unzip the `SnowballTarget.zip` file"

|

||||

],

|

||||

"metadata": {

|

||||

"id": "_LLVaEEK3ayi"

|

||||

}

|

||||

},

|

||||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": null,

|

||||

"metadata": {

|

||||

"id": "8FPx0an9IAwO"

|

||||

},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

"%%capture\n",

|

||||

"!unzip -d ./training-envs-executables/linux/ ./training-envs-executables/linux/SnowballTarget.zip"

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {

|

||||

"id": "nyumV5XfPKzu"

|

||||

},

|

||||

"source": [

|

||||

"Make sure your file is accessible "

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": null,

|

||||

"metadata": {

|

||||

"id": "EdFsLJ11JvQf"

|

||||

},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

"!chmod -R 755 ./training-envs-executables/linux/SnowballTarget"

|

||||

]

|

||||

},
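The unzip and `chmod` cells above can also be expressed in plain Python, which is convenient outside Colab. This is a minimal sketch, not part of the notebook: the `extract_env` helper is hypothetical. It sets the permissions explicitly because Python's `zipfile` module does not restore Unix permission bits when extracting.

```python
import os
import zipfile
from pathlib import Path


def extract_env(zip_path, target_dir):
    """Unzip an environment archive and make every extracted file executable."""
    target = Path(target_dir)
    # Safe to re-run: no error if the folder already exists.
    target.mkdir(parents=True, exist_ok=True)
    with zipfile.ZipFile(zip_path) as archive:
        archive.extractall(target)
        names = archive.namelist()
    extracted = []
    for name in names:
        path = target / name
        if path.is_file():
            # Rough equivalent of `chmod 755`: rwx for owner, r-x for others.
            os.chmod(path, 0o755)
            extracted.append(path)
    return extracted
```

For the SnowballTarget archive, a call would look like `extract_env("./training-envs-executables/linux/SnowballTarget.zip", "./training-envs-executables/linux/")`.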

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"source": [

|

||||

"### Define the SnowballTarget config file\n",

"- In ML-Agents, you define the **training hyperparameters in `config.yaml` files.**\n",

|

||||

"\n",

"There are multiple hyperparameters. To understand them better, read the explanation of each one in [the documentation](https://github.com/Unity-Technologies/ml-agents/blob/release_20_docs/docs/Training-Configuration-File.md)\n",

|

||||

"\n",

|

||||

"\n",

"So you need to create a `SnowballTarget.yaml` config file in `./config/ppo/` (inside the cloned `ml-agents` folder, i.e. `/content/ml-agents/config/ppo/` on Colab).\n",

|

||||

"\n",

"We'll give you here a first version of this config (to copy and paste into your `SnowballTarget.yaml` file), **but you should modify it**.\n",

|

||||

"\n",

"```\n",
"behaviors:\n",
"  SnowballTarget:\n",
"    trainer_type: ppo\n",
"    summary_freq: 10000\n",
"    keep_checkpoints: 10\n",
"    checkpoint_interval: 50000\n",
"    max_steps: 200000\n",
"    time_horizon: 64\n",
"    threaded: true\n",
"    hyperparameters:\n",
"      learning_rate: 0.0003\n",
"      learning_rate_schedule: linear\n",
"      batch_size: 128\n",
"      buffer_size: 2048\n",
"      beta: 0.005\n",
"      epsilon: 0.2\n",
"      lambd: 0.95\n",
"      num_epoch: 3\n",
"    network_settings:\n",
"      normalize: false\n",
"      hidden_units: 256\n",
"      num_layers: 2\n",
"      vis_encode_type: simple\n",
"    reward_signals:\n",
"      extrinsic:\n",
"        gamma: 0.99\n",
"        strength: 1.0\n",
"```"

|

||||

],

|

||||

"metadata": {

|

||||

"id": "NAuEq32Mwvtz"

|

||||

}

|

||||

},
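Instead of creating the file by hand, the config from the cell above can be written from Python. This is a sketch under assumptions: `SNOWBALL_CONFIG` and `write_config` are hypothetical names, and the indentation follows the usual ML-Agents nesting (`behaviors` → behavior name → settings), which the flattened diff view does not show.

```python
import tempfile
from pathlib import Path

# Values copied from the notebook cell; nesting is an assumption.
SNOWBALL_CONFIG = """\
behaviors:
  SnowballTarget:
    trainer_type: ppo
    summary_freq: 10000
    keep_checkpoints: 10
    checkpoint_interval: 50000
    max_steps: 200000
    time_horizon: 64
    threaded: true
    hyperparameters:
      learning_rate: 0.0003
      learning_rate_schedule: linear
      batch_size: 128
      buffer_size: 2048
      beta: 0.005
      epsilon: 0.2
      lambd: 0.95
      num_epoch: 3
    network_settings:
      normalize: false
      hidden_units: 256
      num_layers: 2
      vis_encode_type: simple
    reward_signals:
      extrinsic:
        gamma: 0.99
        strength: 1.0
"""


def write_config(config_dir, filename="SnowballTarget.yaml"):
    """Write the SnowballTarget config into config_dir and return its path."""
    config_path = Path(config_dir) / filename
    config_path.parent.mkdir(parents=True, exist_ok=True)
    config_path.write_text(SNOWBALL_CONFIG)
    return config_path


# Demo in a temporary folder; in the notebook the target would be ./config/ppo
demo_path = write_config(Path(tempfile.mkdtemp()) / "config" / "ppo")
print(demo_path.name)
```

Keeping the config as a string in the notebook makes it easy to tweak a value such as `max_steps` and rewrite the file before each run.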

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"source": [

|

||||

"<img src=\"https://huggingface.co/datasets/huggingface-deep-rl-course/course-images/resolve/main/en/unit7/snowballfight_config1.png\" alt=\"Config SnowballTarget\"/>\n",

|

||||

"<img src=\"https://huggingface.co/datasets/huggingface-deep-rl-course/course-images/resolve/main/en/unit7/snowballfight_config2.png\" alt=\"Config SnowballTarget\"/>"

|

||||

],

|

||||

"metadata": {

|

||||

"id": "4U3sRH4N4h_l"

|

||||

}

|

||||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"source": [

"As an experiment, you should also try to modify some other hyperparameters. Unity provides very [good documentation explaining each of them here](https://github.com/Unity-Technologies/ml-agents/blob/main/docs/Training-Configuration-File.md).\n",

|

||||

"\n",

|

||||

"Now that you've created the config file and understand what most hyperparameters do, we're ready to train our agent 🔥."

|

||||

],

|

||||

"metadata": {

|

||||

"id": "JJJdo_5AyoGo"

|

||||

}

|

||||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {

|

||||

"id": "f9fI555bO12v"

|

||||

},

|

||||

"source": [

|

||||

"### Train the agent\n",

|

||||

"\n",

|

||||

"To train our agent, we just need to **launch mlagents-learn and select the executable containing the environment.**\n",

|

||||

"\n",

|

||||

"We define four parameters:\n",

|

||||

"\n",

|

||||

"1. `mlagents-learn <config>`: the path where the hyperparameter config file is.\n",

|

||||

"2. `--env`: where the environment executable is.\n",

"3. `--run-id`: the name you want to give to your training run.\n",

|

||||

"4. `--no-graphics`: to not launch the visualization during the training.\n",

|

||||

"\n",

|

||||

"<img src=\"https://huggingface.co/datasets/huggingface-deep-rl-course/course-images/resolve/main/en/unit7/mlagentslearn.png\" alt=\"MlAgents learn\"/>\n",

|

||||

"\n",

|

||||

"Train the model and use the `--resume` flag to continue training in case of interruption. \n",

|

||||

"\n",

"> The cell will fail the first time you use `--resume`. Run it again to bypass the error. \n",

|

||||

"\n"

|

||||

]

|

||||

},
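The four parameters listed above, plus the optional `--resume` flag, can be assembled programmatically. The sketch below only builds and prints the command; `build_learn_command` is a hypothetical helper, and it uses only the flags named in this notebook.

```python
import shlex


def build_learn_command(config_path, env_path, run_id, resume=False):
    """Assemble the mlagents-learn argument list described in the notebook."""
    command = [
        "mlagents-learn",
        config_path,             # path to the hyperparameter config file
        f"--env={env_path}",     # path to the environment executable
        f"--run-id={run_id}",    # name of the training run
        "--no-graphics",         # do not open the visualization window
    ]
    if resume:
        command.append("--resume")  # continue an interrupted run
    return command


command = build_learn_command(
    "./config/ppo/SnowballTarget.yaml",
    "./training-envs-executables/linux/SnowballTarget/SnowballTarget",
    "SnowballTarget1",
)
print(shlex.join(command))
```

Passing the list to `subprocess.run(command, check=True)` would launch the training without going through a shell, which avoids quoting problems when a run id contains spaces.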

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"source": [

"The training will take 10 to 35 minutes depending on your config. Go take a ☕️, you deserve it 🤗."

|

||||

],

|

||||

"metadata": {

|

||||

"id": "lN32oWF8zPjs"

|

||||

}

|

||||

},

|

||||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": null,

|

||||

"metadata": {

|

||||

"id": "bS-Yh1UdHfzy"

|

||||

},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

"!mlagents-learn ./config/ppo/SnowballTarget.yaml --env=./training-envs-executables/linux/SnowballTarget/SnowballTarget --run-id=\"SnowballTarget1\" --no-graphics"

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {

|

||||

"id": "5Vue94AzPy1t"

|

||||

},

|

||||

"source": [

|

||||

"### Push the agent to the 🤗 Hub\n",

|

||||

"\n",

"- Now that we trained our agent, we’re **ready to push it to the Hub to be able to visualize it playing in your browser 🔥.**"

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"source": [

|

||||

"To be able to share your model with the community there are three more steps to follow:\n",

|

||||

"\n",

"1️⃣ (If it's not already done) create a Hugging Face account ➡ https://huggingface.co/join\n",

|

||||

"\n",

|

||||

"2️⃣ Sign in and then, you need to store your authentication token from the Hugging Face website.\n",

|

||||

"- Create a new token (https://huggingface.co/settings/tokens) **with write role**\n",

|

||||

"\n",

|

||||

"<img src=\"https://huggingface.co/datasets/huggingface-deep-rl-course/course-images/resolve/main/en/notebooks/create-token.jpg\" alt=\"Create HF Token\">\n",

|

||||

"\n",

|

||||

"- Copy the token \n",

|

||||

"- Run the cell below and paste the token"

|

||||

],

|

||||

"metadata": {

|

||||

"id": "izT6FpgNzZ6R"

|

||||

}

|

||||

},

|

||||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": null,

|

||||

"metadata": {

|

||||

"id": "rKt2vsYoK56o"

|

||||

},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

"from huggingface_hub import notebook_login\n",

|

||||

"notebook_login()"

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"source": [

|

||||

"If you don't want to use a Google Colab or a Jupyter Notebook, you need to use this command instead: `huggingface-cli login`"

|

||||

],

|

||||

"metadata": {

|

||||

"id": "aSU9qD9_6dem"

|

||||

}

|

||||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"source": [

|

||||

"Then, we simply need to run `mlagents-push-to-hf`.\n",

|

||||

"\n",

|

||||

"And we define 4 parameters:\n",

|

||||

"\n",

|

||||

"1. `--run-id`: the name of the training run id.\n",

"2. `--local-dir`: where the agent was saved. It’s `results/<run-id name>`, so for the run above it is `results/SnowballTarget1`.\n",

"3. `--repo-id`: the name of the Hugging Face repo you want to create or update. It’s always `<your huggingface username>/<the repo name>`\n",

|

||||

"If the repo does not exist **it will be created automatically**\n",

"4. `--commit-message`: since HF repos are git repositories, you need to define a commit message.\n",

|

||||

"\n",

|

||||

"<img src=\"https://huggingface.co/datasets/huggingface-deep-rl-course/course-images/resolve/main/en/unit7/mlagentspushtohub.png\" alt=\"Push to Hub\"/>\n",

|

||||

"\n",

|

||||

"For instance:\n",

|

||||

"\n",

|

||||

"`!mlagents-push-to-hf --run-id=\"SnowballTarget1\" --local-dir=\"./results/SnowballTarget1\" --repo-id=\"ThomasSimonini/ppo-SnowballTarget\" --commit-message=\"First Push\"`"

|

||||

],

|

||||

"metadata": {

|

||||

"id": "KK4fPfnczunT"

|

||||

}

|

||||

},
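The push command can be built the same way, with a small check that the repo id has the `<username>/<repo name>` shape described above. This is an illustrative sketch: `build_push_command` and the `your-username` placeholder are hypothetical, and only the four flags named in this cell are used.

```python
import shlex


def build_push_command(run_id, local_dir, repo_id, commit_message):
    """Assemble the mlagents-push-to-hf argument list described in the notebook."""
    parts = repo_id.split("/")
    if len(parts) != 2 or not all(parts):
        raise ValueError("repo_id must look like <username>/<repo name>")
    return [
        "mlagents-push-to-hf",
        f"--run-id={run_id}",
        f"--local-dir={local_dir}",
        f"--repo-id={repo_id}",
        f"--commit-message={commit_message}",
    ]


push_command = build_push_command(
    "SnowballTarget1",
    "./results/SnowballTarget1",
    "your-username/ppo-SnowballTarget",  # placeholder: use your own username
    "First Push",
)
# shlex.join quotes the commit message because it contains a space.
print(shlex.join(push_command))
```

Building the arguments as a list keeps each value in its own argument, so a commit message with spaces does not need manual quoting.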

|

||||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": null,

|

||||

"metadata": {

|

||||

"id": "dGEFAIboLVc6"

|

||||

},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

"!mlagents-push-to-hf --run-id= # Add your run id --local-dir= # Your local dir --repo-id= # Your repo id --commit-message= # Your commit message"

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"source": [

"If everything worked, you should see this at the end of the process (but with a different URL 😆):\n",

|

||||

"\n",

|

||||

"\n",

|

||||

"\n",

|

||||

"```\n",

|

||||

"Your model is pushed to the hub. You can view your model here: https://huggingface.co/ThomasSimonini/ppo-SnowballTarget\n",

|

||||

"```\n",

|

||||

"\n",

"It’s the link to your model. It contains a model card that explains how to use it, your Tensorboard and your config file. **What’s awesome is that it’s a git repository, which means you can have different commits, update your repository with a new push, etc.**"

|

||||

],

|

||||

"metadata": {

|

||||

"id": "yborB0850FTM"

|

||||

}

|

||||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"source": [

"But now comes the best part: **being able to visualize your agent online 👀.**"

|

||||

],

|

||||

"metadata": {

|

||||

"id": "5Uaon2cg0NrL"

|

||||

}

|

||||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"source": [

|

||||

"### Watch your agent playing 👀\n",

|

||||

"\n",

|

||||

"For this step it’s simple:\n",

|

||||

"\n",

|

||||

"1. Remember your repo-id\n",

|

||||

"\n",

|

||||

"2. Go here: https://singularite.itch.io/snowballtarget\n",

|

||||

"\n",

|

||||

"3. Launch the game and put it in full screen by clicking on the bottom right button\n",

|

||||

"\n",

|

||||

"<img src=\"https://huggingface.co/datasets/huggingface-deep-rl-course/course-images/resolve/main/en/unit7/snowballtarget_load.png\" alt=\"Snowballtarget load\"/>"

|

||||

],

|

||||

"metadata": {

|

||||

"id": "VMc4oOsE0QiZ"

|

||||

}

|

||||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"source": [

|

||||

"1. In step 1, choose your model repository which is the model id (in my case ThomasSimonini/ppo-SnowballTarget).\n",

|

||||

"\n",

|

||||

"2. In step 2, **choose what model you want to replay**:\n",

"   - I have multiple ones, since we saved a model every 50000 timesteps. \n",

"   - But if I want the most recent one, I choose `SnowballTarget.onnx`\n",

|

||||

"\n",

"👉 What’s nice **is to try different model checkpoints to see the improvement of the agent.**\n",

|

||||

"\n",

|

||||

"And don't hesitate to share the best score your agent gets on discord in #rl-i-made-this channel 🔥\n",

|

||||

"\n",

|

||||

"Let's now try a harder environment called Pyramids..."

|

||||

],

|

||||

"metadata": {

|

||||

"id": "Djs8c5rR0Z8a"

|

||||

}

|

||||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"source": [

|

||||

"## Pyramids 🏆\n",

|

||||

"\n",

|

||||

"### Download and move the environment zip file in `./training-envs-executables/linux/`\n",

|

||||

"- Our environment executable is in a zip file.\n",

|

||||

"- We need to download it and place it to `./training-envs-executables/linux/`\n",

"- We use a Linux executable because we use Colab, and Colab machines run Ubuntu (Linux)"

|

||||

],

|

||||

"metadata": {

|

||||

"id": "rVMwRi4y_tmx"

|

||||

}

|

||||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {

|

||||

"id": "NyqYYkLyAVMK"

|

||||

},

|

||||

"source": [

|

||||

"Download the file Pyramids.zip from https://drive.google.com/uc?export=download&id=1UiFNdKlsH0NTu32xV-giYUEVKV4-vc7H using `wget`. Check out the full solution to download large files from GDrive [here](https://bcrf.biochem.wisc.edu/2021/02/05/download-google-drive-files-using-wget/)"

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": null,

|

||||

"metadata": {

|

||||

"id": "AxojCsSVAVMP"

|

||||

},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

"!wget --load-cookies /tmp/cookies.txt \"https://docs.google.com/uc?export=download&confirm=$(wget --quiet --save-cookies /tmp/cookies.txt --keep-session-cookies --no-check-certificate 'https://docs.google.com/uc?export=download&id=1UiFNdKlsH0NTu32xV-giYUEVKV4-vc7H' -O- | sed -rn 's/.*confirm=([0-9A-Za-z_]+).*/\\1\\n/p')&id=1UiFNdKlsH0NTu32xV-giYUEVKV4-vc7H\" -O ./training-envs-executables/linux/Pyramids.zip && rm -rf /tmp/cookies.txt"

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {

|

||||

"id": "bfs6CTJ1AVMP"

|

||||

},

|

||||

"source": [

|

||||

"**OR** Download directly to local machine and then drag and drop the file from local machine to `./training-envs-executables/linux`"

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {

|

||||

"id": "H7JmgOwcSSmF"

|

||||

},

|

||||

"source": [

|

||||

"Wait for the upload to finish and then run the command below. \n",

|

||||

"\n",

|

||||

""

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"source": [

|

||||

"Unzip it"

|

||||

],

|

||||

"metadata": {

|

||||

"id": "iWUUcs0_794U"

|

||||

}

|

||||

},

|

||||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": null,

|

||||

"metadata": {

|

||||

"id": "i2E3K4V2AVMP"

|

||||

},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

"%%capture\n",

|

||||

"!unzip -d ./training-envs-executables/linux/ ./training-envs-executables/linux/Pyramids.zip"

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {

|

||||

"id": "KmKYBgHTAVMP"

|

||||

},

|

||||

"source": [

|

||||

"Make sure your file is accessible "

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": null,

|

||||

"metadata": {

|

||||

"id": "Im-nwvLPAVMP"

|

||||

},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

"!chmod -R 755 ./training-envs-executables/linux/Pyramids/Pyramids"

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"source": [

|

||||

"### Modify the PyramidsRND config file\n",

|

||||

"- Contrary to the first environment which was a custom one, **Pyramids was made by the Unity team**.\n",

"- So the PyramidsRND config file already exists, in `./config/ppo/PyramidsRND.yaml` (inside the cloned `ml-agents` folder).\n",

"- You might ask why \"RND\" appears in PyramidsRND. RND stands for *random network distillation*; it's a way to generate curiosity rewards. If you want to know more, we wrote an article explaining this technique: https://medium.com/data-from-the-trenches/curiosity-driven-learning-through-random-network-distillation-488ffd8e5938\n",

|

||||

"\n",

|

||||

"For this training, we’ll modify one thing:\n",

|

||||

"- The total training steps hyperparameter is too high since we can hit the benchmark (mean reward = 1.75) in only 1M training steps.\n",

"👉 To do that, we go to `config/ppo/PyramidsRND.yaml` **and set `max_steps` to 1000000.**\n",

|

||||

"\n",

|

||||

"<img src=\"https://huggingface.co/datasets/huggingface-deep-rl-course/course-images/resolve/main/en/unit7/pyramids-config.png\" alt=\"Pyramids config\"/>"

|

||||

],

|

||||

"metadata": {

|

||||

"id": "fqceIATXAgih"

|

||||

}

|

||||

},
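The `max_steps` change described above can also be scripted rather than edited by hand. The sketch below is one possible approach, using a regular expression so it needs no YAML library; `set_max_steps` is a hypothetical helper and the sample text is illustrative, not the real contents of `PyramidsRND.yaml`.

```python
import re

# Matches a whole "max_steps: <number>" line and captures everything before the number.
_MAX_STEPS = re.compile(r"^([ \t]*max_steps:[ \t]*)\d+[ \t]*$", re.MULTILINE)


def set_max_steps(yaml_text, new_value):
    """Return yaml_text with every max_steps value replaced by new_value."""
    updated, count = _MAX_STEPS.subn(rf"\g<1>{int(new_value)}", yaml_text)
    if count == 0:
        raise ValueError("no max_steps entry found")
    return updated


# Illustrative snippet only; the real file has many more settings.
sample = "behaviors:\n  Pyramids:\n    max_steps: 3000000\n    time_horizon: 128\n"
print(set_max_steps(sample, 1000000))
```

In the notebook this could be applied with `Path("config/ppo/PyramidsRND.yaml")`, reading the text, passing it through `set_max_steps`, and writing the result back.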

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"source": [

"As an experiment, you should also try to modify some other hyperparameters. Unity provides very [good documentation explaining each of them here](https://github.com/Unity-Technologies/ml-agents/blob/main/docs/Training-Configuration-File.md).\n",

|

||||

"\n",

|

||||

"We’re now ready to train our agent 🔥."

|

||||

],

|

||||

"metadata": {

|

||||

"id": "RI-5aPL7BWVk"

|

||||

}

|

||||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"source": [

|

||||

"### Train the agent\n",

|

||||

"\n",

|

||||

"The training will take 30 to 45min depending on your machine, go take a ☕️you deserve it 🤗."

|

||||

],

|

||||

"metadata": {

|

||||

"id": "s5hr1rvIBdZH"

|

||||

}

|

||||

},

|

||||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": null,

|

||||

"metadata": {

|

||||

"id": "fXi4-IaHBhqD"

|

||||

},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

"!mlagents-learn ./config/ppo/PyramidsRND.yaml --env=./training-envs-executables/linux/Pyramids/Pyramids --run-id=\"Pyramids Training\" --no-graphics"

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {

|

||||

"id": "txonKxuSByut"

|

||||

},

|

||||

"source": [

|

||||

"### Push the agent to the 🤗 Hub\n",

|

||||

"\n",

|

||||

"- Now that we trained our agent, we’re **ready to push it to the Hub to be able to visualize it playing on your browser🔥.**"

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "code",

|

||||

"source": [],

|

||||

"metadata": {

|

||||

"id": "JZ53caJ99sX_"

|

||||

},

|

||||

"execution_count": null,

|

||||

"outputs": []

|

||||

},

|

||||

{

|

||||

"cell_type": "code",

|

||||

"source": [

|

||||

"!mlagents-push-to-hf --run-id= # Add your run id --local-dir= # Your local dir --repo-id= # Your repo id --commit-message= # Your commit message"

|

||||

],

|

||||

"metadata": {

|

||||

"id": "yiEQbv7rB4mU"

|

||||

},

|

||||

"execution_count": null,

|

||||

"outputs": []

|

||||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"source": [

|

||||

"### Watch your agent playing 👀\n",

|

||||

"\n",

|

||||

"The temporary link for Pyramids demo is: https://singularite.itch.io/pyramids"

|

||||

],

|

||||

"metadata": {

|

||||

"id": "7aZfgxo-CDeQ"

|

||||

}

|

||||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"source": [

|

||||

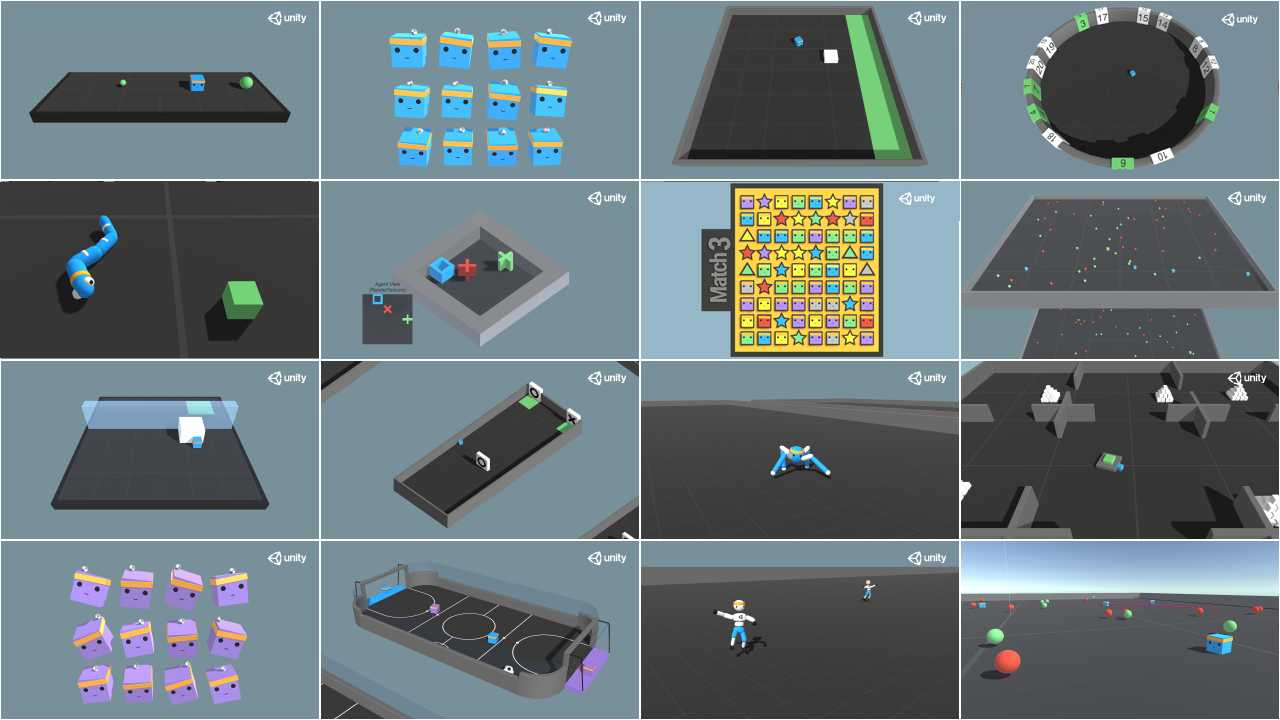

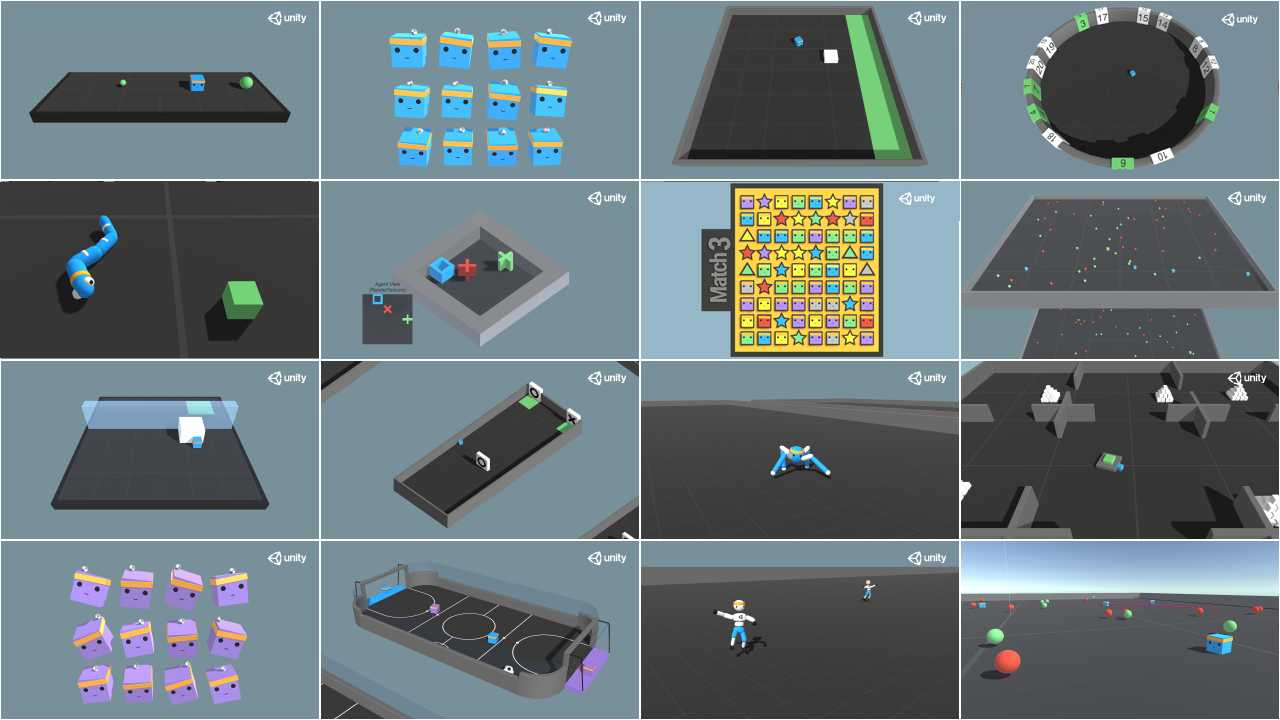

"### 🎁 Bonus: Why not train on another environment?\n",

|

||||

"Now that you know how to train an agent using MLAgents, **why not try another environment?** \n",

|

||||

"\n",

|

||||

"MLAgents provides 18 different and we’re building some custom ones. The best way to learn is to try things of your own, have fun.\n",

|

||||

"\n"

|

||||

],

|

||||

"metadata": {

|

||||

"id": "hGG_oq2n0wjB"

|

||||

}

|

||||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"source": [

|

||||

""

|

||||

],

|

||||

"metadata": {

|

||||

"id": "KSAkJxSr0z6-"

|

||||

}

|

||||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"source": [

|

||||

"You have the full list of the one currently available on Hugging Face here 👉 https://github.com/huggingface/ml-agents#the-environments\n",

|

||||

"\n",

|

||||

"For the demos to visualize your agent, the temporary link is: https://singularite.itch.io (temporary because we'll also put the demos on Hugging Face Space)\n",

|

||||

"\n",

|

||||

"For now we have integrated: \n",

|

||||

"- [Worm](https://singularite.itch.io/worm) demo where you teach a **worm to crawl**.\n",

|

||||

"- [Walker](https://singularite.itch.io/walker) demo where you teach an agent **to walk towards a goal**.\n",

|

||||

"\n",

|

||||

"If you want new demos to be added, please open an issue: https://github.com/huggingface/deep-rl-class 🤗"

|

||||

],

|

||||

"metadata": {

|

||||

"id": "YiyF4FX-04JB"

|

||||

}

|

||||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"source": [

|

||||

"That’s all for today. Congrats on finishing this tutorial!\n",

|

||||

"\n",

|

||||

"The best way to learn is to practice and try stuff. Why not try another environment? ML-Agents has 18 different environments, but you can also create your own? Check the documentation and have fun!\n",

|

||||

"\n",

|

||||

"See you on Unit 6 🔥,\n",

|

||||

"\n",

|

||||

"## Keep Learning, Stay awesome 🤗"

|

||||

],

|

||||

"metadata": {

|

||||

"id": "PI6dPWmh064H"

|

||||

}

|

||||

}

|

||||

],

|

||||

"metadata": {

|

||||

"accelerator": "GPU",

|

||||

"colab": {

|

||||

"provenance": [],

|

||||

"private_outputs": true,

|

||||

"include_colab_link": true

|

||||

},

|

||||

"gpuClass": "standard",

|

||||

"kernelspec": {

|

||||

"display_name": "Python 3",

|

||||

"name": "python3"

|

||||

},

|

||||

"language_info": {

|

||||

"name": "python"

|

||||

}

|

||||

},

|

||||

"nbformat": 4,

|

||||

"nbformat_minor": 0

|

||||

}

|

||||

@@ -365,8 +365,6 @@

|

||||

},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

"import gym\n",

|

||||

"\n",

|

||||

"# First, we create our environment called LunarLander-v2\n",

|

||||

"env = gym.make(\"LunarLander-v2\")\n",

|

||||

"\n",

|

||||

|

||||

3194

unit5/unit5.ipynb

File diff suppressed because one or more lines are too long

@@ -46,6 +46,10 @@

|

||||

title: Play with Huggy

|

||||

- local: unitbonus1/conclusion

|

||||

title: Conclusion

|

||||

- title: Live 1. How the course works, Q&A, and playing with Huggy

|

||||

sections:

|

||||

- local: live1/live1

|

||||

title: Live 1. How the course works, Q&A, and playing with Huggy 🐶

|

||||

- title: Unit 2. Introduction to Q-Learning

|

||||

sections:

|

||||

- local: unit2/introduction

|

||||

@@ -88,6 +92,8 @@

|

||||

title: The Deep Q-Network (DQN)

|

||||

- local: unit3/deep-q-algorithm

|

||||

title: The Deep Q Algorithm

|

||||

- local: unit3/glossary

|

||||

title: Glossary

|

||||

- local: unit3/hands-on

|

||||

title: Hands-on

|

||||

- local: unit3/quiz

|

||||

@@ -96,7 +102,7 @@

|

||||

title: Conclusion

|

||||

- local: unit3/additional-readings

|

||||

title: Additional Readings

|

||||

- title: Unit Bonus 2. Automatic Hyperparameter Tuning with Optuna

|

||||

- title: Bonus Unit 2. Automatic Hyperparameter Tuning with Optuna

|

||||

sections:

|

||||

- local: unitbonus2/introduction

|

||||

title: Introduction

|

||||

@@ -104,6 +110,44 @@

|

||||

title: Optuna

|

||||

- local: unitbonus2/hands-on

|

||||

title: Hands-on

|

||||

- title: Unit 4. Policy Gradient with PyTorch

|

||||

sections:

|

||||

- local: unit4/introduction

|

||||

title: Introduction

|

||||

- local: unit4/what-are-policy-based-methods

|

||||

title: What are the policy-based methods?

|

||||

- local: unit4/advantages-disadvantages

|

||||

title: The advantages and disadvantages of policy-gradient methods

|

||||

- local: unit4/policy-gradient

|

||||

title: Diving deeper into policy-gradient

|

||||

- local: unit4/pg-theorem

|

||||

title: (Optional) the Policy Gradient Theorem

|

||||

- local: unit4/hands-on

|

||||

title: Hands-on

|

||||

- local: unit4/quiz

|

||||

title: Quiz

|

||||

- local: unit4/conclusion

|

||||

title: Conclusion

|

||||

- local: unit4/additional-readings

|

||||

title: Additional Readings

|

||||

- title: Unit 5. Introduction to Unity ML-Agents

|

||||

sections:

|

||||

- local: unit5/introduction

|

||||

title: Introduction

|

||||

- local: unit5/how-mlagents-works

|

||||

title: How ML-Agents works?

|

||||

- local: unit5/snowball-target

|

||||

title: The SnowballTarget environment

|

||||

- local: unit5/pyramids

|

||||

title: The Pyramids environment

|

||||

- local: unit5/curiosity

|

||||

title: (Optional) What is curiosity in Deep Reinforcement Learning?

|

||||

- local: unit5/hands-on

|

||||

title: Hands-on

|

||||

- local: unit5/bonus

|

||||

title: Bonus. Learn to create your own environments with Unity and MLAgents

|

||||

- local: unit5/conclusion

|

||||

title: Conclusion

|

||||

- title: Unit 6. Actor Critic methods with Robotics environments

|

||||

sections:

|

||||

- local: unit6/introduction

|

||||

|

||||

@@ -1,6 +1,6 @@

|

||||

# Publishing Schedule [[publishing-schedule]]

|

||||

|

||||

We publish a **new unit every Monday** (except Monday, the 26th of December).

|

||||

We publish a **new unit every Tuesday**.

|

||||

|

||||

If you don't want to miss any of the updates, don't forget to:

|

||||

|

||||

|

||||

9

units/en/live1/live1.mdx

Normal file

@@ -0,0 +1,9 @@

|

||||

# Live 1: How the course works, Q&A, and playing with Huggy

|

||||

|

||||

In this first live stream, we explained how the course works (scope, units, challenges, and more) and answered your questions.

|

||||

|

||||

And finally, we saw some LunarLander agents you've trained and played with your Huggies 🐶

|

||||

|

||||

<Youtube id="JeJIswxyrsM" />

|

||||

|

||||

To know when the next live is scheduled, **check the Discord server**. We will also send **you an email**. If you can't participate, don't worry, we record the live sessions.

|

||||

@@ -9,7 +9,13 @@ Discord is a free chat platform. If you've used Slack, **it's quite similar**. T

|

||||

|

||||

Starting in Discord can be a bit intimidating, so let me take you through it.

|

||||

|

||||

When you sign-up to our Discord server, you'll need to specify which topics you're interested in by **clicking #role-assignment at the left**. Here, you can pick different categories. Make sure to **click "Reinforcement Learning"**! :fire:. You'll then get to **introduce yourself in the `#introduction-yourself` channel**.

|

||||

When you sign-up to our Discord server, you'll need to specify which topics you're interested in by **clicking #role-assignment at the left**.

|

||||

|

||||

<img src="https://huggingface.co/datasets/huggingface-deep-rl-course/course-images/resolve/main/en/unit0/discord1.jpg" alt="Discord"/>

|

||||

|

||||

In #role-assignment, you can pick different categories. Make sure to **click "Reinforcement Learning"**. You'll then get to **introduce yourself in the `#introduction-yourself` channel**.

|

||||

|

||||

<img src="https://huggingface.co/datasets/huggingface-deep-rl-course/course-images/resolve/main/en/unit0/discord2.jpg" alt="Discord"/>

|

||||

|

||||

## So which channels are interesting to me? [[channels]]

|

||||

|

||||

|

||||

@@ -23,7 +23,7 @@ In this course, you will:

|

||||

|

||||

- 📖 Study Deep Reinforcement Learning in **theory and practice.**

|

||||

- 🧑💻 Learn to **use famous Deep RL libraries** such as [Stable Baselines3](https://stable-baselines3.readthedocs.io/en/master/), [RL Baselines3 Zoo](https://github.com/DLR-RM/rl-baselines3-zoo), [Sample Factory](https://samplefactory.dev/) and [CleanRL](https://github.com/vwxyzjn/cleanrl).

|

||||

- 🤖 **Train agents in unique environments** such as [SnowballFight](https://huggingface.co/spaces/ThomasSimonini/SnowballFight), [Huggy the Doggo 🐶](https://huggingface.co/spaces/ThomasSimonini/Huggy), [MineRL (Minecraft ⛏️)](https://minerl.io/), [VizDoom (Doom)](https://vizdoom.cs.put.edu.pl/) and classical ones such as [Space Invaders](https://www.gymlibrary.dev/environments/atari/) and [PyBullet](https://pybullet.org/wordpress/).

|

||||

- 🤖 **Train agents in unique environments** such as [SnowballFight](https://huggingface.co/spaces/ThomasSimonini/SnowballFight), [Huggy the Doggo 🐶](https://singularite.itch.io/huggy), [VizDoom (Doom)](https://vizdoom.cs.put.edu.pl/) and classical ones such as [Space Invaders](https://www.gymlibrary.dev/environments/atari/), [PyBullet](https://pybullet.org/wordpress/) and more.

|

||||

- 💾 Share your **trained agents with one line of code to the Hub** and also download powerful agents from the community.

|

||||

- 🏆 Participate in challenges where you will **evaluate your agents against other teams. You'll also get to play against the agents you'll train.**

|

||||

|

||||

@@ -52,20 +52,21 @@ The course is composed of:

|

||||

|

||||

You can choose to follow this course either:

|

||||

|

||||

- *To get a certificate of completion*: you need to complete 80% of the assignments before the end of March 2023.

|

||||

- *To get a certificate of honors*: you need to complete 100% of the assignments before the end of March 2023.

|

||||

- *To get a certificate of completion*: you need to complete 80% of the assignments before the end of April 2023.

|

||||

- *To get a certificate of honors*: you need to complete 100% of the assignments before the end of April 2023.

|

||||

- *As a simple audit*: you can participate in all challenges and do assignments if you want, but you have no deadlines.

|

||||

|

||||

Both paths **are completely free**.

|

||||

Whatever path you choose, we advise you **to follow the recommended pace to enjoy the course and challenges with your fellow classmates.**

|

||||

You don't need to tell us which path you choose. At the end of March, when we verify the assignments **if you get more than 80% of the assignments done, you'll get a certificate.**

|

||||

|

||||

You don't need to tell us which path you choose. At the end of March, when we will verify the assignments **if you get more than 80% of the assignments done, you'll get a certificate.**

|

||||

|

||||

## The Certification Process [[certification-process]]

|

||||

|

||||

The certification process is **completely free**:

|

||||

|

||||

- *To get a certificate of completion*: you need to complete 80% of the assignments before the end of March 2023.

|

||||

- *To get a certificate of honors*: you need to complete 100% of the assignments before the end of March 2023.

|

||||

- *To get a certificate of completion*: you need to complete 80% of the assignments before the end of April 2023.

|

||||

- *To get a certificate of honors*: you need to complete 100% of the assignments before the end of April 2023.

|

||||

|

||||

<img src="https://huggingface.co/datasets/huggingface-deep-rl-course/course-images/resolve/main/en/unit0/certification.jpg" alt="Course certification" width="100%"/>

|

||||

|

||||

@@ -92,7 +93,7 @@ You need only 3 things:

|

||||

## What is the publishing schedule? [[publishing-schedule]]

|

||||

|

||||

|

||||

We publish **a new unit every Monday** (except Monday, the 26th of December).

|

||||

We publish **a new unit every Tuesday**.

|

||||

|

||||

<img src="https://huggingface.co/datasets/huggingface-deep-rl-course/course-images/resolve/main/en/communication/schedule1.png" alt="Schedule 1" width="100%"/>

|

||||

<img src="https://huggingface.co/datasets/huggingface-deep-rl-course/course-images/resolve/main/en/communication/schedule2.png" alt="Schedule 2" width="100%"/>

|

||||

@@ -128,7 +129,7 @@ In this new version of the course, you have two types of challenges:

|

||||

|

||||

<img src="https://huggingface.co/datasets/huggingface-deep-rl-course/course-images/resolve/main/en/unit0/challenges.jpg" alt="Challenges" width="100%"/>

|

||||

|

||||

These AI vs.AI challenges will be announced **later in December**.

|

||||

These AI vs.AI challenges will be announced **in January**.

|

||||

|

||||

|

||||

## I found a bug, or I want to improve the course [[contribute]]

|

||||

|

||||

@@ -18,9 +18,10 @@ You can now sign up for our Discord Server. This is the place where you **can ex

|

||||

When you join, remember to introduce yourself in #introduce-yourself and sign-up for reinforcement channels in #role-assignments.

|

||||

|

||||

We have multiple RL-related channels:

|

||||

- `rl-announcements`: where we give the last information about the course.

|

||||

- `rl-announcements`: where we give the latest information about the course.

|

||||

- `rl-discussions`: where you can exchange about RL and share information.

|

||||

- `rl-study-group`: where you can create and join study groups.

|

||||

- `rl-i-made-this`: where you can share your projects and models.

|

||||

|

||||

If this is your first time using Discord, we wrote a Discord 101 to get the best practices. Check the next section.

|

||||

|

||||

|

||||

@@ -12,5 +12,10 @@ In the next (bonus) unit, we’re going to reinforce what we just learned by **t

|

||||

|

||||

You will be able then to play with him 🤗.

|

||||

|

||||

<video src="https://huggingface.co/datasets/huggingface-deep-rl-course/course-images/resolve/main/en/unit1/huggy.mp4" alt="Huggy" type="video/mp4">

|

||||

</video>

|

||||

<img src="https://huggingface.co/datasets/huggingface-deep-rl-course/course-images/resolve/main/en/unit1/huggy.jpg" alt="Huggy"/>

|

||||

|

||||

Finally, we would love **to hear what you think of the course and how we can improve it**. If you have some feedback, then please 👉 [fill out this form](https://forms.gle/BzKXWzLAGZESGNaE9)

|

||||

|

||||

### Keep Learning, stay awesome 🤗

|

||||

|

||||

|

||||

|

||||

@@ -24,6 +24,8 @@ To find your result, go to the [leaderboard](https://huggingface.co/spaces/huggi

|

||||

|

||||

For more information about the certification process, check this section 👉 https://huggingface.co/deep-rl-course/en/unit0/introduction#certification-process

|

||||

|

||||

And you can check your progress here 👉 https://huggingface.co/spaces/ThomasSimonini/Check-my-progress-Deep-RL-Course

|

||||

|

||||

So let's get started! 🚀

|

||||

|

||||

**To start the hands-on click on Open In Colab button** 👇 :

|

||||

@@ -139,7 +141,7 @@ To make things easier, we created a script to install all these dependencies.

|

||||

```

|

||||

|

||||

```python

|

||||

!pip install -r https://huggingface.co/spaces/ThomasSimonini/temp-space-requirements/raw/main/requirements/requirements-unit1.txt

|

||||

!pip install -r https://raw.githubusercontent.com/huggingface/deep-rl-class/main/notebooks/unit1/requirements-unit1.txt

|

||||

```

|

||||

|

||||

During the notebook, we'll need to generate a replay video. To do so, with colab, **we need to have a virtual screen to be able to render the environment** (and thus record the frames).

|

||||

|

||||

@@ -22,7 +22,6 @@ It's essential **to master these elements** before diving into implementing Dee

|

||||

|

||||

After this unit, in a bonus unit, you'll be **able to train Huggy the Dog 🐶 to fetch the stick and play with him 🤗**.

|

||||

|

||||

<video src="https://huggingface.co/datasets/huggingface-deep-rl-course/course-images/resolve/main/en/unit1/huggy.mp4" alt="Huggy" type="video/mp4">

|

||||

</video>

|

||||

<img src="https://huggingface.co/datasets/huggingface-deep-rl-course/course-images/resolve/main/en/unit1/huggy.jpg" alt="Huggy"/>

|

||||

|

||||

So let's get started! 🚀

|

||||

|

||||

@@ -15,5 +15,7 @@ In the next chapter, we’re going to dive deeper by studying our first Deep Rei

|

||||

<img src="https://huggingface.co/datasets/huggingface-deep-rl-course/course-images/resolve/main/en/unit4/atari-envs.gif" alt="Atari environments"/>

|

||||

|

||||

|

||||

Finally, we would love **to hear what you think of the course and how we can improve it**. If you have some feedback, then please 👉 [fill out this form](https://forms.gle/BzKXWzLAGZESGNaE9)

|

||||

|

||||

### Keep Learning, stay awesome 🤗

|

||||

|

||||

|

||||

@@ -13,9 +13,24 @@ This is a community-created glossary. Contributions are welcomed!

|

||||

- **The state-value function.** For each state, the state-value function is the expected return if the agent starts in that state and follows the policy until the end.

|

||||

- **The action-value function.** In contrast to the state-value function, the action-value calculates for each state and action pair the expected return if the agent starts in that state and takes an action. Then it follows the policy forever after.
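
The two definitions above can be written compactly. This is a sketch in standard notation rather than a quotation from the course: \\(G_t\\) (the return from time step \\(t\\)), \\(R_{t+k+1}\\) (the reward received \\(k+1\\) steps later) and \\(\gamma\\) (the discount factor) are symbols assumed here for illustration.

```latex
% Return from time step t (assumed notation: discount factor \gamma in [0, 1])
G_t = \sum_{k=0}^{\infty} \gamma^{k} R_{t+k+1}

% State-value function: expected return when starting in s and following \pi
V_{\pi}(s) = \mathbb{E}_{\pi}\left[ G_t \mid S_t = s \right]

% Action-value function: expected return when starting in s, taking a, then following \pi
Q_{\pi}(s, a) = \mathbb{E}_{\pi}\left[ G_t \mid S_t = s, A_t = a \right]
```

The only difference between the two is the extra conditioning on the first action \\(a\\) in the action-value function.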

|

||||

|

||||

### Epsilon-greedy strategy:

|

||||

|

||||

- Common exploration strategy used in reinforcement learning that involves balancing exploration and exploitation.

|

||||

- Chooses the action with the highest expected reward with a probability of 1-epsilon.

|

||||

- Chooses a random action with a probability of epsilon.

|

||||

- Epsilon is typically decreased over time to shift focus towards exploitation.

|

||||

|

||||

### Greedy strategy:

|

||||

|

||||

- Involves always choosing the action that is expected to lead to the highest reward, based on the current knowledge of the environment. (only exploitation)

|

||||

- Always chooses the action with the highest expected reward.

|

||||

- Does not include any exploration.

|

||||

- Can be disadvantageous in environments with uncertainty or unknown optimal actions.
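
The two strategies above can be sketched in a few lines of Python. This is a minimal illustration, not the course's implementation: the function names, the list-based Q-values, and the exponential decay schedule with its default parameters are all assumptions made for the example (the glossary only says that epsilon is typically decreased over time).

```python
import math
import random


def greedy_action(q_values):
    """Greedy strategy: always pick the index of the highest Q-value (pure exploitation)."""
    return max(range(len(q_values)), key=lambda a: q_values[a])


def epsilon_greedy_action(q_values, epsilon):
    """Epsilon-greedy strategy for a single state.

    With probability epsilon, pick a random action (exploration);
    otherwise pick the greedy action (exploitation).
    """
    if random.random() < epsilon:
        return random.randrange(len(q_values))
    return greedy_action(q_values)


def decayed_epsilon(step, min_epsilon=0.05, max_epsilon=1.0, decay_rate=0.0005):
    """Hypothetical schedule: exponential decay from max_epsilon towards min_epsilon."""
    return min_epsilon + (max_epsilon - min_epsilon) * math.exp(-decay_rate * step)


if __name__ == "__main__":
    q_values = [0.1, 0.9, 0.3]  # made-up Q-values for one state with three actions
    for step in (0, 1_000, 10_000):
        eps = decayed_epsilon(step)
        action = epsilon_greedy_action(q_values, eps)
        print(f"step={step} epsilon={eps:.3f} action={action}")
```

Early in training epsilon is close to 1, so most actions are random. As epsilon shrinks, the choice converges to what `greedy_action` would return.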

|

||||

|

||||

|

||||

If you want to improve the course, you can [open a Pull Request.](https://github.com/huggingface/deep-rl-class/pulls)

|

||||

|

||||

This glossary was made possible thanks to:

|

||||

|

||||

- [Ramón Rueda](https://github.com/ramon-rd)

|

||||

- [Hasarindu Perera](https://github.com/hasarinduperera/)

|

||||

|

||||

@@ -22,6 +22,8 @@ To find your result, go to the [leaderboard](https://huggingface.co/spaces/huggi

|

||||

|

||||

For more information about the certification process, check this section 👉 https://huggingface.co/deep-rl-course/en/unit0/introduction#certification-process

|

||||

|

||||

And you can check your progress here 👉 https://huggingface.co/spaces/ThomasSimonini/Check-my-progress-Deep-RL-Course

|

||||

|

||||

|

||||

**To start the hands-on click on Open In Colab button** 👇 :

|

||||

|

||||

|

||||

@@ -62,7 +62,7 @@ For each state, the state-value function outputs the expected return if the agen

|

||||

|

||||

In the action-value function, for each state and action pair, the action-value function **outputs the expected return** if the agent starts in that state and takes action, and then follows the policy forever after.

|

||||

|

||||

The value of taking action an in state \\(s\\) under a policy \\(π\\) is:

|

||||

The value of taking action \\(a\\) in state \\(s\\) under a policy \\(π\\) is:

|

||||

|

||||

<img src="https://huggingface.co/datasets/huggingface-deep-rl-course/course-images/resolve/main/en/unit3/action-state-value-function-1.jpg" alt="Action State value function"/>

|

||||

<img src="https://huggingface.co/datasets/huggingface-deep-rl-course/course-images/resolve/main/en/unit3/action-state-value-function-2.jpg" alt="Action State value function"/>

|

||||

|

||||

@@ -11,4 +11,7 @@ Don't hesitate to train your agent in other environments (Pong, Seaquest, QBert,

|

||||

|

||||

In the next unit, **we're going to learn about Optuna**. One of the most critical tasks in Deep Reinforcement Learning is to find a good set of training hyperparameters. And Optuna is a library that helps you automate the search.

|

||||

|

||||

Finally, we would love **to hear what you think of the course and how we can improve it**. If you have some feedback, then please 👉 [fill out this form](https://forms.gle/BzKXWzLAGZESGNaE9)

|

||||

|

||||

### Keep Learning, stay awesome 🤗

|

||||

|

||||

|

||||

@@ -30,7 +30,7 @@ No, because one frame is not enough to have a sense of motion! But what if I add

|

||||

<img src="https://huggingface.co/datasets/huggingface-deep-rl-course/course-images/resolve/main/en/unit4/temporal-limitation-2.jpg" alt="Temporal Limitation"/>

|

||||

That’s why, to capture temporal information, we stack four frames together.

|

||||

|

||||

Then, the stacked frames are processed by three convolutional layers. These layers **allow us to capture and exploit spatial relationships in images**. But also, because frames are stacked together, **you can exploit some spatial properties across those frames**.

|

||||

Then, the stacked frames are processed by three convolutional layers. These layers **allow us to capture and exploit spatial relationships in images**. But also, because frames are stacked together, **you can exploit some temporal properties across those frames**.

|

||||

|

||||

If you don't know what convolutional layers are, don't worry. You can check the [Lesson 4 of this free Deep Reinforcement Learning Course by Udacity](https://www.udacity.com/course/deep-learning-pytorch--ud188)

|

||||

|

||||

|

||||

@@ -13,12 +13,12 @@ Internally, our Q-function has **a Q-table, a table where each cell corresponds

|

||||

The problem is that Q-Learning is a *tabular method*. It only works when the state and action spaces **are small enough for value functions to be represented as arrays and tables**. In other words, it is **not scalable**.

|

||||

Q-Learning worked well with small state space environments like:

|

||||

|

||||

- FrozenLake, we had 14 states.

|

||||

- FrozenLake, we had 16 states.

|

||||

- Taxi-v3, we had 500 states.

|

||||

|

||||

But think of what we're going to do today: we will train an agent to learn to play Space Invaders, a more complex game, using the frames as input.

|

||||

|

||||

As **[Nikita Melkozerov mentioned](https://twitter.com/meln1k), Atari environments** have an observation space with a shape of (210, 160, 3)*, containing values ranging from 0 to 255 so that gives us \\(256^{210x160x3} = 256^{100800}\\) (for comparison, we have approximately \\(10^{80}\\) atoms in the observable universe).

|

||||

As **[Nikita Melkozerov mentioned](https://twitter.com/meln1k), Atari environments** have an observation space with a shape of (210, 160, 3)*, containing values ranging from 0 to 255 so that gives us \\(256^{210 \times 160 \times 3} = 256^{100800}\\) (for comparison, we have approximately \\(10^{80}\\) atoms in the observable universe).

|

||||

|

||||

* A single frame in Atari is composed of an image of 210x160 pixels. Given the images are in color (RGB), there are 3 channels. This is why the shape is (210, 160, 3). For each pixel, the value can go from 0 to 255.
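
To get a feel for how large \\(256^{100800}\\) is, the short sketch below counts its decimal digits using logarithms instead of building the number itself. The variable names are chosen for illustration only. The frame shape and value range are the ones stated above.

```python
import math

# Shape of one Atari frame as stated in the text: 210 x 160 pixels, 3 color channels.
height, width, channels = 210, 160, 3
values_per_entry = 256  # each entry can take a value from 0 to 255

# Number of entries in a single observation.
entries = height * width * channels

# Number of decimal digits of 256**entries, computed via log10 so that
# the enormous integer never has to be converted to a string.
digits = math.floor(entries * math.log10(values_per_entry)) + 1

print(f"entries per observation: {entries}")
print(f"256^{entries} has {digits} decimal digits (10^80 has only 81)")
```

A Q-table would need one row per possible observation, which is why a tabular method is out of reach here.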

|

||||

|

||||

|

||||

39

units/en/unit3/glossary.mdx

Normal file

@@ -0,0 +1,39 @@

|

||||

# Glossary

|

||||

|

||||

This is a community-created glossary. Contributions are welcomed!

|

||||

|

||||

- **Tabular Method:** type of problem in which the state and action spaces are small enough for value functions to be represented as arrays and tables.

|

||||